Environment:

Cloud center

- CentOS 7.6, 64-bit

- Memory: 80 GB

- GPU: NVIDIA Tesla T4

Edge nodes

| Hostname | Role | IP | Services |
|---|---|---|---|
| VM-0-9-centos | Cloud | 172.21.0.9 (private), 49.232.76.138 (public) | kubernetes, docker, cloudcore |
| berbai02 | Edge | 192.168.227.4 | docker, edgecore |
| demo | Edge | 10.0.12.17 | docker, edgecore |

Driver installation

Install dependencies

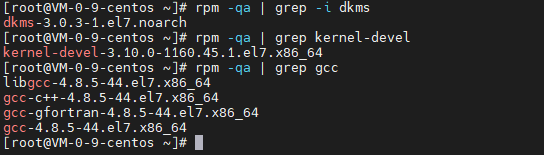

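# Install dkms and the build dependencies

sudo yum install -y dkms gcc kernel-devel yum-utils

# Check whether dkms is installed

rpm -qa | grep -i dkms

# Check whether kernel-devel is installed

rpm -qa | grep kernel-devel

# Check whether GCC is installed

rpm -qa | grep gcc

# Check whether yum-utils is installed

rpm -qa | grep yum-utils

If the output matches Figure 1, the packages above are installed.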

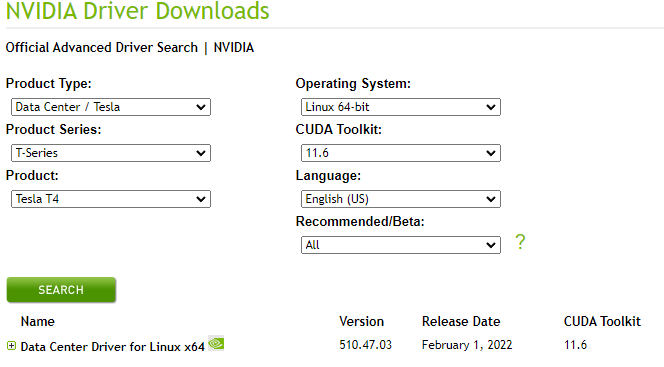

Download the driver

Visit www.nvidia.com/Download/Fi… and select the matching driver version. The download page is shown in Figure 2.

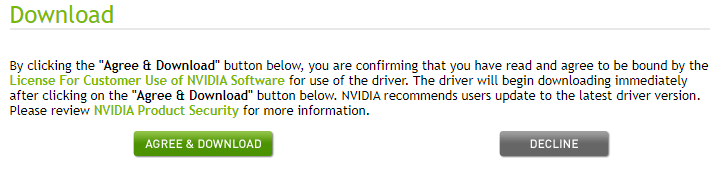

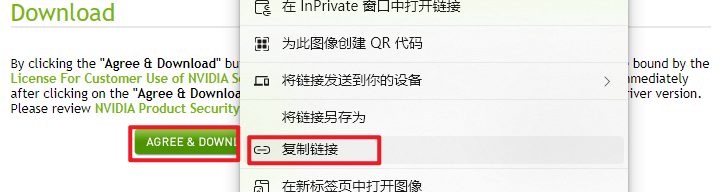

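Copy the driver download link

The steps are shown in Figures 3-1 and 3-2.

Fetch the installer

Download the installer on the server with wget. Alternatively, download it on your local machine and upload it to the server.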

wget https://us.download.nvidia.com/tesla/510.47.03/NVIDIA-Linux-x86_64-510.47.03.run

Install the driver

# Add execute permission

chmod +x NVIDIA-Linux-x86_64-510.47.03.run

# Install the driver

sudo sh NVIDIA-Linux-x86_64-510.47.03.run

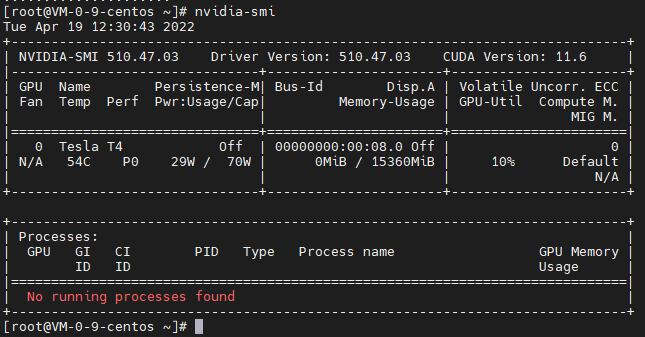

Verify the driver installation

nvidia-smi
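The installer file name carries the driver version, so the version that nvidia-smi reports after installation can be checked against it. The following is a minimal sketch; the helper names `driver_version_from_installer` and `driver_matches` are hypothetical, and it assumes the file follows the `NVIDIA-Linux-x86_64-<version>.run` naming used above.

```shell
# Extract the version embedded in an installer file name,
# e.g. NVIDIA-Linux-x86_64-510.47.03.run -> 510.47.03
driver_version_from_installer() {
  local name
  name=$(basename "$1")
  name=${name#NVIDIA-Linux-x86_64-}
  printf '%s\n' "${name%.run}"
}

# Succeed when the expected version equals the reported one.
# The second argument is optional; when it is omitted, nvidia-smi is queried.
driver_matches() {
  local expected=$1
  local actual=${2:-$(nvidia-smi --query-gpu=driver_version --format=csv,noheader | head -n 1)}
  [ "$expected" = "$actual" ]
}
```

For example, `driver_matches "$(driver_version_from_installer NVIDIA-Linux-x86_64-510.47.03.run)" && echo "driver OK"` prints the message only if the loaded driver is the one that was downloaded.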

KubeEdge installation

Pre-installation settings

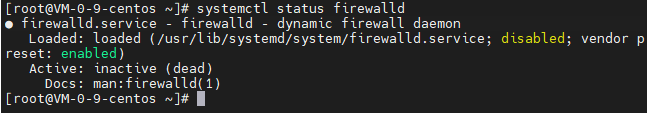

- Disable the firewall

# Stop the firewall

systemctl stop firewalld

# Disable the firewall at boot

systemctl disable firewalld

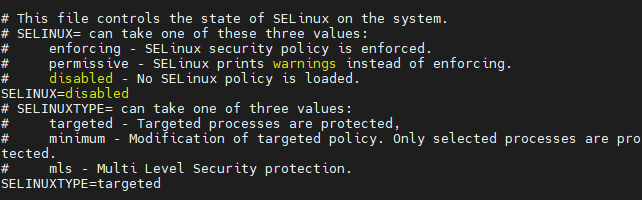

- Disable SELinux

Edit /etc/selinux/config. The following command makes the change:

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

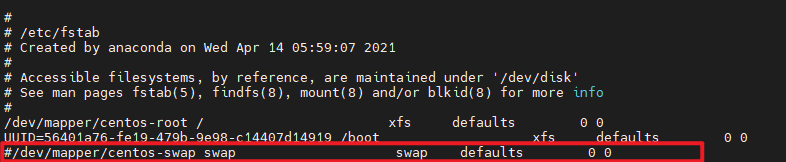

- Disable swap

Kubernetes requires swap to be off. Comment out the swap entry in /etc/fstab:

sed -ri 's/.*swap.*/#&/' /etc/fstab

- Reboot so the changes take effect

reboot
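After the reboot it can be worth confirming that the SELinux and swap edits actually landed in the files. This is a rough sketch with two hypothetical helpers that only read the files they are given, so they can also be pointed at copies for testing.

```shell
# Succeed if the given SELinux config file sets SELINUX=disabled.
selinux_disabled_in() {
  grep -Eq '^SELINUX=disabled[[:space:]]*$' "$1"
}

# Succeed if no uncommented line in the given fstab file mentions swap.
swap_disabled_in() {
  awk '!/^[[:space:]]*#/ && /swap/ { found = 1 } END { exit found ? 1 : 0 }' "$1"
}
```

On the host this would be called as `selinux_disabled_in /etc/selinux/config && swap_disabled_in /etc/fstab && echo "ready for kubeadm"`.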

Install Docker

# Add the Docker CE repository (Aliyun mirror)

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Refresh the yum package index

yum makecache fast

# Install Docker CE

yum install docker-ce docker-ce-cli containerd.io

# Start Docker

systemctl start docker

# Enable Docker at boot

systemctl enable docker

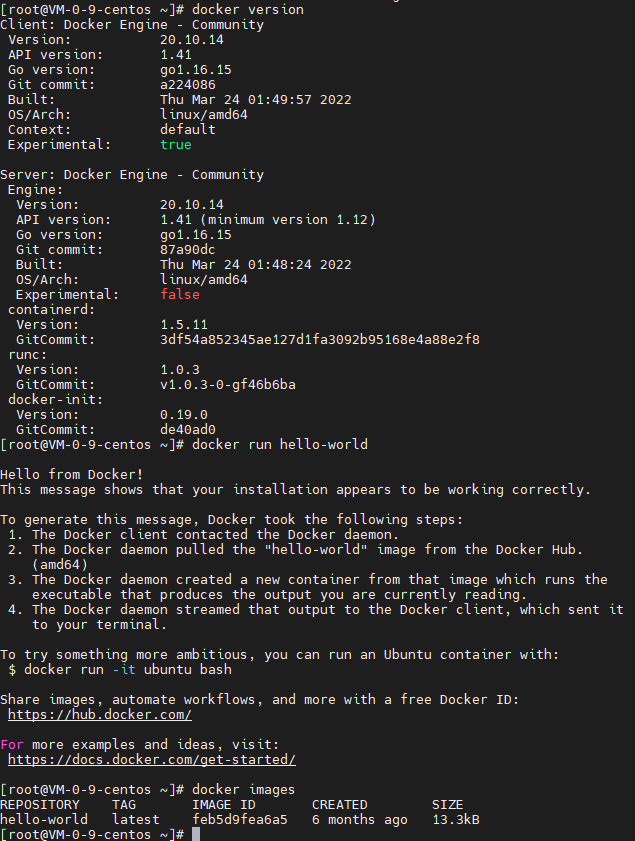

Test the Docker installation

docker version

docker run hello-world

docker images

Deploy Kubernetes

Configure the yum repository

Point yum at the Aliyun mirror:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubelet, kubeadm, and kubectl

yum makecache

yum install -y kubelet kubeadm kubectl ipvsadm

# Or install a specific version

yum install -y kubelet-1.23.5-0.x86_64 kubeadm-1.23.5-0.x86_64 kubectl-1.23.5-0.x86_64

Configure kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

EOF

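# Load br_netfilter first so the net.bridge.* keys exist, then apply all sysctl settings

modprobe br_netfilter

sysctl --system

sysctl -p /etc/sysctl.d/k8s.conf

# Load the IPVS kernel modules

# They must be reloaded after a reboot (add them to /etc/rc.local to load them automatically at boot)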

modprobe ip_vs

modprobe ip_vs_rr

modprobe ip_vs_wrr

modprobe ip_vs_sh

modprobe nf_conntrack_ipv4

# Verify the modules are loaded

lsmod | grep ip_vs
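On a systemd host such as CentOS 7, an alternative to /etc/rc.local is a file under /etc/modules-load.d/, which systemd reads at boot to load the listed modules. The sketch below writes such a file; the function name and the file name `ipvs.conf` are assumptions, and the module list simply mirrors the modprobe calls above.

```shell
# Write the module names, one per line, to a modules-load.d style file.
# The target path is a parameter so the function can be tried on a scratch file first.
write_ipvs_modules_conf() {
  local target=${1:-/etc/modules-load.d/ipvs.conf}
  printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4 br_netfilter > "$target"
}
```

Calling `write_ipvs_modules_conf` as root with no argument writes the default path; the modules are then loaded on the next boot without further action.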

Pull images

List the component images required by this kubeadm version. Every image in the output needs to be downloaded later.

[root@VM-0-9-centos ~]# kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.23.5

k8s.gcr.io/kube-controller-manager:v1.23.5

k8s.gcr.io/kube-scheduler:v1.23.5

k8s.gcr.io/kube-proxy:v1.23.5

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

Pull the images above. Machines in mainland China most likely cannot pull from k8s.gcr.io; see "Image download failure" below for a workaround.

kubeadm config images pull

After pulling, list the images:

[root@VM-0-9-centos ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-apiserver v1.23.5 3fc1d62d6587 4 weeks ago 135MB

k8s.gcr.io/kube-proxy v1.23.5 3c53fa8541f9 4 weeks ago 112MB

k8s.gcr.io/kube-controller-manager v1.23.5 b0c9e5e4dbb1 4 weeks ago 125MB

k8s.gcr.io/kube-scheduler v1.23.5 884d49d6d8c9 4 weeks ago 53.5MB

k8s.gcr.io/etcd 3.5.1-0 25f8c7f3da61 5 months ago 293MB

k8s.gcr.io/coredns 1.8.6 a4ca41631cc7 6 months ago 46.8MB

hello-world latest feb5d9fea6a5 6 months ago 13.3kB

k8s.gcr.io/pause 3.6 6270bb605e12 7 months ago 683kB

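Configure the kubelet (optional)

Configuring the kubelet on the cloud center is mainly a way to verify that the K8s cluster is deployed correctly.

# Get Docker's cgroup driver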

DOCKER_CGROUPS=$(docker info --format '{{.CgroupDriver}}')

echo $DOCKER_CGROUPS

# Configure the kubelet's cgroup driver

cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS --pod-infra-container-image=k8s.gcr.io/pause:3.6"

EOF

# Start the kubelet

systemctl daemon-reload

systemctl enable kubelet && systemctl start kubelet

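Note: systemctl status kubelet reports an error at this point. It resolves itself once kubeadm init has generated the CA certificates, so it can be ignored for now.

Initialize the cluster

Initialize the cluster with kubeadm init. When it finishes, record the last part of the output: the command that joins a node to the cluster. If you lose it, run kubeadm token create --print-join-command to print a fresh join command.

On a cloud server you can omit --apiserver-advertise-address; see "Initial timeout of 40s passed" below for the reason.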

kubeadm init --kubernetes-version=v1.23.5 \

--pod-network-cidr=10.244.0.0/16 \

--apiserver-advertise-address=172.21.0.9 \

--ignore-preflight-errors=Swap

[root@VM-0-9-centos ~]# kubeadm init --kubernetes-version=v1.23.5 \

> --pod-network-cidr=10.244.0.0/16 \

> --ignore-preflight-errors=Swap

[init] Using Kubernetes version: v1.23.5

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.21.0.9:6443 --token 1tyany.dxr5ymxu2g3j0dzl \

--discovery-token-ca-cert-hash sha256:a63bd724813ebe0c4aabadb8cc8c747b6c84c474b80d4104497542ec265ec36a

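| Parameter | Description |
|---|---|
| --apiserver-advertise-address | IP address the API server advertises; master and workers must be able to reach each other through it |
| --kubernetes-version | Kubernetes version to install |
| --token-ttl=0 | The token never expires |
| --apiserver-cert-extra-sans | Extra Subject Alternative Names (IPs or DNS names) added to the API server certificate, so that nodes connecting through those addresses pass certificate verification |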
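Since --apiserver-advertise-address is sometimes wanted and sometimes not, a small wrapper can make the choice explicit. This is only a sketch: `build_kubeadm_init` is a hypothetical helper that prints the command instead of running it, and it covers just the flags used in this article.

```shell
# Print a kubeadm init command line.
# Arguments: <kubernetes version> <pod network CIDR> [advertise address]
build_kubeadm_init() {
  local version=$1 pod_cidr=$2 advertise=${3:-}
  local cmd="kubeadm init --kubernetes-version=${version} --pod-network-cidr=${pod_cidr}"
  if [ -n "$advertise" ]; then
    cmd="$cmd --apiserver-advertise-address=${advertise}"
  fi
  printf '%s\n' "$cmd"
}
```

`build_kubeadm_init v1.23.5 10.244.0.0/16` prints the form without the advertise address; passing `172.21.0.9` as a third argument adds the flag with the private IP.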

Note:

- If you hit the error /proc/sys/net/ipv4/ip_forward contents are not set to 1, see "ip_forward is 0 error" below.

Finish configuring kubectl

rm -rf $HOME/.kube

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

At this point the master node is installed.

List the nodes

[root@VM-0-9-centos ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

vm-0-9-centos NotReady control-plane,master 21m v1.23.5

Configure the flannel network plugin (optional)

flannel on GitHub: github.com/flannel-io/…

For Kubernetes v1.17 and later, install flannel with:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Check the result

[root@VM-0-9-centos ~]# kubectl get node -owide

NAME STATUS ROLES AGE VERSION

vm-0-9-centos NotReady control-plane,master 31m v1.23.5

[root@VM-0-9-centos ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-64897985d-9hfz2 0/1 Pending 0 31m

coredns-64897985d-9hr2z 0/1 Pending 0 31m

etcd-vm-0-9-centos 1/1 Running 0 31m

kube-apiserver-vm-0-9-centos 1/1 Running 0 31m

kube-controller-manager-vm-0-9-centos 1/1 Running 3 31m

kube-flannel-ds-t4k2w 0/1 Init:ImagePullBackOff 0 50s

kube-proxy-c6mck 1/1 Running 0 31m

kube-scheduler-vm-0-9-centos 1/1 Running 4 31m

[root@VM-0-9-centos ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 32m

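Note: the node only shows Ready a few minutes after the network plugin has been installed and configured.

Configure iptables IP forwarding

Because --apiserver-advertise-address was dropped at initialization, the join command returned by kubeadm uses the private IP. The cloud servers' private networks cannot reach each other, so iptables is used to rewrite addresses: on the master, traffic to its public IP is redirected to its private IP; on each node, traffic to the master's private IP is redirected to the master's public IP, because the join command targets the private IP.

# On the master node

sudo iptables -t nat -A OUTPUT -d <master public IP> -j DNAT --to-destination <master private IP>

# On each node

sudo iptables -t nat -A OUTPUT -d <master private IP> -j DNAT --to-destination <master public IP>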

# cloud

sudo iptables -t nat -A OUTPUT -d 49.232.76.138 -j DNAT --to-destination 172.21.0.9

# edge

sudo iptables -t nat -A OUTPUT -d 172.21.0.9 -j DNAT --to-destination 49.232.76.138
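The two rules are mirror images of each other, which makes them easy to swap by accident. The sketch below generates the right rule for each side from the same pair of addresses; `dnat_rule` is a hypothetical helper that only prints the command, so the output can be reviewed before running it with sudo.

```shell
# Print the DNAT rule for one side of the link.
# role "cloud": rewrite public -> private (run on the master)
# role "edge":  rewrite private -> public (run on each node)
dnat_rule() {
  local role=$1 public_ip=$2 private_ip=$3
  case "$role" in
    cloud) printf 'iptables -t nat -A OUTPUT -d %s -j DNAT --to-destination %s\n' "$public_ip" "$private_ip" ;;
    edge)  printf 'iptables -t nat -A OUTPUT -d %s -j DNAT --to-destination %s\n' "$private_ip" "$public_ip" ;;
    *)     echo "unknown role: $role" >&2; return 1 ;;
  esac
}
```

For instance, `dnat_rule edge 49.232.76.138 172.21.0.9` prints the rule for the edge nodes. Rules added with -A live only in the running kernel, so they need to be reapplied after a reboot.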

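KubeEdge installation

Cloud configuration

The cloud side builds the KubeEdge components and runs cloudcore.

Install prerequisites

- Download Go

wget https://golang.google.cn/dl/go1.18.1.linux-amd64.tar.gz

tar -zxvf go1.18.1.linux-amd64.tar.gz -C /usr/local

- Configure the Go environment

Append the Go environment variables to the end of /etc/profile.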

cat >> /etc/profile << 'EOF'

# golang env

export GOROOT=/usr/local/go

export GOPATH=/data/gopath

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

EOF

mkdir -p /data/gopath && cd /data/gopath

mkdir -p src pkg bin

# Apply the configuration

source /etc/profile
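One detail of the heredoc is easy to miss: with an unquoted delimiter the shell expands $PATH, $GOROOT and $GOPATH while writing the file, and since GOROOT and GOPATH are not set yet, the PATH line ends up wrong. Quoting the delimiter keeps the variables literal. A minimal sketch as a reusable function (the name `append_go_env` is hypothetical; the paths are the ones used in this article):

```shell
# Append the Go environment block to the given profile file.
# The quoted delimiter ('EOF') prevents variable expansion at write time.
append_go_env() {
  local profile=$1
  cat >> "$profile" << 'EOF'
# golang env
export GOROOT=/usr/local/go
export GOPATH=/data/gopath
export PATH=$PATH:$GOROOT/bin:$GOPATH/bin
EOF
}
```

After `append_go_env /etc/profile` and `source /etc/profile`, running `go version` should find the binary under /usr/local/go/bin.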

- Download the KubeEdge source

# Install Git

yum install git

# Clone the source

git clone https://github.com/kubeedge/kubeedge $GOPATH/src/github.com/kubeedge/kubeedge

Deploy cloudcore

- Build keadm

cd $GOPATH/src/github.com/kubeedge/kubeedge

make all WHAT=keadm

Note: the compiled binaries are placed in ./_output/local/bin.

# Enter ./_output/local/bin

cd ./_output/local/bin

- Create the cloud node

[root@VM-0-9-centos kubeedge]# ./keadm init --advertise-address="172.21.0.9"

Kubernetes version verification passed, KubeEdge installation will start...

...

KubeEdge cloudcore is running, For logs visit: /var/log/kubeedge/cloudcore.log

CloudCore started

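If the network blocks the download while creating the cloud node, a common workaround is to modify hosts; see also "Cannot access GitHub" below.

# Check cloudcore status

systemctl status cloudcore.service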

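Edge configuration

The edge side can also be set up with keadm. When both sides share the same CPU architecture, the binaries built on the cloud side can be copied to the edge with scp.

Install prerequisites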

root@raspberrypi:~# uname -a

Linux raspberrypi 5.10.17-v7l+ #1414 SMP Fri Apr 30 13:20:47 BST 2021 armv7l GNU/Linux

The Raspberry Pi needs the ARMv6 build of Go.

wget https://golang.google.cn/dl/go1.18.1.linux-armv6l.tar.gz

tar -zxvf go1.18.1.linux-armv6l.tar.gz -C /usr/local

Configure environment variables

cat >> /home/pi/.bashrc << 'EOF'

# golang env

export GOROOT=/usr/local/go

export GOPATH=/data/gopath

export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

EOF

# Apply the configuration

source /home/pi/.bashrc

Download the KubeEdge source

# Install Git

apt-get install git

# Clone the source

git clone https://github.com/kubeedge/kubeedge $GOPATH/src/github.com/kubeedge/kubeedge

Get a token on the cloud side

The token is used when joining edge nodes.

[root@VM-0-9-centos bin]# ./keadm gettoken

b6107885645ec34725a76e7cf3fd9d8bf130dfa5e6f37d4c51b6bc1bee49cd48.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2NTA2MjE0MDR9.ZMtQnF_oWYG4pijYO-SaxVtUYhHQSuCIauf5iWFkNMY

Deploy edgecore

Build keadm

cd $GOPATH/src/github.com/kubeedge/kubeedge

make all WHAT=keadm

# Enter ./_output/local/bin

cd ./_output/local/bin

keadm join installs edgecore and mqtt. It also provides a flag (--kubeedge-version) for choosing a specific version.

./keadm join --cloudcore-ipport=172.21.0.9:10000 --token=9c71cbbb512afd65da8813fba9a48d19ab8602aa27555af7a07cd44b508858ae.eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJleHAiOjE2NTA3MDgwNjN9.-S0YBgSSAz6loQsi0XaTgFeWyHsHDm8E2SAefluVTJA

Host has /usr/sbin/mosquitto already installed and running. Hence skipping the installation steps !!!

...

KubeEdge edgecore is running, For logs visit: journalctl -u edgecore.service -xe

Note:

- --cloudcore-ipport is a mandatory flag.

- --token automatically applies for a certificate for the edge node.

- The cloud and edge sides should run the same KubeEdge version.
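Because the token is long and the host:port pair is mandatory, assembling the join command in a helper avoids copy-and-paste mistakes. This is a sketch only; `build_keadm_join` is a hypothetical function that validates its inputs loosely and prints the command rather than running it.

```shell
# Print a keadm join command after a basic sanity check.
# Arguments: <cloudcore host:port> <token>
build_keadm_join() {
  local ipport=${1:-} token=${2:-}
  if ! printf '%s' "$ipport" | grep -Eq '^[^:]+:[0-9]+$'; then
    echo "expected host:port, got: $ipport" >&2
    return 1
  fi
  if [ -z "$token" ]; then
    echo "token is empty" >&2
    return 1
  fi
  printf './keadm join --cloudcore-ipport=%s --token=%s\n' "$ipport" "$token"
}
```

On the cloud side, the token can be captured with `TOKEN=$(./keadm gettoken)` and passed to the edge node, where `build_keadm_join 172.21.0.9:10000 "$TOKEN"` prints the command to run.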

Verification

Once edgecore starts on the edge, it communicates with cloudcore on the cloud side, and K8s then manages the edge as a node.

[root@VM-0-9-centos bin]# kubectl get node

NAME STATUS ROLES AGE VERSION

berbai02 Ready agent,edge 32m v1.22.6-kubeedge-v1.10.0

demo Ready agent,edge 34m v1.22.6-kubeedge-v1.10.0

vm-0-9-centos Ready control-plane,master 27h v1.23.5

[root@VM-0-9-centos bin]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-64897985d-9hfz2 1/1 Running 2 (69m ago) 27h

coredns-64897985d-9hr2z 1/1 Running 2 (69m ago) 27h

etcd-vm-0-9-centos 1/1 Running 2 (69m ago) 27h

kube-apiserver-vm-0-9-centos 1/1 Running 2 (69m ago) 27h

kube-controller-manager-vm-0-9-centos 1/1 Running 13 (69m ago) 27h

kube-flannel-ds-hsss5 0/1 Error 10 (2m23s ago) 34m

kube-flannel-ds-qp6xj 0/1 Error 9 (4m46s ago) 36m

kube-flannel-ds-t4k2w 1/1 Running 2 (69m ago) 27h

kube-proxy-6qcfb 0/1 ContainerCreating 0 34m

kube-proxy-c6mck 1/1 Running 2 (69m ago) 27h

kube-proxy-hvpz9 1/1 Running 0 36m

kube-scheduler-vm-0-9-centos 1/1 Running 14 (69m ago) 27h

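Note: if the flannel network plugin is installed in the K8s cluster, the flannel pods scheduled onto edge nodes fail to start because edge nodes do not run kubelet. This does not affect KubeEdge itself and can be ignored for now.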
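If the failing pods are a nuisance, one option (not part of the original steps) is to keep the DaemonSet off edge nodes with a node affinity rule. The sketch below assumes the edge nodes carry the node-role.kubernetes.io/edge label, which is what the "edge" entry in the ROLES column reflects; `edge_exclusion_patch` is a hypothetical helper that just prints the patch body.

```shell
# Print a patch that restricts a DaemonSet's pods to nodes
# that do NOT have the node-role.kubernetes.io/edge label.
edge_exclusion_patch() {
  cat << 'EOF'
{"spec":{"template":{"spec":{"affinity":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}}}}
EOF
}
```

It could be applied with `kubectl -n kube-system patch daemonset kube-flannel-ds -p "$(edge_exclusion_patch)"`; the same patch works for kube-proxy, whose pod on one edge node is stuck in ContainerCreating in the output above.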

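KubeEdge examples

Example 1: edge counter

The KubeEdge Counter Demo is a pseudo device, so you can run this demo without any extra physical hardware. The counter runs on the edge side; from the cloud side you can control it and read its value through a web page.

Download the source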

git clone https://github.com/kubeedge/examples.git $GOPATH/src/github.com/kubeedge/examples

Create the device model

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

kubectl create -f kubeedge-counter-model.yaml

Create the device

Adjust matchExpressions to your environment:

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

# Replace "demo" with the name of your edge node

sed -i "s#edge-node#demo#" kubeedge-counter-instance.yaml

kubectl create -f kubeedge-counter-instance.yaml

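Deploy the cloud application

Modify the code: the cloud application web-controller-app controls the pi-counter-app on the edge. It listens on port 80 by default; here it is changed to 8989.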

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/web-controller-app

sed -i "s#80#8989#" main.go

Build the image:

Note: if Go modules (go mod) are enabled, turn them off before building the image.

make

make docker

Deploy web-controller-app

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

kubectl create -f kubeedge-web-controller-app.yaml

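Deploy the edge application

The edge application pi-counter-app is controlled by the cloud application. It mainly talks to the mqtt server and implements a simple counter.

Build the image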

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/counter-mapper

make

make docker

Deploy the Pi Counter App

cd $GOPATH/src/github.com/kubeedge/examples/kubeedge-counter-demo/crds

kubectl create -f kubeedge-pi-counter-app.yaml

Note: to keep the pod from getting stuck in ContainerCreating, ship the image to the edge directly with docker save, scp, and docker load (run docker load on the edge node).

docker save -o kubeedge-pi-counter.tar kubeedge/kubeedge-pi-counter:v1.0.0

scp kubeedge-pi-counter.tar root@10.0.12.17:/root

docker load -i kubeedge-pi-counter.tar
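The three steps run on two different machines, which the bare commands do not show. As a rough sketch, the hypothetical helper below prints the full sequence for a given image, tarball name, and SSH target, with the load step wrapped in ssh so everything can be driven from the cloud host; it assumes SSH access to the edge node as the given user.

```shell
# Print the steps for shipping an image to an edge host without a registry.
# Arguments: <image:tag> <tarball file name> <user@host>
ship_image_steps() {
  local image=$1 tarball=$2 target=$3
  printf 'docker save -o %s %s\n' "$tarball" "$image"
  printf 'scp %s %s:/root\n' "$tarball" "$target"
  printf 'ssh %s docker load -i /root/%s\n' "$target" "$tarball"
}
```

For this demo the call would be `ship_image_steps kubeedge/kubeedge-pi-counter:v1.0.0 kubeedge-pi-counter.tar root@10.0.12.17`; piping the output to `sh` executes the steps once they look right.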

Result

Both the cloud and edge parts of the KubeEdge demo are now deployed:

[root@VM-0-9-centos crds]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kubeedge-counter-app-55848d84d9-545kh 1/1 Running 0 27m 172.21.0.9 vm-0-9-centos <none> <none>

kubeedge-pi-counter-54b7997965-t2bxr 1/1 Running 0 31m 10.0.12.17 demo <none> <none>

Now test the demo:

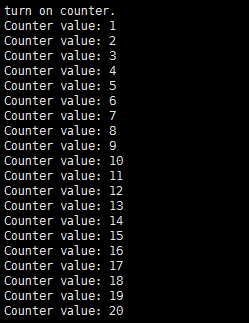

Run the ON command

Select ON on the web page and click Execute. On the edge node, check the result with:

# Replace counter-container-id with the ID of the kubeedge-pi-counter container

[root@demo kube]# docker logs -f counter-container-id

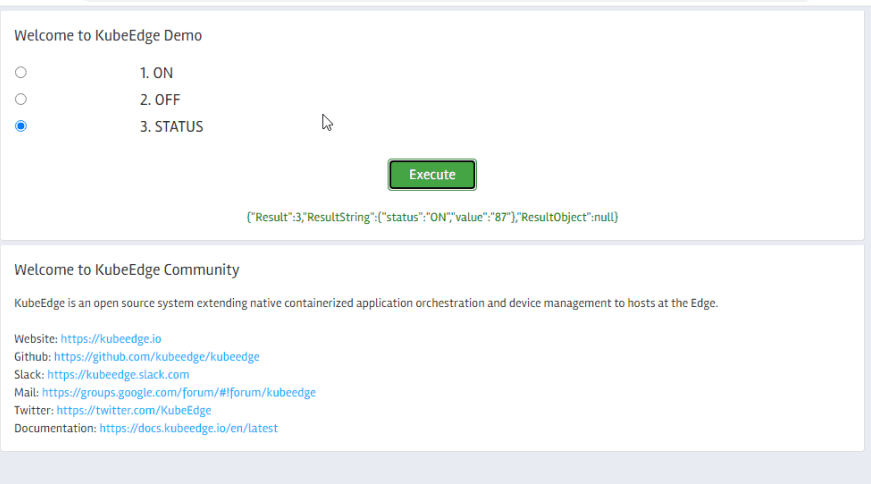

Check the counter STATUS

Select STATUS on the web page and click Execute; the page returns the counter's current status.

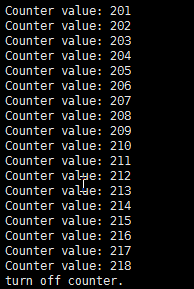

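Run the OFF command

Select OFF on the web page and click Execute. On the edge node, check the result with the same docker logs command as above.

Errors and fixes

Image download failure

If the images cannot be downloaded, try the following:

# Write the script

vim pull_k8s_images.sh

Contents:

#!/bin/bash

set -o errexit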

set -o nounset

set -o pipefail

KUBE_VERSION=v1.23.5

KUBE_PAUSE_VERSION=3.6

ETCD_VERSION=3.5.1-0

DNS_VERSION=v1.8.6

GCR_URL=k8s.gcr.io

# mirrorgooglecontainers

DOCKERHUB_URL=registry.aliyuncs.com/google_containers

images=(

kube-proxy:${KUBE_VERSION}

kube-scheduler:${KUBE_VERSION}

kube-controller-manager:${KUBE_VERSION}

kube-apiserver:${KUBE_VERSION}

pause:${KUBE_PAUSE_VERSION}

etcd:${ETCD_VERSION}

coredns:${DNS_VERSION}

)

for imageName in ${images[@]} ; do

docker pull $DOCKERHUB_URL/$imageName

if [ $imageName = coredns:${DNS_VERSION} ]

then docker tag $DOCKERHUB_URL/$imageName $GCR_URL/coredns/$imageName

else docker tag $DOCKERHUB_URL/$imageName $GCR_URL/$imageName

fi

docker rmi $DOCKERHUB_URL/$imageName

done

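Make the script executable and run it

# Grant execute permission

chmod +x ./pull_k8s_images.sh

# Run it

./pull_k8s_images.sh

ip_forward is 0 error

Set the content of /proc/sys/net/ipv4/ip_forward to 1.

echo "1" > /proc/sys/net/ipv4/ip_forward

net.ipv4.ip_forward controls packet forwarding, which Linux disables by default for security reasons. Forwarding means that when a host has more than one network interface and one of them receives a packet, the host sends the packet out through another interface according to the destination IP address, and that interface passes it on according to the routing table. This is normally a router's job. To give a Linux system routing capability, set the kernel parameter net.ipv4.ip_forward: 0 means IP forwarding is disabled, 1 means it is enabled.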
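Writing to /proc changes only the running kernel, so the value returns to its default after a reboot. One way to make it stick is a sysctl.d drop-in, sketched below; the function name and the file name `99-ip-forward.conf` are assumptions.

```shell
# Write a sysctl drop-in that enables IPv4 forwarding at every boot.
# The target path is a parameter so the function can be tried on a scratch file.
persist_ip_forward() {
  local conf=${1:-/etc/sysctl.d/99-ip-forward.conf}
  printf 'net.ipv4.ip_forward = 1\n' > "$conf"
}
```

Running `persist_ip_forward && sysctl --system` as root writes the file and applies it immediately.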

cgroup driver mismatch between Docker and the kubelet

[kubelet-check] It seems like the kubelet isn't running or healthy.

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

...

Unfortunately, an error has occurred:

timed out waiting for the condition

This error is likely caused by:

- The kubelet is not running

- The kubelet is unhealthy due to a misconfiguration of the node in some way (required cgroups disabled)

If you are on a systemd-powered system, you can try to troubleshoot the error with the following commands:

- 'systemctl status kubelet'

- 'journalctl -xeu kubelet'

Additionally, a control plane component may have crashed or exited when started by the container runtime.

To troubleshoot, list all containers using your preferred container runtimes CLI.

Here is one example how you may list all Kubernetes containers running in docker:

- 'docker ps -a | grep kube | grep -v pause'

Once you have found the failing container, you can inspect its logs with:

- 'docker logs CONTAINERID'

error execution phase wait-control-plane: couldn't initialize a Kubernetes cluster

To see the stack trace of this error execute with --v=5 or higher

Troubleshooting

Check the log with tail /var/log/messages. It shows that the kubelet cgroup driver is systemd while the Docker cgroup driver is cgroupfs; the two do not match.

Apr 21 11:28:12 VM-0-9-centos kubelet: E0421 11:28:12.598760 5682 server.go:302] "Failed to run kubelet" err="failed to run Kubelet: misconfiguration: kubelet cgroup driver: "systemd" is different from docker cgroup driver: "cgroupfs""

Apr 21 11:28:12 VM-0-9-centos systemd: Unit kubelet.service entered failed state.

Apr 21 11:28:12 VM-0-9-centos systemd: kubelet.service failed.

Solution

Option 1: align the kubelet's driver with Docker's.

Option 2: align Docker's driver with the kubelet's.

Here Docker's cgroup driver is changed to systemd (option 2).

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

Restart Docker

systemctl restart docker

Check the kubelet and Docker cgroup driver settings; both now use the same driver.

[root@VM-0-9-centos ~]# cat /var/lib/kubelet/config.yaml |grep group

cgroupDriver: systemd

[root@VM-0-9-centos ~]# docker info | grep -i "Cgroup Driver"

Cgroup Driver: systemd
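The same comparison can be scripted so that it runs before every kubeadm init. This is a minimal sketch with hypothetical helper names; it reads the cgroupDriver key from a kubelet config file and compares it with a driver name supplied by the caller.

```shell
# Print the cgroupDriver value from a kubelet config file.
kubelet_cgroup_driver() {
  awk -F': *' '$1 == "cgroupDriver" { print $2; exit }' "$1"
}

# Succeed when the two driver names are identical.
drivers_match() {
  [ "$1" = "$2" ]
}
```

On the host, `drivers_match "$(kubelet_cgroup_driver /var/lib/kubelet/config.yaml)" "$(docker info --format '{{.CgroupDriver}}')" || echo "cgroup drivers differ"` reports a mismatch.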

Ports in use during init

[init] Using Kubernetes version: v1.23.5

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Port-10259]: Port 10259 is in use

[ERROR Port-10257]: Port 10257 is in use

[ERROR FileAvailable--etc-kubernetes-manifests-kube-apiserver.yaml]: /etc/kubernetes/manifests/kube-apiserver.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-controller-manager.yaml]: /etc/kubernetes/manifests/kube-controller-manager.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-kube-scheduler.yaml]: /etc/kubernetes/manifests/kube-scheduler.yaml already exists

[ERROR FileAvailable--etc-kubernetes-manifests-etcd.yaml]: /etc/kubernetes/manifests/etcd.yaml already exists

[ERROR Port-10250]: Port 10250 is in use

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

Solution

Run reset to release the occupied ports and files:

kubeadm reset

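Initial timeout of 40s passed (initialization timeout)

The usual cause is that --apiserver-advertise-address is set to the public IP, which is unreachable from the host itself. Cloud servers sit in a VPC: the system only sees the private IP, and the public IP is mapped to it through NAT. That is why ifconfig shows only the private IP.

Solution

Remove --apiserver-advertise-address from the init command or set it to the private IP, then initialize again. Hosts communicate with each other through iptables forwarding.

Checklist when initialization fails without a clear error

- Image pull failed

- Check whether the containers started normally

- Check the firewall configuration

- If init fails, run kubeadm reset to clear the initialization state.

Cannot access GitHub

Download the file from GitHub manually and place it in the expected path.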

execute keadm command failed: failed to exec 'bash -c cd /etc/kubeedge/ && wget -k --no-check-certificate --progress=bar:force https://github.com/kubeedge/kubeedge/releases/download/v1.10.0/kubeedge-v1.10.0-linux-amd64.tar.gz', err: --2022-04-22 17:30:02-- https://github.com/kubeedge/kubeedge/releases/download/v1.10.0/kubeedge-v1.10.0-linux-amd64.tar.gz
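The error message shows both the file keadm wants and where it downloads it (it runs wget inside /etc/kubeedge/). A small sketch that rebuilds the release URL from a version and architecture, so the archive can be fetched on a machine with GitHub access and copied into that directory; `kubeedge_release_url` is a hypothetical helper and the URL pattern is taken from the log line above.

```shell
# Print the release archive URL for a KubeEdge version and CPU architecture.
# Arguments: <version, e.g. v1.10.0> <arch, e.g. amd64>
kubeedge_release_url() {
  local version=$1 arch=$2
  printf 'https://github.com/kubeedge/kubeedge/releases/download/%s/kubeedge-%s-linux-%s.tar.gz\n' \
    "$version" "$version" "$arch"
}
```

For example, `wget "$(kubeedge_release_url v1.10.0 amd64)"` on a machine that can reach GitHub, followed by `scp` of the archive into /etc/kubeedge/ on the target host.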

Edge node reports a successful join but does not appear on the cloud side

# Check edgecore status

systemctl status edgecore.service

# View the logs

journalctl -u edgecore -n 50

Error 1

init new edged error, misconfiguration: kubelet cgroup driver: "cgroupfs" is different from docker cgroup driver: "system"

See "cgroup driver mismatch between Docker and the kubelet" above.

Other

Remove edgecore

./keadm reset

rm -rf /var/lib/kubeedge /var/lib/edged /etc/kubeedge

rm -rf /etc/systemd/system/edgecore.service

rm -rf /usr/local/bin/edgecore