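// Assumption: three.js core is not already loaded as a global via a <script> tag; the path mirrors the addon imports below.
import * as THREE from "./three/build/three.module.js";

import { OrbitControls } from "./three/examples/jsm/controls/OrbitControls.js";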

import { GUI } from "./three/examples/jsm/libs/dat.gui.module.js";

import { RGBELoader } from "./three/examples/jsm/loaders/RGBELoader.js";

import { EquirectangularToCubeGenerator } from "./three/examples/jsm/loaders/EquirectangularToCubeGenerator.js";

import { PMREMGenerator } from "./three/examples/jsm/pmrem/PMREMGenerator.js";

import { PMREMCubeUVPacker } from "./three/examples/jsm/pmrem/PMREMCubeUVPacker.js";

var camera, scene, renderer, controls;

var meshCube,uniformsCube,materialCube,materialBox,meshBox;

var vShader,vCubeMap,fPBR,fHdrDecode,fIrradianceConvolute,fPrefilter,vPlane,fBRDF,fSimplePlane,vSimplePlane;

var envMap,cubeCamera,cubeCamera2;

var cubeCameraPrefilter0,cubeCameraPrefilter1,cubeCameraPrefilter2,cubeCameraPrefilter3,cubeCameraPrefilter4;

var materialPrefilterBox,meshPrefilterBox0,meshPrefilterBox1,meshPrefilterBox2,meshPrefilterBox3,meshPrefilterBox4;

var bufferScene,bufferTexture;

var materialBallB,meshBallB;

var container;

const lightStrength = 2.20;

var directionalLightDir = new THREE.Vector3(10.0, 10.0, 10.0);

var params = {

metallic: 0.78,

roughness: 0.26

};

function init() {

container = document.createElement("div");

document.body.appendChild(container);

camera = new THREE.PerspectiveCamera(

60,

window.innerWidth / window.innerHeight,

1,

100000

);

camera.position.x = 0;

camera.position.y = 0;

camera.position.z = 15;

scene = new THREE.Scene();

renderer = new THREE.WebGLRenderer();

var directionalLight=new THREE.DirectionalLight(0xff0000);

directionalLight.position.set(0,100,100);

var directionalLightHelper=new THREE.DirectionalLightHelper(directionalLight,50,0xff0000);

renderer.setPixelRatio(window.devicePixelRatio);

renderer.setSize(window.innerWidth, window.innerHeight);

renderer.gammaOutput = true;

container.appendChild(renderer.domElement);

controls = new OrbitControls(camera, renderer.domElement);

var gui = new GUI();

gui.add(params, "metallic", 0, 1);

gui.add(params, "roughness", 0, 1);

gui.open();

var loader = new THREE.FileLoader();

var numFilesLeft = 10;

function runMoreIfDone() {

--numFilesLeft;

if (numFilesLeft === 0) {

more();

}

}

loader.load("./shaders/vertex.vs", function(data) {

vShader = data;

runMoreIfDone();

});

loader.load("./shaders/pbr.frag", function(data) {

fPBR = data;

runMoreIfDone();

});

loader.load("./shaders/cubeMap.vs", function(data) {

vCubeMap = data;

runMoreIfDone();

});

loader.load("./shaders/hdrDecode.frag", function(data) {

fHdrDecode = data;

runMoreIfDone();

});

loader.load("./shaders/irradianceConvolute.frag", function(data) {

fIrradianceConvolute = data;

runMoreIfDone();

});

loader.load("./shaders/prefilter.frag", function(data) {

fPrefilter = data;

runMoreIfDone();

});

loader.load("./shaders/plane.vs", function(data) {

vPlane = data;

runMoreIfDone();

});

loader.load("./shaders/BRDF.frag", function(data) {

fBRDF = data;

runMoreIfDone();

});

loader.load("./shaders/SimplePlane.frag", function(data) {

fSimplePlane = data;

runMoreIfDone();

});

loader.load("./shaders/SimplePlane.vs", function(data) {

vSimplePlane = data;

runMoreIfDone();

});

}
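The `numFilesLeft` counter in `init()` is a countdown latch: each loader callback decrements it, and the last one starts the rest of the setup. A minimal sketch of the same pattern as a reusable helper follows. `makeCountdown` and `fakeLoad` are hypothetical names, and the loader is simulated so the snippet runs without three.js.

```javascript
// Hypothetical helper: returns a callback that fires `onDone` once it has
// been called `count` times, mirroring numFilesLeft / runMoreIfDone.
function makeCountdown(count, onDone) {
  let remaining = count;
  return function oneFinished() {
    remaining -= 1;
    if (remaining === 0) {
      onDone();
    }
  };
}

// Simulated loader standing in for THREE.FileLoader.load(url, onLoad).
function fakeLoad(url, onLoad) {
  onLoad("// contents of " + url);
}

const shaderUrls = ["./shaders/vertex.vs", "./shaders/pbr.frag", "./shaders/cubeMap.vs"];
const sources = {};
let startedCount = 0;

const fileLoaded = makeCountdown(shaderUrls.length, function () {
  startedCount += 1; // stands in for the call to more()
});

for (const url of shaderUrls) {
  fakeLoad(url, function (data) {
    sources[url] = data;
    fileLoaded();
  });
}
```

Deriving the count from the URL list avoids the hard-coded `10`, which has to be kept in sync by hand whenever a shader file is added or removed.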

- Load the HDR image and the background, and generate the envMap cube map

- Then use the generated cube map as the scene background

new RGBELoader()

.setType( THREE.UnsignedByteType )

.setPath( './three/examples/textures/equirectangular/' )

.load(

"venice_sunset_2k.hdr",

function(texture) {

var cubeGenerator = new EquirectangularToCubeGenerator(texture, {

resolution: 1024

});

cubeGenerator.update(renderer);

var pmremGenerator = new PMREMGenerator(

cubeGenerator.renderTarget.texture

);

pmremGenerator.update(renderer);

var pmremCubeUVPacker = new PMREMCubeUVPacker(pmremGenerator.cubeLods);

pmremCubeUVPacker.update(renderer);

envMap = pmremCubeUVPacker.CubeUVRenderTarget.texture;

pmremGenerator.dispose();

pmremCubeUVPacker.dispose();

scene.background = cubeGenerator.renderTarget;

}

);

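- The RGBA values of the HDR file are converted to RGB by a dedicated algorithm (referred to here as tone mapping); the fragment shader below decodes the exponent stored in the alpha channel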

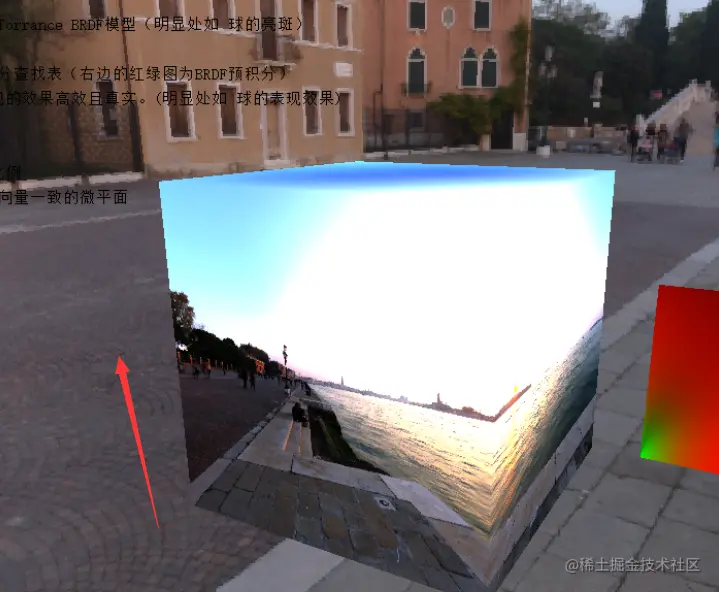

- After the texture is applied, meshCube renders as shown here

varying vec3 cubeMapTexcoord;

// Render the cube normally; the object-space position doubles as the cube map lookup direction

void main(){

cubeMapTexcoord = position;

gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );

}

uniform samplerCube tCube;

varying vec3 cubeMapTexcoord;

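// How an .hdr (RGBE) file stores each floating-point value:

// each color channel holds an 8-bit mantissa, and the alpha channel holds the shared exponent,

// so the value is decoded as mantissa * 2^(exponent - 128)

vec3 hdrDecode(vec4 encoded){

// alpha is normalized to [0, 1], so multiply by 255.0 (not 256.0) to recover the exponent byte

float exponent = encoded.a * 255.0 - 128.0;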

vec3 mantissa = encoded.rgb;

return exp2(exponent) * mantissa;

}

void main(){

vec4 color = textureCube(tCube, cubeMapTexcoord);

vec3 envColor = hdrDecode(color);

gl_FragColor = vec4(envColor, 1.0);

}
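To make the shared-exponent decode concrete, here is a minimal CPU-side sketch of the same idea. It works on raw bytes, so the exponent is simply `e - 128`. `decodeRGBE` is a hypothetical name, and normalizing the mantissas by 255 is an assumption that matches how a byte texture is read in a shader.

```javascript
// Decode one RGBE texel given as four bytes (0..255).
// Assumption: mantissas are normalized by 255, as a shader sees them
// when the texture is uploaded with an unsigned byte type.
function decodeRGBE(r, g, b, e) {
  const scale = Math.pow(2, e - 128); // shared exponent for all three channels
  return [(r / 255) * scale, (g / 255) * scale, (b / 255) * scale];
}

// Exponent byte 130 gives a scale of 2^2 = 4.
const texel = decodeRGBE(255, 128, 0, 130);
```

A single exponent per texel is what lets the format cover a wide brightness range with only 32 bits.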

var geometryCube = new THREE.BoxBufferGeometry(10, 10, 10);

var uniformsCubeOriginal = {

"tCube": { value: null }

};

uniformsCube = THREE.UniformsUtils.clone(uniformsCubeOriginal);

uniformsCube["tCube"].value = envMap;

materialCube = new THREE.ShaderMaterial({

uniforms: uniformsCube,

vertexShader: vCubeMap,

fragmentShader: fHdrDecode,

side: THREE.BackSide

});

meshCube = new THREE.Mesh(geometryCube, materialCube);

scene.add(meshCube);

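- Sample the environment in a shader

- cubeCamera.renderTarget.texture should be the texture after tone mapping, i.e. the environment as rendered by the decode shader on meshCube

- The result is shown below (rendering this image is very slow: with sampleDelta = 0.025 each fragment takes roughly 252 × 63 ≈ 16,000 cube map samples)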

varying vec3 cubeMapTexcoord;

// Render the cube normally; the object-space position doubles as the cube map lookup direction

void main(){

cubeMapTexcoord = position;

gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );

}

// Precompute the diffuse irradiance integral of the reflectance equation

uniform samplerCube tCube;

varying vec3 cubeMapTexcoord;

const float PI = 3.1415926;

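// Step size of the Riemann sum

const float sampleDelta = 0.025;

void main(){

// Sample the hemisphere in tangent space

// The world-space direction acts as the normal of a tangent surface at the origin: normalizing the cube position gives a direction on the sphere, which is the normal

// Given this normal, accumulate all incoming radiance from the environment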

vec3 N = normalize(cubeMapTexcoord);

vec3 up = vec3(0.0, 1.0, 0.0);

vec3 right = normalize(cross(up, N));

up = normalize(cross(N, right));

float nrSamples = 0.0;

vec3 irradiance = vec3(0.0);

// Two nested loops generate the sampling directions

for(float phi = 0.0; phi < 2.0 * PI; phi += sampleDelta){

float cosPhi = cos(phi);

float sinPhi = sin(phi);

for(float theta = 0.0; theta < 0.5 * PI; theta += sampleDelta){

float cosTheta = cos(theta);

float sinTheta = sin(theta);

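// Spherical to Cartesian coordinates, in tangent space

vec3 tangentDir = vec3(cosPhi * sinTheta, sinPhi * sinTheta, cosTheta);

// Tangent space to world space

// Instead of building a TBN matrix as usual, use the three tangent-space basis vectors computed above directly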

vec3 worldDir = tangentDir.x * right + tangentDir.y * up + tangentDir.z * N;

irradiance += textureCube(tCube, worldDir).rgb * cosTheta * sinTheta;

nrSamples++;

}

}

irradiance = irradiance * (1.0 / float(nrSamples)) * PI;

gl_FragColor = vec4(irradiance, 1.0);

}
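A quick way to sanity-check the normalization in this shader (the `PI / nrSamples` factor) is to run the same Riemann sum on the CPU with a constant environment. The sketch below reuses the shader's loop bounds and weights. `integrateIrradiance` is a hypothetical name, and the `radiance` callback stands in for the cube map lookup.

```javascript
// Riemann-sum irradiance with the same loop bounds and weights as the shader.
// `radiance(phi, theta)` stands in for textureCube(tCube, worldDir).rgb.
function integrateIrradiance(radiance, sampleDelta) {
  let sum = 0;
  let nrSamples = 0;
  for (let phi = 0; phi < 2 * Math.PI; phi += sampleDelta) {
    for (let theta = 0; theta < 0.5 * Math.PI; theta += sampleDelta) {
      // cos(theta) is the Lambert term, sin(theta) the solid-angle weight.
      sum += radiance(phi, theta) * Math.cos(theta) * Math.sin(theta);
      nrSamples += 1;
    }
  }
  return (Math.PI * sum) / nrSamples;
}

// A uniform environment of radiance 1 should integrate to roughly 1.
const uniformResult = integrateIrradiance(() => 1.0, 0.025);
```

With a constant input the result should land close to 1.0, which suggests the scale factor is consistent. The sample count also grows with the inverse square of `sampleDelta`, which is why this pass is expensive.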

cubeCamera = new THREE.CubeCamera( 1, 100,512);

scene.add( cubeCamera );

var geometryBox = new THREE.BoxBufferGeometry(10, 10, 10);

var uniformsBoxOriginal = {

"tCube": { value: null }

};

var uniformsBox = THREE.UniformsUtils.clone(uniformsBoxOriginal);

uniformsBox["tCube"].value = cubeCamera.renderTarget.texture;

materialBox = new THREE.ShaderMaterial({

uniforms: uniformsBox,

vertexShader: vCubeMap,

fragmentShader: fIrradianceConvolute,

side: THREE.BackSide

});

meshBox = new THREE.Mesh(geometryBox, materialBox);

meshBox.position.x += 10.0;

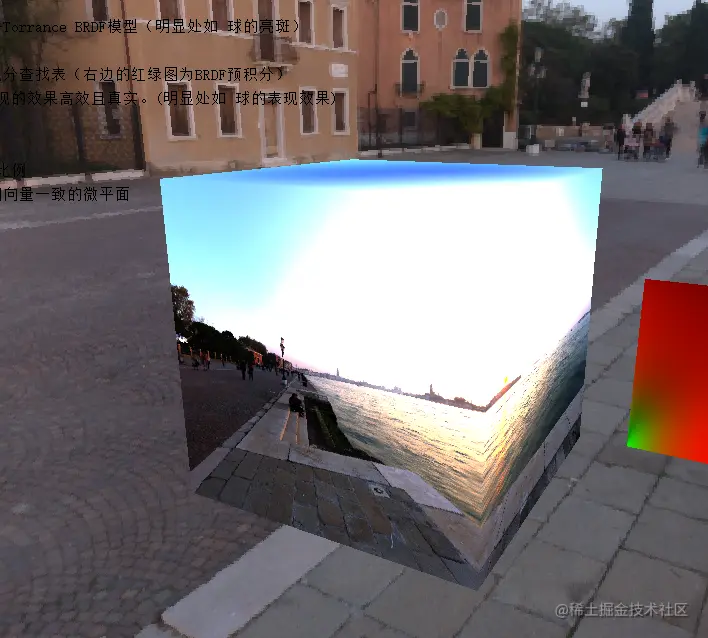

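- cubeCamera.renderTarget.texture should be the texture after tone mapping

- The main purpose is to blur the map progressively so that it matches the appearance at different roughness values

- The following code generates maps for 5 roughness levels; the result looks similar to the image below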

varying vec3 cubeMapTexcoord;

// Render the cube normally; the object-space position doubles as the cube map lookup direction

void main(){

cubeMapTexcoord = position;

gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );

}

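// Precompute the environment-map part of the specular integral

// Build a 2D Hammersley sequence and importance-sample by combining the normal distribution function with cosine mapping, to evaluate the Monte Carlo integral

// Roughness is split into 5 levels; each level is importance-sampled with its own roughness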

uniform samplerCube tCube;

uniform float roughness;

varying vec3 cubeMapTexcoord;

const float PI = 3.1415926;

// Van der Corput sequence (for WebGL versions without bit-shift operators)

float VanDerCorpus(int n, int base){

float invBase = 1.0 / float(base);

float denom = 1.0;

float result = 0.0;

for(int i = 0; i < 32; ++i){

if(n > 0){

denom = mod(float(n), 2.0);

result += denom * invBase;

invBase = invBase / 2.0;

n = int(float(n) / 2.0);

}

}

return result;

}

// Low-discrepancy sequence: the Hammersley sequence,

// built from the Van der Corput sequence

vec2 HammersleyNoBitOps(int i, int N){

return vec2(float(i)/float(N), VanDerCorpus(i, 2));

}

// Importance sampling that combines the normal distribution function with cosine mapping

vec3 ImportanceSampleGGX(vec2 Xi, vec3 N, float roughness){

float a = roughness * roughness;

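// Importance-sample in spherical coordinates by combining the normal distribution function with cosine mapping

// Map the Hammersley point x_i = (u, v)^T to (\phi, \theta), then convert it to a Cartesian vector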

float phi = 2.0 * PI * Xi.x;

float cosTheta = sqrt((1.0 - Xi.y) / (1.0 + (a*a - 1.0) * Xi.y));

float sinTheta = sqrt(1.0 - cosTheta * cosTheta);

// Spherical to Cartesian coordinates

vec3 H;

H.x = cos(phi) * sinTheta;

H.y = sin(phi) * sinTheta;

H.z = cosTheta;

// Tangent space to world space

vec3 up = abs(N.z) < 0.999 ? vec3(0.0, 0.0, 1.0) : vec3(1.0, 0.0, 0.0);

vec3 tangent = normalize(cross(up, N));

vec3 bitangent = cross(N, tangent);

vec3 sampleVec = tangent * H.x + bitangent * H.y + N * H.z;

return normalize(sampleVec);

}

void main(){

vec3 N = normalize(cubeMapTexcoord);

vec3 V = N;

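vec3 R = N; // Assume the view direction equals the specular reflection direction, which equals the output sample direction and the normal

// This approximation turns the NDF lobe from anisotropic into isotropic

// The most visible consequence is that stretched reflections are lost when the surface is viewed at grazing angles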

const int SAMPLE_COUNT = 1024;

vec3 color = vec3(0.0);

float totalWeight = 0.0;

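// Take the samples

for(int i = 0; i < SAMPLE_COUNT; i++){

// Generate a Hammersley point for this sample

vec2 Xi = HammersleyNoBitOps(i, SAMPLE_COUNT);

// Importance sampling biases the sample vector

// The result is a sample vector oriented roughly around the expected microfacet half vector

vec3 H = ImportanceSampleGGX(Xi, N, roughness);

// Derive the light direction by reflecting V about H

vec3 L = normalize(2.0 * dot(V, H) * H - V);

// If the dot product of this light direction and the normal is positive, include the sample in the pre-integration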

float NdotL = max(dot(N, L), 0.0);

if(NdotL > 0.0){

// Epic Games found that weighting by an extra cosine term gives better results, so each sample is also multiplied by NdotL

color += textureCube(tCube, L).rgb * NdotL;

totalWeight += NdotL;

}

}

color /= totalWeight;

gl_FragColor = vec4(color, 1.0);

}
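The two building blocks of this shader, the bit-free Van der Corput radical inverse and the GGX importance sample, can be checked on the CPU. The sketch below is a tangent-space version (normal fixed at +Z, so the tangent-to-world step is omitted). The function names are hypothetical JavaScript counterparts of the GLSL ones.

```javascript
// Radical inverse in base 2 without bit operations (Van der Corput sequence).
function vanDerCorput(n) {
  let invBase = 0.5;
  let result = 0;
  while (n > 0) {
    result += (n % 2) * invBase; // lowest binary digit, mirrored behind the point
    invBase /= 2;
    n = Math.floor(n / 2);
  }
  return result;
}

// i-th point of a `count`-point Hammersley set in [0, 1)^2.
function hammersley(i, count) {
  return [i / count, vanDerCorput(i)];
}

// GGX importance sample in tangent space (normal = +Z).
function importanceSampleGGX(xi, roughness) {
  const a = roughness * roughness;
  const phi = 2 * Math.PI * xi[0];
  const cosTheta = Math.sqrt((1 - xi[1]) / (1 + (a * a - 1) * xi[1]));
  const sinTheta = Math.sqrt(Math.max(0, 1 - cosTheta * cosTheta));
  return [Math.cos(phi) * sinTheta, Math.sin(phi) * sinTheta, cosTheta];
}

// Average cosine between the sampled half vectors and the normal.
function meanCosTheta(roughness, count) {
  let sum = 0;
  for (let i = 0; i < count; i++) {
    sum += importanceSampleGGX(hammersley(i, count), roughness)[2];
  }
  return sum / count;
}

const firstPoints = [1, 2, 3].map((i) => vanDerCorput(i)); // 0.5, 0.25, 0.75
const tightLobe = meanCosTheta(0.1, 1024);
const wideLobe = meanCosTheta(0.9, 1024);
```

A low roughness keeps the half vectors close to the normal, while a high roughness spreads them out. This is what makes the higher prefilter levels blurrier.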

cubeCamera2 = new THREE.CubeCamera( 1, 100, 32 );

scene.add( cubeCamera2 );

cubeCamera2.position.x += 10.0;

cubeCameraPrefilter0 = new THREE.CubeCamera( 1, 100, 128 );

scene.add( cubeCameraPrefilter0 );

cubeCameraPrefilter0.position.x += 20.0;

cubeCameraPrefilter1 = new THREE.CubeCamera( 1, 100, 64 );

scene.add( cubeCameraPrefilter1 );

cubeCameraPrefilter1.position.x += 30.0;

cubeCameraPrefilter2 = new THREE.CubeCamera( 1, 100, 32 );

scene.add( cubeCameraPrefilter2 );

cubeCameraPrefilter2.position.x += 40.0;

cubeCameraPrefilter3 = new THREE.CubeCamera( 1, 100, 16 );

scene.add( cubeCameraPrefilter3 );

cubeCameraPrefilter3.position.x += 50.0;

cubeCameraPrefilter4 = new THREE.CubeCamera( 1, 100, 8 );

scene.add( cubeCameraPrefilter4 );

cubeCameraPrefilter4.position.x += 60.0;

var uniformsPrefilterBoxOriginal = {

"tCube": { value: null },

"roughness" : {value: 0.5}

};

var uniformsPrefilterBox = THREE.UniformsUtils.clone(uniformsPrefilterBoxOriginal);

uniformsPrefilterBox["tCube"].value = cubeCamera.renderTarget.texture;

materialPrefilterBox = new THREE.ShaderMaterial({

uniforms: uniformsPrefilterBox,

vertexShader: vCubeMap,

fragmentShader: fPrefilter,

side: THREE.BackSide

});

meshPrefilterBox0 = new THREE.Mesh(geometryBox, materialPrefilterBox);

meshPrefilterBox0.position.x += 20.0;

meshPrefilterBox1 = new THREE.Mesh(geometryBox, materialPrefilterBox);

meshPrefilterBox1.position.x += 30.0;

meshPrefilterBox2 = new THREE.Mesh(geometryBox, materialPrefilterBox);

meshPrefilterBox2.position.x += 40.0;

meshPrefilterBox3 = new THREE.Mesh(geometryBox, materialPrefilterBox);

meshPrefilterBox3.position.x += 50.0;

meshPrefilterBox4 = new THREE.Mesh(geometryBox, materialPrefilterBox);

meshPrefilterBox4.position.x += 60.0;
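The five cube cameras and five boxes above differ only in render-target size and x offset, and later in the roughness assigned before each capture. A minimal sketch of a table that could drive a loop instead of the repeated statements follows. `prefilterLevels` is a hypothetical helper, and the values are the ones used in this code.

```javascript
// Hypothetical table describing each prefilter level.
function prefilterLevels(count) {
  const levels = [];
  for (let i = 0; i < count; i++) {
    levels.push({
      size: 128 >> i,                              // 128, 64, 32, 16, 8
      offsetX: 20 + 10 * i,                        // 20, 30, 40, 50, 60
      roughness: Math.max(i / (count - 1), 0.025)  // 0.025, 0.25, 0.5, 0.75, 1.0
    });
  }
  return levels;
}

const levels = prefilterLevels(5);
// Each entry would then feed something like:
//   new THREE.CubeCamera(1, 100, level.size) and mesh.position.x = level.offsetX
```

Keeping the per-level data in one place makes it harder for the camera offsets, box offsets and roughness values to drift apart.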

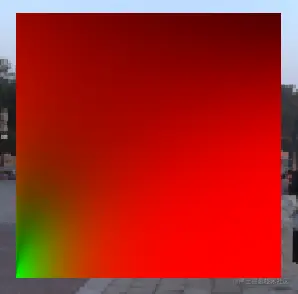

- Compute the BRDF and render it into the bufferTexture map

- The result is shown below

varying vec2 texcoord;

void main(){

texcoord = uv;

gl_Position = vec4( position, 1.0 );

}

// Precompute the BRDF

// The integral is split into two parts: a scale (A) and a bias (B) applied to F0

varying vec2 texcoord;

const float PI = 3.1415926;

// Van der Corput sequence (for WebGL versions without bit-shift operators)

float VanDerCorpus(int n, int base){

float invBase = 1.0 / float(base);

float denom = 1.0;

float result = 0.0;

for(int i = 0; i < 32; ++i){

if(n > 0){

denom = mod(float(n), 2.0);

result += denom * invBase;

invBase = invBase / 2.0;

n = int(float(n) / 2.0);

}

}

return result;

}

// Low-discrepancy sequence: the Hammersley sequence,

// built from the Van der Corput sequence

vec2 HammersleyNoBitOps(int i, int N){

return vec2(float(i)/float(N), VanDerCorpus(i, 2));

}

// Importance sampling that combines the normal distribution function with cosine mapping

vec3 ImportanceSampleGGX(vec2 Xi, vec3 N, float roughness){

float a = roughness * roughness;

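// Importance-sample in spherical coordinates by combining the normal distribution function with cosine mapping

// Map the Hammersley point x_i = (u, v)^T to (\phi, \theta), then convert it to a Cartesian vector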

float phi = 2.0 * PI * Xi.x;

float cosTheta = sqrt((1.0 - Xi.y) / (1.0 + (a*a - 1.0) * Xi.y));

float sinTheta = sqrt(1.0 - cosTheta * cosTheta);

// Spherical to Cartesian coordinates

vec3 H;

H.x = cos(phi) * sinTheta;

H.y = sin(phi) * sinTheta;

H.z = cosTheta;

// Tangent space to world space

vec3 up = abs(N.z) < 0.999 ? vec3(0.0, 0.0, 1.0) : vec3(1.0, 0.0, 0.0);

vec3 tangent = normalize(cross(up, N));

vec3 bitangent = cross(N, tangent);

vec3 sampleVec = tangent * H.x + bitangent * H.y + N * H.z;

return normalize(sampleVec);

}

// Schlick-GGX approximation

// Models geometry obstruction along the view direction

// and geometry shadowing along the light direction

float geometrySub(float NdotL, float mappedRough){

float numerator = NdotL;

float denominator = NdotL * (1.0 - mappedRough) + mappedRough;

return numerator / denominator;

}

// Geometry function: Smith's method combined with the Schlick-GGX approximation

float geometry(float NdotL, float NdotV, float rough){

float mappedRough = rough * rough / 2.0; // k remapping specific to IBL

float geoLight = geometrySub(NdotL, mappedRough);

float geoView = geometrySub(NdotV, mappedRough);

return geoLight * geoView;

}

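// Pre-integrate the BRDF

void main(){

// Build a 2D lookup table whose horizontal axis is NdotV and whose vertical axis is roughness

float NdotV = texcoord.x;

float roughness = texcoord.y;

// Treat N as the tangent-space normal and reconstruct V from NdotV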

vec3 N = vec3(0.0, 0.0, 1.0);

vec3 V;

V.z = NdotV;

V.x = sqrt(1.0 - NdotV * NdotV);

V.y = 0.0;

const int SAMPLE_COUNT = 1024;

float A = 0.0;

float B = 0.0;

for(int i = 0; i < SAMPLE_COUNT; i++){

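// Generate a Hammersley point for this sample

vec2 Xi = HammersleyNoBitOps(i, SAMPLE_COUNT);

// Importance sampling biases the sample vector

// The result is a sample vector oriented roughly around the expected microfacet half vector

vec3 H = ImportanceSampleGGX(Xi, N, roughness);

// Derive the light direction by reflecting V about H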

vec3 L = normalize(2.0 * dot(V, H) * H - V);

float NdotL = max(dot(N, L), 0.0);

float NdotH = max(H.z, 0.0);

float VdotH = max(dot(V, H), 0.0);

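// If the dot product of this light direction and the normal is positive, include the sample in the pre-integration

if(NdotL > 0.0){

// Accumulate both parts of the integral

// Geometry and visibility factor

float G = geometry(NdotL, NdotV, roughness);

float G_Vis = (G / (NdotV * NdotH)) * VdotH;

// Fresnel power term (1 - VdotH)^5

float Fc = pow(1.0 - VdotH, 5.0);

// Accumulate both parts; A and B are stored as color channels so that later passes can read them and combine them with F0 directly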

A += (1.0 - Fc) * G_Vis;

B += Fc * G_Vis;

}

}

A /= float(SAMPLE_COUNT);

B /= float(SAMPLE_COUNT);

gl_FragColor = vec4(A, B, 0.0, 1.0);

}
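The lookup table produced by this shader can be spot-checked on the CPU. The sketch below ports the same loop to JavaScript under one simplification: since N is fixed at +Z, the half vector is built directly in tangent space, which skips the tangent-frame step (that step only rotates the samples about N). `integrateBRDF` and the helper names are hypothetical.

```javascript
// Radical inverse in base 2 without bit operations.
function vanDerCorput(n) {
  let invBase = 0.5;
  let result = 0;
  while (n > 0) {
    result += (n % 2) * invBase;
    invBase /= 2;
    n = Math.floor(n / 2);
  }
  return result;
}

// Schlick-GGX term for one direction.
function geometrySub(nDotX, k) {
  return nDotX / (nDotX * (1 - k) + k);
}

// Returns [A, B], the scale and bias applied to F0 (N = +Z, tangent space).
function integrateBRDF(NdotV, roughness, sampleCount) {
  const V = [Math.sqrt(1 - NdotV * NdotV), 0, NdotV];
  const a = roughness * roughness;
  const k = a / 2; // IBL remapping, as in the shader
  let A = 0;
  let B = 0;
  for (let i = 0; i < sampleCount; i++) {
    const u = i / sampleCount;
    const v = vanDerCorput(i);
    const phi = 2 * Math.PI * u;
    const cosTheta = Math.sqrt((1 - v) / (1 + (a * a - 1) * v));
    const sinTheta = Math.sqrt(Math.max(0, 1 - cosTheta * cosTheta));
    const H = [Math.cos(phi) * sinTheta, Math.sin(phi) * sinTheta, cosTheta];
    const rawVdotH = V[0] * H[0] + V[1] * H[1] + V[2] * H[2];
    // L = 2 (V.H) H - V; only its z component (N.L) is needed.
    const NdotL = Math.max(2 * rawVdotH * H[2] - V[2], 0);
    const NdotH = Math.max(H[2], 0);
    const VdotH = Math.max(rawVdotH, 0);
    if (NdotL > 0) {
      const G = geometrySub(NdotL, k) * geometrySub(NdotV, k);
      const gVis = (G * VdotH) / (NdotV * NdotH);
      const Fc = Math.pow(1 - VdotH, 5);
      A += (1 - Fc) * gVis;
      B += Fc * gVis;
    }
  }
  return [A / sampleCount, B / sampleCount];
}

// Head-on view of a nearly smooth surface: scale near 1, bias near 0.
const [scale, bias] = integrateBRDF(1.0, 0.1, 1024);
```

For a head-on view of a nearly smooth surface the scale should be close to 1 and the bias close to 0, so the specular term reduces to roughly `F0` times the prefiltered color.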

bufferScene = new THREE.Scene();

bufferTexture = new THREE.WebGLRenderTarget( 512, 512);

var geometryBRDF = new THREE.PlaneGeometry(2, 2);

var materialBRDF = new THREE.ShaderMaterial({

vertexShader: vPlane,

fragmentShader: fBRDF

});

var BRDF = new THREE.Mesh( geometryBRDF, materialBRDF );

bufferScene.add( BRDF );

- This pass is for display only

- The result is the same as above

uniform sampler2D texture;

varying vec2 texcoord;

void main() {

vec4 color = texture2D(texture, texcoord);

gl_FragColor = vec4(color.xyz, 1.0);

}

varying vec2 texcoord;

void main(){

texcoord = uv;

gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );

}

var geometryPlane = new THREE.PlaneGeometry( 5, 5 );

var uniformsPlaneOriginal = {

"texture": { value: null }

};

var uniformsPlane = THREE.UniformsUtils.clone(uniformsPlaneOriginal);

uniformsPlane["texture"].value = bufferTexture.texture;

var materialPlane = new THREE.ShaderMaterial( {

uniforms: uniformsPlane,

vertexShader: vSimplePlane,

fragmentShader: fSimplePlane,

side: THREE.DoubleSide

} );

var plane = new THREE.Mesh( geometryPlane, materialPlane );

scene.add( plane );

plane.position.x += 10;

- Feed all of the maps above into the final shading pass for the sphere

varying vec3 fragPos;

varying vec3 vNormal;

// Output the world-space shading point, the normal, and the clip-space vertex position

void main(){

fragPos = (modelMatrix * vec4(position, 1.0)).xyz;

vNormal = mat3(modelMatrix) * normal;

gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );

}

// PBR shading with IBL plus a direct light

varying vec3 fragPos;

varying vec3 vNormal;

uniform vec3 albedo;

uniform float metallic;

uniform float rough;

uniform vec3 directionalLightDir;

uniform float lightStrength;

uniform samplerCube tCube;

uniform samplerCube prefilterCube0;

uniform samplerCube prefilterCube1;

uniform samplerCube prefilterCube2;

uniform samplerCube prefilterCube3;

uniform samplerCube prefilterCube4;

uniform float prefilterScale;

uniform sampler2D BRDFlut;

const float PI = 3.1415926;

vec3 F0;

// ----------------------------------------------------------------------------

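// Normal distribution function, approximated with Trowbridge-Reitz GGX

// Following Disney's observations, later adopted by Epic Games in their lighting model,

// squaring the roughness in the normal distribution function makes the lighting look more natural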

float distribution(vec3 halfDir, vec3 normal, float rough){

float rough2 = rough * rough;

float NdotH = max(dot(normal, halfDir), 0.0);

float numerator = rough2;

float media = NdotH * NdotH * (rough2 - 1.0) + 1.0;

float denominator = PI * media * media;

return numerator / max(denominator, 0.001);

}

// ----------------------------------------------------------------------------

// Schlick-GGX approximation

// Models geometry obstruction along the view direction

// and geometry shadowing along the light direction

float geometrySub(float NdotL, float mappedRough){

float numerator = NdotL;

float denominator = NdotL * (1.0 - mappedRough) + mappedRough;

return numerator / denominator;

}

// ----------------------------------------------------------------------------

// Geometry function: Smith's method combined with the Schlick-GGX approximation

float geometry(float NdotL, float NdotV, float rough){

float mappedRough = (rough + 1.0) * (rough + 1.0) / 8.0;

float geoLight = geometrySub(NdotL, mappedRough);

float geoView = geometrySub(NdotV, mappedRough);

return geoLight * geoView;

}

// ----------------------------------------------------------------------------

// Fresnel equation, approximated with Fresnel-Schlick

vec3 fresnel(vec3 baseReflect, vec3 viewDir, vec3 halfDir){

float HdotV = clamp(dot(halfDir, viewDir), 0.0, 1.0);

float media = pow((1.0 - HdotV), 5.0);

return baseReflect + (1.0 -baseReflect) * media;

}

// Direct lighting, evaluated from the reflectance equation

vec3 explicitLighting(vec3 normal, vec3 viewDir, float NdotV){

vec3 lightDir = normalize(directionalLightDir);

vec3 halfDir = normalize(viewDir + lightDir);

float NdotL = max(dot(normal, lightDir), 0.0);

// Compute D, F and G

float D = distribution(halfDir, normal, rough);

vec3 F = fresnel(F0, viewDir, halfDir);

float G = geometry(NdotL, NdotV, rough);

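// Energy conservation: whatever light is not reflected is refracted

vec3 kd = vec3(1.0) - F;

// Metals do not refract light, so they have no diffuse term

// kd therefore becomes 0 for a fully metallic surface

// and is interpolated for values in between

kd *= 1.0 - metallic;

// The full result is the integral of the reflectance equation over the hemisphere Ω

// but no integration is needed here because this is direct lighting:

// evaluating the reflectance equation for the single light direction is enough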

vec3 difBRDF = kd * albedo / PI;

vec3 specBRDF = D * F * G / max((4.0 * NdotV * NdotL), 0.001);

vec3 BRDF = difBRDF + specBRDF;

// Light intensity

vec3 radiance = vec3(lightStrength);

return BRDF * radiance * NdotL;

}

// Fresnel-Schlick approximation with a roughness term

// At roughness 1 the result reduces to F0 (no increase toward grazing angles)

vec3 fresnelRoughness(float cosTheta, vec3 F0, float roughness){

return F0 + (max(vec3(1.0 - roughness), F0) - F0) * pow(1.0 - cosTheta, 5.0);

}

// Interpolate between the prefiltered levels according to roughness

vec3 samplePrefilter(vec3 cubeMapTexcoord){

int prefilterLevel = int(floor(prefilterScale));

float coeMix = prefilterScale - float(prefilterLevel);

vec3 colorA, colorB, colorP;

if(prefilterLevel == 0){

colorA = textureCube(prefilterCube0, cubeMapTexcoord).xyz;

colorB = textureCube(prefilterCube1, cubeMapTexcoord).xyz;

}

else if(prefilterLevel == 1){

colorA = textureCube(prefilterCube1, cubeMapTexcoord).xyz;

colorB = textureCube(prefilterCube2, cubeMapTexcoord).xyz;

}

else if(prefilterLevel == 2){

colorA = textureCube(prefilterCube2, cubeMapTexcoord).xyz;

colorB = textureCube(prefilterCube3, cubeMapTexcoord).xyz;

}

else if(prefilterLevel == 3){

colorA = textureCube(prefilterCube3, cubeMapTexcoord).xyz;

colorB = textureCube(prefilterCube4, cubeMapTexcoord).xyz;

}

else{

colorA = textureCube(prefilterCube4, cubeMapTexcoord).xyz;

colorB = textureCube(prefilterCube4, cubeMapTexcoord).xyz;

}

colorP = mix(colorA, colorB, coeMix);

return colorP;

}

void main(){

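// Normal and view vectors

vec3 normal = normalize(vNormal);

vec3 viewDir = normalize(cameraPosition - fragPos);

// Reflection vector

vec3 R = reflect(-viewDir, normal);

// Cosine of the angle between the normal and the view direction

float NdotV = max(dot(normal, viewDir), 0.0);

// In the metallic PBR workflow, an F0 of 0.04 is assumed to look visually correct for most dielectrics

// F0 is then interpolated between that value and the albedo according to the metalness of the surface

F0 = mix(vec3(0.04), albedo, metallic);

// Direct lighting

vec3 colorFromLight = explicitLighting(normal, viewDir, NdotV);

// Fresnel term for the ambient light, which gives kS and kD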

vec3 F = fresnelRoughness(NdotV, F0, rough);

vec3 kS = F;

vec3 kD = 1.0 - kS;

kD *= 1.0 - metallic;

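// Sample the prefiltered environment map (first part of the specular integral)

vec3 prefilteredColor = samplePrefilter(R);

// Sample the pre-integrated BRDF

// texture2D is normally called as texture2D(texture, texcoord);

// BRDFlut is a lookup table, so it is indexed by (NdotV, roughness) instead

vec2 brdf = texture2D(BRDFlut, vec2(NdotV, rough)).xy;

// Sample the pre-integrated irradiance and compute the ambient diffuse term

vec3 ambientDiffuse = kD * albedo * textureCube(tCube, normal).rgb;

// Compute the specular term

vec3 ambientSpecular = prefilteredColor * (F * brdf.x + brdf.y);

vec3 ambient = ambientDiffuse + ambientSpecular;

// Add the direct lighting

vec3 color = ambient + colorFromLight;

// All lighting inputs should stay as close as possible to their physical values,

// so that reflectance (the color values) has a wide range to vary over.

// Tone mapping is therefore applied; the Reinhard operator spreads all luminance values evenly over LDR

color = color / (color + vec3(1.0));

// All computation so far was assumed to be in linear color space, so gamma correction is applied at the end of the shader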

color = pow(color, vec3(1.0/2.2));

gl_FragColor = vec4(color, 1.0);

}
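The last two steps of this shader, Reinhard tone mapping followed by gamma correction, are simple enough to check by hand. A minimal per-channel sketch follows; `reinhard`, `gammaEncode` and `toDisplay` are hypothetical names.

```javascript
// Reinhard operator: maps [0, infinity) into [0, 1).
function reinhard(c) {
  return c / (c + 1);
}

// Gamma correction with the same 2.2 exponent as the shader.
function gammaEncode(c) {
  return Math.pow(c, 1 / 2.2);
}

// Apply both steps to a linear HDR color, channel by channel.
function toDisplay(rgb) {
  return rgb.map((c) => gammaEncode(reinhard(c)));
}

// Black stays black, 1.0 lands in the upper midtones, and very bright values approach 1.
const displayColor = toDisplay([0, 1, 1e6]);
```

The order matters here: tone mapping works on linear values, so the gamma step has to come last.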

var geometryBall = new THREE.SphereBufferGeometry(1, 32, 32);

var uniformsBallOriginalB = {

albedo: {

type: "c",

value: new THREE.Color(0xb87333)

},

metallic: { value: params.metallic },

rough: { value: params.roughness },

directionalLightDir: { value: directionalLightDir },

lightStrength: { value: lightStrength },

"tCube": { value: null },

"prefilterCube0": { value: null },

"prefilterCube1": { value: null },

"prefilterCube2": { value: null },

"prefilterCube3": { value: null },

"prefilterCube4": { value: null },

"prefilterScale": { value: null },

"BRDFlut": { value: null }

};

var uniformsBallB = THREE.UniformsUtils.clone(uniformsBallOriginalB);

uniformsBallB["tCube"].value = cubeCamera2.renderTarget.texture;

uniformsBallB["prefilterCube0"].value = cubeCameraPrefilter0.renderTarget.texture;

uniformsBallB["prefilterCube1"].value = cubeCameraPrefilter1.renderTarget.texture;

uniformsBallB["prefilterCube2"].value = cubeCameraPrefilter2.renderTarget.texture;

uniformsBallB["prefilterCube3"].value = cubeCameraPrefilter3.renderTarget.texture;

uniformsBallB["prefilterCube4"].value = cubeCameraPrefilter4.renderTarget.texture;

uniformsBallB["prefilterScale"].value = 4.0 * params.roughness;

uniformsBallB["BRDFlut"].value = bufferTexture.texture;

materialBallB = new THREE.ShaderMaterial({

uniforms: uniformsBallB,

vertexShader: vShader,

fragmentShader: fPBR

});

meshBallB = new THREE.Mesh(geometryBall, materialBallB);

meshBallB.position.x = 0;

meshBallB.position.y = 0;

meshBallB.position.z = 0;

meshBallB.scale.x = meshBallB.scale.y = meshBallB.scale.z = 3;
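The `prefilterScale` uniform set above (`4.0 * params.roughness`) is what `samplePrefilter` splits into a level index and a blend weight. A small sketch of that mapping follows. `prefilterBlend` is a hypothetical helper, and the clamp at the last level mirrors the shader's final `else` branch.

```javascript
// Map roughness in [0, 1] to two neighboring prefilter levels and a mix weight.
function prefilterBlend(roughness, levelCount) {
  const scale = (levelCount - 1) * roughness; // 4.0 * roughness for five levels
  const level = Math.floor(scale);
  return {
    lower: Math.min(level, levelCount - 1),
    upper: Math.min(level + 1, levelCount - 1), // last level blends with itself
    weight: scale - level
  };
}

const defaultBlend = prefilterBlend(0.26, 5); // the default roughness in params
const roughestBlend = prefilterBlend(1.0, 5);
```

With the default roughness of 0.26, the sphere is shaded almost entirely from level 1 with a small contribution from level 2.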

var flagEnvMap = true;

function render() {

// Update the existing uniform objects in place instead of replacing them every frame

materialBallB.uniforms.metallic.value = params.metallic;

materialBallB.uniforms.rough.value = params.roughness;

var prefilterScale = 4.0 * params.roughness;

materialBallB.uniforms.prefilterScale.value = prefilterScale;

if(envMap && flagEnvMap){

cubeCamera.update( renderer, scene );

scene.remove(meshCube);

scene.add(meshBox);

cubeCamera2.update( renderer, scene );

scene.remove(meshBox);

scene.add(meshPrefilterBox0);

materialPrefilterBox.uniforms["roughness"].value = 0.025;

cubeCameraPrefilter0.update( renderer, scene );

scene.remove(meshPrefilterBox0);

scene.add(meshPrefilterBox1);

materialPrefilterBox.uniforms["roughness"].value = 0.25;

cubeCameraPrefilter1.update( renderer, scene );

scene.remove(meshPrefilterBox1);

scene.add(meshPrefilterBox2);

materialPrefilterBox.uniforms["roughness"].value = 0.5;

cubeCameraPrefilter2.update( renderer, scene );

scene.remove(meshPrefilterBox2);

scene.add(meshPrefilterBox3);

materialPrefilterBox.uniforms["roughness"].value = 0.75;

cubeCameraPrefilter3.update( renderer, scene );

scene.remove(meshPrefilterBox3);

scene.add(meshPrefilterBox4);

materialPrefilterBox.uniforms["roughness"].value = 1.0;

cubeCameraPrefilter4.update( renderer, scene );

scene.remove(meshPrefilterBox4);

renderer.setRenderTarget(bufferTexture);

renderer.render(bufferScene, camera);

renderer.setRenderTarget(null);

scene.add(meshBallB);

flagEnvMap = false;

}

renderer.render(scene, camera);

}

function animate() {

requestAnimationFrame(animate);

controls.update();

render();

}