- Lighting

- Direct lighting (light sources)

- Indirect lighting (IBL, image-based lighting)

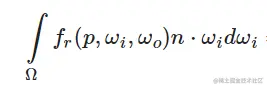

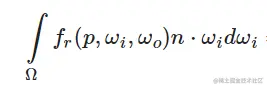

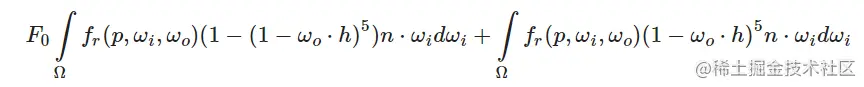

Reflectance equation

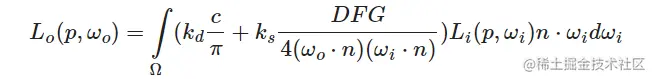

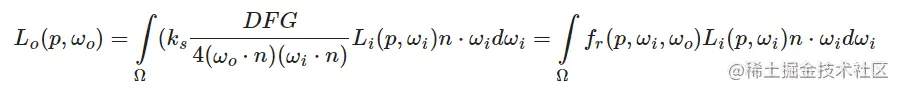
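For reference, here is the reflectance equation with the Cook-Torrance BRDF, written in the notation that fs_pbr follows. Here c is the albedo. The specular term has no separate k_s because F already plays that role, as the comment in fs_pbr notes.

$$
L_o(p,\omega_o)=\int_{\Omega}\left(k_d\,\frac{c}{\pi}+\frac{D\,F\,G}{4\,(\omega_o\cdot n)\,(\omega_i\cdot n)}\right)L_i(p,\omega_i)\,(n\cdot\omega_i)\,d\omega_i,
\qquad k_d=(1-F)(1-\text{metallic})
$$

For direct lighting, the integral collapses to a sum over the four point lights. For IBL, L_i comes from the environment map, and the diffuse and specular terms are precomputed separately.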

Specular part

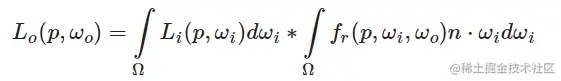

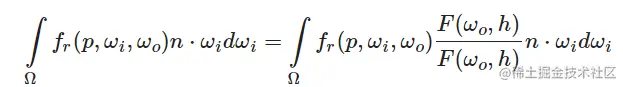

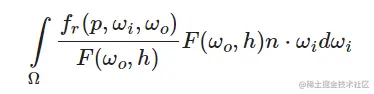

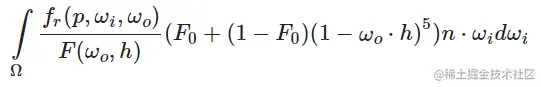

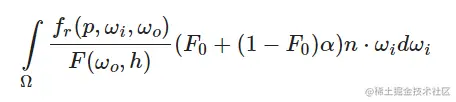

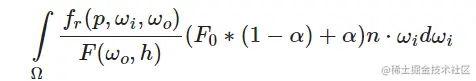

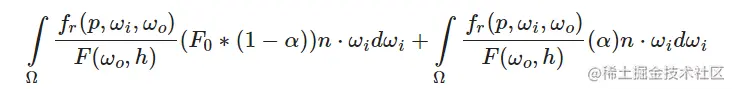

Simplification: the split sum approximation

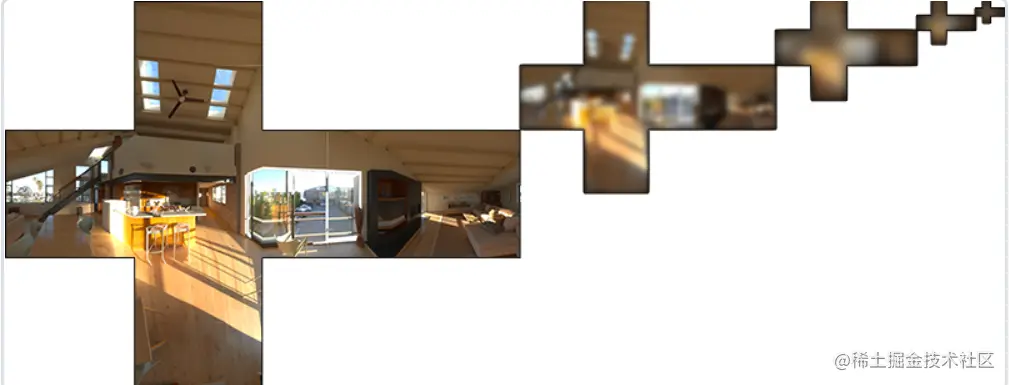
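Written out in the usual notation, the approximation splits the specular integral into a product of two integrals, each precomputed separately:

$$
\int_{\Omega} L_i(p,\omega_i)\,f_r(p,\omega_i,\omega_o)\,(n\cdot\omega_i)\,d\omega_i
\;\approx\;
\int_{\Omega} L_i(p,\omega_i)\,d\omega_i
\;\cdot\;
\int_{\Omega} f_r(p,\omega_i,\omega_o)\,(n\cdot\omega_i)\,d\omega_i
$$

The first factor becomes the pre-filtered environment map and the second becomes the BRDF lookup table. In fs_prefilter, the first factor is estimated from GGX importance samples l_k, weighted by n · l_k, under the assumption n = v = r:

$$
\int_{\Omega} L_i(p,\omega_i)\,d\omega_i \approx \frac{\sum_{k=1}^{N} L_i(p,l_k)\,(n\cdot l_k)}{\sum_{k=1}^{N} (n\cdot l_k)}
$$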

Pre-filtered environment map

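- Similar to the irradiance map, it is a precomputed convolution of the environment map.

- For each roughness level of the convolution, the blurred result is stored, in order, in successive mipmap levels of the pre-filtered map.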
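A minimal JavaScript sketch of this roughness-to-mip mapping follows. It assumes the sizes that toCubemap() uses later: a 128 × 128 base level and maxMipLevels = 5. PREFILTER_BASE_SIZE, PREFILTER_MIP_LEVELS and the helper names are made up for illustration.

```javascript
// Sketch only: constant and function names are hypothetical.
// Sizes assume whPre = 128 and maxMipLevels = 5, as in toCubemap().
const PREFILTER_BASE_SIZE = 128;
const PREFILTER_MIP_LEVELS = 5;

// Roughness baked into a given mip level: 0 (mirror) at level 0, 1 at the last level.
function roughnessForMip(mip) {
  return mip / (PREFILTER_MIP_LEVELS - 1);
}

// Edge length of a mip level; each level halves the previous one.
function sizeForMip(mip) {
  return PREFILTER_BASE_SIZE >> mip;
}

// Inverse mapping used when sampling: a fractional LOD, so trilinear
// filtering can blend the two nearest roughness levels.
function lodForRoughness(roughness) {
  return roughness * (PREFILTER_MIP_LEVELS - 1);
}

for (let mip = 0; mip < PREFILTER_MIP_LEVELS; ++mip) {
  const size = sizeForMip(mip);
  console.log(`mip ${mip}: ${size}x${size}, roughness ${roughnessForMip(mip)}`);
}
```

The build step walks the levels forward (mip to roughness). A lookup goes the other way (roughness to a fractional LOD).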

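Simplification

- Substitute the Fresnel (Schlick) term F to simplify the equation.

- Because f(p, ωi, ωo) already contains the F term, the two cancel out, so F is not evaluated inside f here.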
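Spelled out, substituting Schlick's approximation and pulling F_0 out of the integral gives two terms. The first is a scale A and the second is a bias B. Both depend only on n · ω_o and roughness, which is why they fit in a 2D lookup table. IntegrateBRDF in fs_brdf accumulates exactly these two terms, using Fc = (1 − v · h)^5.

$$
F(\omega_o,h)=F_0+(1-F_0)(1-\omega_o\cdot h)^5=F_0\left(1-(1-\omega_o\cdot h)^5\right)+(1-\omega_o\cdot h)^5
$$

$$
\int_{\Omega} f_r(p,\omega_i,\omega_o)\,(n\cdot\omega_i)\,d\omega_i
= F_0\underbrace{\int_{\Omega}\frac{f_r(p,\omega_i,\omega_o)}{F(\omega_o,h)}\left(1-(1-\omega_o\cdot h)^5\right)(n\cdot\omega_i)\,d\omega_i}_{A}
+\underbrace{\int_{\Omega}\frac{f_r(p,\omega_i,\omega_o)}{F(\omega_o,h)}(1-\omega_o\cdot h)^5\,(n\cdot\omega_i)\,d\omega_i}_{B}
$$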

Code

- Cubemap vertex shader (1. used to render the six cubemap faces; 2. used to generate

export var vs_cubemap =

`#version 300 es

precision mediump float;

layout (location = 0) in vec3 aPos;

out vec3 WorldPos;

uniform mat4 projection;

uniform mat4 view;

void main()

{

WorldPos = aPos;

gl_Position = projection * view * vec4(WorldPos, 1.0);

}`

- equirectangularToCubemap fragment shader (used to render the six cubemap faces)

export var fs_equirectangularToCubemap =

`#version 300 es

precision mediump float;

out vec4 FragColor;

//out uvec4 uFragColor;

in vec3 WorldPos;

uniform sampler2D equirectangularMap;

//conversion from (-pi, pi)=>(-1/2, 1/2) and (-pi/2, pi/2)=>(-1/2, 1/2)

const vec2 invAtan = vec2(0.1591, 0.3183);

vec2 SampleSphericalMap(vec3 v)

{

vec2 uv = vec2(atan(v.z, v.x), asin(v.y));

uv *= invAtan;

uv += 0.5;

return uv;

}

void main()

{

vec2 uv = SampleSphericalMap(normalize(WorldPos));

vec3 color = texture(equirectangularMap, uv).rgb;

FragColor = vec4(color, 1.0);

//uFragColor = uvec4(100, 0, 0, 1);

}`
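The direction-to-UV mapping in SampleSphericalMap() can be checked on the CPU. The JavaScript sketch below mirrors the shader's function name. It uses the exact values 1/(2π) and 1/π in place of the rounded invAtan constants, which is a choice of this sketch.

```javascript
// CPU port of SampleSphericalMap(); exact 1/(2*PI) and 1/PI replace the
// rounded invAtan constants (0.1591, 0.3183) used in the shader.
const INV_ATAN = [1 / (2 * Math.PI), 1 / Math.PI];

// dir: unit direction [x, y, z]; returns [u, v] in [0, 1].
function sampleSphericalMap([x, y, z]) {
  // GLSL atan(y, x) is the two-argument arctangent, i.e. Math.atan2
  const u = Math.atan2(z, x) * INV_ATAN[0] + 0.5; // longitude [-PI, PI] -> [0, 1]
  const v = Math.asin(y) * INV_ATAN[1] + 0.5;     // latitude [-PI/2, PI/2] -> [0, 1]
  return [u, v];
}

console.log(sampleSphericalMap([1, 0, 0])); // +X looks at the middle of the panorama
console.log(sampleSphericalMap([0, 1, 0])); // straight up lands on the top row (v close to 1)
```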

export var vs_background =

`#version 300 es

precision mediump float;

layout (location = 0) in vec3 aPos;

uniform mat4 projection;

uniform mat4 view;

out vec3 WorldPos;

void main()

{

WorldPos = aPos;

mat4 rotView = mat4(mat3(view));

vec4 clipPos = projection * rotView * vec4(WorldPos, 1.0);

gl_Position = clipPos.xyww;

}`

export var fs_background =

`#version 300 es

precision mediump float;

out vec4 FragColor;

in vec3 WorldPos;

uniform samplerCube environmentMap;

void main()

{

vec3 envColor = texture(environmentMap, WorldPos).rgb;

// HDR tonemap and gamma correct

envColor = envColor / (envColor + vec3(1.0));

envColor = pow(envColor, vec3(1.0/2.2));

FragColor = vec4(envColor, 1.0);

}`

- irradianceConvolution fragment shader (used to generate the diffuse irradiance map)

export var fs_irradianceConvolution =

`#version 300 es

precision mediump float;

out vec4 FragColor;

in vec3 WorldPos;

uniform samplerCube environmentMap;

const float PI = 3.14159265359;

void main()

{

// The world vector acts as the normal of a tangent surface

// from the origin, aligned to WorldPos. Given this normal, calculate all

// incoming radiance of the environment. The result of this radiance

// is the radiance of light coming from -Normal direction, which is what

// we use in the PBR shader to sample irradiance.

vec3 N = normalize(WorldPos);

vec3 irradiance = vec3(0.0);

// tangent space calculation from origin point

vec3 up = vec3(0.0, 1.0, 0.0);

vec3 right = normalize(cross(up, N));

up = normalize(cross(N, right));

float sampleDelta = 0.025;

float nrSamples = 0.0;

for(float phi = 0.0; phi < 2.0 * PI; phi += sampleDelta)

{

for(float theta = 0.0; theta < 0.5 * PI; theta += sampleDelta)

{

// spherical to cartesian (in tangent space)

vec3 tangentSample = vec3(sin(theta) * cos(phi), sin(theta) * sin(phi), cos(theta));

// tangent space to world

vec3 sampleVec = tangentSample.x * right + tangentSample.y * up + tangentSample.z * N;

irradiance += texture(environmentMap, sampleVec).rgb * cos(theta) * sin(theta);

nrSamples++;

}

}

irradiance = PI * irradiance * (1.0 / float(nrSamples));

FragColor = vec4(irradiance, 1.0);

}`
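Running the same Riemann sum on the CPU makes the weighting easy to verify. For a uniform environment of radiance 1, the result should come out close to 1. The JavaScript sketch below makes three assumptions of its own:

- `radiance(dir)` is a hypothetical callback standing in for the cubemap lookup.
- The tangent frame is normalized.
- A fallback up vector is used near the poles.

```javascript
// CPU sketch of the fs_irradianceConvolution Riemann sum.
// radiance(dir) is a hypothetical stand-in for texture(environmentMap, dir).
function normalize([x, y, z]) {
  const len = Math.hypot(x, y, z);
  return [x / len, y / len, z / len];
}

function cross([ax, ay, az], [bx, by, bz]) {
  return [ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx];
}

function convolveIrradiance(normal, radiance, sampleDelta = 0.025) {
  const N = normalize(normal);
  // tangent frame around N; use another reference "up" when N is (anti)parallel to +Y
  const upRef = Math.abs(N[1]) < 0.999 ? [0, 1, 0] : [0, 0, 1];
  const right = normalize(cross(upRef, N));
  const up = cross(N, right);

  let sum = 0;
  let nrSamples = 0;
  for (let phi = 0; phi < 2 * Math.PI; phi += sampleDelta) {
    for (let theta = 0; theta < 0.5 * Math.PI; theta += sampleDelta) {
      // spherical -> tangent space -> world space
      const t = [Math.sin(theta) * Math.cos(phi), Math.sin(theta) * Math.sin(phi), Math.cos(theta)];
      const dir = [0, 1, 2].map((k) => t[0] * right[k] + t[1] * up[k] + t[2] * N[k]);
      // cos(theta): Lambert's cosine; sin(theta): smaller rings near the pole cover less solid angle
      sum += radiance(dir) * Math.cos(theta) * Math.sin(theta);
      nrSamples++;
    }
  }
  return (Math.PI * sum) / nrSamples;
}

// A uniform environment of radiance 1 should give approximately 1.
console.log(convolveIrradiance([0, 0, 1], () => 1));
```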

export var fs_prefilter =

`#version 300 es

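precision highp float;

// RadicalInverse_VdC needs full 32-bit integer math; mediump int may be only 16 bits on mobile GPUs

precision highp int;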

out vec4 FragColor;

in vec3 WorldPos;

uniform samplerCube environmentMap;

uniform float roughness;

const float PI = 3.14159265359;

// ----------------------------------------------------------------------------

float DistributionGGX(vec3 N, vec3 H, float roughness)

{

float a = roughness*roughness;

float a2 = a*a;

float NdotH = max(dot(N, H), 0.0);

float NdotH2 = NdotH*NdotH;

float nom = a2;

float denom = (NdotH2 * (a2 - 1.0) + 1.0);

denom = PI * denom * denom;

return nom / denom;

}

// ----------------------------------------------------------------------------

// http://holger.dammertz.org/stuff/notes_HammersleyOnHemisphere.html

// efficient VanDerCorpus calculation.

float RadicalInverse_VdC(uint bits)

{

bits = (bits << 16u) | (bits >> 16u);

bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);

bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);

bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);

bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);

return float(bits) * 2.3283064365386963e-10; // / 0x100000000

}

// ----------------------------------------------------------------------------

vec2 Hammersley(uint i, uint N)

{

return vec2(float(i)/float(N), RadicalInverse_VdC(i));

}

// ----------------------------------------------------------------------------

vec3 ImportanceSampleGGX(vec2 Xi, vec3 N, float roughness)

{

float a = roughness*roughness;

float phi = 2.0 * PI * Xi.x;

float cosTheta = sqrt((1.0 - Xi.y) / (1.0 + (a*a - 1.0) * Xi.y));

float sinTheta = sqrt(1.0 - cosTheta*cosTheta);

// from spherical coordinates to cartesian coordinates - halfway vector

vec3 H;

H.x = cos(phi) * sinTheta;

H.y = sin(phi) * sinTheta;

H.z = cosTheta;

// from tangent-space H vector to world-space sample vector

vec3 up = abs(N.z) < 0.999 ? vec3(0.0, 0.0, 1.0) : vec3(1.0, 0.0, 0.0);

vec3 tangent = normalize(cross(up, N));

vec3 bitangent = cross(N, tangent);

vec3 sampleVec = tangent * H.x + bitangent * H.y + N * H.z;

return normalize(sampleVec);

}

// ----------------------------------------------------------------------------

void main()

{

vec3 N = normalize(WorldPos);

// make the simplifying assumption that V equals R equals the normal

vec3 R = N;

vec3 V = R;

const uint SAMPLE_COUNT = 1024u;

vec3 prefilteredColor = vec3(0.0);

float totalWeight = 0.0;

for(uint i = 0u; i < SAMPLE_COUNT; ++i)

{

// generates a sample vector that's biased towards the preferred alignment direction (importance sampling).

vec2 Xi = Hammersley(i, SAMPLE_COUNT);

vec3 H = ImportanceSampleGGX(Xi, N, roughness);

vec3 L = normalize(2.0 * dot(V, H) * H - V);

float NdotL = max(dot(N, L), 0.0);

if(NdotL > 0.0)

{

// sample from the environment's mip level based on roughness/pdf

float D = DistributionGGX(N, H, roughness);

float NdotH = max(dot(N, H), 0.0);

float HdotV = max(dot(H, V), 0.0);

float pdf = D * NdotH / (4.0 * HdotV) + 0.0001;

float resolution = 512.0; // resolution of source cubemap (per face)

float saTexel = 4.0 * PI / (6.0 * resolution * resolution);

float saSample = 1.0 / (float(SAMPLE_COUNT) * pdf + 0.0001);

float mipLevel = roughness == 0.0 ? 0.0 : 0.5 * log2(saSample / saTexel);

prefilteredColor += textureLod(environmentMap, L, mipLevel).rgb * NdotL;

totalWeight += NdotL;

}

}

prefilteredColor = prefilteredColor / totalWeight;

FragColor = vec4(prefilteredColor, 1.0);

}`
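The shader picks its mip level by comparing two solid angles. If a sample covers more solid angle than a texel does, it reads from a blurrier level of the source cubemap. That choice can be reproduced on the CPU. The JavaScript sketch below mirrors the shader's constants (1024 samples, 512 × 512 faces). The function names are illustrative.

```javascript
// CPU port of the mip-level selection in fs_prefilter (names are illustrative).
// GGX normal distribution D(n.h), same formula as DistributionGGX().
function distributionGGX(NdotH, roughness) {
  const a = roughness * roughness;
  const a2 = a * a;
  const denom = NdotH * NdotH * (a2 - 1) + 1;
  return a2 / (Math.PI * denom * denom);
}

// Source mip level to read for one importance sample.
// Defaults mirror the shader: SAMPLE_COUNT = 1024, resolution = 512 per face.
function prefilterMipLevel(NdotH, HdotV, roughness, sampleCount = 1024, resolution = 512) {
  if (roughness === 0) return 0; // mirror-like: always read the base level
  const D = distributionGGX(NdotH, roughness);
  // pdf of the reflected direction when H is drawn from D (+ epsilon as in the shader)
  const pdf = (D * NdotH) / (4 * HdotV) + 0.0001;
  // solid angle of one texel of the source cubemap (6 faces cover 4*PI sr)
  const saTexel = (4 * Math.PI) / (6 * resolution * resolution);
  // solid angle represented by one sample
  const saSample = 1 / (sampleCount * pdf + 0.0001);
  // each mip level has 4x fewer texels, hence 0.5 * log2 (= log base 4)
  return 0.5 * Math.log2(saSample / saTexel);
}

console.log(prefilterMipLevel(1, 1, 0.5));
console.log(prefilterMipLevel(1, 1, 1.0));
```

Rougher lobes produce sparser samples, so each sample reads from a blurrier source level. With roughness 1 and n · h = v · h = 1, the level comes out a little above 5.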

export var vs_brdf =

`#version 300 es

precision mediump float;

layout (location = 0) in vec3 aPos;

//D3Q: location 1 introduced because of code in renderQuad()

layout (location = 1) in vec3 aColor;

layout (location = 2) in vec2 aTexCoords;

out vec2 TexCoords;

void main()

{

TexCoords = aTexCoords;

gl_Position = vec4(aPos, 1.0);

}`

export var fs_brdf =

`#version 300 es

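precision highp float;

// RadicalInverse_VdC needs full 32-bit integer math; mediump int may be only 16 bits on mobile GPUs

precision highp int;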

out vec2 FragColor;

in vec2 TexCoords;

const float PI = 3.14159265359;

// ----------------------------------------------------------------------------

// http://holger.dammertz.org/stuff/notes_HammersleyOnHemisphere.html

// efficient VanDerCorpus calculation.

float RadicalInverse_VdC(uint bits)

{

bits = (bits << 16u) | (bits >> 16u);

bits = ((bits & 0x55555555u) << 1u) | ((bits & 0xAAAAAAAAu) >> 1u);

bits = ((bits & 0x33333333u) << 2u) | ((bits & 0xCCCCCCCCu) >> 2u);

bits = ((bits & 0x0F0F0F0Fu) << 4u) | ((bits & 0xF0F0F0F0u) >> 4u);

bits = ((bits & 0x00FF00FFu) << 8u) | ((bits & 0xFF00FF00u) >> 8u);

return float(bits) * 2.3283064365386963e-10; // / 0x100000000

}

// ----------------------------------------------------------------------------

vec2 Hammersley(uint i, uint N)

{

return vec2(float(i)/float(N), RadicalInverse_VdC(i));

}

// ----------------------------------------------------------------------------

vec3 ImportanceSampleGGX(vec2 Xi, vec3 N, float roughness)

{

float a = roughness*roughness;

float phi = 2.0 * PI * Xi.x;

float cosTheta = sqrt((1.0 - Xi.y) / (1.0 + (a*a - 1.0) * Xi.y));

float sinTheta = sqrt(1.0 - cosTheta*cosTheta);

// from spherical coordinates to cartesian coordinates - halfway vector

vec3 H;

H.x = cos(phi) * sinTheta;

H.y = sin(phi) * sinTheta;

H.z = cosTheta;

// from tangent-space H vector to world-space sample vector

vec3 up = abs(N.z) < 0.999 ? vec3(0.0, 0.0, 1.0) : vec3(1.0, 0.0, 0.0);

vec3 tangent = normalize(cross(up, N));

vec3 bitangent = cross(N, tangent);

vec3 sampleVec = tangent * H.x + bitangent * H.y + N * H.z;

return normalize(sampleVec);

}

// ----------------------------------------------------------------------------

float GeometrySchlickGGX(float NdotV, float roughness)

{

// note that we use a different k for IBL

float a = roughness;

float k = (a * a) / 2.0;

float nom = NdotV;

float denom = NdotV * (1.0 - k) + k;

return nom / denom;

}

// ----------------------------------------------------------------------------

float GeometrySmith(vec3 N, vec3 V, vec3 L, float roughness)

{

float NdotV = max(dot(N, V), 0.0);

float NdotL = max(dot(N, L), 0.0);

float ggx2 = GeometrySchlickGGX(NdotV, roughness);

float ggx1 = GeometrySchlickGGX(NdotL, roughness);

return ggx1 * ggx2;

}

// ----------------------------------------------------------------------------

vec2 IntegrateBRDF(float NdotV, float roughness)

{

vec3 V;

V.x = sqrt(1.0 - NdotV*NdotV);

V.y = 0.0;

V.z = NdotV;

float A = 0.0;

float B = 0.0;

vec3 N = vec3(0.0, 0.0, 1.0);

const uint SAMPLE_COUNT = 1024u;

for(uint i = 0u; i < SAMPLE_COUNT; ++i)

{

// generates a sample vector that's biased towards the

// preferred alignment direction (importance sampling).

vec2 Xi = Hammersley(i, SAMPLE_COUNT);

vec3 H = ImportanceSampleGGX(Xi, N, roughness);

vec3 L = normalize(2.0 * dot(V, H) * H - V);

float NdotL = max(L.z, 0.0);

float NdotH = max(H.z, 0.0);

float VdotH = max(dot(V, H), 0.0);

if(NdotL > 0.0)

{

float G = GeometrySmith(N, V, L, roughness);

float G_Vis = (G * VdotH) / (NdotH * NdotV);

float Fc = pow(1.0 - VdotH, 5.0);

A += (1.0 - Fc) * G_Vis;

B += Fc * G_Vis;

}

}

A /= float(SAMPLE_COUNT);

B /= float(SAMPLE_COUNT);

return vec2(A, B);

}

// ----------------------------------------------------------------------------

void main()

{

vec2 integratedBRDF = IntegrateBRDF(TexCoords.x, TexCoords.y);

FragColor = integratedBRDF;

}`
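To inspect individual LUT texels without a GPU, IntegrateBRDF() can be ported to JavaScript. The sketch below follows the shader step by step. It places the tangent-space half vector exactly where the shader's TBN puts it for N = +Z, so the results should track the GPU values up to floating-point differences. The function names belong to the sketch, not to any library.

```javascript
// CPU port of IntegrateBRDF() from fs_brdf. Vectors are plain [x, y, z] arrays; N = +Z.
function radicalInverseVdC(bits) {
  bits = ((bits << 16) | (bits >>> 16)) >>> 0;
  bits = (((bits & 0x55555555) << 1) | ((bits & 0xaaaaaaaa) >>> 1)) >>> 0;
  bits = (((bits & 0x33333333) << 2) | ((bits & 0xcccccccc) >>> 2)) >>> 0;
  bits = (((bits & 0x0f0f0f0f) << 4) | ((bits & 0xf0f0f0f0) >>> 4)) >>> 0;
  bits = (((bits & 0x00ff00ff) << 8) | ((bits & 0xff00ff00) >>> 8)) >>> 0;
  return bits * 2.3283064365386963e-10; // divide by 2^32
}

// GGX importance sample of the half vector. For N = +Z the shader's TBN maps
// tangent-space (x, y, z) to (y, -x, z); that mapping is applied directly here.
function sampleGGXHalfVector(u1, u2, roughness) {
  const a = roughness * roughness;
  const phi = 2 * Math.PI * u1;
  const cosTheta = Math.sqrt((1 - u2) / (1 + (a * a - 1) * u2));
  const sinTheta = Math.sqrt(1 - cosTheta * cosTheta);
  return [Math.sin(phi) * sinTheta, -Math.cos(phi) * sinTheta, cosTheta];
}

// Schlick-GGX with the IBL remapping k = roughness^2 / 2, as in fs_brdf.
function geometrySchlickGGX(NdotX, roughness) {
  const k = (roughness * roughness) / 2;
  return NdotX / (NdotX * (1 - k) + k);
}

// Returns [A, B]: the scale applied to F0 and the bias added to it.
function integrateBRDF(NdotV, roughness, sampleCount = 1024) {
  const V = [Math.sqrt(1 - NdotV * NdotV), 0, NdotV];
  let A = 0;
  let B = 0;
  for (let i = 0; i < sampleCount; ++i) {
    // Hammersley point (i / N, radical inverse of i)
    const H = sampleGGXHalfVector(i / sampleCount, radicalInverseVdC(i), roughness);
    const VdotH = V[0] * H[0] + V[1] * H[1] + V[2] * H[2];
    // reflect V about H: L = 2 (V.H) H - V
    const L = H.map((h, k) => 2 * VdotH * h - V[k]);
    const NdotL = Math.max(L[2], 0);
    const NdotH = Math.max(H[2], 0);
    if (NdotL > 0) {
      const G = geometrySchlickGGX(NdotV, roughness) * geometrySchlickGGX(NdotL, roughness);
      const vdh = Math.max(VdotH, 0);
      const GVis = (G * vdh) / (NdotH * NdotV);
      const Fc = Math.pow(1 - vdh, 5);
      A += (1 - Fc) * GVis;
      B += Fc * GVis;
    }
  }
  return [A / sampleCount, B / sampleCount];
}

console.log(integrateBRDF(0.5, 0.5)); // [scale, bias] for one LUT texel
```

The mirror, head-on case integrateBRDF(1.0, 0.0) returns [1, 0]. With zero roughness, every sample reflects straight back and the Fresnel weight Fc vanishes.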

export var vs_pbr =

`#version 300 es

precision mediump float;

layout (location = 0) in vec3 aPos;

layout (location = 1) in vec2 aTexCoords;

layout (location = 2) in vec3 aNormal;

out vec2 TexCoords;

out vec3 WorldPos;

out vec3 Normal;

uniform mat4 projection;

uniform mat4 view;

uniform mat4 model;

void main()

{

TexCoords = aTexCoords;

WorldPos = vec3(model * vec4(aPos, 1.0));

Normal = mat3(model) * aNormal;

gl_Position = projection * view * vec4(WorldPos, 1.0);

}`

export var fs_pbr =

`#version 300 es

precision mediump float;

out vec4 FragColor;

in vec2 TexCoords;

in vec3 WorldPos;

in vec3 Normal;

// material parameters

uniform vec3 albedo;

uniform float metallic;

uniform float roughness;

uniform float ao;

// IBL

uniform samplerCube irradianceMap;

// lights

uniform vec3 lightPositions[4];

uniform vec3 lightColors[4];

uniform vec3 camPos;

const float PI = 3.14159265359;

// ----------------------------------------------------------------------------

float DistributionGGX(vec3 N, vec3 H, float roughness)

{

float a = roughness*roughness;

float a2 = a*a;

float NdotH = max(dot(N, H), 0.0);

float NdotH2 = NdotH*NdotH;

float nom = a2;

float denom = (NdotH2 * (a2 - 1.0) + 1.0);

denom = PI * denom * denom;

return nom / denom;

}

// ----------------------------------------------------------------------------

float GeometrySchlickGGX(float NdotV, float roughness)

{

float r = (roughness + 1.0);

float k = (r*r) / 8.0;

float nom = NdotV;

float denom = NdotV * (1.0 - k) + k;

return nom / denom;

}

// ----------------------------------------------------------------------------

float GeometrySmith(vec3 N, vec3 V, vec3 L, float roughness)

{

float NdotV = max(dot(N, V), 0.0);

float NdotL = max(dot(N, L), 0.0);

float ggx2 = GeometrySchlickGGX(NdotV, roughness);

float ggx1 = GeometrySchlickGGX(NdotL, roughness);

return ggx1 * ggx2;

}

// ----------------------------------------------------------------------------

vec3 fresnelSchlick(float cosTheta, vec3 F0)

{

return F0 + (1.0 - F0) * pow(1.0 - cosTheta, 5.0);

}

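// ----------------------------------------------------------------------------

// Fresnel with a roughness term so rough surfaces don't get overly bright rims under IBL

vec3 fresnelSchlickRoughness(float cosTheta, vec3 F0, float roughness)

{

return F0 + (max(vec3(1.0 - roughness), F0) - F0) * pow(clamp(1.0 - cosTheta, 0.0, 1.0), 5.0);

}

// ----------------------------------------------------------------------------

// specular IBL inputs (the JavaScript binds them to texture units 1 and 2)

uniform samplerCube prefilterMap;

uniform sampler2D brdfLUT;

// ----------------------------------------------------------------------------

void main()

{

// interpolated normals are no longer unit length, so renormalize

vec3 N = normalize(Normal);

vec3 V = normalize(camPos - WorldPos);

vec3 R = reflect(-V, N);

// calculate reflectance at normal incidence; if dielectric (like plastic) use F0

// of 0.04 and if it's a metal, use the albedo color as F0 (metallic workflow)

vec3 F0 = vec3(0.04);

F0 = mix(F0, albedo, metallic);

// reflectance equation

vec3 Lo = vec3(0.0);

for(int i = 0; i < 4; ++i)

{

// calculate per-light radiance

vec3 L = normalize(lightPositions[i] - WorldPos);

vec3 H = normalize(V + L);

float distance = length(lightPositions[i] - WorldPos);

float attenuation = 1.0 / (distance * distance);

vec3 radiance = lightColors[i] * attenuation;

// Cook-Torrance BRDF

float NDF = DistributionGGX(N, H, roughness);

float G = GeometrySmith(N, V, L, roughness);

vec3 F = fresnelSchlick(max(dot(H, V), 0.0), F0);

vec3 numerator = NDF * G * F;

float denominator = 4.0 * max(dot(N, V), 0.0) * max(dot(N, L), 0.0) + 0.001; // 0.001 to prevent divide by zero.

vec3 specular = numerator / denominator;

// kS is equal to Fresnel

vec3 kS = F;

// for energy conservation, the diffuse and specular light can't

// be above 1.0 (unless the surface emits light); to preserve this

// relationship the diffuse component (kD) should equal 1.0 - kS.

vec3 kD = vec3(1.0) - kS;

// multiply kD by the inverse metalness such that only non-metals

// have diffuse lighting, or a linear blend if partly metal (pure metals

// have no diffuse light).

kD *= 1.0 - metallic;

// scale light by NdotL

float NdotL = max(dot(N, L), 0.0);

// add to outgoing radiance Lo

Lo += (kD * albedo / PI + specular) * radiance * NdotL; // note that we already multiplied the BRDF by the Fresnel (kS) so we won't multiply by kS again

}

// ambient lighting (we now use IBL as the ambient term)

float NdotV = max(dot(N, V), 0.0);

vec3 F = fresnelSchlickRoughness(NdotV, F0, roughness);

vec3 kS = F;

vec3 kD = 1.0 - kS;

kD *= 1.0 - metallic;

vec3 irradiance = texture(irradianceMap, N).rgb;

vec3 diffuse = irradiance * albedo;

// split-sum specular: pre-filtered radiance at this roughness times (F0 * A + B) from the BRDF LUT

const float MAX_REFLECTION_LOD = 4.0; // maxMipLevels - 1 in toCubemap()

vec3 prefilteredColor = textureLod(prefilterMap, R, roughness * MAX_REFLECTION_LOD).rgb;

vec2 brdf = texture(brdfLUT, vec2(NdotV, roughness)).rg;

vec3 specular = prefilteredColor * (F0 * brdf.x + brdf.y);

vec3 ambient = (kD * diffuse + specular) * ao;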

// vec3 ambient = vec3(0.002);

vec3 color = ambient + Lo;

// HDR tonemapping

color = color / (color + vec3(1.0));

// gamma correct

color = pow(color, vec3(1.0/2.2));

FragColor = vec4(color , 1.0);

}`
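The metallic-workflow F0 and Schlick's Fresnel term used in fs_pbr are shown below as a small JavaScript sketch. Colors are [r, g, b] arrays, and the helper names are illustrative.

```javascript
// Sketch of the metallic-workflow F0 and Schlick's Fresnel approximation from fs_pbr.
// GLSL mix(a, b, t) = a * (1 - t) + b * t, applied per component.
function mix(a, b, t) {
  return a.map((x, i) => x * (1 - t) + b[i] * t);
}

function baseReflectivity(albedo, metallic) {
  // dielectrics reflect about 4% at normal incidence; metals use their albedo as F0
  return mix([0.04, 0.04, 0.04], albedo, metallic);
}

function fresnelSchlick(cosTheta, F0) {
  const f = Math.pow(1 - cosTheta, 5);
  return F0.map((f0) => f0 + (1 - f0) * f);
}

const F0 = baseReflectivity([0.5, 0.0, 0.0], 0.0); // albedo uploaded in main(), non-metal
console.log(fresnelSchlick(1.0, F0)); // head-on: equals F0
console.log(fresnelSchlick(0.0, F0)); // grazing: approaches 1
```

For the red albedo (0.5, 0.0, 0.0) that main() uploads, F0 stays at 0.04 for a dielectric (metallic = 0). At metallic = 1, F0 becomes the albedo itself.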

const sizeFloat = 4;

const whCube = 512;

const SCR_WIDTH = 1280;

const SCR_HEIGHT = 720;

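const ext = gl.getExtension("EXT_color_buffer_float");

// rendering into the RGBA16F / RG16F targets below requires this extension

if (!ext) console.warn("EXT_color_buffer_float not supported: the float framebuffers below will not be complete");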

let showCubeMap = true;

let spacePressed = false;

let pbrShader = null;

let equirectangularToCubemapShader = null;

let irradianceShader = null;

let prefilterShader = null;

let brdfShader = null;

let backgroundShader = null;

let projection = mat4.create(), view = mat4.create();

let model = mat4.create();

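// initialized here (not only in render()) because toCubemap() uploads them before the first frame

let lightPositions = new Float32Array([

-10.0, 10.0, 10.0,

10.0, 10.0, 10.0,

-10.0, -10.0, 10.0,

10.0, -10.0, 10.0

]);

let lightColors = new Float32Array([

300.0, 300.0, 300.0,

300.0, 300.0, 300.0,

300.0, 300.0, 300.0,

300.0, 300.0, 300.0

]);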

const nrRows = 7;

const nrColumns = 7;

const spacing = 2.5;

let cubeVAO = null;

let quadVAO = null;

let envCubemap = null;

let sphereVAO = null;

let sphere;

let captureFBO = null;

let irradianceMap = null;

let prefilterMap = null;

let brdfLUTTexture = null;

let deltaTime = 0.0;

let lastFrame = 0.0;

let main = function () {

gl.enable(gl.DEPTH_TEST);

gl.depthFunc(gl.LEQUAL);

pbrShader = new Shader(gl, vs_pbr, fs_pbr);

equirectangularToCubemapShader = new Shader(gl, vs_cubemap, fs_equirectangularToCubemap);

irradianceShader = new Shader(gl, vs_cubemap, fs_irradianceConvolution);

prefilterShader = new Shader(gl, vs_cubemap, fs_prefilter);

brdfShader = new Shader(gl, vs_brdf, fs_brdf);

backgroundShader = new Shader(gl, vs_background, fs_background);

pbrShader.use(gl);

pbrShader.setInt(gl, "irradianceMap", 0);

pbrShader.setInt(gl, "prefilterMap", 1);

pbrShader.setInt(gl, "brdfLUT", 2);

gl.uniform3f(gl.getUniformLocation(pbrShader.programId, "albedo"), 0.5, 0.0, 0.0);

pbrShader.setFloat(gl, "ao", 1.0);

backgroundShader.use(gl);

backgroundShader.setInt(gl, "environmentMap", 0);

loadHDR("../../textures/hdr/newport_loft.hdr", toCubemap);

}();

function animate() {

render();

requestAnimationFrame(animate);

}

function render() {

let currentFrame = performance.now();

deltaTime = currentFrame - lastFrame;

lastFrame = currentFrame;

processInput();

gl.clearColor(0.1, 0.1, 0.1, 1.0);

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

pbrShader.use(gl);

let camera = new Camera(vec3.fromValues(0.0, 0.0, 3.0), vec3.fromValues(0.0, 1.0, 0.0));

view = camera.GetViewMatrix();

gl.uniformMatrix4fv(gl.getUniformLocation(pbrShader.programId, "view"), false, view);

gl.uniform3fv(gl.getUniformLocation(pbrShader.programId, "camPos"), new Float32Array(camera.Position));

gl.activeTexture(gl.TEXTURE0);

gl.bindTexture(gl.TEXTURE_CUBE_MAP, irradianceMap);

gl.activeTexture(gl.TEXTURE1);

gl.bindTexture(gl.TEXTURE_CUBE_MAP, prefilterMap);

gl.activeTexture(gl.TEXTURE2);

gl.bindTexture(gl.TEXTURE_2D, brdfLUTTexture);

mat4.identity(model);

for (let row = 0; row < nrRows; ++row) {

pbrShader.setFloat(gl, "metallic", row / nrRows);

for (let col = 0; col < nrColumns; ++col) {

pbrShader.setFloat(gl, "roughness", Math.min(Math.max(col / nrColumns, 0.025), 1.0));

mat4.identity(model);

mat4.translate(model, model, vec3.fromValues((col - (nrColumns / 2)) * spacing, (row - (nrRows / 2)) * spacing, 0.0));

gl.uniformMatrix4fv(gl.getUniformLocation(pbrShader.programId, "model"), false, model);

renderSphere2();

}

}

lightPositions = new Float32Array([

-10.0, 10.0, 10.0,

10.0, 10.0, 10.0,

-10.0, -10.0, 10.0,

10.0, -10.0, 10.0

]);

lightColors = new Float32Array([

300.0, 300.0, 300.0,

300.0, 300.0, 300.0,

300.0, 300.0, 300.0,

300.0, 300.0, 300.0

]);

for (let i = 0; i < lightPositions.length / 3; ++i) {

let newPos = vec3.fromValues(lightPositions[3 * i], lightPositions[3 * i + 1], lightPositions[3 * i + 2]);

pbrShader.setFloat(gl, "metallic", 1.0);

pbrShader.setFloat(gl, "roughness", 1.0);

mat4.identity(model);

mat4.translate(model, model, newPos);

mat4.scale(model, model, vec3.fromValues(0.5, 0.5, 0.5));

gl.uniformMatrix4fv(gl.getUniformLocation(pbrShader.programId, "model"), false, model);

renderSphere2();

}

backgroundShader.use(gl);

gl.uniformMatrix4fv(gl.getUniformLocation(backgroundShader.programId, "view"), false, view);

gl.activeTexture(gl.TEXTURE0);

if (showCubeMap)

gl.bindTexture(gl.TEXTURE_CUBE_MAP, envCubemap);

else

gl.bindTexture(gl.TEXTURE_CUBE_MAP, irradianceMap);

renderCube();

}

function renderQuad() {

if (!quadVAO) {

let vertices = new Float32Array([

-1.0, -1.0, 1.0, 0.0, 0.0, -1.0, 0.0, 0.0,

1.0, 1.0, 1.0, 0.0, 0.0, -1.0, 1.0, 1.0,

1.0, -1.0, 1.0, 0.0, 0.0, -1.0, 1.0, 0.0,

1.0, 1.0, 1.0, 0.0, 0.0, -1.0, 1.0, 1.0,

-1.0, -1.0, 1.0, 0.0, 0.0, -1.0, 0.0, 0.0,

-1.0, 1.0, 1.0, 0.0, 0.0, -1.0, 0.0, 1.0,

]);

quadVAO = gl.createVertexArray();

let quadVBO = gl.createBuffer();

gl.bindBuffer(gl.ARRAY_BUFFER, quadVBO);

gl.bufferData(gl.ARRAY_BUFFER, vertices, gl.STATIC_DRAW);

gl.bindVertexArray(quadVAO);

gl.enableVertexAttribArray(0);

gl.vertexAttribPointer(0, 3, gl.FLOAT, false, 8 * sizeFloat, 0);

gl.enableVertexAttribArray(1);

gl.vertexAttribPointer(1, 3, gl.FLOAT, false, 8 * sizeFloat, (3 * sizeFloat));

gl.enableVertexAttribArray(2);

gl.vertexAttribPointer(2, 2, gl.FLOAT, false, 8 * sizeFloat, (6 * sizeFloat));

gl.bindBuffer(gl.ARRAY_BUFFER, null);

gl.bindVertexArray(null);

}

gl.bindVertexArray(quadVAO);

gl.drawArrays(gl.TRIANGLES, 0, 6);

gl.bindVertexArray(null);

}

function renderSphere2() {

if (sphereVAO == null) {

sphere = new Sphere2(14, 14)

sphereVAO = gl.createVertexArray()

let vbo = gl.createBuffer()

let ebo = gl.createBuffer()

gl.bindVertexArray(sphereVAO)

gl.bindBuffer(gl.ARRAY_BUFFER, vbo)

gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(sphere.vertices), gl.STATIC_DRAW)

gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, ebo)

gl.bufferData(gl.ELEMENT_ARRAY_BUFFER, new Uint16Array(sphere.indices), gl.STATIC_DRAW)

let stride = (3 + 2 + 3) * sizeFloat

gl.enableVertexAttribArray(0)

gl.vertexAttribPointer(0, 3, gl.FLOAT, false, stride, 0)

gl.enableVertexAttribArray(1)

gl.vertexAttribPointer(1, 2, gl.FLOAT, false, stride, (6 * sizeFloat))

gl.enableVertexAttribArray(2)

gl.vertexAttribPointer(2, 3, gl.FLOAT, false, stride, (3 * sizeFloat))

}

gl.bindVertexArray(sphereVAO)

gl.drawElements(gl.TRIANGLE_STRIP, sphere.indices.length, gl.UNSIGNED_SHORT, 0)

}

function loadHDR(url, completion) {

var req = Object.assign(new XMLHttpRequest(), { responseType: "arraybuffer" });

req.onerror = completion.bind(req, false);

req.onload = function () {

if (this.status >= 400)

return this.onerror();

var header = '', pos = 0, d8 = new Uint8Array(this.response), format;

while (!header.match(/\n\n[^\n]+\n/g))

header += String.fromCharCode(d8[pos++]);

format = header.match(/FORMAT=(.*)$/m)[1];

if (format != '32-bit_rle_rgbe')

return console.warn('unknown format : ' + format), this.onerror();

var rez = header.split(/\n/).reverse()[1].split(' '), width = +rez[3] * 1, height = +rez[1] * 1;

var img = new Uint8Array(width * height * 4), ipos = 0;

for (var j = 0; j < height; j++) {

var rgbe = d8.slice(pos, pos += 4), scanline = [];

if (rgbe[0] != 2 || (rgbe[1] != 2) || (rgbe[2] & 0x80)) {

var len = width, rs = 0;

pos -= 4;

while (len > 0) {

img.set(d8.slice(pos, pos += 4), ipos);

if (img[ipos] == 1 && img[ipos + 1] == 1 && img[ipos + 2] == 1) {

for (var i = img[ipos + 3] << rs; i > 0; i--) {

img.set(img.slice(ipos - 4, ipos), ipos);

ipos += 4;

len--;

}

rs += 8;

}

else {

len--;

ipos += 4;

rs = 0;

}

}

}

else {

if ((rgbe[2] << 8) + rgbe[3] != width)

return console.warn('HDR line mismatch ..'), this.onerror();

for (var i = 0; i < 4; i++) {

var ptr = i * width, ptr_end = (i + 1) * width, buf, count;

while (ptr < ptr_end) {

buf = d8.slice(pos, pos += 2);

if (buf[0] > 128) {

count = buf[0] - 128;

while (count-- > 0)

scanline[ptr++] = buf[1];

}

else {

count = buf[0] - 1;

scanline[ptr++] = buf[1];

while (count-- > 0)

scanline[ptr++] = d8[pos++];

}

}

}

for (var i = 0; i < width; i++) {

img[ipos++] = scanline[i];

img[ipos++] = scanline[i + width];

img[ipos++] = scanline[i + 2 * width];

img[ipos++] = scanline[i + 3 * width];

}

}

}

completion && completion(img, width, height);

};

req.open("GET", url, true);

req.send(null);

return req;

}

- Once the HDR file has loaded, the callback generates the background cubemap and the other precomputed maps

function toCubemap(data, width, height) {

captureFBO = gl.createFramebuffer();

let captureRBO = gl.createRenderbuffer();

gl.bindFramebuffer(gl.FRAMEBUFFER, captureFBO);

gl.bindRenderbuffer(gl.RENDERBUFFER, captureRBO);

gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH_COMPONENT24, whCube, whCube);

gl.framebufferRenderbuffer(gl.FRAMEBUFFER, gl.DEPTH_ATTACHMENT, gl.RENDERBUFFER, captureRBO);

envCubemap = gl.createTexture();

gl.bindTexture(gl.TEXTURE_CUBE_MAP, envCubemap);

for (let i = 0; i < 6; ++i) {

gl.texImage2D(gl.TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, gl.RGBA16F, whCube, whCube, 0, gl.RGBA, gl.FLOAT, new Float32Array(whCube * whCube * 4));

}

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_R, gl.CLAMP_TO_EDGE);

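// trilinear filtering so the textureLod() lookups in fs_prefilter can use the mip chain generated later

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_MIN_FILTER, gl.LINEAR_MIPMAP_LINEAR);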

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

let captureProjection = mat4.create();

mat4.perspective(captureProjection, (90.0) * Math.PI / 180, 1.0, 0.1, 10.0);

equirectangularToCubemapShader.use(gl);

equirectangularToCubemapShader.setInt(gl, "equirectangularMap", 0);

gl.uniformMatrix4fv(gl.getUniformLocation(equirectangularToCubemapShader.programId, "projection"), false, captureProjection);

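// loadHDR() delivers raw RGBE bytes: decode them to linear floats (value = mantissa * 2^(e - 136))

// and flip the rows so the top of the panorama ends up at v = 1

let hdrFloats = new Float32Array(width * height * 3);

for (let y = 0; y < height; ++y) {

for (let x = 0; x < width; ++x) {

let src = 4 * (y * width + x), dst = 3 * ((height - 1 - y) * width + x);

let e = data[src + 3];

let scale = e === 0 ? 0.0 : Math.pow(2, e - 136);

hdrFloats[dst] = data[src] * scale;

hdrFloats[dst + 1] = data[src + 1] * scale;

hdrFloats[dst + 2] = data[src + 2] * scale;

}

}

let hdrTexture = gl.createTexture();

gl.activeTexture(gl.TEXTURE0);

gl.bindTexture(gl.TEXTURE_2D, hdrTexture);

gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGB16F, width, height, 0, gl.RGB, gl.FLOAT, hdrFloats);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);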

gl.viewport(0, 0, whCube, whCube);

gl.bindFramebuffer(gl.FRAMEBUFFER, captureFBO);

let error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(equirect) status error= " + error);

let captureViews = [

mat4.lookAt(mat4.create(), vec3.fromValues(0.0, 0.0, 0.0), vec3.fromValues(1.0, 0.0, 0.0), vec3.fromValues(0.0, -1.0, 0.0)),

mat4.lookAt(mat4.create(), vec3.fromValues(0.0, 0.0, 0.0), vec3.fromValues(-1.0, 0.0, 0.0), vec3.fromValues(0.0, -1.0, 0.0)),

mat4.lookAt(mat4.create(), vec3.fromValues(0.0, 0.0, 0.0), vec3.fromValues(0.0, 1.0, 0.0), vec3.fromValues(0.0, 0.0, 1.0)),

mat4.lookAt(mat4.create(), vec3.fromValues(0.0, 0.0, 0.0), vec3.fromValues(0.0, -1.0, 0.0), vec3.fromValues(0.0, 0.0, -1.0)),

mat4.lookAt(mat4.create(), vec3.fromValues(0.0, 0.0, 0.0), vec3.fromValues(0.0, 0.0, 1.0), vec3.fromValues(0.0, -1.0, 0.0)),

mat4.lookAt(mat4.create(), vec3.fromValues(0.0, 0.0, 0.0), vec3.fromValues(0.0, 0.0, -1.0), vec3.fromValues(0.0, -1.0, 0.0))

];

for (let i = 0; i < 6; ++i) {

gl.uniformMatrix4fv(gl.getUniformLocation(equirectangularToCubemapShader.programId, "view"), false, captureViews[i]);

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_CUBE_MAP_POSITIVE_X + i, envCubemap, 0);

let error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(equirect-texture) status error= " + error);

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

renderCube();

}

- Generate the mipmap chain for the environment cubemap

- Convolve the environment cubemap into the diffuse irradiance map

gl.bindFramebuffer(gl.FRAMEBUFFER, null);

gl.bindTexture(gl.TEXTURE_CUBE_MAP, envCubemap);

gl.generateMipmap(gl.TEXTURE_CUBE_MAP);

const whIrradiance = 32;

irradianceMap = gl.createTexture();

gl.bindTexture(gl.TEXTURE_CUBE_MAP, irradianceMap);

for (let i = 0; i < 6; ++i) {

gl.texImage2D(gl.TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, gl.RGBA16F, whIrradiance, whIrradiance, 0, gl.RGBA, gl.FLOAT, new Float32Array(whIrradiance * whIrradiance * 4));

}

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_R, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

gl.bindFramebuffer(gl.FRAMEBUFFER, captureFBO);

gl.bindRenderbuffer(gl.RENDERBUFFER, captureRBO);

gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH_COMPONENT24, whIrradiance, whIrradiance);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(irradiance-depth) status error= " + error);

irradianceShader.use(gl);

irradianceShader.setInt(gl, "environmentMap", 0);

gl.uniformMatrix4fv(gl.getUniformLocation(irradianceShader.programId, "projection"), false, captureProjection);

gl.activeTexture(gl.TEXTURE0);

gl.bindTexture(gl.TEXTURE_CUBE_MAP, envCubemap);

gl.viewport(0, 0, whIrradiance, whIrradiance);

gl.bindFramebuffer(gl.FRAMEBUFFER, captureFBO);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(irradiance) status error= " + error);

for (let i = 0; i < 6; ++i) {

gl.uniformMatrix4fv(gl.getUniformLocation(irradianceShader.programId, "view"), false, captureViews[i]);

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_CUBE_MAP_POSITIVE_X + i, irradianceMap, 0);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(irradiance-texture) status error= " + error);

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

renderCube();

}

gl.bindFramebuffer(gl.FRAMEBUFFER, null);

const whPre = 128;

prefilterMap = gl.createTexture();

gl.bindTexture(gl.TEXTURE_CUBE_MAP, prefilterMap);

for (let i = 0; i < 6; ++i) {

gl.texImage2D(gl.TEXTURE_CUBE_MAP_POSITIVE_X + i, 0, gl.RGBA16F, whPre, whPre, 0, gl.RGBA, gl.FLOAT, new Float32Array(whPre * whPre * 4)); // 8-bit RGB would clamp HDR radiance to [0, 1]

}

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_WRAP_R, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_MIN_FILTER, gl.LINEAR_MIPMAP_LINEAR);

gl.texParameteri(gl.TEXTURE_CUBE_MAP, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

gl.generateMipmap(gl.TEXTURE_CUBE_MAP);

prefilterShader.use(gl);

prefilterShader.setInt(gl, "environmentMap", 0);

gl.uniformMatrix4fv(gl.getUniformLocation(prefilterShader.programId, "projection"), false, captureProjection);

gl.activeTexture(gl.TEXTURE0);

gl.bindTexture(gl.TEXTURE_CUBE_MAP, envCubemap);

gl.bindFramebuffer(gl.FRAMEBUFFER, captureFBO);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(prefilter) status error= " + error);

const maxMipLevels = 5;

for (let mip = 0; mip < maxMipLevels; ++mip) {

let mipWidth = 128 * Math.pow(0.5, mip);

let mipHeight = 128 * Math.pow(0.5, mip);

gl.bindRenderbuffer(gl.RENDERBUFFER, captureRBO);

gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH_COMPONENT24, mipWidth, mipHeight);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(prefilter-depth) status error= " + error);

gl.viewport(0, 0, mipWidth, mipHeight);

let roughness = mip / (maxMipLevels - 1);

prefilterShader.setFloat(gl, "roughness", roughness);

for (let i = 0; i < 6; ++i) {

gl.uniformMatrix4fv(gl.getUniformLocation(prefilterShader.programId, "view"), false, captureViews[i]);

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_CUBE_MAP_POSITIVE_X + i, prefilterMap, mip);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(prefilter-texture) status error= " + error);

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

renderCube();

}

}

gl.bindFramebuffer(gl.FRAMEBUFFER, null);

brdfLUTTexture = gl.createTexture();

gl.bindTexture(gl.TEXTURE_2D, brdfLUTTexture);

gl.texImage2D(gl.TEXTURE_2D, 0, gl.RG16F, whCube, whCube, 0, gl.RG, gl.FLOAT, new Float32Array(whCube * whCube * 2), 0);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

gl.bindFramebuffer(gl.FRAMEBUFFER, captureFBO);

gl.bindRenderbuffer(gl.RENDERBUFFER, captureRBO);

gl.renderbufferStorage(gl.RENDERBUFFER, gl.DEPTH_COMPONENT24, whCube, whCube);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(brdf-depth) status error= " + error);

gl.framebufferTexture2D(gl.FRAMEBUFFER, gl.COLOR_ATTACHMENT0, gl.TEXTURE_2D, brdfLUTTexture, 0);

error = gl.checkFramebufferStatus(gl.FRAMEBUFFER);

if (error != gl.FRAMEBUFFER_COMPLETE)

console.log("framebuf(brdf-texture) status error= " + error);

gl.viewport(0, 0, whCube, whCube);

brdfShader.use(gl);

gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);

renderQuad();

gl.bindFramebuffer(gl.FRAMEBUFFER, null);

let projection = mat4.create();

mat4.perspective(projection, (camera.Zoom) * Math.PI / 180, SCR_WIDTH / SCR_HEIGHT, 0.1, 100.0);

pbrShader.use(gl);

gl.uniformMatrix4fv(gl.getUniformLocation(pbrShader.programId, "projection"), false, projection);

gl.uniform3fv(gl.getUniformLocation(pbrShader.programId, "lightPositions"), lightPositions);

gl.uniform3fv(gl.getUniformLocation(pbrShader.programId, "lightColors"), lightColors);

backgroundShader.use(gl);

gl.uniformMatrix4fv(gl.getUniformLocation(backgroundShader.programId, "projection"), false, projection);

gl.viewport(0, 0, canvas.width, canvas.height);

animate();

}
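The last pass above fills `brdfLUTTexture` by drawing a quad with `brdfShader`, whose source is not shown in this section. The CPU sketch below illustrates what such a shader typically computes for one texel. It assumes the common LearnOpenGL-style split-sum integration, so treat it as an assumption about `brdfShader` rather than its actual code. It returns the two values stored in the RG16F texture for one (N·V, roughness) pair. The function names are hypothetical.

```javascript
// Hedged CPU sketch of one split-sum BRDF LUT texel (LearnOpenGL-style, assumed).
// Returns [A, B] such that specular IBL ~= prefilteredColor * (F0 * A + B).

// Van der Corput radical inverse: reverses the 32 bits of i and maps them to [0, 1).
function radicalInverseVdC(bits) {
  bits = ((bits << 16) | (bits >>> 16)) >>> 0;
  bits = (((bits & 0x55555555) << 1) | ((bits & 0xaaaaaaaa) >>> 1)) >>> 0;
  bits = (((bits & 0x33333333) << 2) | ((bits & 0xcccccccc) >>> 2)) >>> 0;
  bits = (((bits & 0x0f0f0f0f) << 4) | ((bits & 0xf0f0f0f0) >>> 4)) >>> 0;
  bits = (((bits & 0x00ff00ff) << 8) | ((bits & 0xff00ff00) >>> 8)) >>> 0;
  return bits * 2.3283064365386963e-10; // divide by 2^32
}

// Schlick-GGX geometry term with the IBL remapping k = roughness^2 / 2.
function geometrySchlickGGX(NdotX, roughness) {
  const k = (roughness * roughness) / 2;
  return NdotX / (NdotX * (1 - k) + k);
}

function integrateBRDF(NdotV, roughness, sampleCount = 1024) {
  // N is fixed to +Z, so V lies in the XZ plane at the requested angle.
  const V = [Math.sqrt(1 - NdotV * NdotV), 0, NdotV];
  const a = roughness * roughness; // GGX alpha
  let A = 0;
  let B = 0;
  for (let i = 0; i < sampleCount; i++) {
    // Hammersley point (i / N, radicalInverse(i)) -> GGX-distributed half vector H.
    const phi = (2 * Math.PI * i) / sampleCount;
    const xi = radicalInverseVdC(i);
    const cosTheta = Math.sqrt((1 - xi) / (1 + (a * a - 1) * xi));
    const sinTheta = Math.sqrt(1 - cosTheta * cosTheta);
    const H = [Math.cos(phi) * sinTheta, Math.sin(phi) * sinTheta, cosTheta];

    // Reflect V about H to obtain the light direction L.
    const VdotH = V[0] * H[0] + V[1] * H[1] + V[2] * H[2];
    const L = [2 * VdotH * H[0] - V[0], 2 * VdotH * H[1] - V[1], 2 * VdotH * H[2] - V[2]];
    const NdotL = Math.max(L[2], 0);
    if (NdotL > 0) {
      const G = geometrySchlickGGX(NdotV, roughness) * geometrySchlickGGX(NdotL, roughness);
      const GVis = (G * Math.max(VdotH, 0)) / (H[2] * NdotV);
      const Fc = Math.pow(1 - Math.max(VdotH, 0), 5); // Schlick Fresnel weight
      A += (1 - Fc) * GVis; // scale applied to F0
      B += Fc * GVis;       // constant bias
    }
  }
  return [A / sampleCount, B / sampleCount];
}
```

Each LUT texel corresponds to one such call, with N·V along one texture axis and roughness along the other. N·V has to stay above 0, because the visibility term divides by it.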

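- `renderCube()` issues the draw call that, together with `framebufferTexture2D`, renders into each cube-map face to generate the maps.

- It is also used to draw the background (skybox).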

function renderCube() {

if (!cubeVAO) {

let vertices = new Float32Array([

-1.0, -1.0, -1.0, 0.0, 0.0, -1.0, 0.0, 0.0,

1.0, 1.0, -1.0, 0.0, 0.0, -1.0, 1.0, 1.0,

1.0, -1.0, -1.0, 0.0, 0.0, -1.0, 1.0, 0.0,

1.0, 1.0, -1.0, 0.0, 0.0, -1.0, 1.0, 1.0,

-1.0, -1.0, -1.0, 0.0, 0.0, -1.0, 0.0, 0.0,

-1.0, 1.0, -1.0, 0.0, 0.0, -1.0, 0.0, 1.0,

-1.0, -1.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0,

1.0, -1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0,

1.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 1.0,

1.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 1.0,

-1.0, 1.0, 1.0, 0.0, 0.0, 1.0, 0.0, 1.0,

-1.0, -1.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0,

-1.0, 1.0, 1.0, -1.0, 0.0, 0.0, 1.0, 0.0,

-1.0, 1.0, -1.0, -1.0, 0.0, 0.0, 1.0, 1.0,

-1.0, -1.0, -1.0, -1.0, 0.0, 0.0, 0.0, 1.0,

-1.0, -1.0, -1.0, -1.0, 0.0, 0.0, 0.0, 1.0,

-1.0, -1.0, 1.0, -1.0, 0.0, 0.0, 0.0, 0.0,

-1.0, 1.0, 1.0, -1.0, 0.0, 0.0, 1.0, 0.0,

1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 1.0, 0.0,

1.0, -1.0, -1.0, 1.0, 0.0, 0.0, 0.0, 1.0,

1.0, 1.0, -1.0, 1.0, 0.0, 0.0, 1.0, 1.0,

1.0, -1.0, -1.0, 1.0, 0.0, 0.0, 0.0, 1.0,

1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 1.0, 0.0,

1.0, -1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0,

-1.0, -1.0, -1.0, 0.0, -1.0, 0.0, 0.0, 1.0,

1.0, -1.0, -1.0, 0.0, -1.0, 0.0, 1.0, 1.0,

1.0, -1.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0,

1.0, -1.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0,

-1.0, -1.0, 1.0, 0.0, -1.0, 0.0, 0.0, 0.0,

-1.0, -1.0, -1.0, 0.0, -1.0, 0.0, 0.0, 1.0,

-1.0, 1.0, -1.0, 0.0, 1.0, 0.0, 0.0, 1.0,

1.0, 1.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0,

1.0, 1.0, -1.0, 0.0, 1.0, 0.0, 1.0, 1.0,

1.0, 1.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0,

-1.0, 1.0, -1.0, 0.0, 1.0, 0.0, 0.0, 1.0,

-1.0, 1.0, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0

]);

cubeVAO = gl.createVertexArray();

let cubeVBO = gl.createBuffer();

gl.bindBuffer(gl.ARRAY_BUFFER, cubeVBO);

gl.bufferData(gl.ARRAY_BUFFER, vertices, gl.STATIC_DRAW);

gl.bindVertexArray(cubeVAO);

gl.enableVertexAttribArray(0);

gl.vertexAttribPointer(0, 3, gl.FLOAT, false, 8 * sizeFloat, 0);

gl.enableVertexAttribArray(1);

gl.vertexAttribPointer(1, 3, gl.FLOAT, false, 8 * sizeFloat, (3 * sizeFloat));

gl.enableVertexAttribArray(2);

gl.vertexAttribPointer(2, 2, gl.FLOAT, false, 8 * sizeFloat, (6 * sizeFloat));

gl.bindBuffer(gl.ARRAY_BUFFER, null);

gl.bindVertexArray(null);

}

gl.bindVertexArray(cubeVAO);

gl.drawArrays(gl.TRIANGLES, 0, 36);

gl.bindVertexArray(null);

}
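`captureViews`, used in the per-face loop above together with `gl.TEXTURE_CUBE_MAP_POSITIVE_X + i`, is defined outside this section. The following is a minimal sketch of how the six view matrices could be built. It assumes the usual face order (+X, -X, +Y, -Y, +Z, -Z), the up vectors common in LearnOpenGL-style ports, and a 90° projection with aspect ratio 1. The `lookAt` below reproduces the math of gl-matrix's `mat4.lookAt` (column-major `Float32Array`) so that the block runs without dependencies. `lookAt` and `FACES` are hypothetical names.

```javascript
// Sketch: six capture view matrices for rendering a cube map (hypothetical names).
// lookAt follows gl-matrix mat4.lookAt's math and returns a column-major Float32Array.
function lookAt(eye, center, up) {
  const sub = (p, q) => [p[0] - q[0], p[1] - q[1], p[2] - q[2]];
  const cross = (p, q) => [p[1] * q[2] - p[2] * q[1], p[2] * q[0] - p[0] * q[2], p[0] * q[1] - p[1] * q[0]];
  const dot = (p, q) => p[0] * q[0] + p[1] * q[1] + p[2] * q[2];
  const normalize = (p) => {
    const len = Math.hypot(p[0], p[1], p[2]);
    return [p[0] / len, p[1] / len, p[2] / len];
  };
  const z = normalize(sub(eye, center)); // the camera looks down -z
  const x = normalize(cross(up, z));
  const y = cross(z, x);
  return new Float32Array([
    x[0], y[0], z[0], 0,
    x[1], y[1], z[1], 0,
    x[2], y[2], z[2], 0,
    -dot(x, eye), -dot(y, eye), -dot(z, eye), 1,
  ]);
}

// Order must match gl.TEXTURE_CUBE_MAP_POSITIVE_X + i: +X, -X, +Y, -Y, +Z, -Z.
const FACES = [
  { dir: [1, 0, 0], up: [0, -1, 0] },
  { dir: [-1, 0, 0], up: [0, -1, 0] },
  { dir: [0, 1, 0], up: [0, 0, 1] },
  { dir: [0, -1, 0], up: [0, 0, -1] },
  { dir: [0, 0, 1], up: [0, -1, 0] },
  { dir: [0, 0, -1], up: [0, -1, 0] },
];

const captureViews = FACES.map((f) => lookAt([0, 0, 0], f.dir, f.up));
```

Each matrix rotates its face direction onto -Z, the camera's forward axis. As a result, a 90-degree square frustum covers exactly that face of the cube map.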

let hdrTexture;

if (data) {

let floats = rgbeToFloat(data);

hdrTexture = gl.createTexture();

gl.bindTexture(gl.TEXTURE_2D, hdrTexture);

gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true);

gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGB16F, width, height, 0, gl.RGB, gl.FLOAT, floats);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.LINEAR);

gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.LINEAR);

}

else {

console.log("Failed to load HDR image.");

}
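The HDR texture uploaded here is the `equirectangularMap` sampled by `fs_equirectangularToCubemap`. The sketch below ports that shader's `SampleSphericalMap` to JavaScript, using exact 1/(2π) and 1/π in place of the rounded `invAtan` constants. It also adds a hypothetical `flipRows` helper that performs on the CPU what `UNPACK_FLIP_Y_WEBGL` does at upload time. WebGL places the first uploaded row at v = 0. Assuming the decoded rows run top to bottom (as in a standard Radiance `-Y ... +X ...` file), the flip therefore moves the top of the image (the sky) to v = 1, which is the coordinate the shader assigns to +Y.

```javascript
// JS port of SampleSphericalMap from fs_equirectangularToCubemap, with exact constants.
function sampleSphericalMap(dir) {
  const len = Math.hypot(dir[0], dir[1], dir[2]);
  const x = dir[0] / len;
  const y = Math.min(1, Math.max(-1, dir[1] / len)); // clamp guards asin against rounding
  const z = dir[2] / len;
  const u = Math.atan2(z, x) / (2 * Math.PI) + 0.5; // longitude -> [0, 1]
  const v = Math.asin(y) / Math.PI + 0.5;           // latitude -> [0, 1], +Y maps to v = 1
  return [u, v];
}

// Hypothetical CPU equivalent of gl.UNPACK_FLIP_Y_WEBGL: reverses the row order.
function flipRows(pixels, width, height, channels) {
  const rowLength = width * channels;
  const out = new pixels.constructor(pixels.length);
  for (let row = 0; row < height; row++) {
    const src = pixels.subarray(row * rowLength, (row + 1) * rowLength);
    out.set(src, (height - 1 - row) * rowLength);
  }
  return out;
}
```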

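function rgbeToFloat(buffer, res) {

// buffer: RGBE bytes (4 per pixel); res: optional Float32Array that receives the RGB output

var s, l = buffer.byteLength >> 2;

res = res || new Float32Array(l * 3);

for (var i = 0; i < l; i++) {

// shared exponent: value = mantissa * 2^(e - 136); an exponent byte of 0 encodes black

s = buffer[i * 4 + 3] ? Math.pow(2, buffer[i * 4 + 3] - (128 + 8)) : 0;

res[i * 3] = buffer[i * 4] * s;

res[i * 3 + 1] = buffer[i * 4 + 1] * s;

res[i * 3 + 2] = buffer[i * 4 + 2] * s;

}

return res;

}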
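A small round trip makes the exponent arithmetic in `rgbeToFloat` concrete. `encodeRgbe` is a hypothetical helper written for illustration. It follows the usual Radiance scheme: the largest channel's binary exponent is shared, and each channel keeps an 8-bit mantissa. `decodeRgbe` applies the same per-pixel formula as `rgbeToFloat`, channel × 2^(e - 136), and treats an exponent byte of 0 as black.

```javascript
// Hypothetical encoder: shared exponent of the largest channel, 8-bit mantissas.
function encodeRgbe(r, g, b) {
  const v = Math.max(r, g, b);
  if (v < 1e-32) return [0, 0, 0, 0]; // exponent byte 0 encodes black
  // frexp: v = m * 2^e with m in [0.5, 1)
  let e = Math.floor(Math.log2(v)) + 1;
  let m = v / Math.pow(2, e);
  if (m >= 1) { m /= 2; e += 1; } // guard against log2 rounding at powers of two
  if (m < 0.5) { m *= 2; e -= 1; }
  const scale = 256 / Math.pow(2, e); // puts the largest channel in [128, 256)
  return [Math.floor(r * scale), Math.floor(g * scale), Math.floor(b * scale), e + 128];
}

// Same per-pixel math as rgbeToFloat: channel * 2^(e - (128 + 8)).
function decodeRgbe(bytes) {
  const out = new Float32Array(3);
  const s = bytes[3] === 0 ? 0 : Math.pow(2, bytes[3] - (128 + 8));
  for (let c = 0; c < 3; c++) out[c] = bytes[c] * s;
  return out;
}

const px = encodeRgbe(1.0, 0.5, 0.25);
console.log(px);                      // [ 128, 64, 32, 129 ]
console.log(Array.from(decodeRgbe(px))); // [ 1, 0.5, 0.25 ]
```

Because the mantissas are truncated to 8 bits, a decoded value can come out low by up to about 1/128 of the largest channel. This makes `.hdr` files compact but slightly lossy.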

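// m(a, b): copies every enumerable property of b onto a and returns a (similar to Object.assign(a, b), except that for...in also visits inherited enumerable keys)

function m(a, b) { for (var i in b) a[i] = b[i]; return a; }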

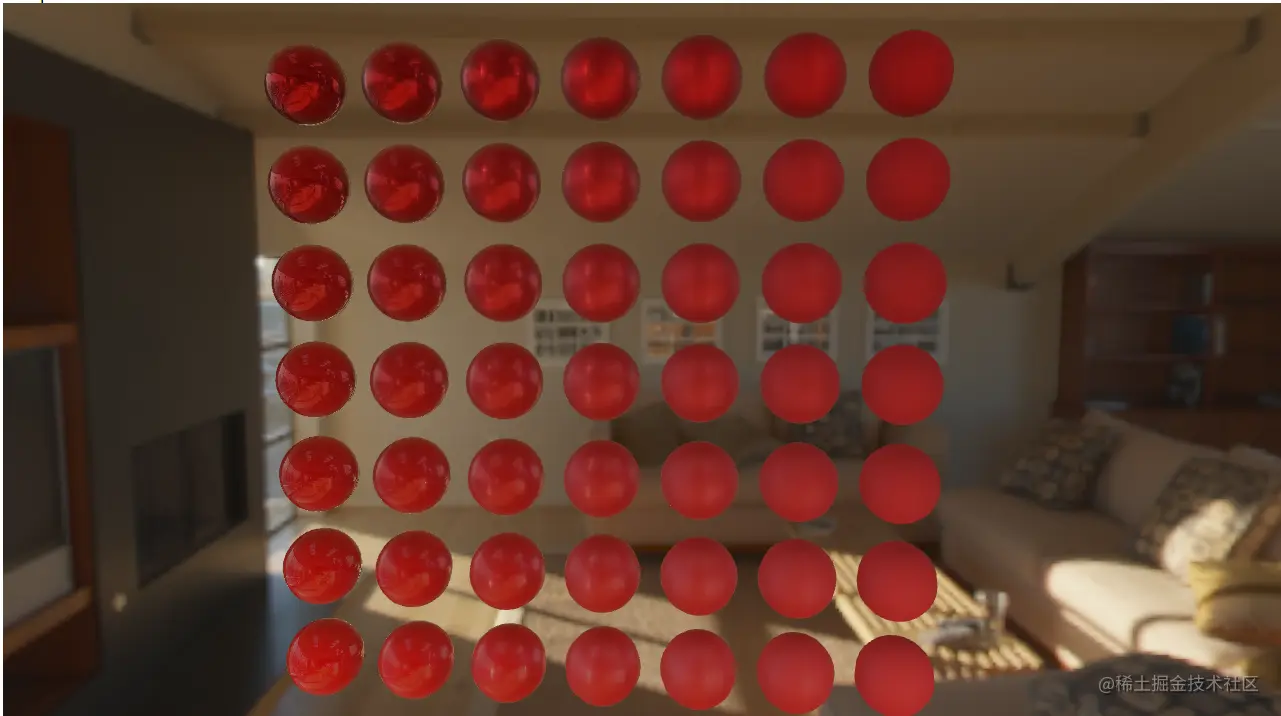

Pre-filtered environment map