# Background

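Our production Kubernetes cluster is a TKE cluster on version 1.20.6 that had been running for more than two years. At the end of June we upgraded the master nodes to 1.22.5.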

Every risk item in the upgrade notes and every documented check came back fine.......

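The worker nodes were not upgraded, only the masters, and the cluster ran that way for three weeks. During that time, a scale-in hit a problem with ECK and the admissionregistration.k8s.io/v1beta1 API. Today a Traefik pod on one of the nodes restarted, and then the trouble started...... a flood of 404s on HTTP requests!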
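Running 1.22 masters with 1.20 workers was not a problem in itself. The Kubernetes version skew policy in effect for 1.22 allows the kubelet to be up to two minor versions older than kube-apiserver, but never newer. The policy was relaxed to three minor versions in 1.28.

Below is a minimal POSIX sh sketch of that rule. It assumes plain `[v]MAJOR.MINOR[.PATCH]` version strings, and the function name `kubelet_skew_ok` is hypothetical:

```bash
# kubelet_skew_ok APISERVER_VERSION KUBELET_VERSION [MAX_SKEW]
# Exit 0 if the kubelet is not newer than kube-apiserver and is at most
# MAX_SKEW minor versions older (default 2, the limit around Kubernetes 1.22).
kubelet_skew_ok() {
    api=${1#v}
    kl=${2#v}
    max_skew=${3:-2}
    # Split "1.22.5" into major "1" and minor "22".
    api_major=${api%%.*}
    api_rest=${api#*.}
    api_minor=${api_rest%%.*}
    kl_major=${kl%%.*}
    kl_rest=${kl#*.}
    kl_minor=${kl_rest%%.*}
    # Different majors are never treated as compatible here.
    [ "$api_major" -eq "$kl_major" ] || return 1
    # The kubelet must not be newer than the apiserver...
    [ "$kl_minor" -le "$api_minor" ] || return 1
    # ...and may be at most max_skew minor versions older.
    [ $((api_minor - kl_minor)) -le "$max_skew" ]
}
```

With this cluster's versions, `kubelet_skew_ok 1.22.5 1.20.6` succeeds. That points toward APIs removed in 1.22 as the cause, rather than the skew itself.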

# Troubleshooting

## About Traefik

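Traefik was installed in 2021; for details see *Kubernetes 1.20.5 安装traefik在腾讯云下的实践* (installing Traefik on Kubernetes 1.20.5 on Tencent Cloud). The setup basically looks like this: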

The images were all grabbed afterwards as filler!

## Confirming the problem is in the gateway proxy layer

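A quick look showed the services had mostly been running for more than ten days with nothing abnormal, so I went straight to the Traefik gateway proxy: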

```bash
kubectl get pods -n traefik
```

Note: the image is just filler. I didn't take any screenshots at the time; my hands were practically shaking.......
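A small filter over the `kubectl get pods` output can spot restarted pods without scanning the table by eye. The sketch below assumes kubectl's default columns (`NAME READY STATUS RESTARTS AGE`) with the header line present. `restarted_pods` is a hypothetical helper name:

```bash
# restarted_pods: read `kubectl get pods` output (with header) on stdin and
# print "NAME RESTARTS" for every pod that has restarted at least once.
# Newer kubectl prints RESTARTS as e.g. "1 (5m ago)"; awk's whitespace
# splitting still leaves the count in column 4.
restarted_pods() {
    awk 'NR > 1 && $4 + 0 > 0 { print $1, $4 }'
}
```

Usage: `kubectl get pods -n traefik | restarted_pods`.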

One Traefik pod had restarted, so I checked its logs:

```bash
kubectl logs -f traefik-ingress-controller-xxxx -n traefik
```
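`kubectl logs -f` never exits, so it is easier to count the error on a bounded snapshot of the log. The sketch below counts `Failed to watch *v1beta1.Ingress` lines in any log stream. The helper name `count_v1beta1_ingress_errors` is hypothetical:

```bash
# count_v1beta1_ingress_errors: read Traefik logs on stdin and print how many
# lines report a failed watch of *v1beta1.Ingress.
count_v1beta1_ingress_errors() {
    # grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps
    # that from being treated as a failure by callers.
    grep -c 'Failed to watch \*v1beta1\.Ingress' || true
}
```

Usage: `kubectl logs traefik-ingress-controller-xxxx -n traefik | count_v1beta1_ingress_errors`. Leave out `-f` so the command finishes.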

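The logs were flooded with `Failed to watch *v1beta1.Ingress: failed to list *v1beta1.Ingress: the server could not find the requested resource (get ingresses.extensions)`. At this point I was fairly sure this was an after-effect of the cluster upgrade, yet again a problem with resources like the CRDs and RBAC...... Kubernetes 1.22 no longer serves Ingress under `extensions/v1beta1` or `networking.k8s.io/v1beta1`. A Traefik build that still watches `v1beta1` Ingress objects therefore can no longer list them.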
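Which Ingress API versions an apiserver serves depends only on its minor version, so this breakage can be predicted before an upgrade. The sketch below encodes the upstream deprecation schedule as I understand it:

- `networking.k8s.io/v1beta1` Ingress appeared in 1.14.
- `networking.k8s.io/v1` Ingress has been available since 1.19.
- Both `extensions/v1beta1` and `networking.k8s.io/v1beta1` Ingress were removed in 1.22.

The helper name is hypothetical:

```bash
# ingress_api_versions MINOR: print the Ingress group/versions served by a
# Kubernetes 1.MINOR apiserver, one per line (oldest first).
ingress_api_versions() {
    minor=$1
    if [ "$minor" -lt 22 ]; then
        # Both beta APIs were removed in 1.22.
        echo "extensions/v1beta1"
        if [ "$minor" -ge 14 ]; then
            echo "networking.k8s.io/v1beta1"
        fi
    fi
    if [ "$minor" -ge 19 ]; then
        # The GA Ingress API, the only one left from 1.22 on.
        echo "networking.k8s.io/v1"
    fi
}
```

`ingress_api_versions 20` lists all three versions. `ingress_api_versions 22` prints only `networking.k8s.io/v1`, which is why a controller still watching `v1beta1` breaks on 1.22. On a live cluster, `kubectl api-resources | grep -i ingress` shows what is actually served.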

## Upgrading Traefik

Basically I re-applied the CRD file and the RBAC configuration file:

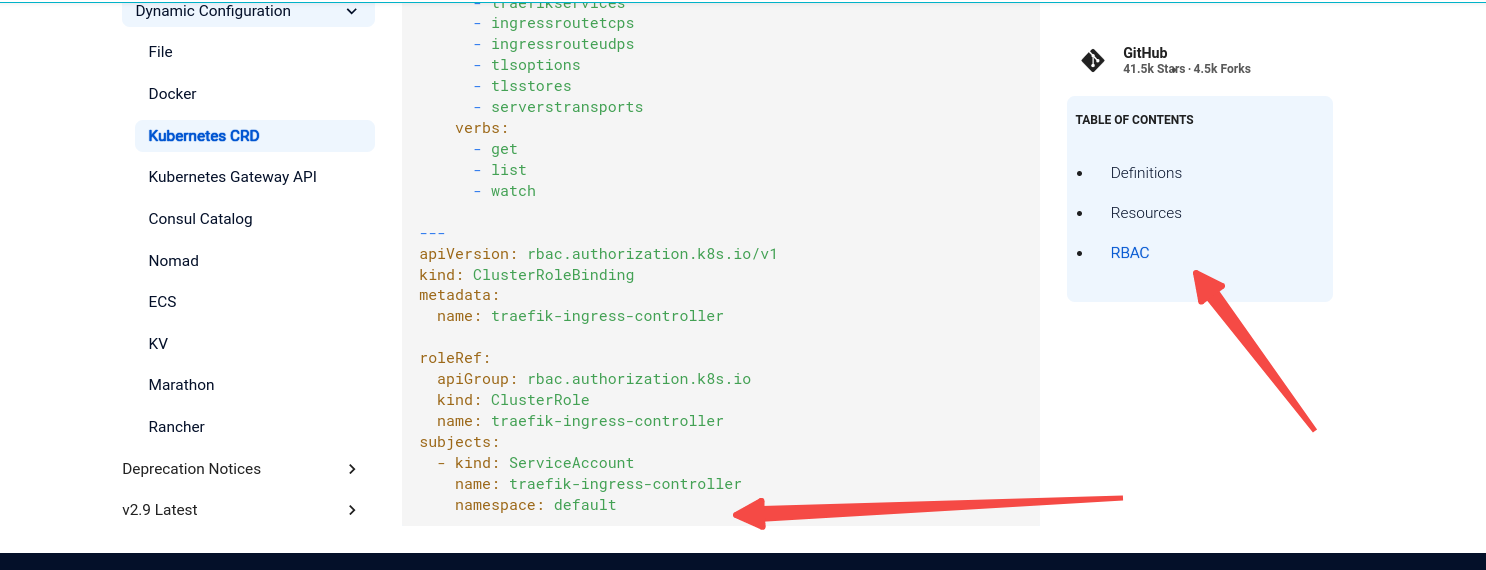

Reference: doc.traefik.io/traefik/ref…. Treat the official files as authoritative:

The CRD file is too long to reproduce here, so get it directly from the official site.

```bash
kubectl apply -f traefik-crd.yaml
```
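Kubernetes 1.22 also stopped serving `apiextensions.k8s.io/v1beta1` CustomResourceDefinitions, so old CRD manifests cannot simply be re-applied as they were. Taking the current file from the official docs avoids that.

After applying, a quick presence check helps. The sketch below reads `kubectl get crd -o name` and prints any expected Traefik CRD that is missing. A few assumptions apply:

- The expected list mirrors the `traefik.containo.us` resources granted in this article's ClusterRole.
- CRD names follow the Kubernetes `<plural>.<group>` rule.
- The helper name is hypothetical.

```bash
# missing_traefik_crds: read `kubectl get crd -o name` output on stdin and
# print each expected traefik.containo.us CRD that is not installed.
missing_traefik_crds() {
    # Strip the "customresourcedefinition.apiextensions.k8s.io/" prefix.
    installed=$(sed 's|.*/||')
    for plural in middlewares middlewaretcps ingressroutes traefikservices \
        ingressroutetcps ingressrouteudps tlsoptions tlsstores serverstransports; do
        crd="$plural.traefik.containo.us"
        # -x: whole-line match, -F: fixed string (dots are literal).
        if ! printf '%s\n' "$installed" | grep -qxF "$crd"; then
            echo "$crd"
        fi
    done
}
```

`kubectl get crd -o name | missing_traefik_crds` prints nothing when every CRD is present.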

Again, follow the docs above, but watch the namespace in the RBAC file: the ServiceAccount must be in the same namespace as the deployed application!

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io
    resources:
      - ingresses
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - traefik.containo.us
    resources:
      - middlewares
      - middlewaretcps
      - ingressroutes
      - traefikservices
      - ingressroutetcps
      - ingressrouteudps
      - tlsoptions
      - tlsstores
      - serverstransports
    verbs:
      - get
      - list
      - watch

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
  - kind: ServiceAccount
    name: traefik-ingress-controller
    namespace: traefik
```


```bash
kubectl apply -f traefik-rbac.yaml -n traefik
```
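The namespace warning matters because Kubernetes authenticates a ServiceAccount as the user `system:serviceaccount:<namespace>:<name>`. If the binding's `namespace` differs from where Traefik's ServiceAccount lives, the subject simply does not match. `kubectl auth can-i --as` can confirm the grant from the apiserver's point of view.

The sketch below does that check. The helper names and the set of resources probed are my own choices:

```bash
# sa_user NAMESPACE NAME: the username Kubernetes uses for a ServiceAccount.
sa_user() {
    echo "system:serviceaccount:$1:$2"
}

# check_traefik_rbac NAMESPACE NAME: ask the apiserver whether the
# ServiceAccount may list, in all namespaces, a few resources Traefik watches.
# Needs kubectl and cluster access; prints "yes" or "no" per resource.
check_traefik_rbac() {
    user=$(sa_user "$1" "$2")
    for res in services endpoints secrets ingresses.networking.k8s.io \
        ingressclasses.networking.k8s.io ingressroutes.traefik.containo.us; do
        printf '%s: ' "$res"
        # can-i exits non-zero on "no"; keep looping regardless.
        kubectl auth can-i list "$res" --as="$user" --all-namespaces || true
    done
}
```

Once the RBAC file is applied, `check_traefik_rbac traefik traefik-ingress-controller` should print `yes` on every line.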

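While I was at it, I also bumped the Traefik image from 2.4 to 2.9. Once all pods were Running, the 404s went away. That said, you may also run into the following problem: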

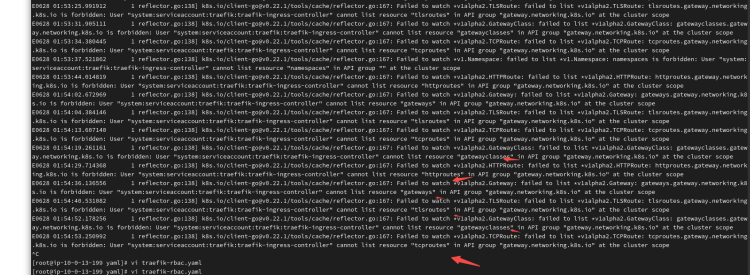

The Traefik pod logs may show entries like this:

Reference:

<https://doc.traefik.io/traefik/reference/dynamic-configuration/kubernetes-gateway/#rbac>

```bash
cat gateway-rbac.yaml
```

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: gateway-role
rules:
  - apiGroups:
      - ""
    resources:
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - gateway.networking.k8s.io
    resources:
      - gatewayclasses
      - gateways
      - httproutes
      - tcproutes
      - tlsroutes
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - gateway.networking.k8s.io
    resources:
      - gatewayclasses/status
      - gateways/status
      - httproutes/status
      - tcproutes/status
      - tlsroutes/status
    verbs:
      - update

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: gateway-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: gateway-role
subjects:
  - kind: ServiceAccount
    name: traefik-ingress-controller
    namespace: traefik
```

```bash
kubectl apply -f gateway-rbac.yaml -n traefik
```
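When Traefik lacks these permissions, the apiserver's RBAC denial usually follows the standard form `<resource> is forbidden: User "..." cannot <verb> resource "..." in API group "..."`. The sketch below assumes that message format. It turns such log lines into the matching `kubectl auth can-i` commands, so each denial can be re-checked before and after applying `gateway-rbac.yaml`. The helper name is hypothetical:

```bash
# forbidden_to_can_i: read logs on stdin, pick out RBAC "is forbidden" errors
# and print one deduplicated `kubectl auth can-i` command per denial.
# The second sed drops the bare dot left behind for core-group resources,
# whose API group is "". Subresources such as "gateways/status" are not
# handled; they would need kubectl's --subresource flag instead.
forbidden_to_can_i() {
    sed -n 's/.*User "\([^"]*\)" cannot \([a-z]*\) resource "\([^"]*\)" in API group "\([^"]*\)".*/kubectl auth can-i \2 \3.\4 --as=\1 --all-namespaces/p' |
        sed 's/\. --as=/ --as=/' |
        sort -u
}
```

Usage: `kubectl logs <traefik-pod> -n traefik | forbidden_to_can_i`. Then run the printed commands; they should all answer `yes` once the role and binding are in place.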

Problem solved..... It took a little over twenty minutes.... mostly because I panicked and was all over the place......

# Summary

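1. Before upgrading, verify that each component's version is compatible with the target cluster version. I reported this issue to the Tencent TKE team, and they plan to add API compatibility checks to version upgrades.

2. Going forward, update cluster components alongside regular project updates so their versions keep moving forward. Use maintenance windows to find and fix problems, rather than having them hit production out of nowhere like this.

3. Add alerting on abnormal 404s. We had muted all 404 alerts from the start, treating them as normal. We will add an alert rule that fires when 404 traffic crosses a threshold, or based on some other calculation.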
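For the 404 alert, one low-tech option is to compute the 404 share over a recent window of Traefik's access log. The sketch below makes these assumptions:

- The access log is enabled and written to stdout.
- It uses Common Log Format, where the status code is the ninth whitespace-separated field.
- The helper name and the 20% threshold in the usage example are hypothetical.

```bash
# too_many_404s PERCENT: read Common Log Format access-log lines on stdin,
# print the 404 count and share, and exit 0 (alert) if the share of 404
# responses exceeds PERCENT. Exit 1 otherwise, including when no request
# lines were seen.
too_many_404s() {
    awk -v limit="$1" '
        # Only count lines whose 9th field looks like an HTTP status code.
        $9 ~ /^[0-9][0-9][0-9]$/ {
            total++
            if ($9 == 404) notfound++
        }
        END {
            if (total == 0) exit 1
            pct = 100 * notfound / total
            printf "404: %d/%d (%.1f%%)\n", notfound, total, pct
            if (pct > limit) exit 0
            exit 1
        }'
}
```

Usage: `kubectl logs <traefik-pod> -n traefik --since=5m | too_many_404s 20 && echo 'ALERT: too many 404s'`. In a Prometheus-based setup, the same ratio could instead come from Traefik's `traefik_entrypoint_requests_total` metric, which carries a `code` label.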

# One more thing

I had already run into the gateway-rbac step above in [AWS ALB EKS Traefik realip: real client IP on the backend](https://www.yuque.com/duiniwukenaihe/ehb02i/fpoqmzh3c35b9p09#Nc49a).