Vertical aggregation vs. horizontal aggregation

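Ordinary aggregation functions aggregate vertically

- An ordinary aggregation function aggregates vertically: it combines multiple series at the same evaluation timestamp.

- For example, the average user-mode CPU utilization of each machine: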

avg(rate(node_cpu_seconds_total{mode="user"}[1m])) by (instance) *100

- The meaning: the per-core values of all CPU cores are averaged per instance. This is vertical aggregation.

{instance="192.168.26.54:9100"} 3.477700495544539

{instance="localhost:9100"} 0.04444938326480675
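To make the vertical direction concrete, here is a minimal Go sketch of what `avg(...) by (instance)` does at a single evaluation timestamp. The `Sample` type, the `AvgByInstance` function, and the host names and values are made up for illustration; this is not how Prometheus implements the operator internally.

```go
package main

import (
	"fmt"
	"sort"
)

// Sample is one series' value at a single evaluation timestamp.
// The type and its fields are illustrative, not part of any Prometheus API.
type Sample struct {
	Instance string
	CPU      string
	Value    float64
}

// AvgByInstance mimics `avg(...) by (instance)`: it averages the values of
// all series that share the same instance label at one point in time.
func AvgByInstance(samples []Sample) map[string]float64 {
	sum := make(map[string]float64)
	count := make(map[string]int)
	for _, s := range samples {
		sum[s.Instance] += s.Value
		count[s.Instance]++
	}
	avg := make(map[string]float64, len(sum))
	for instance, total := range sum {
		avg[instance] = total / float64(count[instance])
	}
	return avg
}

func main() {
	// Made-up per-core user-mode rates for two hosts.
	samples := []Sample{
		{"host-a:9100", "0", 0.02},
		{"host-a:9100", "1", 0.04},
		{"host-b:9100", "0", 0.10},
	}
	avg := AvgByInstance(samples)

	// Print in a stable order, since map iteration order is random.
	instances := make([]string, 0, len(avg))
	for instance := range avg {
		instances = append(instances, instance)
	}
	sort.Strings(instances)
	for _, instance := range instances {
		fmt.Printf("{instance=%q} %g\n", instance, avg[instance]*100)
	}
}
```

Many series (one per core) go in and one value per instance comes out, all at the same timestamp.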

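<aggregation>_over_time: horizontal aggregation

- Documentation: see the `<aggregation>_over_time()` entry in the Prometheus query functions reference.

- It can be understood as applying an aggregation function from left to right over the samples of a single series in time. For example, the average available memory over the last day: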

avg_over_time(node_memory_MemAvailable_bytes[1d])

{instance="192.168.26.54:9100", job="node"} 2553257976.0155945

{instance="localhost:9100", job="node"} 7786764569.695171
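The horizontal direction can be sketched the same way. The Go snippet below averages the samples of one series that fall inside a time window, which is roughly what `avg_over_time(series[1d])` computes. The `Point` type and `AvgOverTime` function are hypothetical names, and treating the window as left-open and right-closed is a simplifying assumption of this sketch.

```go
package main

import "fmt"

// Point is one sample of a single series. Illustrative type only.
type Point struct {
	Timestamp int64 // Unix seconds
	Value     float64
}

// AvgOverTime averages the samples of one series whose timestamps fall in
// the window (now-window, now]. It returns false if the window is empty.
func AvgOverTime(points []Point, now, window int64) (float64, bool) {
	var sum float64
	var n int
	for _, p := range points {
		if p.Timestamp > now-window && p.Timestamp <= now {
			sum += p.Value
			n++
		}
	}
	if n == 0 {
		return 0, false
	}
	return sum / float64(n), true
}

func main() {
	// Made-up available-memory samples (bytes) for one instance.
	series := []Point{
		{Timestamp: 0, Value: 2.0e9},
		{Timestamp: 60, Value: 2.5e9},
		{Timestamp: 120, Value: 3.0e9},
	}
	if avg, ok := AvgOverTime(series, 120, 120); ok {
		fmt.Printf("average over the window: %g bytes\n", avg)
	}
}
```

Here a single series goes in and a single value comes out; no other series is involved.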

Computing machine utilization

CPU utilization

Average CPU utilization over the last day

avg_over_time((avg(rate(node_cpu_seconds_total{mode="user"}[1m])) by (instance) *100)[1d:1m])

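- Reading the query: first aggregate vertically, collapsing the per-core data to the instance level.

- Then the subquery [1d:1m] evaluates that result once per minute over the last day, and avg_over_time averages those points, giving each instance's own 1-day average. The result: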

{instance="localhost:9100"} 0.03197398802457715

{instance="192.168.26.54:9100"} 2.313837468666956
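The two-stage evaluation described above can be sketched in Go. The idea is to evaluate the inner (vertically aggregated) expression once per step across the range, then aggregate those points over time. `EvalTimes`, `OverTime`, and `Stats` are hypothetical names; aligning evaluation times to multiples of the step and using a left-open window are assumptions of this sketch, and it expects `now-rng` to be non-negative.

```go
package main

import "fmt"

// Stats holds the horizontal aggregates of the inner expression.
type Stats struct {
	Avg, Max, Min float64
}

// EvalTimes returns the step-aligned timestamps in (now-rng, now].
// It assumes now-rng >= 0 and step > 0.
func EvalTimes(now, rng, step int64) []int64 {
	var ts []int64
	// First multiple of step strictly after now-rng.
	start := ((now-rng)/step + 1) * step
	for t := start; t <= now; t += step {
		ts = append(ts, t)
	}
	return ts
}

// OverTime evaluates inner at every step and aggregates the results,
// mirroring avg_over_time / max_over_time / min_over_time on a subquery.
func OverTime(inner func(t int64) float64, now, rng, step int64) (Stats, bool) {
	ts := EvalTimes(now, rng, step)
	if len(ts) == 0 {
		return Stats{}, false
	}
	first := inner(ts[0])
	st := Stats{Max: first, Min: first}
	sum := first
	for _, t := range ts[1:] {
		v := inner(t)
		sum += v
		if v > st.Max {
			st.Max = v
		}
		if v < st.Min {
			st.Min = v
		}
	}
	st.Avg = sum / float64(len(ts))
	return st, true
}

func main() {
	// Stand-in for the instance-level CPU percentage at time t.
	inner := func(t int64) float64 { return float64(t%300) / 100 }
	st, _ := OverTime(inner, 1700000000, 86400, 60)
	fmt.Printf("avg=%g max=%g min=%g\n", st.Avg, st.Max, st.Min)
}
```

With a one-day range and a one-minute step, the inner expression is evaluated 1440 times per instance, so a wider range or a finer step makes the query proportionally more expensive.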

Maximum CPU utilization over the last day

max_over_time((avg(rate(node_cpu_seconds_total{mode="user"}[1m])) by (instance) *100)[1d:1m])

{instance="localhost:9100"} 0.09999333377774823

{instance="192.168.26.54:9100"} 3.4777004955445383

Minimum CPU utilization over the last day

min_over_time((avg(rate(node_cpu_seconds_total{mode="user"}[1m])) by (instance) *100)[1d:1m])

{instance="192.168.26.54:9100"} 1.188701746783102

{instance="localhost:9100"} 0

Memory utilization

Average memory utilization over the last day

avg_over_time(((1 - (node_memory_MemAvailable_bytes / (node_memory_MemTotal_bytes)))* 100)[1d:1m])

{instance="localhost:9100", job="node"} 6.800830969585009

{instance="192.168.26.54:9100", job="node"} 38.17206296267312

Maximum memory utilization over the last day

max_over_time(((1 - (node_memory_MemAvailable_bytes / (node_memory_MemTotal_bytes)))* 100)[1d:1m])

{instance="localhost:9100", job="node"} 7.11062956707611

{instance="192.168.26.54:9100", job="node"} 38.95369342822029

Minimum memory utilization over the last day

min_over_time(((1 - (node_memory_MemAvailable_bytes / (node_memory_MemTotal_bytes)))* 100)[1d:1m])

{instance="localhost:9100", job="node"} 6.592866230172056

{instance="192.168.26.54:9100", job="node"} 35.787472020235846
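The memory queries above all share the same inner formula, (1 - MemAvailable / MemTotal) * 100. As a quick worked example, the Go sketch below applies it to made-up byte counts. `MemUsedPercent` is a hypothetical helper, not part of any library.

```go
package main

import "fmt"

// MemUsedPercent applies (1 - available/total) * 100.
// It returns false when total is not positive, to avoid dividing by zero.
func MemUsedPercent(availableBytes, totalBytes float64) (float64, bool) {
	if totalBytes <= 0 {
		return 0, false
	}
	return (1 - availableBytes/totalBytes) * 100, true
}

func main() {
	const gib = 1 << 30
	// Made-up host: 8 GiB total, 2 GiB available.
	if pct, ok := MemUsedPercent(2*gib, 8*gib); ok {
		fmt.Printf("memory utilization: %g%%\n", pct)
	}
}
```

The formula uses MemAvailable rather than MemFree because MemAvailable also counts reclaimable cache, so it is usually the better estimate of how much memory applications can still obtain.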

Querying the data with a Go script

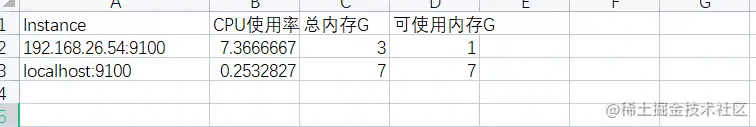

YAML configuration file

sheetName: "MySheet"
metrics:
  - name: "Metric1"
    query: "avg_over_time((avg(rate(node_cpu_seconds_total{mode=\"user\"}[1m])) by (instance) *100)[1d:1m])"
    header: ["CPU utilization (%)"]
  - name: "Metric2"
    query: "floor(node_memory_MemTotal_bytes / 1024 /1024 /1024)"
    header: ["Total memory (GiB)"]
  - name: "Metric3"
    query: "floor(node_memory_MemFree_bytes / 1024 /1024 /1024)"
    header: ["Free memory (GiB)"]

Go script

package main

import (
	"context"
	"fmt"
	"io/ioutil"
	"strconv"
	"time"

	"github.com/360EntSecGroup-Skylar/excelize"
	"github.com/prometheus/client_golang/api"
	v1 "github.com/prometheus/client_golang/api/prometheus/v1"
	"github.com/prometheus/common/model"
	"gopkg.in/yaml.v2"
)

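// Metric describes one report column: the PromQL query to run and the
// column header to write.
type Metric struct {
	Name   string   `yaml:"name"`
	Query  string   `yaml:"query"`
	Header []string `yaml:"header"`
}

// QueryConfig mirrors the YAML configuration file.
type QueryConfig struct {
	SheetName string   `yaml:"sheetName"`
	Metrics   []Metric `yaml:"metrics"`
}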

// createPrometheusClient returns a Prometheus HTTP API client for the
// server at the given address.
func createPrometheusClient(address string) (v1.API, error) {
	client, err := api.NewClient(api.Config{
		Address: address,
	})
	if err != nil {
		return nil, fmt.Errorf("error creating client: %w", err)
	}
	v1api := v1.NewAPI(client)
	return v1api, nil
}

// queryPrometheus runs an instant query at queryTime and returns the result
// as an instant vector. Queries with another result type are rejected.
func queryPrometheus(api v1.API, query string, queryTime time.Time) (model.Vector, error) {
	ctx, cancel := context.WithTimeout(context.Background(), 10*time.Second)
	defer cancel()

	result, warnings, err := api.Query(ctx, query, queryTime)
	if err != nil {
		return nil, fmt.Errorf("error querying Prometheus: %w", err)
	}
	if len(warnings) > 0 {
		fmt.Printf("Warnings: %v\n", warnings)
	}

	vector, ok := result.(model.Vector)
	if !ok {
		return nil, fmt.Errorf("query result is not a vector")
	}
	return vector, nil
}

// readQueryConfig loads and parses the YAML configuration file.
func readQueryConfig(filename string) (*QueryConfig, error) {
	data, err := ioutil.ReadFile(filename)
	if err != nil {
		return nil, err
	}

	var config QueryConfig
	err = yaml.Unmarshal(data, &config)
	if err != nil {
		return nil, err
	}
	return &config, nil
}

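// writeToExcelFile writes one row per instance and one column per metric.
// Rows are keyed by the instance label, because different queries may return
// their samples in a different order or for a different set of instances.
func writeToExcelFile(fileName string, sheetName string, metrics []Metric, vectors []model.Vector) error {
	f := excelize.NewFile()
	index := f.NewSheet(sheetName)

	// Header row: column A holds the instance, then one column per metric.
	f.SetCellValue(sheetName, "A1", "Instance")
	for i, metric := range metrics {
		if len(metric.Header) == 0 {
			return fmt.Errorf("metric %q has no header", metric.Name)
		}
		f.SetCellValue(sheetName, convertToColumnName(i+2)+"1", metric.Header[0])
	}

	rowOf := make(map[string]int) // instance -> row number
	nextRow := 2
	for i, vector := range vectors {
		col := convertToColumnName(i + 2)
		for _, sample := range vector {
			instance := string(sample.Metric["instance"])
			row, seen := rowOf[instance]
			if !seen {
				row = nextRow
				rowOf[instance] = row
				nextRow++
				f.SetCellValue(sheetName, "A"+strconv.Itoa(row), instance)
			}
			f.SetCellValue(sheetName, col+strconv.Itoa(row), float64(sample.Value))
		}
	}

	f.SetActiveSheet(index)
	if err := f.SaveAs(fileName); err != nil {
		return fmt.Errorf("error saving Excel file: %w", err)
	}
	return nil
}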

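// convertToColumnName converts a 1-based column index to its Excel column
// name (1 -> "A", 26 -> "Z", 27 -> "AA").
func convertToColumnName(col int) string {
	colStr := ""
	for col > 0 {
		col--
		// Convert through rune: string(int) is flagged by go vet.
		colStr = string(rune('A'+col%26)) + colStr
		col /= 26
	}
	return colStr
}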

func main() {
	v1api, err := createPrometheusClient("http://192.168.26.21:9090")
	if err != nil {
		fmt.Println(err)
		return
	}

	config, err := readQueryConfig("D:\\work-space\\test1\\client\\query.yaml")
	if err != nil {
		fmt.Println(err)
		return
	}

	// Evaluate every query at the same instant so the columns are comparable.
	now := time.Now()
	vectors := make([]model.Vector, len(config.Metrics))
	for i, metric := range config.Metrics {
		vector, err := queryPrometheus(v1api, metric.Query, now)
		if err != nil {
			fmt.Println(err)
			return
		}
		vectors[i] = vector
	}

	// The report file is named after the current date, e.g. Report2006-01-02.xlsx.
	if err := writeToExcelFile("Report"+now.Format("2006-01-02")+".xlsx", config.SheetName, config.Metrics, vectors); err != nil {
		fmt.Println(err)
	}
}

Summary

Service grouping information can be attached to the monitored targets as labels through service discovery:

[
  {
    "targets": [
      "172.20.70.205:9100"
    ],
    "labels": {
      "account": "aliyun-01",
      "region": "ap-south-1",
      "env": "prod",
      "group": "inf",
      "project": "monitor",
      "stree_gpa": "inf.monitor.prometheus"
    }
  },
  {
    "targets": [
      "172.20.70.215:9100"
    ],
    "labels": {
      "account": "aliyun-02",
      "region": "ap-south-2",
      "env": "prod",
      "group": "inf",
      "project": "middleware",
      "stree_gpa": "inf.middleware.kafka"
    }
  }
]

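- Aggregating by labels such as stree_gpa at query time then yields the CPU and memory data of each business group.

From that data, a per-business-group resource utilization report can be generated.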
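As a sketch of what aggregating by a group label could look like, the Go helpers below build per-group variants of the CPU and memory queries used earlier, swapping `by (instance)` for a grouping label such as `stree_gpa`. `GroupCPUQuery` and `GroupMemQuery` are hypothetical helpers, and the exact query shapes are one possible choice rather than the only one. The label and range arguments are inserted verbatim, so they should come from trusted configuration.

```go
package main

import "fmt"

// GroupCPUQuery builds a PromQL expression for the average user-mode CPU
// utilization (percent) per value of the given label over the given range.
func GroupCPUQuery(label, rng string) string {
	return fmt.Sprintf(
		`avg_over_time((avg by (%s) (rate(node_cpu_seconds_total{mode="user"}[1m])) * 100)[%s:1m])`,
		label, rng)
}

// GroupMemQuery builds a PromQL expression for the average memory
// utilization (percent) per value of the given label over the given range.
// Summing before dividing weights each machine by its memory size.
func GroupMemQuery(label, rng string) string {
	return fmt.Sprintf(
		`avg_over_time(((1 - sum by (%[1]s) (node_memory_MemAvailable_bytes) / sum by (%[1]s) (node_memory_MemTotal_bytes)) * 100)[%[2]s:1m])`,
		label, rng)
}

func main() {
	fmt.Println(GroupCPUQuery("stree_gpa", "1d"))
	fmt.Println(GroupMemQuery("stree_gpa", "1d"))
}
```

The generated strings could be placed in the `query` fields of the YAML file. The script above keys its rows on the `instance` label, so it would also need to read the grouping label instead.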