Application

- stock market forecast

- self-driving car

- recommendation

Step 1: Model

Linear model: y=b+∑iwixi

Step 2: Goodness of Function

Loss function L

Input: a function

Output: how bad it is

L(f)=L(w,b)=i∑(y^n−(b+i∑wixin))2

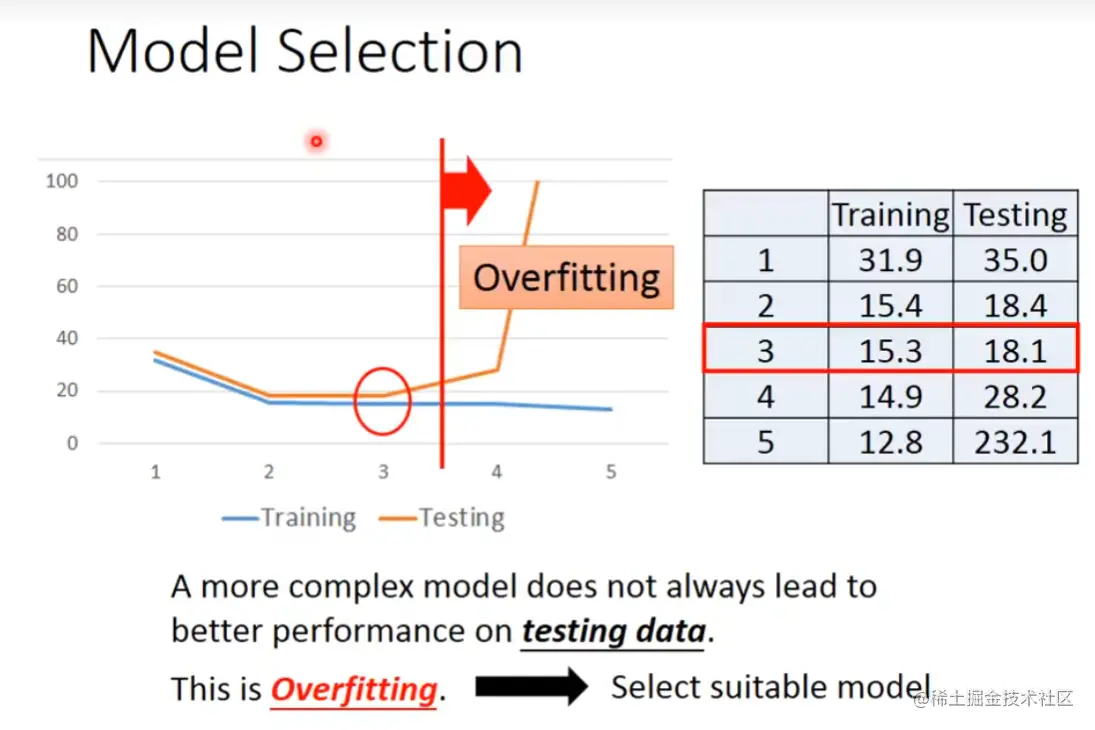

Regularization

L(f)=L(w,b)=i∑(y^n−(b+i∑wixin))2+λi∑(wi)2

- smooth functions are preferred (smaller wi -> smaller change when x changes) - not sensitive to noise

- larger λ -> larger training error, not necessarily smaller testing error

- How smooth? -> select λ obtaining the best model

- bias should not be considered in regularization (只提供位移,与smooth无关)

Step3: Gradiend Descent

Consider L(w)

- (Randomly) pick an initial value w0

- Compute dwdL∣w=w0, w1=w0−ηdwdL∣w=w0

- Repeat -> local optimal

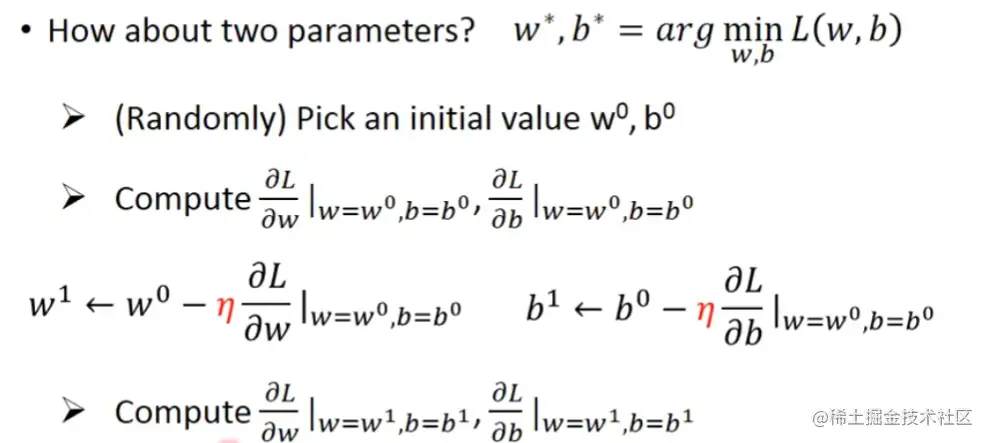

Two parameters L(w,b)

Gradient: ∇L=⎣⎡∂w∂L∂b∂L⎦⎤