This is day 35 of my participation in the companion-notes writing activity of the 5th Youth Training Camp (「第五届青训营」).

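This lesson focuses on the architecture design and implementation principles of the ByteFaaS data plane, especially traffic scheduling for regular function invocations and function cold starts. It uses real cases from day-to-day development to help you better understand enterprise-grade applications and best practices in FaaS scenarios.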

Overview

- FaaS - Function as a Service
  - users write only a function instead of a whole service
  - users focus on code, not infrastructure
- Event-driven serverless computing platform
  - integration with different event sources
- Pay as you go
  - auto-scale up and down (even scale to zero)
  - only pay for or consume resources as needed (cost-efficiency)

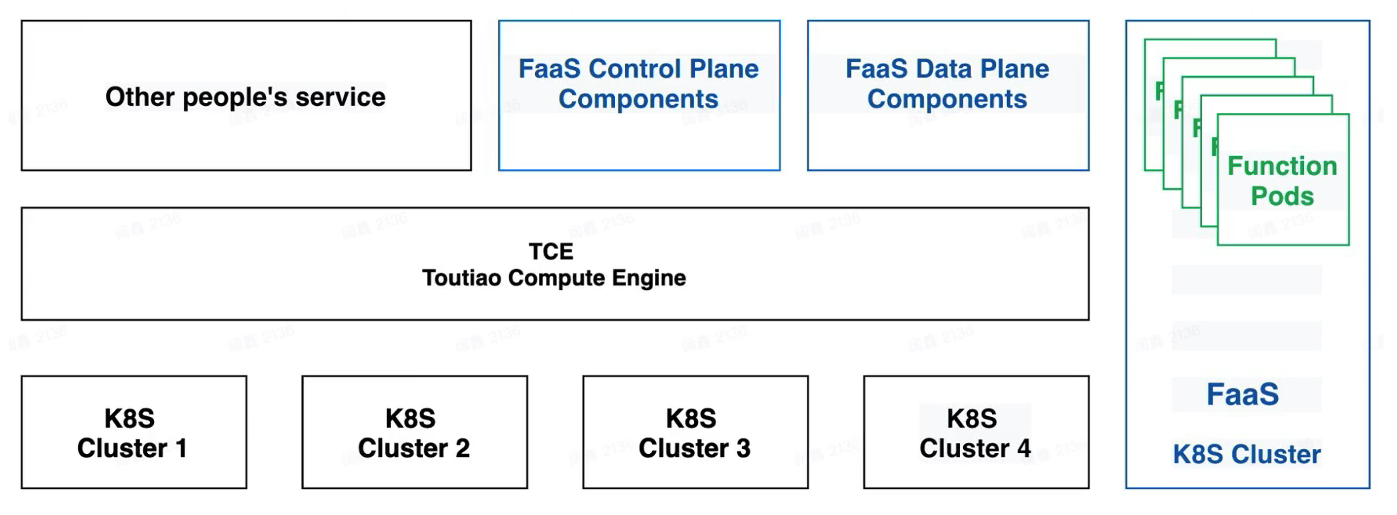

Overview of ByteFaaS Architecture

- One-stop serverless computing platform
  - create, build and deploy functions; logging, monitoring, auto-scaling
  - function execution, readiness check, service discovery, proxying, routing, load balancing

Function Runtime

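- Scheduled and executed as k8s pods
  - deployment pods carry steady-state traffic
  - warming pods handle burst traffic (used for function cold starts and traffic bursts)
- Unified base image + custom function code (to speed up startup)
  - Image/code separation: all functions of the same runtime use one unified base image. A pod is started from the base image, and the function code is loaded afterwards.
  - The base image can be pre-pulled onto every machine, so starting a function reuses the cached image, which speeds up pod startup.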

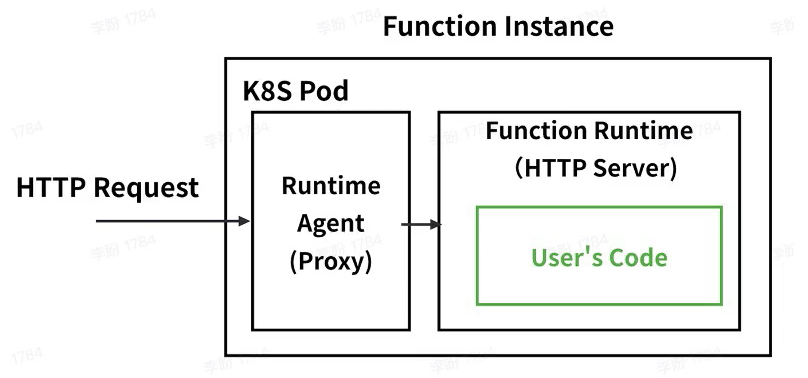

- Runtime-agent + function
  - Runtime-agent
    - loads function environment/code and starts the runtime process
    - proxies requests to the runtime server; traffic control
    - health check, log/metrics collection, billing
  - Function runtime
    - multi-language support (Go, Python, Node.js, Java, Rust, ...)
    - FaaS native (HTTP/RPC)
    - custom runtime

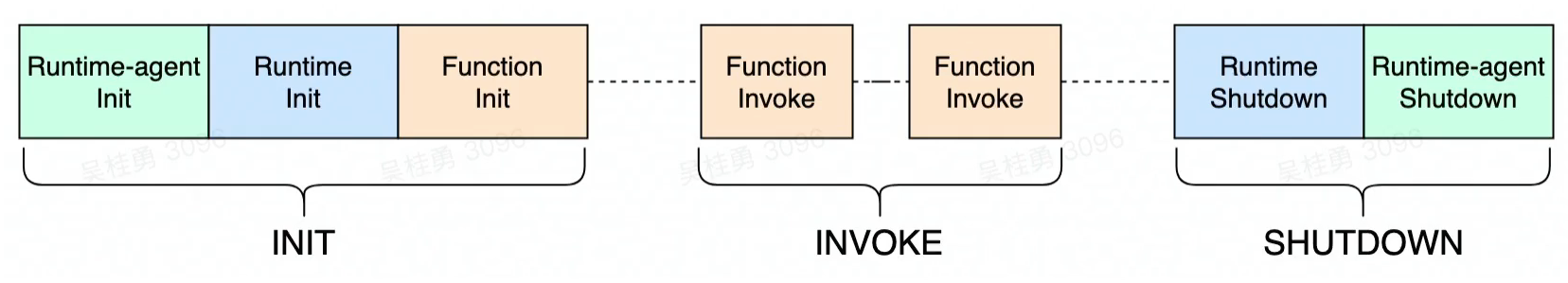

Function Instance Lifecycle

INIT

- Runtime-agent init
  - runtime-agent starts and loads its configuration file and environment
  - creates metrics and logger clients
  - starts the proxy server and stands by to load a function
- Runtime init (/v1/load)
  - loads function code and environment
  - starts the function process
    - hot load (Python, Node.js, Java)
  - detects the protocol and sets up a proxy client to the runtime server
- Function init (/v1/initialize)
  - loads configs or initializes clients to downstream services
  - executed only once when there is no exception
  - runtime-agent does not proxy requests to the runtime server handler until the function has been successfully initialized
  - a failed initialization can be retried at low cost, without restarting the function process
- Function route state transition
  - pending > loading > loaded > ready (happy path)
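The happy-path route state transition, together with the rule that requests are only proxied after `/v1/initialize` succeeds, can be sketched as a small state machine. This is a minimal Python sketch, not ByteFaaS code: `RouteState`, `InstanceRoute`, and the choice to keep a failed initialization in `loaded` so that it can be retried are all hypothetical.

```python
from enum import Enum
from typing import Callable


class RouteState(Enum):
    PENDING = "pending"
    LOADING = "loading"
    LOADED = "loaded"
    READY = "ready"


# Happy-path transitions only: pending > loading > loaded > ready.
ALLOWED = {
    RouteState.PENDING: {RouteState.LOADING},
    RouteState.LOADING: {RouteState.LOADED},
    RouteState.LOADED: {RouteState.READY},
    RouteState.READY: set(),
}


class InstanceRoute:
    """Hypothetical per-instance route record kept by a runtime-agent-like proxy."""

    def __init__(self) -> None:
        self.state = RouteState.PENDING

    def transition(self, new_state: RouteState) -> None:
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state

    def load(self) -> None:
        # Stands in for /v1/load: fetch code and start the function process.
        self.transition(RouteState.LOADING)
        self.transition(RouteState.LOADED)

    def initialize(self, init_fn: Callable[[], None]) -> bool:
        # Stands in for /v1/initialize. Only valid from LOADED, so a successful
        # init runs once; a failed one stays in LOADED and can be retried
        # without restarting the function process.
        if self.state is not RouteState.LOADED:
            raise ValueError("function must be loaded (and not yet ready) to initialize")
        try:
            init_fn()
        except Exception:
            return False
        self.transition(RouteState.READY)
        return True

    def can_proxy(self) -> bool:
        # Requests are forwarded to the runtime server only when READY.
        return self.state is RouteState.READY
```

Making illegal transitions raise keeps the routing layer from ever seeing an instance that skipped a step.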

INVOKE

- Function request identification
  - the X-Bytefaas-Function-Id / X-Bytefaas-Function-Psm headers identify the target function
  - this is needed because an instance address may be reassigned to another function
- Function response
  - invocation succeeded: the function returns an EventResponse
  - invocation failed: the response carries
    - X-Bytefaas-Response-Error-Code
    - X-Bytefaas-Response-Error-Message
    - X-Bytefaas-Request-Id
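A runtime-agent-style handler can use the identification header to reject requests that reach an address now serving a different function, and report failures through the error headers. In this minimal sketch only the header names come from the notes; `handle_invocation`, `error_response`, the HTTP status codes, the error-code strings such as `FUNCTION_MISMATCH`, and generating a request ID when none is supplied are hypothetical.

```python
import uuid
from typing import Callable, Dict, Tuple

Headers = Dict[str, str]
Response = Tuple[int, Headers, bytes]


def error_response(status: int, code: str, message: str, request_id: str) -> Response:
    # Failed invocations carry the error details and request ID in headers.
    headers = {
        "X-Bytefaas-Response-Error-Code": code,
        "X-Bytefaas-Response-Error-Message": message,
        "X-Bytefaas-Request-Id": request_id,
    }
    return status, headers, b""


def handle_invocation(
    loaded_function_id: str,
    request_headers: Headers,
    body: bytes,
    handler: Callable[[bytes], bytes],
) -> Response:
    """Hypothetical agent entry point; status and error codes are illustrative."""
    request_id = request_headers.get("X-Bytefaas-Request-Id") or str(uuid.uuid4())
    target = request_headers.get("X-Bytefaas-Function-Id")
    # The instance address may have been reassigned to another function,
    # so refuse requests addressed to a function this instance did not load.
    if target != loaded_function_id:
        message = f"instance serves {loaded_function_id}, not {target}"
        return error_response(404, "FUNCTION_MISMATCH", message, request_id)
    try:
        result = handler(body)
    except Exception as exc:
        return error_response(500, "FUNCTION_ERROR", str(exc), request_id)
    return 200, {"X-Bytefaas-Request-Id": request_id}, result
```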

Function Routing Policy

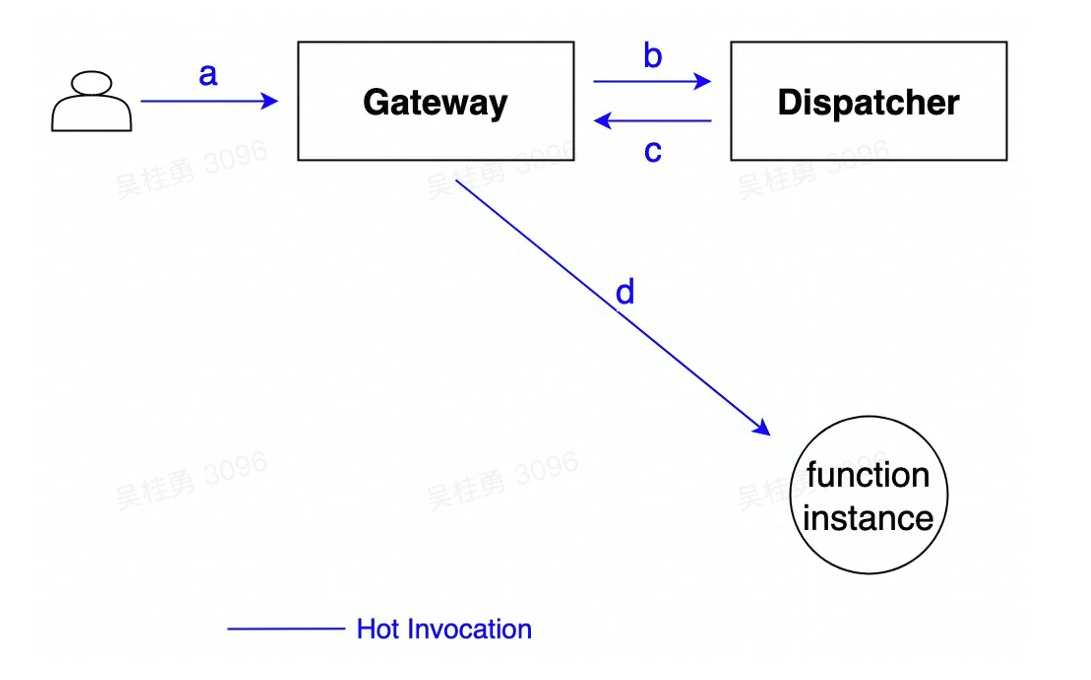

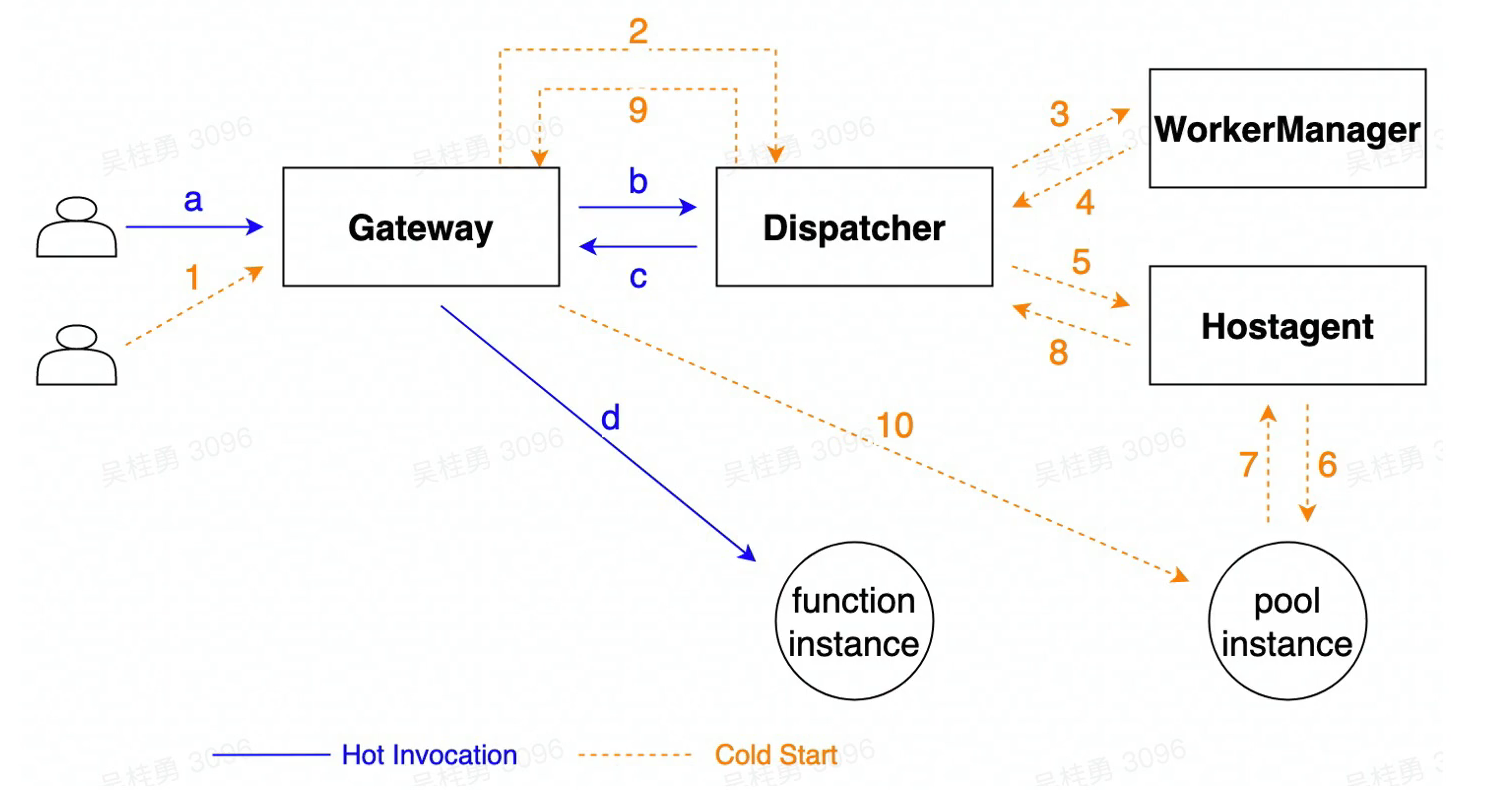

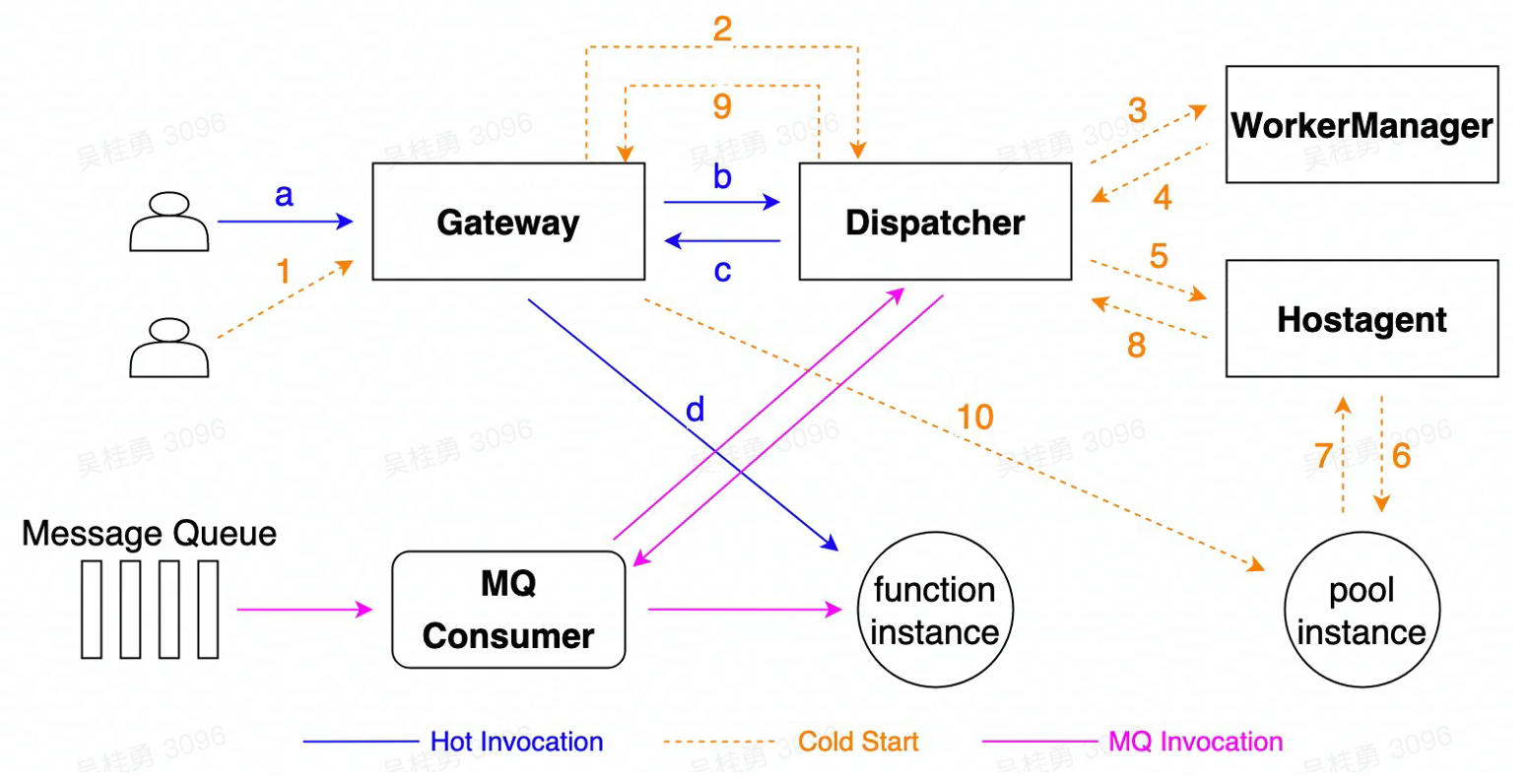

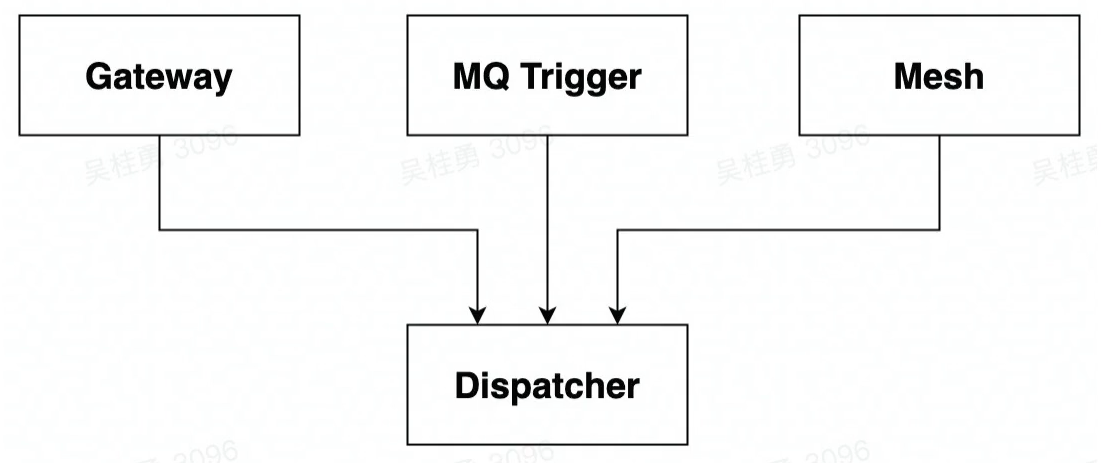

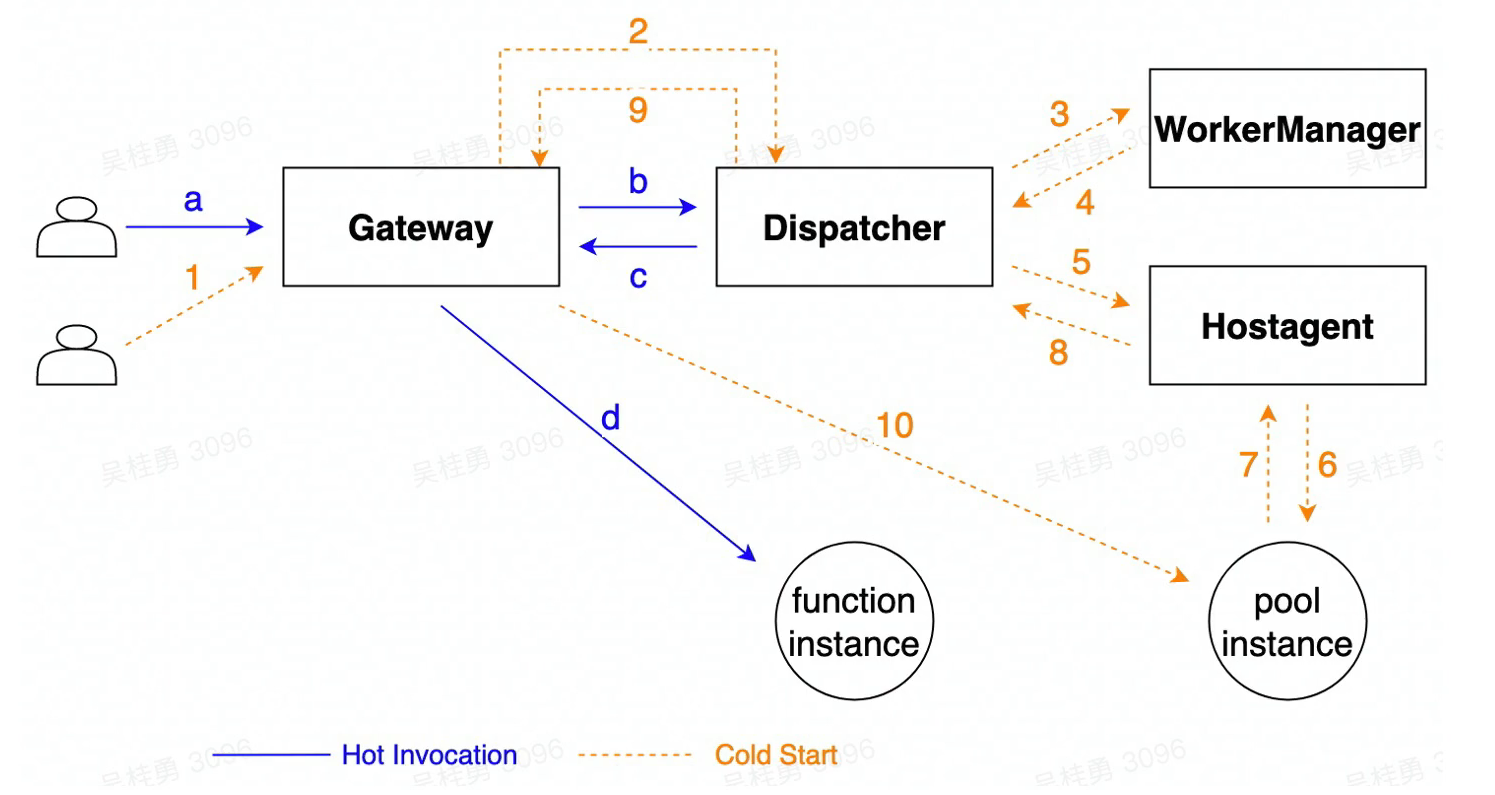

Function invocation path

- Hot invocation
- Cold start
- MQ trigger invocation

MQ trigger traffic is high-volume and does not need very precise routing, so bypassing the Gateway improves performance a lot!!!

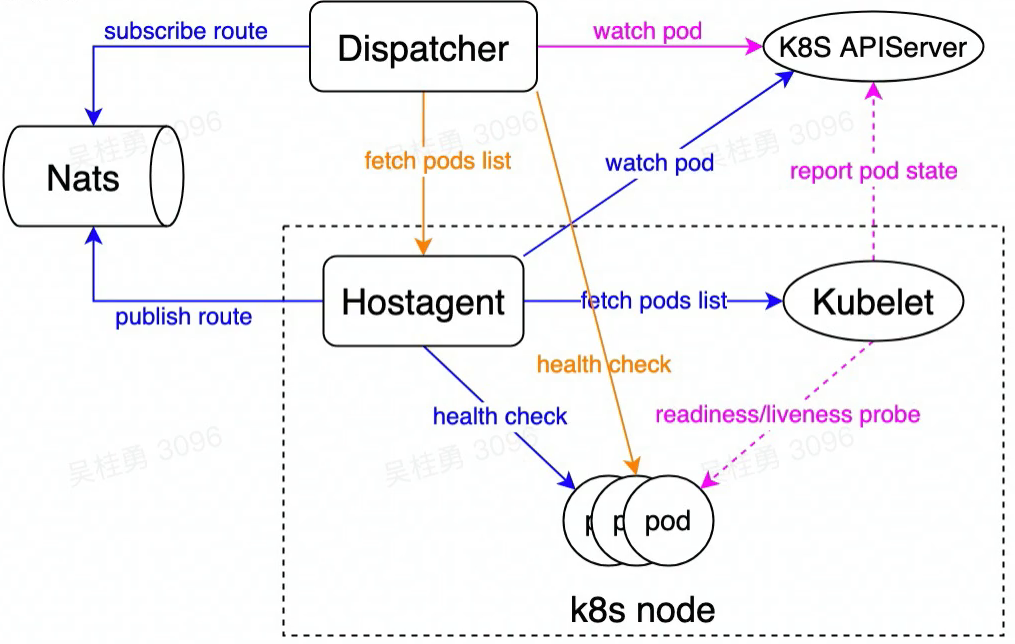

Function route management

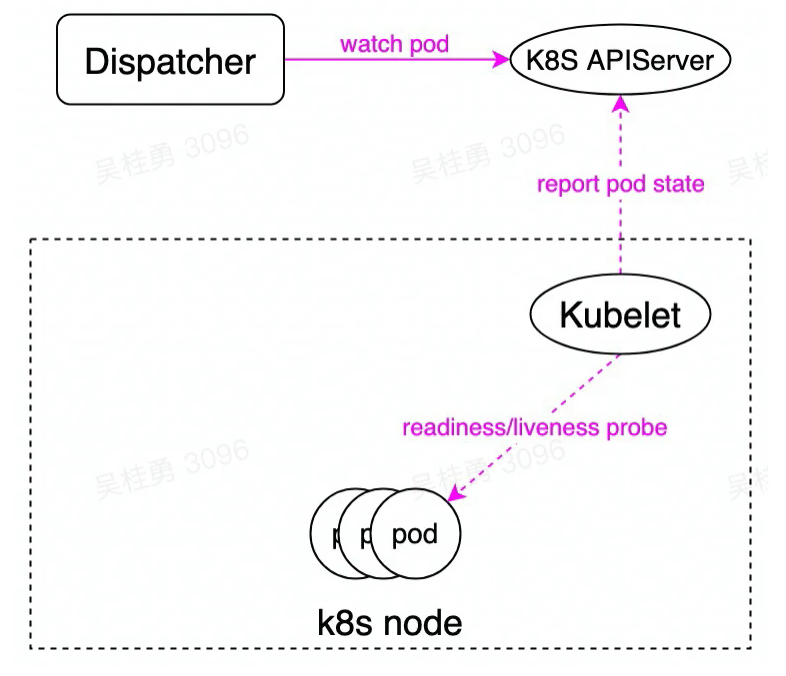

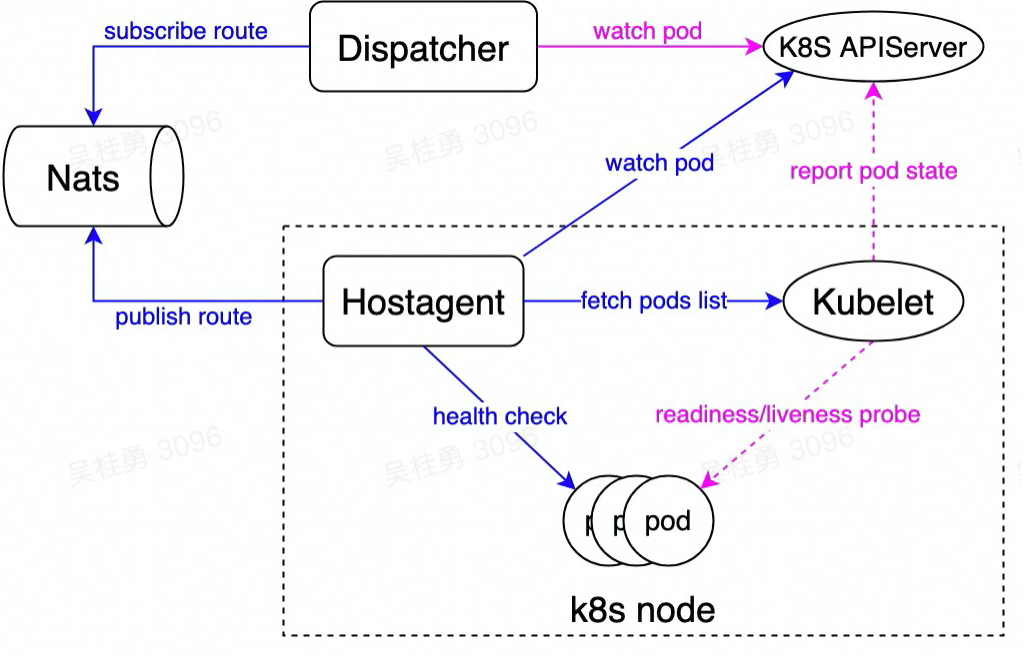

Readiness Check & Service Discovery:

-

health check for function

- /v1/ping 200/404

-

Kubernetes readiness/liveness probe

- readiness probe: 2s * 3

- liveness probe: 45s * 3

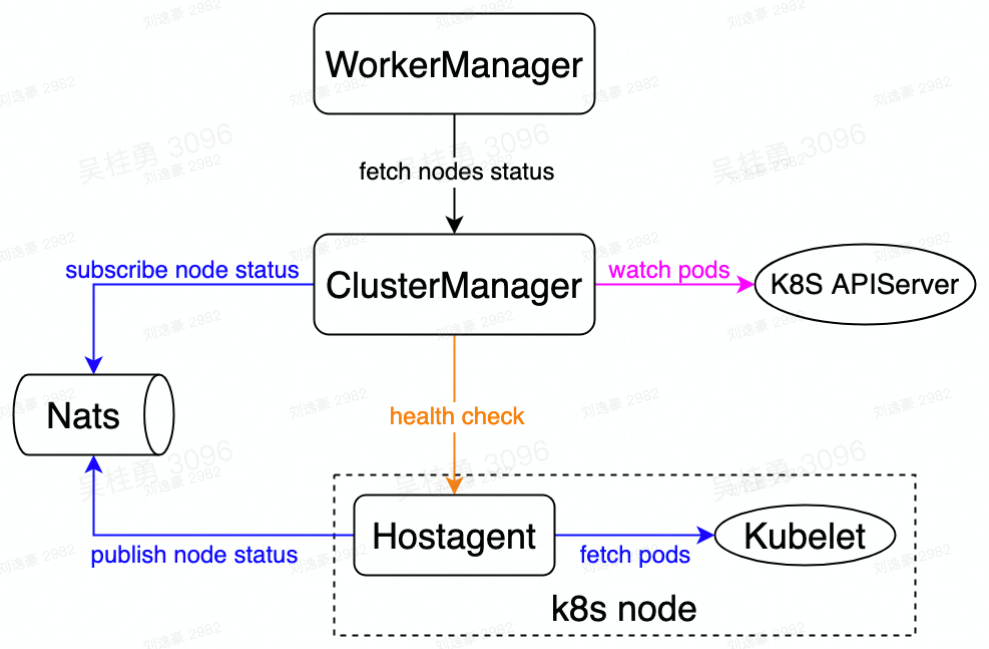

- Hostagent local health check

- tolerate kubelet failure

- pub/sub with Nats

- Dispatcher remote health check

- Tolerate network isolation
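One reading of "2s * 3" is a probe period of 2 s with a failure threshold of 3 consecutive failures (and 45 s × 3 for liveness). Under that assumption, a dead instance keeps its status for roughly period × threshold: about 6 s for readiness and 135 s for liveness. The `ProbeTracker` below is a hypothetical sketch of that counting logic, assuming a 200 from `/v1/ping` counts as a success; it is not the Kubernetes or ByteFaaS implementation.

```python
from dataclasses import dataclass


@dataclass
class ProbeTracker:
    """Counts consecutive probe failures, in the style of a failure threshold."""
    period_s: float
    failure_threshold: int
    healthy: bool = True
    consecutive_failures: int = 0

    def record(self, ok: bool) -> bool:
        if ok:
            # A single success recovers the instance (success threshold of 1).
            self.consecutive_failures = 0
            self.healthy = True
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.healthy = False
        return self.healthy

    def detection_time_s(self) -> float:
        # Approximate time before a dead instance is marked unhealthy.
        return self.period_s * self.failure_threshold
```

The short readiness window pulls a failing instance out of routing quickly, while the long liveness window avoids restarting pods over brief hiccups.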

Single-instance Failure Tolerance

- Circuit breaker
  - Gateway and MQ trigger
  - Mesh
- Single-instance fault detection and auto-migration (ongoing; the newest feature)

This guarantees that the instances in the function routing table are healthy!!!
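A per-instance circuit breaker is one way to keep unhealthy instances out of the candidate route list. This is a minimal, simplified sketch: `CircuitBreaker`, `healthy_instances`, the thresholds and the cooldown are hypothetical, and the half-open state here admits all traffic rather than a single trial request.

```python
import time
from typing import Callable, Dict, List, Optional


class CircuitBreaker:
    """Per-instance breaker: closed -> open after N failures -> half-open after a cooldown."""

    def __init__(self, failure_threshold: int = 5, cooldown_s: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the breaker is closed

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        # Half-open: once the cooldown has passed, traffic may probe the instance again.
        return self.clock() - self.opened_at >= self.cooldown_s

    def on_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def on_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.failure_threshold:
            # (Re)open the breaker; a failure while half-open restarts the cooldown.
            self.opened_at = self.clock()


def healthy_instances(breakers: Dict[str, CircuitBreaker]) -> List[str]:
    # Only instances whose breaker admits traffic stay routable.
    return [addr for addr, cb in breakers.items() if cb.allow()]
```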

Function invocation concurrency control

- Request concurrency
  - the number of requests the function processes simultaneously
- Concurrency limit for function
  - restrict single-instance concurrency to cap the load on each function instance
  - restrict total request concurrency for the function to prevent downstream services or databases from being overwhelmed

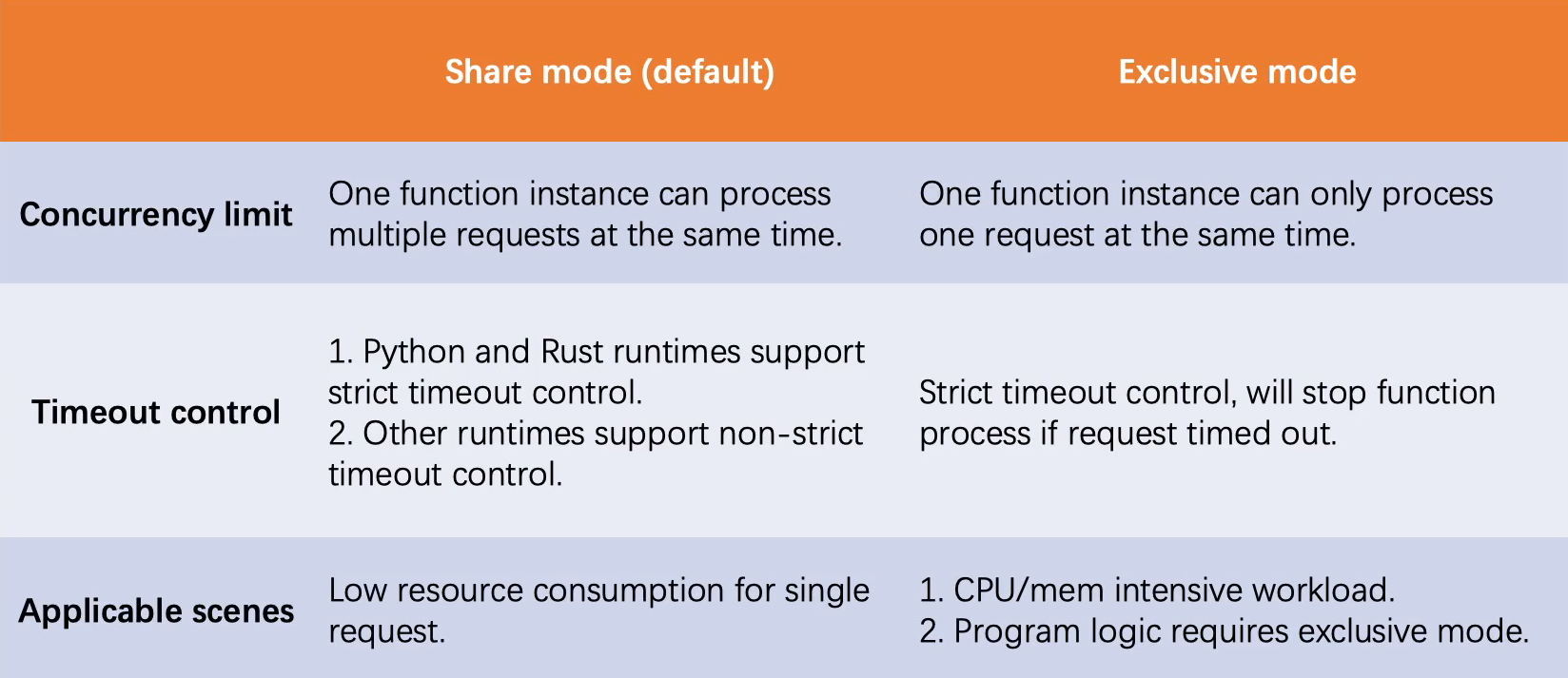

- Share/Exclusive mode

Share/Exclusive Mode

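In general, shared mode is recommended, since a single request usually consumes few resources. Exclusive mode wastes resources and is not suitable for high-traffic, high-concurrency scenarios: the pressure on the backend becomes too high!!!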
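The two concurrency limits can be enforced with a pair of counters. This sketch assumes that exclusive mode means a per-instance limit of 1 and shared mode means a larger limit; `ConcurrencyLimiter` and its parameters are hypothetical, not the ByteFaaS API.

```python
import threading
from typing import Dict


class ConcurrencyLimiter:
    """Enforces a per-instance limit and a per-function total limit on in-flight requests."""

    def __init__(self, instance_limit: int, function_limit: int) -> None:
        self.instance_limit = instance_limit   # 1 models exclusive mode (assumption)
        self.function_limit = function_limit   # protects downstream services/databases
        self.in_flight: Dict[str, int] = {}    # instance address -> current requests
        self.total = 0
        self.lock = threading.Lock()

    def try_acquire(self, instance: str) -> bool:
        with self.lock:
            current = self.in_flight.get(instance, 0)
            if current >= self.instance_limit or self.total >= self.function_limit:
                return False  # caller picks another instance, cold starts, or rejects
            self.in_flight[instance] = current + 1
            self.total += 1
            return True

    def release(self, instance: str) -> None:
        with self.lock:
            self.in_flight[instance] -= 1
            self.total -= 1
```

The cost difference is simple arithmetic: with a per-instance limit of 10, 1,000 concurrent requests need at least 100 instances; with a limit of 1 they need 1,000.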

Concurrency control

- Pre-scaling by concurrency utilization
  - detects load changes faster and scales up the function
- Concurrency-aware routing policy
  - achieves load balancing among function instances
- In-memory concurrency counter & function sharding strategy
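With in-memory concurrency counters, routing and pre-scaling both become small computations. The sketch below uses least-in-flight selection as the concurrency-aware policy and scales so that average utilization stays under a target; `pick_instance`, `desired_instances` and the 0.7 target are hypothetical choices, not ByteFaaS's exact policy.

```python
import math
from typing import Dict, Optional


def pick_instance(in_flight: Dict[str, int], instance_limit: int) -> Optional[str]:
    """Least-in-flight routing: choose the instance with the most spare concurrency."""
    candidates = {addr: n for addr, n in in_flight.items() if n < instance_limit}
    if not candidates:
        return None  # no route concurrency left: triggers a cold start
    # Ties are broken by address so the choice is deterministic.
    return min(candidates, key=lambda addr: (candidates[addr], addr))


def desired_instances(total_in_flight: int, instance_limit: int,
                      target_utilization: float = 0.7) -> int:
    """Pre-scale so that average concurrency utilization stays at or below the target."""
    if total_in_flight == 0:
        return 0
    return math.ceil(total_in_flight / (instance_limit * target_utilization))
```

For example, 29 in-flight requests with a limit of 10 per instance and a 0.7 target ask for 5 instances, before any instance is actually saturated.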

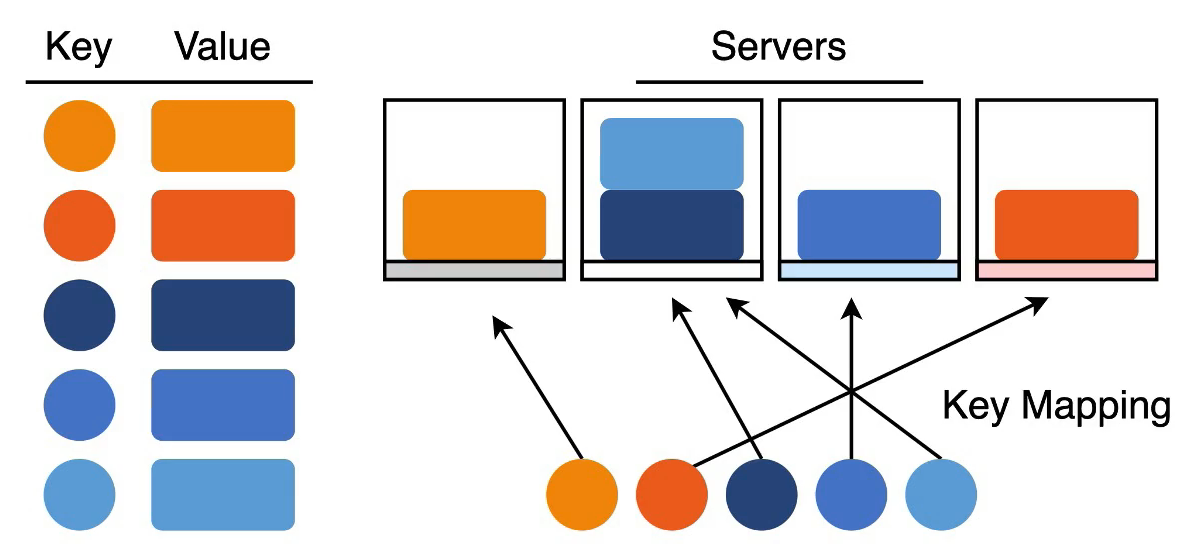

Function sharding strategy

- Load balancing
  - each server carries roughly the same amount of load
- Scalability
  - we can add and remove servers without too much effort
- Lookup speed
  - given a key, we can quickly identify the correct server

Hashing is popular!!!

- Consistent hashing, once again!!!
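For reference, a consistent hash ring places servers (as many virtual nodes) and keys on the same hash space; a key belongs to the first server point clockwise from its hash, so adding a server only moves keys onto that new server. This is a textbook sketch; `ConsistentHashRing`, SHA-256 and 100 virtual nodes are illustrative choices, not something the notes specify.

```python
import bisect
import hashlib
from typing import Dict, Iterable, List


def _hash(value: str) -> int:
    # 64-bit ring position derived from SHA-256.
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")


class ConsistentHashRing:
    """Hash ring with virtual nodes; a key belongs to the first point clockwise from its hash."""

    def __init__(self, servers: Iterable[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._owner: Dict[int, str] = {}  # ring position -> server
        self._points: List[int] = []      # sorted ring positions
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        for i in range(self.vnodes):
            point = _hash(f"{server}#{i}")
            self._owner[point] = server
            bisect.insort(self._points, point)

    def remove(self, server: str) -> None:
        self._points = [p for p in self._points if self._owner[p] != server]
        self._owner = {p: s for p, s in self._owner.items() if s != server}

    def lookup(self, key: str) -> str:
        if not self._points:
            raise LookupError("ring is empty")
        # First point strictly after the key's hash, wrapping around the ring.
        idx = bisect.bisect_right(self._points, _hash(key)) % len(self._points)
        return self._owner[self._points[idx]]
```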

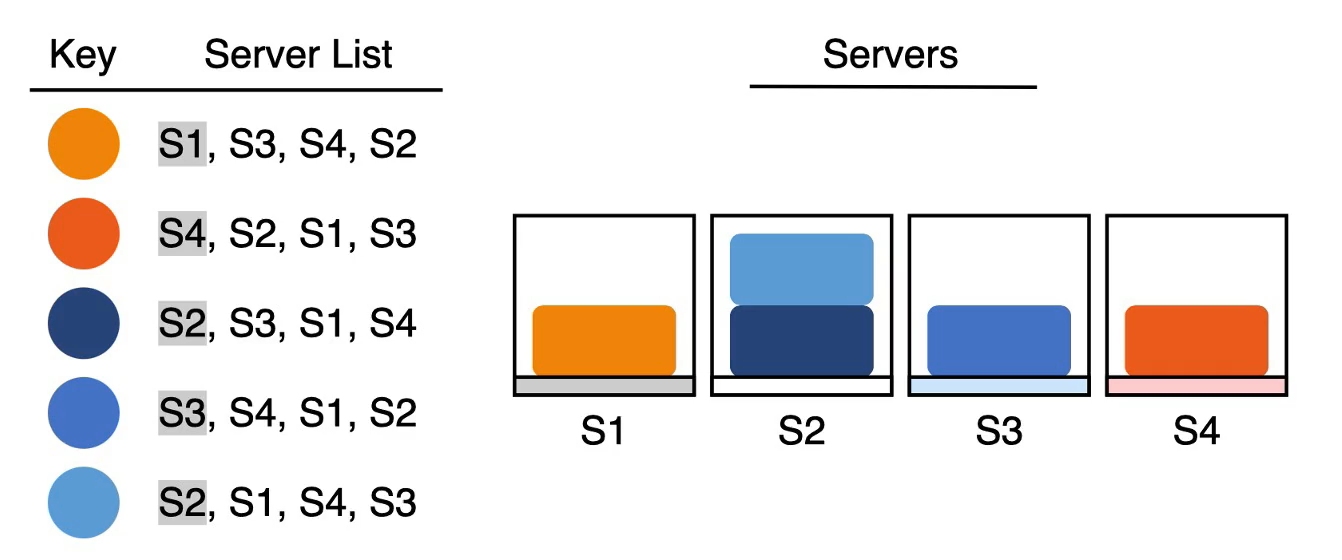

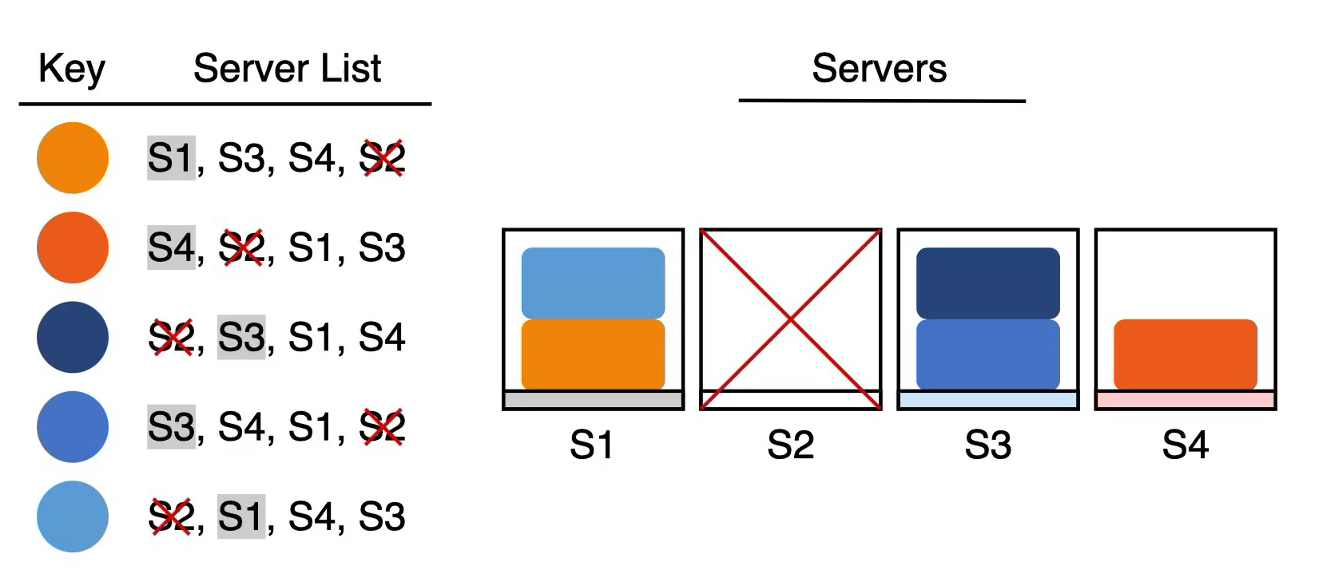

Sharding functions with Rendezvous Hashing

- each key produces its own pseudo-random ordering of the servers (by hashing the key together with each server) and picks the first server in that list
- if our first-choice server goes offline, we simply move the key to the second server in its list
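Rendezvous (highest-random-weight) hashing needs no ring: score every server against the key and take the highest score. The sketch below shows that, and that removing a server only moves the keys it owned, because every other key keeps the same first choice. The function names and the SHA-256 scoring are illustrative assumptions.

```python
import hashlib
from typing import Iterable, List


def _score(key: str, server: str) -> int:
    # Hash of (key, server): gives every key its own pseudo-random server ordering.
    digest = hashlib.sha256(f"{key}|{server}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def ranked_servers(key: str, servers: Iterable[str]) -> List[str]:
    """All servers ordered by descending score for this key (highest random weight first)."""
    return sorted(servers, key=lambda s: _score(key, s), reverse=True)


def owner(key: str, live_servers: Iterable[str]) -> str:
    # The first server in the key's list owns it; if that server goes offline,
    # the next server in the same list takes over.
    ranking = ranked_servers(key, live_servers)
    if not ranking:
        raise LookupError("no live servers")
    return ranking[0]
```

Lookup costs O(number of servers) per key, which is acceptable when the server set (for example, a handful of dispatchers) is small.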

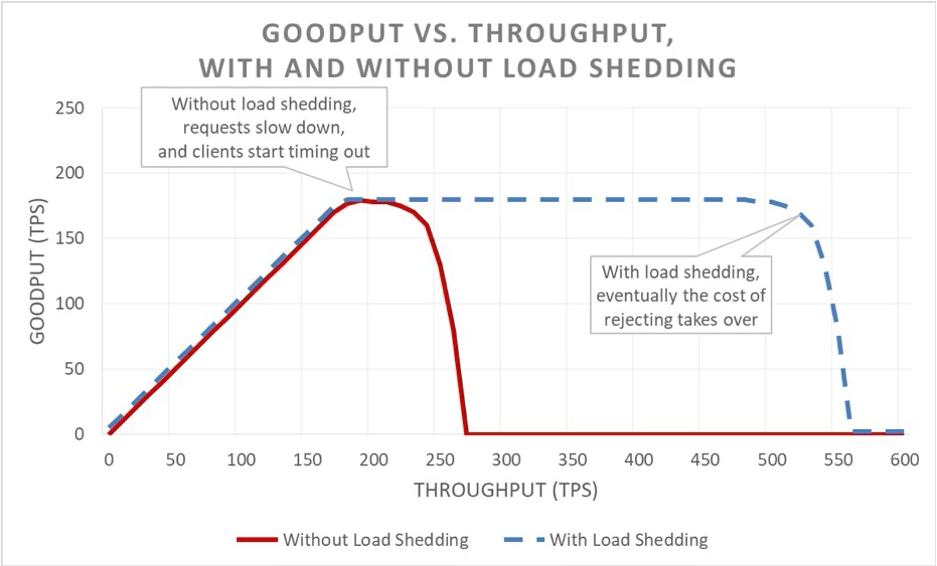

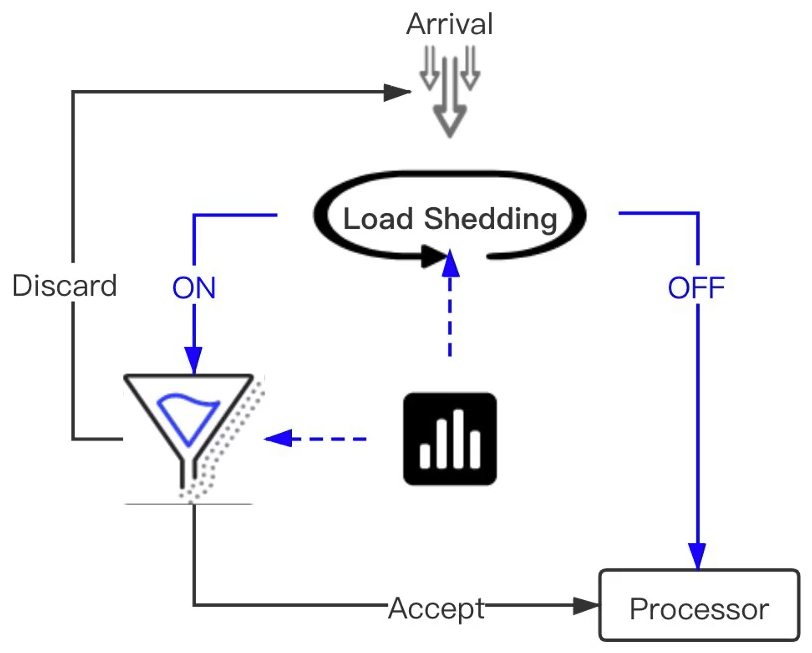

Load shedding

The idea is simple: if the load is saturated and a new request comes in, just reject it!!!

- Prevent the system from getting overloaded
  - Load shedding lets a server maintain its goodput and complete as many requests as it can, even as offered throughput increases.
- Load shedding workflow
  - check server-level metrics/counters to turn load shedding on or off
  - check client-level metrics/counters to limit request rate (choose the right victims)
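The two-step workflow can be sketched with a server-level in-flight counter that switches shedding on and off, plus per-client counters that pick the victims. In this hypothetical `LoadShedder`, the high/low watermarks (hysteresis) and the "fair share" victim rule are assumptions chosen for illustration.

```python
from collections import defaultdict
from typing import DefaultDict


class LoadShedder:
    """Two-level shedding: a server-level switch, then per-client victim selection."""

    def __init__(self, high_watermark: int, low_watermark: int) -> None:
        if not 0 <= low_watermark < high_watermark:
            raise ValueError("need 0 <= low_watermark < high_watermark")
        self.high = high_watermark
        self.low = low_watermark
        self.shedding = False
        self.total = 0  # server-level in-flight counter
        self.per_client: DefaultDict[str, int] = defaultdict(int)  # client-level counters

    def _update_switch(self) -> None:
        # On at the high watermark, off only at the low one, so it does not flap.
        if not self.shedding and self.total >= self.high:
            self.shedding = True
        elif self.shedding and self.total <= self.low:
            self.shedding = False

    def admit(self, client: str) -> bool:
        self._update_switch()
        if self.shedding:
            current = self.per_client.get(client, 0)
            active_clients = len(self.per_client) + (0 if client in self.per_client else 1)
            fair_share = self.high / active_clients
            if current >= fair_share:
                return False  # clients above their fair share become the victims
        self.total += 1
        self.per_client[client] += 1
        return True

    def done(self, client: str) -> None:
        self.total -= 1
        self.per_client[client] -= 1
        if self.per_client[client] == 0:
            del self.per_client[client]
        self._update_switch()
```

Light clients are still admitted while shedding is on, so a production version would also need an absolute cap on in-flight requests.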

Function Cold Start

Why and how to cold start a function instance

- A cold start happens when no route has available concurrency:
  - the function's concurrency limit is exhausted
  - the function is scaled down to zero
- Cold start workflow
  - take a pod from the warming pool
  - download the function code
  - load the function
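The three cold start steps can be sketched as a fallback path taken when no existing route has spare concurrency. Everything here is hypothetical: `Pod`, `ColdStarter`, and the injected `download_code`/`load_function` callables (the latter standing in for something like the agent's `/v1/load`); the hot path is simplified to "reuse the first instance".

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Pod:
    name: str
    runtime: str
    function_id: Optional[str] = None  # None while it is a generic warming pod


@dataclass
class ColdStarter:
    """Hypothetical dispatcher-side cold start: warming pool -> download code -> load."""
    warming_pool: Dict[str, List[Pod]]           # runtime -> idle generic pods
    download_code: Callable[[str], bytes]        # function id -> code package
    load_function: Callable[[Pod, bytes], None]  # loads code into a pod
    routes: Dict[str, List[Pod]] = field(default_factory=dict)

    def get_instance(self, function_id: str, runtime: str, has_spare_concurrency: bool) -> Pod:
        if has_spare_concurrency and self.routes.get(function_id):
            return self.routes[function_id][0]  # hot path: reuse an existing instance
        # Cold start: concurrency limit exhausted or function scaled to zero.
        pool = self.warming_pool.get(runtime, [])
        if not pool:
            raise RuntimeError(f"warming pool for {runtime} is empty")
        pod = pool.pop()                            # 1. take a pod from the warming pool
        code = self.download_code(function_id)      # 2. download the function code
        self.load_function(pod, code)               # 3. load the function into the pod
        pod.function_id = function_id
        self.routes.setdefault(function_id, []).append(pod)
        return pod
```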

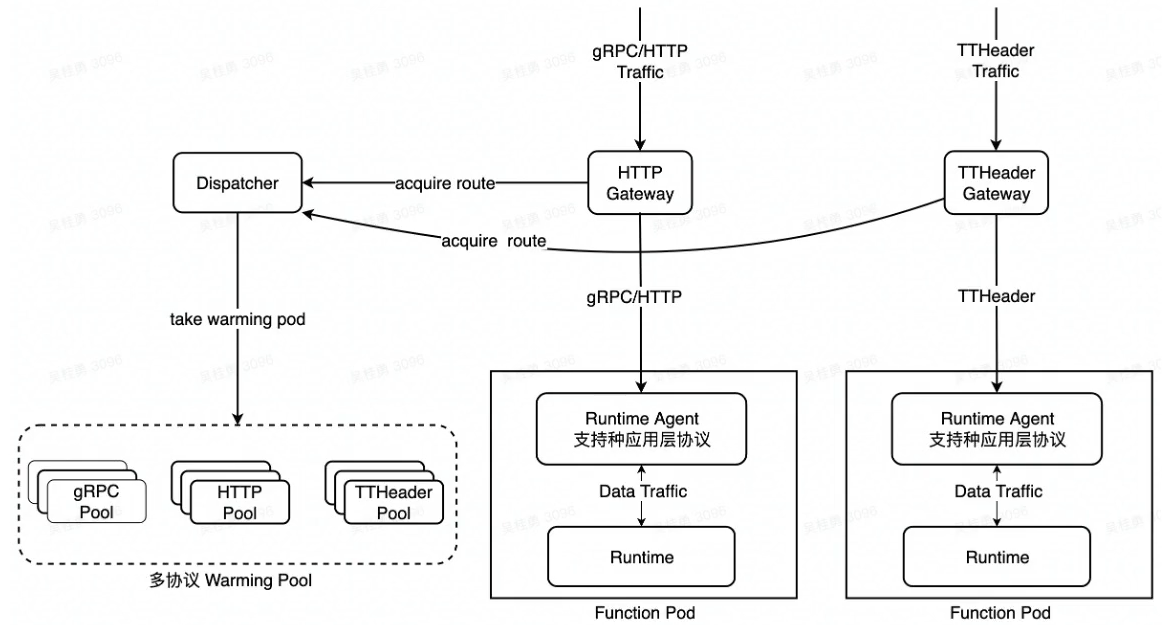

Warming Pool Management

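- Warming pool provides 'generic' standby pods
  - supports different runtimes and protocols
  - pool pods are shared by functions

Some pods are kept running idle so they are ready for later use. They run only the Runtime-agent, with no specific function loaded, so they act as a shared resource pool.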

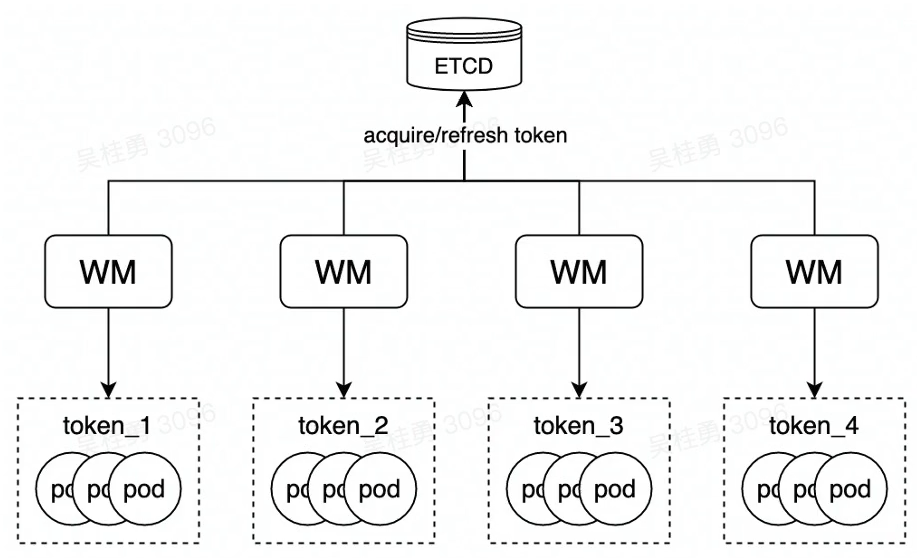

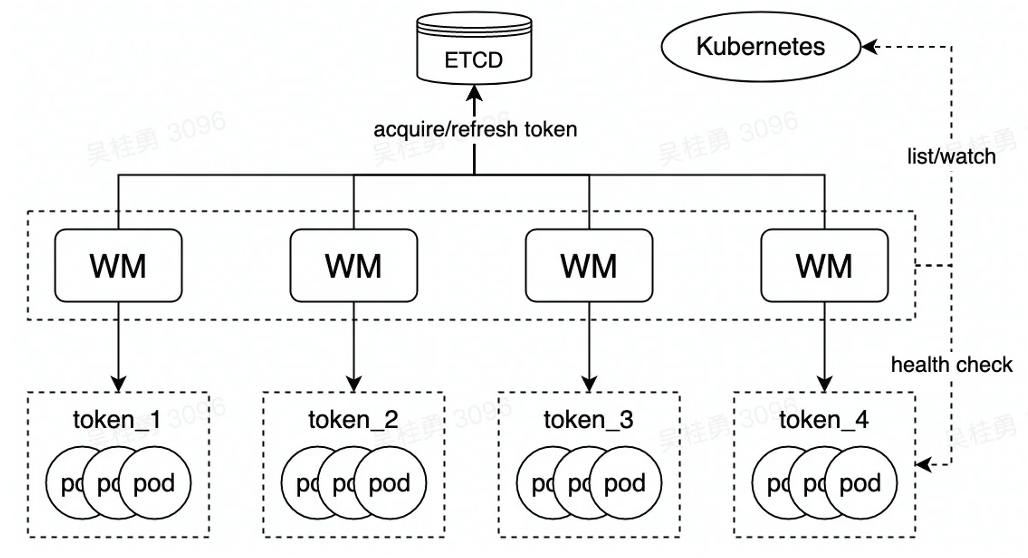

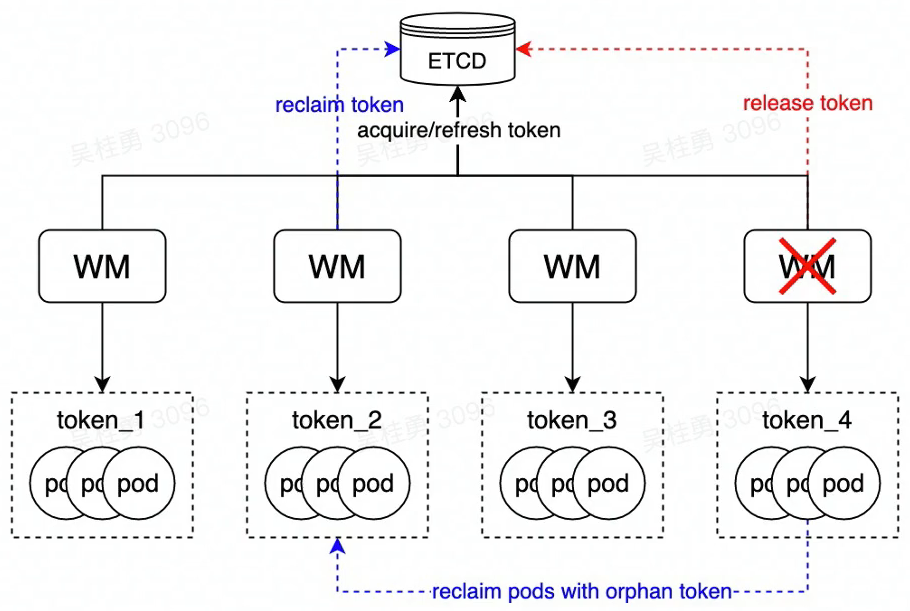

- Lease-based sharded pool management
  - avoid repeated pod allocation (each pod carries a token, which is the lease; it must be renewed periodically to refresh the token. This ensures a pod is managed by only one WM and is never allocated twice)
  - light-weight list/watch & health check
  - pod handover when adding or removing servers
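The lease idea (one token per pod, renewed periodically, so only one manager owns a pod) can be sketched with an in-memory table. In a real deployment this state would live in shared storage with atomic updates; `Lease`, `LeaseTable`, the TTL and the incrementing token are hypothetical illustrations, not the actual WM implementation.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Lease:
    holder: str        # which manager shard owns the pod
    token: int         # bumped on every new acquisition
    expires_at: float


class LeaseTable:
    """Each pod carries a lease; only the holder of an unexpired lease may allocate it."""

    def __init__(self, ttl_s: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_s = ttl_s
        self.clock = clock
        self.leases: Dict[str, Lease] = {}

    def acquire(self, pod: str, manager: str) -> Optional[int]:
        now = self.clock()
        lease = self.leases.get(pod)
        if lease and lease.expires_at > now:
            return None  # someone still holds a live lease: do not allocate twice
        token = lease.token + 1 if lease else 1
        self.leases[pod] = Lease(manager, token, now + self.ttl_s)
        return token

    def renew(self, pod: str, manager: str, token: int) -> bool:
        now = self.clock()
        lease = self.leases.get(pod)
        if not lease or lease.holder != manager or lease.token != token or lease.expires_at <= now:
            return False  # lease lost: this manager must stop managing the pod
        lease.expires_at = now + self.ttl_s
        return True
```

A manager that crashes simply stops renewing; once its lease expires, another manager can take over the pod, which also covers handover when servers are added or removed.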

Function Code Management

- Download the function code package
  - lookup order: local cache > Nginx cache > Luban
- Cache/pre-load code to the local store
  - track disk utilization / available size
  - store metadata of historical usage
  - LRU replacement policy
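A local code cache bounded by a disk budget with LRU eviction, plus the tiered lookup, might look like this. `CodeCache`, `fetch_code`, sizes in bytes and the tier callables are hypothetical stand-ins; the real Nginx and Luban fetches are not modeled.

```python
from collections import OrderedDict
from typing import Callable, List, Tuple


class CodeCache:
    """Local code-package cache bounded by a disk budget, evicting least recently used packages."""

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity = capacity_bytes
        self.used = 0
        self.entries: "OrderedDict[str, int]" = OrderedDict()  # package key -> size

    def get(self, key: str) -> bool:
        if key not in self.entries:
            return False
        self.entries.move_to_end(key)  # mark as most recently used
        return True

    def put(self, key: str, size: int) -> None:
        if size > self.capacity:
            return  # too large to cache locally
        if key in self.entries:
            self.used -= self.entries.pop(key)
        while self.used + size > self.capacity:
            _, evicted_size = self.entries.popitem(last=False)  # evict the LRU entry
            self.used -= evicted_size
        self.entries[key] = size
        self.used += size


def fetch_code(key: str, size: int, cache: CodeCache,
               remote_tiers: List[Tuple[str, Callable[[str], bool]]]) -> str:
    """Check the local cache, then each remote tier in order; return where the code came from."""
    if cache.get(key):
        return "local"
    for name, has_key in remote_tiers:
        if has_key(key):
            cache.put(key, size)  # keep a local copy for the next cold start
            return name
    raise LookupError(key)
```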


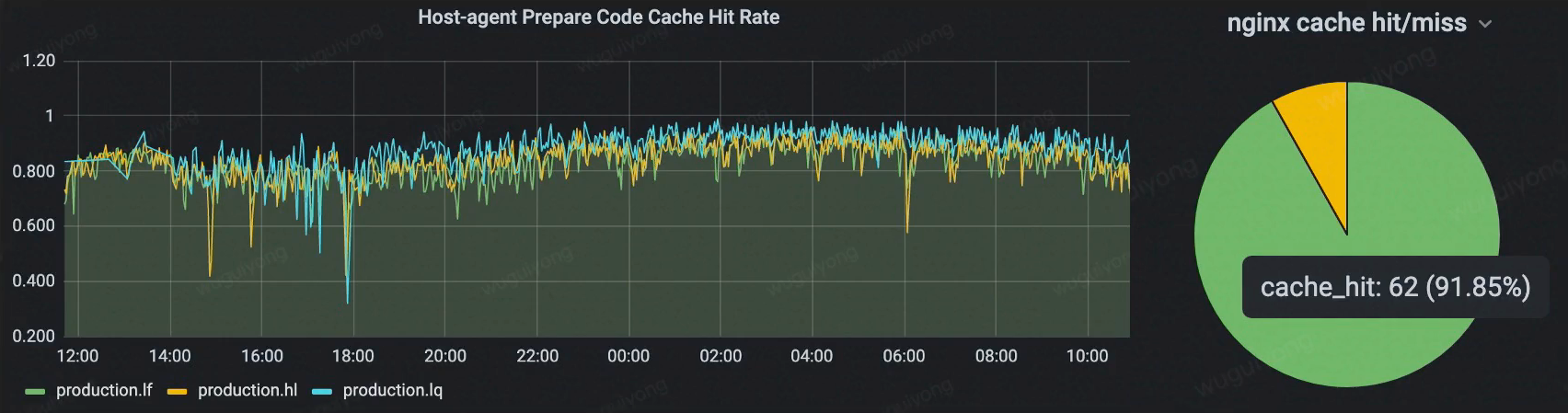

Code cache efficiency

- cache hit rate by tier: local cache (80~90%) > Nginx (~90%) > Luban

- improve Nginx cache scalability (ongoing)

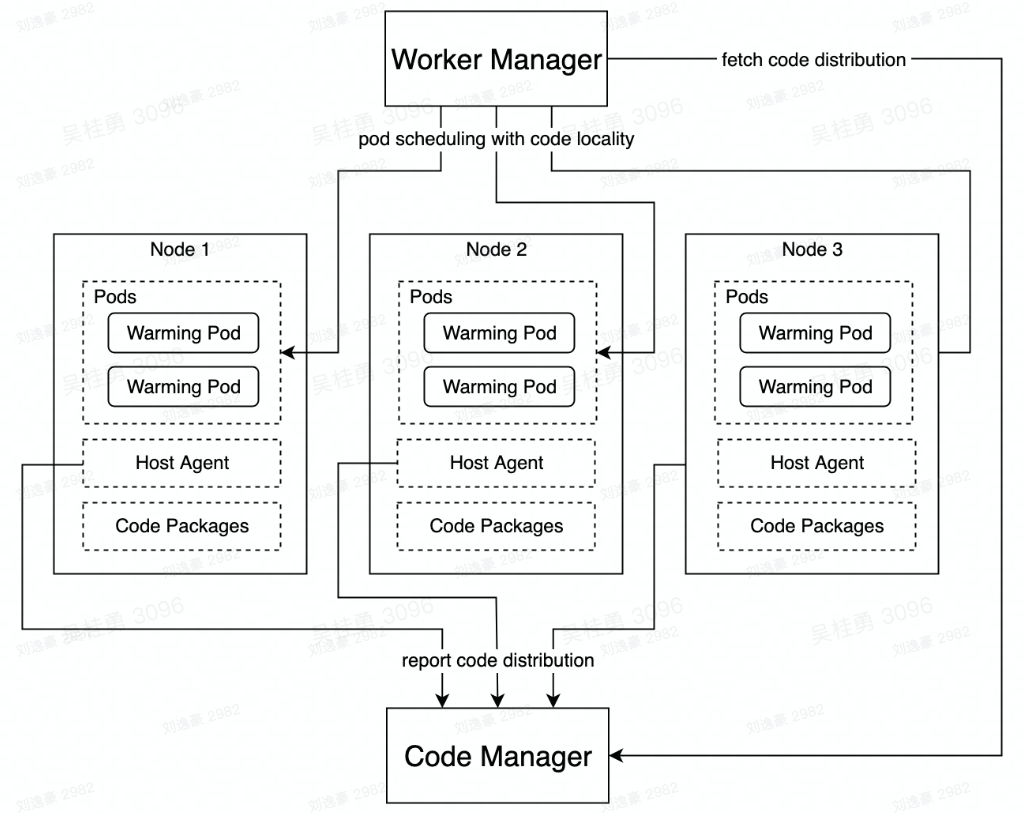

Warming Pod Scheduling

- Code-distribution-aware scheduling
  - report and gather code distribution
  - affinity scheduling with code locality
- Node-state-aware scheduling
  - skip pods on unhealthy nodes
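Both scheduling rules fit in a simple selection loop: never pick a pod on an unhealthy node, and prefer a pod whose node already caches the function's code. The `WarmPod` type, `choose_warm_pod`, and the `node_code` map (standing in for the gathered code-distribution reports) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class WarmPod:
    name: str
    node: str


def choose_warm_pod(function_key: str, pods: List[WarmPod],
                    node_code: Dict[str, Set[str]],
                    unhealthy_nodes: Set[str]) -> Optional[WarmPod]:
    """Prefer a pod on a node that already caches the code; never use unhealthy nodes."""
    fallback: Optional[WarmPod] = None
    for pod in pods:
        if pod.node in unhealthy_nodes:
            continue  # node-state awareness
        if function_key in node_code.get(pod.node, set()):
            return pod  # code locality: no download needed
        if fallback is None:
            fallback = pod  # first healthy pod, used if no node has the code
    return fallback
```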

Future Work

- High scalability
  - high-throughput pod scheduling and launching
  - high-throughput code distribution
  - low-latency function loading
- High availability
  - fault detection and self-healing
  - loose coupling and automatic failover
- Intelligent routing policy
  - smarter traffic/load control to improve QoS
  - reduce ops responsibilities for users

Summary

- Function Runtime
  - function runtime standard
  - function instance lifecycle
- Function Routing Policy
  - function route management
  - function invocation concurrency control
  - load shedding
- Function Cold Start
  - warming pool management
  - function code management
  - warming pod scheduling