1. kgsl_driver definition

```c
struct kgsl_driver {
	struct cdev cdev;
	dev_t major;
	struct class *class;
	struct device virtdev;
	struct kobject *ptkobj;
	struct kobject *prockobj;
	struct kgsl_device *devp[1];
	struct list_head process_list;
	struct list_head pagetable_list;
	spinlock_t ptlock;
	struct mutex process_mutex;
	rwlock_t proclist_lock;
	struct mutex devlock;
	struct {
		atomic_long_t vmalloc;
		atomic_long_t vmalloc_max;
		atomic_long_t page_alloc;
		atomic_long_t page_alloc_max;
		atomic_long_t coherent;
		atomic_long_t coherent_max;
		atomic_long_t secure;
		atomic_long_t secure_max;
		atomic_long_t mapped;
		atomic_long_t mapped_max;
	} stats;
	unsigned int full_cache_threshold;
	struct workqueue_struct *workqueue;
	struct workqueue_struct *mem_workqueue;
	struct kthread_worker worker;
	struct task_struct *worker_thread;
};
```

2. kgsl_driver initialization

```c
struct kgsl_driver kgsl_driver = {
	.process_mutex = __MUTEX_INITIALIZER(kgsl_driver.process_mutex),
	.proclist_lock = __RW_LOCK_UNLOCKED(kgsl_driver.proclist_lock),
	.ptlock = __SPIN_LOCK_UNLOCKED(kgsl_driver.ptlock),
	.devlock = __MUTEX_INITIALIZER(kgsl_driver.devlock),
	.full_cache_threshold = SZ_16M,
	.stats.vmalloc = ATOMIC_LONG_INIT(0),
	.stats.vmalloc_max = ATOMIC_LONG_INIT(0),
	.stats.page_alloc = ATOMIC_LONG_INIT(0),
	.stats.page_alloc_max = ATOMIC_LONG_INIT(0),
	.stats.coherent = ATOMIC_LONG_INIT(0),
	.stats.coherent_max = ATOMIC_LONG_INIT(0),
	.stats.secure = ATOMIC_LONG_INIT(0),
	.stats.secure_max = ATOMIC_LONG_INIT(0),
	.stats.mapped = ATOMIC_LONG_INIT(0),
	.stats.mapped_max = ATOMIC_LONG_INIT(0),
};
```
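Each counter in kgsl_driver.stats is paired with a `_max` field that records its high-water mark. The initializer sets every counter to zero using nested designators such as `.stats.vmalloc`. The sketch below shows one way to maintain such a pair with C11 atomics in userspace. It assumes the add path raises `_max` whenever the running total exceeds it. The names demo_driver, demo_stats_add() and demo_stats_sub() are hypothetical, and the driver's own update helper is not shown in this document.

```c
#include <assert.h>
#include <stdatomic.h>

/* Hypothetical userspace mirror of one cur/max pair from kgsl_driver.stats */
struct demo_driver {
	struct {
		atomic_long vmalloc;      /* bytes currently in use */
		atomic_long vmalloc_max;  /* largest value vmalloc has reached */
	} stats;
};

/* Nested designators, as in the kgsl_driver initializer */
static struct demo_driver demo_driver = {
	.stats.vmalloc = 0,
	.stats.vmalloc_max = 0,
};

/* Add to the running total and raise the high-water mark if it was exceeded */
static void demo_stats_add(long size, atomic_long *stat, atomic_long *max)
{
	long now, old_max;

	assert(size >= 0);
	now = atomic_fetch_add(stat, size) + size;
	old_max = atomic_load(max);
	/* CAS loop: max only ever moves up, even with concurrent adders */
	while (now > old_max &&
	       !atomic_compare_exchange_weak(max, &old_max, now))
		;
}

/* Frees lower only the running total; max keeps the peak */
static void demo_stats_sub(long size, atomic_long *stat)
{
	atomic_fetch_sub(stat, size);
}
```

For example, adding 4096 and then 8192 bytes, and then freeing 4096, leaves `vmalloc` at 8192 while `vmalloc_max` stays at the 12288 peak.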

3. kgsl_fops

```c
static const struct file_operations kgsl_fops = {
	.owner = THIS_MODULE,
	.release = kgsl_release,
	.open = kgsl_open,
	.mmap = kgsl_mmap,
	.read = kgsl_read,
	.get_unmapped_area = kgsl_get_unmapped_area,
	.unlocked_ioctl = kgsl_ioctl,
	.compat_ioctl = kgsl_compat_ioctl,
};
```
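kgsl_fops is a table of function pointers that the VFS calls on behalf of user space:

- `.open` runs on open(2).
- `.release` runs when the last reference to the open file is dropped.
- `.unlocked_ioctl` handles ioctl(2).
- `.compat_ioctl` handles ioctl(2) from a 32-bit process on a 64-bit kernel.

The sketch below is a reduced userspace model of that dispatch. The types demo_file and demo_fops and the functions demo_vfs_open()/demo_vfs_release() are hypothetical stand-ins, not kernel types.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, trimmed-down stand-ins for struct file and struct file_operations */
struct demo_file {
	void *private_data;
};

struct demo_fops {
	int (*open)(struct demo_file *filp);
	int (*release)(struct demo_file *filp);
};

static int demo_open_calls;

static int demo_open(struct demo_file *filp)
{
	static int token = 42;

	demo_open_calls++;
	filp->private_data = &token;   /* kgsl_open stores dev_priv here */
	return 0;
}

static int demo_release(struct demo_file *filp)
{
	filp->private_data = NULL;
	return 0;
}

/* Designated initializers; members left out stay NULL */
static const struct demo_fops demo_fops = {
	.open = demo_open,
	.release = demo_release,
};

/* What a VFS-like layer does: call the hook only if it is set */
static int demo_vfs_open(const struct demo_fops *fops, struct demo_file *filp)
{
	assert(filp != NULL);
	return fops->open ? fops->open(filp) : 0;
}

static int demo_vfs_release(const struct demo_fops *fops, struct demo_file *filp)
{
	return fops->release ? fops->release(filp) : 0;
}
```

Because the table is `const` and filled at compile time, the driver only has to register it once, through its cdev, for every open of the device node to reach kgsl_open.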

3.1 kgsl_open

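```c
static int kgsl_open(struct inode *inodep, struct file *filep)
{
	int result;
	struct kgsl_device_private *dev_priv;
	struct kgsl_device *device;
	unsigned int minor = iminor(inodep);

	/* Map the char-device minor number to its kgsl_device */
	device = kgsl_get_minor(minor);
	if (device == NULL) {
		pr_err("kgsl: No device found\n");
		return -ENODEV;
	}

	/* Resume the GPU through runtime PM before using it */
	result = pm_runtime_get_sync(&device->pdev->dev);
	if (result < 0) {
		dev_err(device->dev,
			"Runtime PM: Unable to wake up the device, rc = %d\n",
			result);
		return result;
	}
	/* A positive return (device already active) also counts as success */
	result = 0;

	/* Device-specific allocation; for Adreno, adreno_device_private_create() */
	dev_priv = device->ftbl->device_private_create();
	if (dev_priv == NULL) {
		result = -ENOMEM;
		goto err;
	}

	dev_priv->device = device;
	filep->private_data = dev_priv;

	/* Take an open_count reference; the first opener runs first_open */
	result = kgsl_open_device(device);
	if (result)
		goto err;

	/*
	 * Find or create the per-process private struct. This must come
	 * after the first open so that the global pagetable mappings are
	 * set up before the per-process pagetable is created.
	 */
	dev_priv->process_priv = kgsl_process_private_open(device);
	if (IS_ERR(dev_priv->process_priv)) {
		result = PTR_ERR(dev_priv->process_priv);
		kgsl_close_device(device);	/* undo kgsl_open_device() */
		goto err;
	}

	/* The success path also falls through here with result == 0 */
err:
	if (result) {
		filep->private_data = NULL;
		kfree(dev_priv);		/* kfree(NULL) is a no-op */
		pm_runtime_put(&device->pdev->dev);	/* balance the get above */
	}

	return result;
}
```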
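kgsl_open() acquires resources in order: a runtime-PM reference, then dev_priv, then an open_count reference, then process_priv. All of them are unwound from a single `err:` label. The success path also falls through that label, and the `if (result)` guard keeps it from freeing anything. The userspace sketch below models only this bookkeeping. The counters, the fail_step knob and demo_kgsl_open() are hypothetical.

One kernel detail is worth noting: pm_runtime_get_sync() raises the device's usage count even when it fails. The early `return result;` after a failed resume therefore leaves that count raised. Newer kernels provide pm_runtime_resume_and_get() for this case.

```c
#include <assert.h>
#include <errno.h>
#include <stdlib.h>

/* Hypothetical bookkeeping that mirrors what kgsl_open() acquires */
static int pm_refs;     /* models the runtime-PM usage count */
static int open_count;  /* models device->open_count */
static int fail_step;   /* test knob: 0 none, 1 alloc, 2 device open, 3 process priv */

struct demo_priv {
	int unused;
};

static int demo_kgsl_open(struct demo_priv **out)
{
	struct demo_priv *priv = NULL;
	int result;

	assert(out != NULL);
	pm_refs++;                              /* pm_runtime_get_sync() succeeded */

	priv = (fail_step == 1) ? NULL : malloc(sizeof(*priv));
	if (priv == NULL) {
		result = -ENOMEM;
		goto err;
	}

	result = (fail_step == 2) ? -EIO : 0;   /* kgsl_open_device() */
	if (result)
		goto err;
	open_count++;

	if (fail_step == 3) {                   /* kgsl_process_private_open() failed */
		result = -EEXIST;
		open_count--;                       /* kgsl_close_device() */
		goto err;
	}

	*out = priv;
err:                                        /* success falls through with result == 0 */
	if (result) {
		free(priv);                         /* free(NULL) is a no-op, like kfree() */
		pm_refs--;                          /* pm_runtime_put() */
	}
	return result;
}
```

Whichever step fails, the counters return to zero. Only a full success leaves one reference of each kind held.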

3.2 adreno_device_private_create

```c
static struct kgsl_device_private *adreno_device_private_create(void)
{
	struct adreno_device_private *adreno_priv =
			kzalloc(sizeof(*adreno_priv), GFP_KERNEL);

	if (adreno_priv) {
		INIT_LIST_HEAD(&adreno_priv->perfcounter_list);
		/* Return the embedded generic part, which is the first member */
		return &adreno_priv->dev_priv;
	}

	return NULL;
}
```

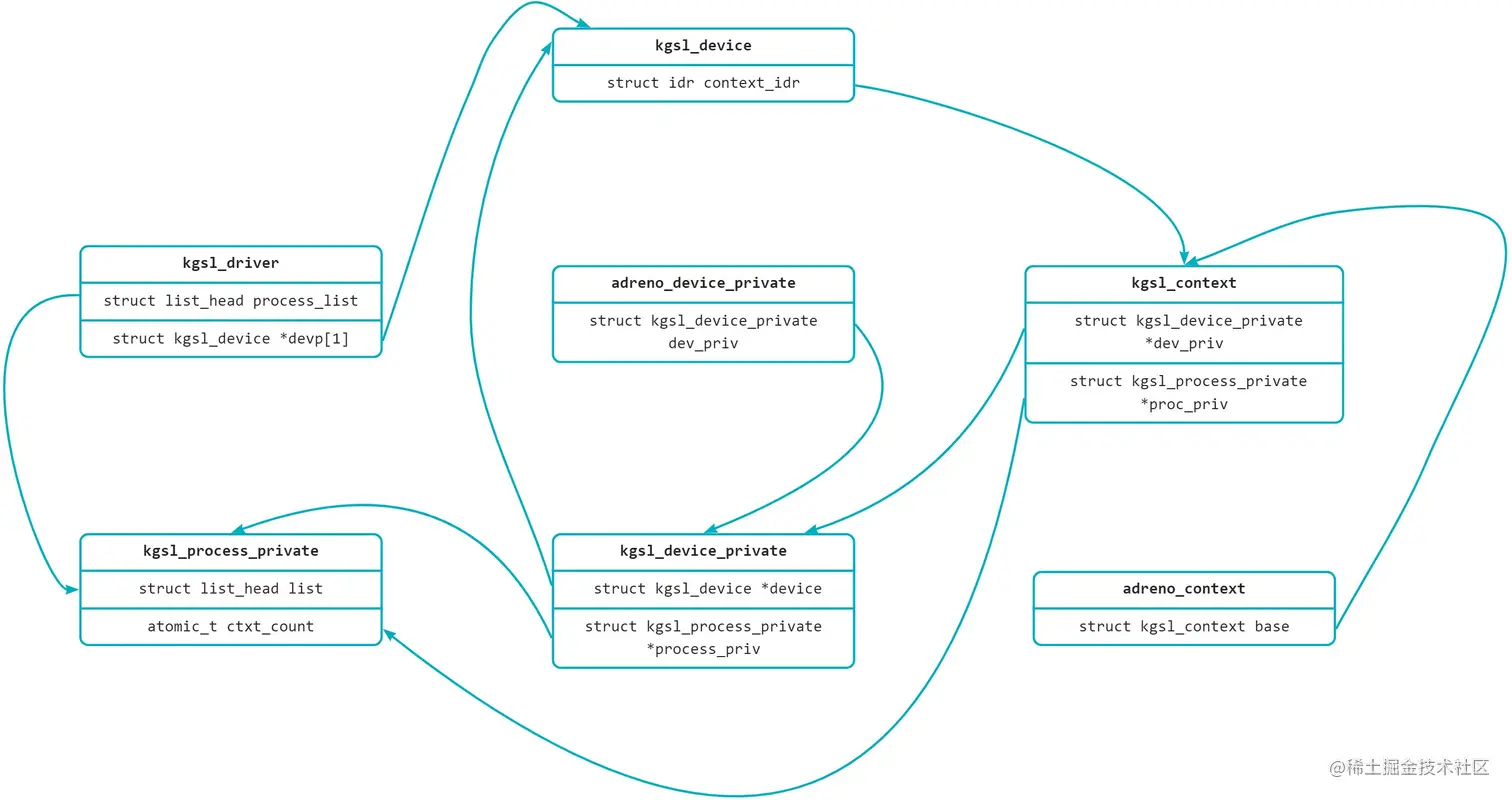

3.2.1 adreno_device_private

```c
struct adreno_device_private {
	struct kgsl_device_private dev_priv;
	struct list_head perfcounter_list;
};
```
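adreno_device_private_create() allocates the larger Adreno struct but returns a pointer to the embedded kgsl_device_private. Because dev_priv is the first member, that pointer has the same address as the whole allocation. This is why kgsl_open() can call kfree(dev_priv) directly, and why Adreno-specific code can recover the outer struct with container_of(). The userspace sketch below illustrates the pattern. The `*_demo` types, demo_private_create() and to_adreno_priv() are hypothetical, and container_of() here is a simplified re-definition of the kernel macro without its type checking.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Simplified userspace container_of(): step back from a member to its parent */
#define container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

struct kgsl_device_private_demo {
	void *device;
	void *process_priv;
};

struct adreno_device_private_demo {
	struct kgsl_device_private_demo dev_priv;  /* generic part, first member */
	int perfcounter_count;                     /* stand-in for perfcounter_list */
};

/* Freeing the inner pointer only frees the whole object if it sits at offset 0 */
_Static_assert(offsetof(struct adreno_device_private_demo, dev_priv) == 0,
	       "dev_priv must be the first member");

static struct kgsl_device_private_demo *demo_private_create(void)
{
	struct adreno_device_private_demo *p = calloc(1, sizeof(*p));

	return p ? &p->dev_priv : NULL;
}

static struct adreno_device_private_demo *
to_adreno_priv(struct kgsl_device_private_demo *dev_priv)
{
	return container_of(dev_priv, struct adreno_device_private_demo, dev_priv);
}
```

The `_Static_assert` turns the "first member" convention into a compile-time check. container_of() itself would still work at any offset; only the direct free of the inner pointer depends on offset 0.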

3.2.2 kgsl_device_private

```c
struct kgsl_device_private {
	struct kgsl_device *device;
	struct kgsl_process_private *process_priv;
};
```

3.2.3 kgsl_process_private

```c
struct kgsl_process_private {
	unsigned long priv;
	struct pid *pid;
	char comm[TASK_COMM_LEN];
	spinlock_t mem_lock;
	struct kref refcount;
	struct idr mem_idr;
	struct kgsl_pagetable *pagetable;
	struct list_head list;
	struct kobject kobj;
	struct kobject kobj_memtype;
	struct dentry *debug_root;
	struct {
		atomic64_t cur;
		uint64_t max;
	} stats[KGSL_MEM_ENTRY_MAX];
	atomic64_t gpumem_mapped;
	struct idr syncsource_idr;
	spinlock_t syncsource_lock;
	int fd_count;
	atomic_t ctxt_count;
	spinlock_t ctxt_count_lock;
	atomic64_t frame_count;
};
```

3.2.4 adreno_context

```c
struct adreno_context {
	struct kgsl_context base;
	unsigned int timestamp;
	unsigned int internal_timestamp;
	unsigned int type;
	spinlock_t lock;
	struct kgsl_drawobj *drawqueue[ADRENO_CONTEXT_DRAWQUEUE_SIZE];
	unsigned int drawqueue_head;
	unsigned int drawqueue_tail;
	wait_queue_head_t wq;
	wait_queue_head_t waiting;
	wait_queue_head_t timeout;
	int queued;
	unsigned int fault_policy;
	struct dentry *debug_root;
	unsigned int queued_timestamp;
	struct adreno_ringbuffer *rb;
	unsigned int submitted_timestamp;
	uint64_t submit_retire_ticks[SUBMIT_RETIRE_TICKS_SIZE];
	int ticks_index;
	struct list_head active_node;
	unsigned long active_time;
};
```
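adreno_context embeds kgsl_context as `base`, the same first-member pattern used by adreno_device_private. It keeps submitted draw objects in `drawqueue`, a fixed-size ring indexed by `drawqueue_head` and `drawqueue_tail`, and `queued` appears to count the entries currently in it. The sketch below shows a minimal ring of this shape. It assumes a queue size of 8 (the real ADRENO_CONTEXT_DRAWQUEUE_SIZE is defined elsewhere and not shown here) and uses the counter to distinguish full from empty. The dispatcher's actual full/empty checks may differ. The type demo_ctx and the functions demo_enqueue()/demo_dequeue() are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Assumed size for illustration; the driver uses ADRENO_CONTEXT_DRAWQUEUE_SIZE */
#define DEMO_DRAWQUEUE_SIZE 8
#define DEMO_NEXT(i) (((i) + 1) % DEMO_DRAWQUEUE_SIZE)

/* Hypothetical reduction of the drawqueue fields of adreno_context */
struct demo_ctx {
	void *drawqueue[DEMO_DRAWQUEUE_SIZE];
	unsigned int drawqueue_head;  /* oldest queued entry */
	unsigned int drawqueue_tail;  /* next free slot */
	int queued;                   /* entries currently in the ring */
};

static bool demo_enqueue(struct demo_ctx *c, void *obj)
{
	assert(obj != NULL);
	if (c->queued >= DEMO_DRAWQUEUE_SIZE)
		return false;                  /* ring is full */
	c->drawqueue[c->drawqueue_tail] = obj;
	c->drawqueue_tail = DEMO_NEXT(c->drawqueue_tail);
	c->queued++;
	return true;
}

static void *demo_dequeue(struct demo_ctx *c)
{
	void *obj;

	if (c->queued == 0)
		return NULL;                   /* ring is empty */
	obj = c->drawqueue[c->drawqueue_head];
	c->drawqueue[c->drawqueue_head] = NULL;
	c->drawqueue_head = DEMO_NEXT(c->drawqueue_head);
	c->queued--;
	return obj;
}
```

When the ring is completely full, head == tail, which is the same as the empty state. The `queued` count is what tells the two apart.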

3.2.5 kgsl_context

```c
struct kgsl_context {
	struct kref refcount;
	uint32_t id;
	uint32_t priority;
	pid_t tid;
	struct kgsl_device_private *dev_priv;
	struct kgsl_process_private *proc_priv;
	unsigned long priv;
	struct kgsl_device *device;
	unsigned int reset_status;
	struct kgsl_sync_timeline *ktimeline;
	struct kgsl_event_group events;
	unsigned int flags;
	struct kgsl_pwr_constraint pwr_constraint;
	struct kgsl_pwr_constraint l3_pwr_constraint;
	unsigned int fault_count;
	unsigned long fault_time;
	struct kgsl_mem_entry *user_ctxt_record;
	unsigned int total_fault_count;
	unsigned int last_faulted_cmd_ts;
	bool gmu_registered;
	u32 gmu_dispatch_queue;
};
```

3.3 kgsl_open_device

```c
static int kgsl_open_device(struct kgsl_device *device)
{
	int result = 0;

	mutex_lock(&device->mutex);
	/* Only the first opener runs the device's first_open hook */
	if (device->open_count == 0) {
		result = device->ftbl->first_open(device);
		if (result)
			goto out;	/* open_count is not incremented on failure */
	}
	device->open_count++;
out:
	mutex_unlock(&device->mutex);
	return result;
}
```
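kgsl_open_device() calls ftbl->first_open only when open_count is 0, and increments open_count only after that call succeeds. Both steps run under device->mutex, so two concurrent openers cannot both run first_open. A failed first_open also leaves the count at 0, so the next open tries again. The pthread sketch below models this logic. demo_device, demo_first_open() and the fail_first_open knob are hypothetical. demo_close_device() is a simplified stand-in for kgsl_close_device(), whose body is not shown in this document.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

/* Hypothetical userspace model of the first-open logic */
struct demo_device {
	pthread_mutex_t mutex;
	int open_count;
	int first_open_calls;
	int fail_first_open;   /* test knob: make first_open fail */
};

static int demo_first_open(struct demo_device *d)
{
	d->first_open_calls++;
	return d->fail_first_open ? -1 : 0;
}

static int demo_open_device(struct demo_device *d)
{
	int result = 0;

	pthread_mutex_lock(&d->mutex);
	if (d->open_count == 0) {
		result = demo_first_open(d);
		if (result)
			goto out;      /* count stays 0, so the next open retries */
	}
	d->open_count++;
out:
	pthread_mutex_unlock(&d->mutex);
	return result;
}

/* Simplified release side; the real teardown on last close is not modelled */
static void demo_close_device(struct demo_device *d)
{
	pthread_mutex_lock(&d->mutex);
	assert(d->open_count > 0);
	d->open_count--;
	pthread_mutex_unlock(&d->mutex);
}
```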

3.4 kgsl_process_private_open

```c
static struct kgsl_process_private *kgsl_process_private_open(
		struct kgsl_device *device)
{
	struct kgsl_process_private *private;
	int i;

	private = _process_private_open(device);

	/*
	 * -EEXIST means this process's old entry is still being destroyed:
	 * back off briefly and retry, at most 5 more times.
	 */
	for (i = 0; (PTR_ERR_OR_ZERO(private) == -EEXIST) && (i < 5); i++) {
		usleep_range(10, 100);
		private = _process_private_open(device);
	}

	return private;
}
```
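kgsl_process_private_open() retries _process_private_open() while it returns -EEXIST. It sleeps 10 to 100 microseconds between tries and retries at most five times, for six attempts in total. The sketch below is a userspace model of that bounded retry loop. demo_lookup() is a hypothetical function that reports -EEXIST a configurable number of times, and nanosleep() stands in for the kernel's usleep_range().

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <time.h>

/* Hypothetical lookup that reports -EEXIST while a stale entry is torn down */
static int busy_attempts_left;
static int lookup_calls;

static int demo_lookup(void)
{
	lookup_calls++;
	if (busy_attempts_left > 0) {
		busy_attempts_left--;
		return -EEXIST;
	}
	return 0;
}

/* Same shape as the driver loop: one initial try plus at most 5 retries */
static int demo_open_with_retry(void)
{
	struct timespec pause = { 0, 50 * 1000 };  /* 50 us, within 10..100 us */
	int ret = demo_lookup();

	for (int i = 0; ret == -EEXIST && i < 5; i++) {
		nanosleep(&pause, NULL);
		ret = demo_lookup();
	}
	return ret;
}
```

If teardown finishes within a few retries, the caller sees success. Otherwise -EEXIST propagates up to kgsl_open(), which then unwinds as shown in 3.1.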

3.4.1 _process_private_open

```c
static struct kgsl_process_private *_process_private_open(
		struct kgsl_device *device)
{
	struct kgsl_process_private *private;

	/* Held across the lookup and insertion in kgsl_process_private_new() */
	mutex_lock(&kgsl_driver.process_mutex);
	private = kgsl_process_private_new(device);
	if (IS_ERR(private))
		goto done;

	/* One more open file descriptor now shares this process struct */
	private->fd_count++;
done:
	mutex_unlock(&kgsl_driver.process_mutex);
	return private;
}
```

3.4.2 kgsl_process_private_new

```c
static struct kgsl_process_private *kgsl_process_private_new(
		struct kgsl_device *device)
{
	struct kgsl_process_private *private;
	/* One struct per process: key on the thread-group leader's pid */
	struct pid *cur_pid = get_task_pid(current->group_leader, PIDTYPE_PID);

	/* Search the process list (the caller holds process_mutex) */
	list_for_each_entry(private, &kgsl_driver.process_list, list) {
		if (private->pid == cur_pid) {
			/*
			 * The get fails only if the refcount is already zero,
			 * i.e. destroy has started but not finished; -EEXIST
			 * tells the caller to retry.
			 */
			if (!kgsl_process_private_get(private))
				private = ERR_PTR(-EEXIST);
			/* The existing struct already holds a pid reference */
			put_pid(cur_pid);
			return private;
		}
	}

	/* Not found: allocate and initialize a new entry */
	private = kzalloc(sizeof(struct kgsl_process_private), GFP_KERNEL);
	if (private == NULL) {
		put_pid(cur_pid);
		return ERR_PTR(-ENOMEM);
	}

	kref_init(&private->refcount);		/* refcount starts at 1 */
	private->pid = cur_pid;			/* keeps the get_task_pid() reference */
	get_task_comm(private->comm, current->group_leader);

	spin_lock_init(&private->mem_lock);
	spin_lock_init(&private->syncsource_lock);
	spin_lock_init(&private->ctxt_count_lock);

	idr_init(&private->mem_idr);
	idr_init(&private->syncsource_idr);

	/* Per-process GPU pagetable, looked up by numeric pid */
	private->pagetable = kgsl_mmu_getpagetable(&device->mmu, pid_nr(cur_pid));
	if (IS_ERR(private->pagetable)) {
		int err = PTR_ERR(private->pagetable);

		idr_destroy(&private->mem_idr);
		idr_destroy(&private->syncsource_idr);
		put_pid(private->pid);

		kfree(private);
		private = ERR_PTR(err);
		return private;
	}

	kgsl_process_init_sysfs(device, private);
	kgsl_process_init_debugfs(private);

	/* Publish the new entry under the writer side of proclist_lock */
	write_lock(&kgsl_driver.proclist_lock);
	list_add(&private->list, &kgsl_driver.process_list);
	write_unlock(&kgsl_driver.proclist_lock);

	return private;
}
```
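kgsl_process_private_new() is a find-or-create on kgsl_driver.process_list, keyed by struct pid. When it finds an entry, it takes a reference only if the refcount is not already zero. A zero refcount means the entry is being destroyed, so the function returns -EEXIST and the caller retries. The sketch below is a single-threaded userspace model of this logic. It assumes kgsl_process_private_get() behaves like kref_get_unless_zero(), and it omits the locking (process_mutex, proclist_lock). demo_proc, demo_list, demo_get_unless_zero() and demo_proc_new() are hypothetical, and an `int *err` out-parameter replaces the kernel's ERR_PTR().

```c
#include <assert.h>
#include <errno.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical userspace model: one entry per process, found by pid */
struct demo_proc {
	int pid;
	atomic_int refcount;      /* stands in for struct kref */
	struct demo_proc *next;
};

static struct demo_proc *demo_list;

/* Like kref_get_unless_zero(): take a reference only if the object is live */
static bool demo_get_unless_zero(struct demo_proc *p)
{
	int old = atomic_load(&p->refcount);

	while (old != 0) {
		/* On failure, old is reloaded and the zero check runs again */
		if (atomic_compare_exchange_weak(&p->refcount, &old, old + 1))
			return true;
	}
	return false;             /* refcount hit 0: teardown in progress */
}

/* Returns the entry, or NULL with *err set to -EEXIST or -ENOMEM */
static struct demo_proc *demo_proc_new(int pid, int *err)
{
	struct demo_proc *p;

	*err = 0;
	for (p = demo_list; p != NULL; p = p->next) {
		if (p->pid == pid) {
			if (!demo_get_unless_zero(p)) {
				*err = -EEXIST;   /* caller may retry after a short sleep */
				return NULL;
			}
			return p;             /* existing entry, one more reference */
		}
	}

	p = calloc(1, sizeof(*p));
	if (p == NULL) {
		*err = -ENOMEM;
		return NULL;
	}
	p->pid = pid;
	atomic_init(&p->refcount, 1); /* like kref_init() */
	p->next = demo_list;          /* list_add() inserts at the head */
	demo_list = p;
	return p;
}
```

A second open from the same process reuses the entry and bumps its refcount. Once the refcount has dropped to zero, the lookup refuses to revive the entry, which is exactly the case the retry loop in 3.4 waits out.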