
Last Updated on March 28, 2022

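Data visualization is an important aspect of all AI and machine learning applications. You can gain key insights into your data through different graphical representations. In this tutorial, we'll talk about a few options for data visualization in Python. We'll use the MNIST dataset and the TensorFlow library for number crunching and data manipulation. To illustrate various methods for creating different types of graphs, we'll use Python's graphing libraries, namely matplotlib, Seaborn, and Bokeh.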

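After completing this tutorial, you will know:

- How to visualize images in matplotlib

- How to make scatter plots in matplotlib, Seaborn, and Bokeh

- How to make multi-line plots in matplotlib, Seaborn, and Bokeh

Let's get started.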

Data Visualization in Python with matplotlib, Seaborn, and Bokeh

Photo by Mehreen Saeed, some rights reserved.

Tutorial Overview

This tutorial is divided into seven parts; they are:

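- Preparation of scatter data

- Figures in matplotlib

- Scatter plots in matplotlib and Seaborn

- Scatter plots in Bokeh

- Preparation of line plot data

- Line plots in matplotlib, Seaborn, and Bokeh

- More on visualization

Preparation of Scatter Data

In this post, we will use matplotlib, Seaborn, and Bokeh. They are all external libraries that need to be installed. To install them using pip, run the following command: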

pip install matplotlib seaborn bokeh

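For demonstration purposes, we will also use the MNIST handwritten digits dataset. We will load it from TensorFlow and run the PCA algorithm on it. Hence we will also need to install TensorFlow and pandas: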

pip install tensorflow pandas

The code that follows assumes the following imports have been executed:

# Importing from tensorflow and keras

from tensorflow.keras.datasets import mnist

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape

from tensorflow.keras import utils

from tensorflow import dtypes, tensordot

from tensorflow import convert_to_tensor, linalg, transpose

# For math operations

import numpy as np

# For plotting with matplotlib

import matplotlib.pyplot as plt

# For plotting with seaborn

import seaborn as sns

# For plotting with bokeh

from bokeh.plotting import figure, show

from bokeh.models import Legend, LegendItem

# For pandas dataframe

import pandas as pd

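We load the MNIST dataset from the keras.datasets library. To keep things simple, we'll retain only the subset of the data containing the first three digits (0, 1, and 2). We'll also ignore the test set for now.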

...

# load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Shape of training data

total_examples, img_length, img_width = x_train.shape

# Print the statistics

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

Training data has 18623 images

Each image is of size 28 x 28
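The filtering step above relies on np.where() returning a tuple of index arrays, which is then used to index both the images and the labels. A minimal sketch on a small made-up label array (a stand-in for the MNIST labels, not real data) shows that a plain boolean mask selects exactly the same rows:

```python
import numpy as np

# Small synthetic stand-ins for the MNIST images and labels (not real data)
labels = np.array([5, 0, 4, 1, 9, 2, 1, 3])
images = np.arange(len(labels) * 4).reshape(len(labels), 2, 2)

total_classes = 3

# Index-based selection, as in the tutorial: np.where returns a tuple of arrays
ind = np.where(labels < total_classes)
images_a, labels_a = images[ind], labels[ind]

# Equivalent boolean-mask selection along the first axis
mask = labels < total_classes
images_b, labels_b = images[mask], labels[mask]

print(labels_a)        # [0 1 2 1]
print(images_a.shape)  # (4, 2, 2)
```

Either form works; the boolean mask skips the intermediate index tuple, while np.where is handy when you also want to keep the indices themselves.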

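Figures in matplotlib

Seaborn is really an add-on to matplotlib. Therefore, you need to understand how matplotlib handles plots even if you're using Seaborn.

Matplotlib calls its canvas the figure. You can divide the figure into several sections called subplots, so you can put two visualizations side by side.

As an example, let's visualize the first 16 images of our MNIST dataset using matplotlib. We'll create 2 rows and 8 columns using the subplots() function, which creates an axes object for each cell. Then we'll display each image on its axes object using the imshow() method. Finally, the figure is displayed using the show() function.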

img_per_row = 8

fig,ax = plt.subplots(nrows=2, ncols=img_per_row,

figsize=(18,4),

subplot_kw=dict(xticks=[], yticks=[]))

for row in [0, 1]:
    for col in range(img_per_row):
        # Draw image number row*img_per_row + col in cell (row, col)
        ax[row, col].imshow(x_train[row*img_per_row + col].astype('int'))

plt.show()

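The first 16 images of the training dataset displayed in 2 rows and 8 columns

Here we can see a few properties of matplotlib. There is a default figure and a default axes in matplotlib, and the pyplot submodule defines a number of functions that plot on the default axes. If we want to plot on a particular axes, we can call the plotting methods of that axes object instead. Manipulating a figure is procedural: matplotlib keeps an internal data structure, and our operations mutate it. The show() function simply displays the result of that series of operations. Because of this, we can gradually fine-tune many details of the figure. In the example above, we hid the "ticks" (i.e., the markers on the axes) by setting xticks and yticks to empty lists.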
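To make the default-figure/default-axes model concrete, here is a minimal sketch on toy data. It assumes a headless environment, so it selects the non-interactive Agg backend; drop that line in a notebook or desktop session. It shows that a pyplot function draws on the current axes (the last subplot created), while a method on a specific Axes object targets that subplot explicitly:

```python
import matplotlib
matplotlib.use("Agg")  # headless backend (assumption); omit in an interactive session
import matplotlib.pyplot as plt

# Two subplots side by side; the last one created becomes the "current" axes
fig, ax = plt.subplots(nrows=1, ncols=2, figsize=(8, 3))

# A pyplot function draws on the current axes
plt.plot([0, 1, 2], [0, 1, 4])
current = plt.gca()

# A method on a specific Axes object targets that subplot explicitly
ax[0].plot([0, 1, 2], [2, 1, 0])

# Hide tick marks on the left subplot, like subplot_kw=dict(xticks=[], yticks=[])
ax[0].set_xticks([])
ax[0].set_yticks([])

print(current is ax[1])
```

In longer scripts, preferring the Axes methods avoids surprises about which subplot is "current".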

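Scatter Plots in matplotlib and Seaborn

One of the common visualizations we use in machine learning projects is the scatter plot.

As an example, we apply PCA to the MNIST dataset and extract the first three components of each image. In the code below, we compute the eigenvalues and eigenvectors of the 784 x 784 matrix obtained by multiplying the transposed data matrix by the data matrix, then project each image's data onto the eigenvectors and store the result in x_pca. For simplicity, we did not normalize the data to zero mean and unit variance before computing the eigenvectors. This omission does not affect our purpose of visualization.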

...

# Convert the dataset into a 2D array of shape 18623 x 784

x = convert_to_tensor(np.reshape(x_train, (x_train.shape[0], -1)),

dtype=dtypes.float32)

# Eigen-decomposition from a 784 x 784 matrix

eigenvalues, eigenvectors = linalg.eigh(tensordot(transpose(x), x, axes=1))

# Print the three largest eigenvalues

print('3 largest eigenvalues: ', eigenvalues[-3:])

# Project the data to eigenvectors

x_pca = tensordot(x, eigenvectors, axes=1)

The printed eigenvalues are as follows:

3 largest eigenvalues: tf.Tensor([5.1999642e+09 1.1419439e+10 4.8231231e+10], shape=(3,), dtype=float32)

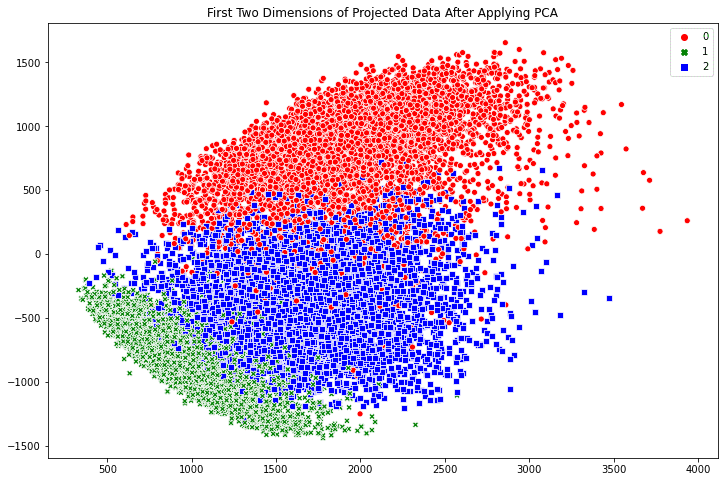
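The eigen-decomposition above can be checked at a small scale with NumPy alone. This sketch uses random data in place of MNIST (an assumption for illustration) and mirrors the tutorial's steps: form the uncentered matrix from the data, call np.linalg.eigh(), and project. Because eigh returns eigenvalues in ascending order, the last columns of the projection belong to the largest eigenvalues, which is why the plots below use x_pca[:, -1] and x_pca[:, -2]:

```python
import numpy as np

rng = np.random.default_rng(0)
# Random stand-in for the flattened images: 200 samples, 10 features
x = rng.normal(size=(200, 10))

# Eigen-decomposition of the (uncentered) 10 x 10 matrix X^T X
eigenvalues, eigenvectors = np.linalg.eigh(x.T @ x)

# Project every sample onto all eigenvectors
x_pca = x @ eigenvectors

# eigh sorts eigenvalues in ascending order, so the largest ones are last
print(eigenvalues[-3:])

# The squared length of each projected column equals its eigenvalue
col_energy = (x_pca ** 2).sum(axis=0)
print(np.allclose(col_energy, eigenvalues))  # True
```

For conventional PCA, you would subtract the column means (x - x.mean(axis=0)) before forming the matrix; as noted above, the tutorial skips this for visualization.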

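The array x_pca has shape 18623 x 784. Let's treat its last two columns as the x- and y-coordinates and plot one point per row; since linalg.eigh() returns eigenvalues in ascending order, these columns correspond to the two largest eigenvalues. We can further color each point according to the digit it represents.

The following code generates a scatter plot using matplotlib. The plot is created with the axes object's scatter() method, which takes the x- and y-coordinates as its first two arguments. The c argument to scatter() specifies a value for each point that is mapped to its color, and the s argument specifies the marker size. The code also creates a legend and adds a title to the plot.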

fig, ax = plt.subplots(figsize=(12, 8))

scatter = ax.scatter(x_pca[:, -1], x_pca[:, -2], c=train_labels, s=5)

legend_plt = ax.legend(*scatter.legend_elements(),

loc="lower left", title="Digits")

ax.add_artist(legend_plt)

plt.title('First Two Dimensions of Projected Data After Applying PCA')

plt.show()

2D scatter plot generated using matplotlib
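The legend in the code above comes from scatter.legend_elements(), which builds one proxy handle and one label per distinct value passed to c (when there are only a few distinct values). A minimal sketch on synthetic three-class points (made-up data, Agg backend assumed for headless use):

```python
import matplotlib
matplotlib.use("Agg")  # headless backend (assumption)
import matplotlib.pyplot as plt
import numpy as np

rng = np.random.default_rng(1)
# Synthetic 2D points for three hypothetical classes (not MNIST)
labels = np.repeat([0, 1, 2], 50)
points = rng.normal(size=(150, 2)) + labels[:, None] * 3.0

fig, ax = plt.subplots(figsize=(6, 4))
# c maps each point's label through the default colormap; s sets marker size
scatter = ax.scatter(points[:, 0], points[:, 1], c=labels, s=5)

# One proxy handle and label per distinct value of c
handles, texts = scatter.legend_elements()
legend_plt = ax.legend(handles, texts, loc="lower left", title="Digits")
# add_artist keeps this legend even if ax.legend() is called again later
ax.add_artist(legend_plt)
ax.set_title("Synthetic three-class scatter")
```

With a single legend, the add_artist() call is harmless; it matters when you want a second legend on the same axes.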

Putting it all together, the following is the complete code to generate the 2D scatter plot using matplotlib:

from tensorflow.keras.datasets import mnist

from tensorflow import dtypes, tensordot

from tensorflow import convert_to_tensor, linalg, transpose

import numpy as np

import matplotlib.pyplot as plt

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Convert the dataset into a 2D array of shape 18623 x 784

x = convert_to_tensor(np.reshape(x_train, (x_train.shape[0], -1)),

dtype=dtypes.float32)

# Eigen-decomposition from a 784 x 784 matrix

eigenvalues, eigenvectors = linalg.eigh(tensordot(transpose(x), x, axes=1))

# Print the three largest eigenvalues

print('3 largest eigenvalues: ', eigenvalues[-3:])

# Project the data to eigenvectors

x_pca = tensordot(x, eigenvectors, axes=1)

# Create the plot

fig, ax = plt.subplots(figsize=(12, 8))

scatter = ax.scatter(x_pca[:, -1], x_pca[:, -2], c=train_labels, s=5)

legend_plt = ax.legend(*scatter.legend_elements(),

loc="lower left", title="Digits")

ax.add_artist(legend_plt)

plt.title('First Two Dimensions of Projected Data After Applying PCA')

plt.show()

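Matplotlib can also produce a 3D scatter plot. To do so, you first need to create an axes object with a 3D projection. Then the 3D scatter plot is created with the scatter3D() function, with the x-, y-, and z-coordinates as the first three arguments. The code below uses the data projected along the eigenvectors corresponding to the three largest eigenvalues. Instead of a legend, this code creates a color bar.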

fig = plt.figure(figsize=(12, 8))

ax = plt.axes(projection='3d')

plt_3d = ax.scatter3D(x_pca[:, -1], x_pca[:, -2], x_pca[:, -3], c=train_labels, s=1)

plt.colorbar(plt_3d)

plt.show()

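3D scatter plot generated using matplotlib

The scatter3D() function just places the points in 3D space. Afterward, we can still modify how the figure is displayed, such as the label of each axis and the background color. In 3D plots, however, one common tweak is the viewport, namely the angle from which we look at the 3D space. The viewport is controlled by the view_init() method of the axes object: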

ax.view_init(elev=30, azim=-60)

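The viewport is controlled by the elevation angle (i.e., the angle to the horizontal plane) and the azimuthal angle (i.e., the rotation within the horizontal plane). By default, matplotlib uses an elevation of 30 degrees and an azimuth of -60 degrees, as shown above.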
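A minimal sketch of changing the viewport, using synthetic 3D points instead of the PCA projection (an assumption to keep it self-contained) and the Agg backend for headless use. The view angles are stored on the axes as elev and azim:

```python
import matplotlib
matplotlib.use("Agg")  # headless backend (assumption)
import matplotlib.pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401, registers "3d" on older matplotlib
import numpy as np

rng = np.random.default_rng(2)
# Synthetic 3D points for three hypothetical classes (not MNIST)
labels = np.repeat([0, 1, 2], 40)
xyz = rng.normal(size=(120, 3)) + labels[:, None] * 2.0

fig = plt.figure(figsize=(6, 5))
ax = plt.axes(projection="3d")

# Look from 20 degrees above the horizontal plane, rotated 45 degrees within it
ax.view_init(elev=20, azim=45)
plt_3d = ax.scatter3D(xyz[:, 0], xyz[:, 1], xyz[:, 2], c=labels, s=1)
plt.colorbar(plt_3d)
```

Trying a few elev/azim pairs is often the quickest way to find an angle where the clusters separate visually.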

Putting everything together, the following is the complete code to create the 3D scatter plot in matplotlib:

from tensorflow.keras.datasets import mnist

from tensorflow import dtypes, tensordot

from tensorflow import convert_to_tensor, linalg, transpose

import numpy as np

import matplotlib.pyplot as plt

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Convert the dataset into a 2D array of shape 18623 x 784

x = convert_to_tensor(np.reshape(x_train, (x_train.shape[0], -1)),

dtype=dtypes.float32)

# Eigen-decomposition from a 784 x 784 matrix

eigenvalues, eigenvectors = linalg.eigh(tensordot(transpose(x), x, axes=1))

# Print the three largest eigenvalues

print('3 largest eigenvalues: ', eigenvalues[-3:])

# Project the data to eigenvectors

x_pca = tensordot(x, eigenvectors, axes=1)

# Create the plot

fig = plt.figure(figsize=(12, 8))

ax = plt.axes(projection='3d')

ax.view_init(elev=30, azim=-60)

plt_3d = ax.scatter3D(x_pca[:, -1], x_pca[:, -2], x_pca[:, -3], c=train_labels, s=1)

plt.colorbar(plt_3d)

plt.show()

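Creating scatter plots in Seaborn is similarly easy. The scatterplot() function automatically creates a legend and uses different marker symbols for different classes when plotting the points. By default, the plot is created on the "current axes" from matplotlib, unless an axes object is specified with the ax argument.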

fig, ax = plt.subplots(figsize=(12, 8))

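# x and y are passed as keywords; recent Seaborn versions reject positional x/y
sns.scatterplot(x=x_pca[:, -1].numpy(), y=x_pca[:, -2].numpy(),
                style=train_labels, hue=train_labels,
                palette=["red", "green", "blue"])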

plt.title('First Two Dimensions of Projected Data After Applying PCA')

plt.show()

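2D scatter plot generated using Seaborn

Seaborn has two advantages over matplotlib. First, it has a polished default style. For example, if we compare the point style in the two scatter plots above, the Seaborn one draws a border around each point to keep the many points from smudging together. In fact, if we run the following line before calling any matplotlib functions: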

sns.set(style = "darkgrid")

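we can keep using the matplotlib functions but get a better-looking figure thanks to Seaborn's style. Second, Seaborn is more convenient to use when we hold our data in a pandas DataFrame. As an example, let's convert our MNIST data from a tensor into a pandas DataFrame: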

df_mnist = pd.DataFrame(x_pca[:, -3:].numpy(), columns=["pca3","pca2","pca1"])

df_mnist["label"] = train_labels

print(df_mnist)

The DataFrame looks like the following:

pca3 pca2 pca1 label

0 -537.730103 926.885254 1965.881592 0

1 167.375885 -947.360107 1070.359375 1

2 553.685425 -163.121826 1754.754272 2

3 -642.905579 -767.283020 1053.937988 1

4 -651.812988 -586.034424 662.468201 1

... ... ... ... ...

18618 415.358948 -645.245972 853.439209 1

18619 754.555786 7.873116 1897.690552 2

18620 -321.809357 665.038086 1840.480225 0

18621 643.843628 -85.524895 1113.795166 2

18622 94.964279 -549.570984 561.743042 1

[18623 rows x 4 columns]

Then, we can reproduce the Seaborn scatter plot with the following:

fig, ax = plt.subplots(figsize=(12, 8))

sns.scatterplot(data=df_mnist, x="pca1", y="pca2",

style="label", hue="label",

palette=["red", "green", "blue"])

plt.title('First Two Dimensions of Projected Data After Applying PCA')

plt.show()

Here, instead of passing arrays of coordinates to scatterplot(), we pass the DataFrame to the data argument and refer to its columns by name.
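A minimal sketch of the same column-name style on a small hypothetical DataFrame (random values with the tutorial's column names, not the real PCA output), also passing ax explicitly so the plot lands on a chosen axes. It assumes Seaborn is installed and uses the Agg backend for headless use:

```python
import matplotlib
matplotlib.use("Agg")  # headless backend (assumption)
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

rng = np.random.default_rng(3)
# Hypothetical PCA-like coordinates; column names follow the tutorial's DataFrame
df = pd.DataFrame(rng.normal(size=(90, 3)), columns=["pca3", "pca2", "pca1"])
df["label"] = np.repeat([0, 1, 2], 30)

fig, ax = plt.subplots(figsize=(6, 4))
# Column names are looked up in `data`; `ax` pins the plot to this Axes
returned_ax = sns.scatterplot(data=df, x="pca1", y="pca2",
                              hue="label", style="label",
                              palette=["red", "green", "blue"], ax=ax)
```

Because the variables are named columns, Seaborn can also label the axes with those names automatically.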

The following is the complete code to generate a scatter plot using Seaborn, with the data stored in pandas:

from tensorflow.keras.datasets import mnist

from tensorflow import dtypes, tensordot

from tensorflow import convert_to_tensor, linalg, transpose

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Convert the dataset into a 2D array of shape 18623 x 784

x = convert_to_tensor(np.reshape(x_train, (x_train.shape[0], -1)),

dtype=dtypes.float32)

# Eigen-decomposition from a 784 x 784 matrix

eigenvalues, eigenvectors = linalg.eigh(tensordot(transpose(x), x, axes=1))

# Print the three largest eigenvalues

print('3 largest eigenvalues: ', eigenvalues[-3:])

# Project the data to eigenvectors

x_pca = tensordot(x, eigenvectors, axes=1)

# Making pandas DataFrame

df_mnist = pd.DataFrame(x_pca[:, -3:].numpy(), columns=["pca3","pca2","pca1"])

df_mnist["label"] = train_labels

# Create the plot

fig, ax = plt.subplots(figsize=(12, 8))

sns.scatterplot(data=df_mnist, x="pca1", y="pca2",

style="label", hue="label",

palette=["red", "green", "blue"])

plt.title('First Two Dimensions of Projected Data After Applying PCA')

plt.show()

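Seaborn, as a wrapper around some matplotlib functions, does not replace matplotlib entirely. For example, Seaborn does not support 3D plotting, so we still need to resort to matplotlib functions for that purpose.

Scatter Plots in Bokeh

The plots created by matplotlib and Seaborn are static images. If you need to zoom in, pan, or toggle the display of some parts of the plot, you should use Bokeh instead.

Creating scatter plots in Bokeh is also easy. The following code generates a scatter plot and adds a legend. The show() function from the Bokeh library opens a new browser window to display the plot. You can interact with the plot by scaling, zooming, scrolling, and other options shown in the toolbar next to the rendered plot. You can also hide part of the scatter by clicking on the legend.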

colormap = {0: "red", 1:"green", 2:"blue"}

my_scatter = figure(title="First Two Dimensions of Projected Data After Applying PCA",

x_axis_label="Dimension 1",

y_axis_label="Dimension 2")

for digit in [0, 1, 2]:
    # Select the projected points of one digit and draw them as a separate renderer
    selection = x_pca[train_labels == digit]
    my_scatter.scatter(selection[:,-1].numpy(), selection[:,-2].numpy(),
                       color=colormap[digit], size=5,
                       legend_label="Digit "+str(digit))

my_scatter.legend.click_policy = "hide"

show(my_scatter)

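Bokeh produces the plot as HTML with JavaScript. All your actions to control the plot are handled by JavaScript functions. Its output looks like the following:

2D scatter plot generated using Bokeh in a new browser window. Note the various options on the right for interacting with the plot.

The following is the complete code to generate the above scatter plot using Bokeh: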

from tensorflow.keras.datasets import mnist

from tensorflow import dtypes, tensordot

from tensorflow import convert_to_tensor, linalg, transpose

import numpy as np

from bokeh.plotting import figure, show

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Convert the dataset into a 2D array of shape 18623 x 784

x = convert_to_tensor(np.reshape(x_train, (x_train.shape[0], -1)),

dtype=dtypes.float32)

# Eigen-decomposition from a 784 x 784 matrix

eigenvalues, eigenvectors = linalg.eigh(tensordot(transpose(x), x, axes=1))

# Print the three largest eigenvalues

print('3 largest eigenvalues: ', eigenvalues[-3:])

# Project the data to eigenvectors

x_pca = tensordot(x, eigenvectors, axes=1)

# Create scatter plot in Bokeh

colormap = {0: "red", 1:"green", 2:"blue"}

my_scatter = figure(title="First Two Dimensions of Projected Data After Applying PCA",

x_axis_label="Dimension 1",

y_axis_label="Dimension 2")

for digit in [0, 1, 2]:
    # Select the projected points of one digit and draw them as a separate renderer
    selection = x_pca[train_labels == digit]
    my_scatter.scatter(selection[:,-1].numpy(), selection[:,-2].numpy(),
                       color=colormap[digit], size=5, alpha=0.5,
                       legend_label="Digit "+str(digit))

my_scatter.legend.click_policy = "hide"

show(my_scatter)

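If you are rendering the Bokeh plot in a Jupyter notebook, you may see the plot produced in a new browser window. To put the plot in the Jupyter notebook, you need to tell Bokeh that you are in a notebook environment by running the following before calling the Bokeh functions: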

from bokeh.io import output_notebook

output_notebook()

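Also note that we create the scatter plot of the three digits in a loop, one digit at a time. This is required to make the legend interactive, since each call to scatter() creates a new renderer object. If we create all the scatter points at once, as in the following, clicking on the legend will hide and show everything instead of only the points of one digit.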

colormap = {0: "red", 1:"green", 2:"blue"}

colors = [colormap[i] for i in train_labels]

my_scatter = figure(title="First Two Dimensions of Projected Data After Applying PCA",

x_axis_label="Dimension 1", y_axis_label="Dimension 2")

scatter_obj = my_scatter.scatter(x_pca[:, -1].numpy(), x_pca[:, -2].numpy(), color=colors, size=5)

legend = Legend(items=[

LegendItem(label="Digit 0", renderers=[scatter_obj], index=0),

LegendItem(label="Digit 1", renderers=[scatter_obj], index=1),

LegendItem(label="Digit 2", renderers=[scatter_obj], index=2),

])

my_scatter.add_layout(legend)

my_scatter.legend.click_policy = "hide"

show(my_scatter)
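A minimal sketch of the one-renderer-per-class pattern, using synthetic points in place of the PCA projection (an assumption for a self-contained example). It builds the figure without calling show(), so no browser window opens; call show(p) to view it. Each scatter() call adds its own glyph renderer, so the legend ends up with one independently clickable item per class:

```python
from bokeh.plotting import figure
import numpy as np

rng = np.random.default_rng(4)
# Synthetic 2D points for three hypothetical classes (not MNIST)
labels = np.repeat([0, 1, 2], 20)
points = rng.normal(size=(60, 2)) + labels[:, None] * 3.0

colormap = {0: "red", 1: "green", 2: "blue"}
p = figure(title="One renderer per class",
           x_axis_label="Dimension 1", y_axis_label="Dimension 2")

for digit in [0, 1, 2]:
    sel = points[labels == digit]
    # A separate renderer per class, each with its own legend entry
    p.scatter(sel[:, 0], sel[:, 1], color=colormap[digit], size=5,
              legend_label="Digit " + str(digit))

# Clicking a legend item hides only that renderer
p.legend.click_policy = "hide"
```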

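Preparation of Line Plot Data

Before we move on to show how to visualize line plot data, let's generate some data for illustration. Below is a simple classifier built with the Keras library, which we train to classify the handwritten digits. The fit() method returns a History object whose history attribute is a dictionary containing the learning history of the training stage. For simplicity, we'll train the model for only 10 epochs.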

epochs = 10

y_train = utils.to_categorical(train_labels)

input_dim = img_length*img_width

# Create a Sequential model

model = Sequential()

# First layer for reshaping input images from 2D to 1D

model.add(Reshape((input_dim, ), input_shape=(img_length, img_width)))

# Dense layer of 8 neurons

model.add(Dense(8, activation='relu'))

# Output layer

model.add(Dense(total_classes, activation='softmax'))

# Compile model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(x_train, y_train, validation_split=0.33, epochs=epochs, batch_size=10, verbose=0)

print('Learning history: ', history.history)

The code above produces a dictionary with the keys loss, accuracy, val_loss, and val_accuracy, as shown below:

Learning history: {'loss': [0.5362154245376587, 0.08184114843606949, ...],

'accuracy': [0.9426144361495972, 0.9763565063476562, ...],

'val_loss': [0.09874073415994644, 0.07835448533296585, ...],

'val_accuracy': [0.9716889262199402, 0.9788480401039124, ...]}

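Line Plots in matplotlib, Seaborn, and Bokeh

Let's look at the options for visualizing the learning history obtained from training the classifier.

Creating a multi-line plot in matplotlib is as simple as the following. We obtain the lists of training and validation accuracies from the history, and by default, matplotlib treats each list as sequential data (i.e., the x-coordinates are integers counting from 0 onward).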

plt.plot(history.history['accuracy'], label="Training accuracy")

plt.plot(history.history['val_accuracy'], label="Validation accuracy")

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

Multi-line plot using matplotlib
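The implicit x-coordinates can be confirmed by inspecting the Line2D objects that plot() returns. This sketch reuses the rounded accuracy values from the history DataFrame printed in the Seaborn example of this tutorial (so no training is needed) and assumes the Agg backend for headless use. The left subplot relies on the default x values; the right one passes explicit epoch numbers starting at 1:

```python
import matplotlib
matplotlib.use("Agg")  # headless backend (assumption)
import matplotlib.pyplot as plt
import numpy as np

# Rounded values copied from the history DataFrame printed in this tutorial
accuracy = [0.942614, 0.976357, 0.978841, 0.981726, 0.984371,
            0.985173, 0.983089, 0.985093, 0.982929, 0.983890]
val_accuracy = [0.971689, 0.978848, 0.972991, 0.979987, 0.983729,
                0.977709, 0.979662, 0.977709, 0.971363, 0.982590]

fig, (ax0, ax1) = plt.subplots(1, 2, figsize=(10, 4))

# Only y-values given: x defaults to 0, 1, ..., len(y) - 1
(train_line,) = ax0.plot(accuracy, label="Training accuracy")
ax0.plot(val_accuracy, label="Validation accuracy")
ax0.legend()

# Explicit x-values let the axis count epochs from 1 instead
epochs = np.arange(1, len(accuracy) + 1)
(explicit_line,) = ax1.plot(epochs, accuracy, label="Training accuracy")
ax1.plot(epochs, val_accuracy, label="Validation accuracy")
ax1.set_xlabel("Epochs")
ax1.legend()
```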

The complete code to create the multi-line plot is as follows:

from tensorflow.keras.datasets import mnist

from tensorflow.keras import utils

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape

import numpy as np

import matplotlib.pyplot as plt

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Prepare for classifier network

epochs = 10

y_train = utils.to_categorical(train_labels)

input_dim = img_length*img_width

# Create a Sequential model

model = Sequential()

# First layer for reshaping input images from 2D to 1D

model.add(Reshape((input_dim, ), input_shape=(img_length, img_width)))

# Dense layer of 8 neurons

model.add(Dense(8, activation='relu'))

# Output layer

model.add(Dense(total_classes, activation='softmax'))

# Compile model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(x_train, y_train, validation_split=0.33, epochs=epochs, batch_size=10, verbose=0)

print('Learning history: ', history.history)

# Plot accuracy in Matplotlib

plt.plot(history.history['accuracy'], label="Training accuracy")

plt.plot(history.history['val_accuracy'], label="Validation accuracy")

plt.title('Training and validation accuracy')

plt.xlabel('Epochs')

plt.ylabel('Accuracy')

plt.legend()

plt.show()

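We can do the same in Seaborn. As we saw with the scatter plots, we can pass the data to Seaborn either explicitly as a series of values or through a pandas DataFrame. Let's plot the training loss and validation loss below using a pandas DataFrame: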

# Create pandas DataFrame

df_history = pd.DataFrame(history.history)

print(df_history)

# Plot using Seaborn

my_plot = sns.lineplot(data=df_history[["loss","val_loss"]])

my_plot.set_xlabel('Epochs')

my_plot.set_ylabel('Loss')

plt.legend(labels=["Training", "Validation"])

plt.title('Training and Validation Loss')

plt.show()

It prints the following table, which is the DataFrame we created from the history:

loss accuracy val_loss val_accuracy

0 0.536215 0.942614 0.098741 0.971689

1 0.081841 0.976357 0.078354 0.978848

2 0.064002 0.978841 0.080637 0.972991

3 0.055695 0.981726 0.064659 0.979987

4 0.054693 0.984371 0.070817 0.983729

5 0.053512 0.985173 0.069099 0.977709

6 0.053916 0.983089 0.068139 0.979662

7 0.048681 0.985093 0.064914 0.977709

8 0.052084 0.982929 0.080508 0.971363

9 0.040484 0.983890 0.111380 0.982590

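And the plot it generates is as follows:

Multi-line plot using Seaborn

By default, Seaborn takes the column labels of the DataFrame and uses them for the legend. In the code above, we replace them with a new label for each line. Moreover, the x-axis of the line plot is taken from the DataFrame's index by default, which in our case is the integers from 0 to 9, as we can see above.

The complete code for producing the plot in Seaborn is as follows: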

from tensorflow.keras.datasets import mnist

from tensorflow.keras import utils

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Prepare for classifier network

epochs = 10

y_train = utils.to_categorical(train_labels)

input_dim = img_length*img_width

# Create a Sequential model

model = Sequential()

# First layer for reshaping input images from 2D to 1D

model.add(Reshape((input_dim, ), input_shape=(img_length, img_width)))

# Dense layer of 8 neurons

model.add(Dense(8, activation='relu'))

# Output layer

model.add(Dense(total_classes, activation='softmax'))

# Compile model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(x_train, y_train, validation_split=0.33, epochs=epochs, batch_size=10, verbose=0)

# Prepare pandas DataFrame

df_history = pd.DataFrame(history.history)

print(df_history)

# Plot loss in seaborn

my_plot = sns.lineplot(data=df_history[["loss","val_loss"]])

my_plot.set_xlabel('Epochs')

my_plot.set_ylabel('Loss')

plt.legend(labels=["Training", "Validation"])

plt.title('Training and Validation Loss')

plt.show()

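As you might expect, if we want precise control over the x- and y-coordinates, we can also pass the x and y arguments together with data in our call to lineplot(), just as in the Seaborn scatter plot example above.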

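Bokeh can also generate multi-line plots, as illustrated in the code below. As we saw in the scatter plot example, we need to provide the x- and y-coordinates explicitly and draw one line at a time. Again, the show() function opens a new browser window to display the plot, and you can interact with it.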

p = figure(title="Training and validation accuracy",

x_axis_label="Epochs", y_axis_label="Accuracy")

epochs_array = np.arange(epochs)

p.line(epochs_array, df_history['accuracy'], legend_label="Training",

color="blue", line_width=2)

p.line(epochs_array, df_history['val_accuracy'], legend_label="Validation",

color="green")

p.legend.click_policy = "hide"

p.legend.location = 'bottom_right'

show(p)

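Multi-line plot using Bokeh. Note the user interaction options shown on the toolbar on the right.

The complete code for making the Bokeh plot is as follows: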

from tensorflow.keras.datasets import mnist

from tensorflow.keras import utils

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape

import numpy as np

import pandas as pd

from bokeh.plotting import figure, show

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Prepare for classifier network

epochs = 10

y_train = utils.to_categorical(train_labels)

input_dim = img_length*img_width

# Create a Sequential model

model = Sequential()

# First layer for reshaping input images from 2D to 1D

model.add(Reshape((input_dim, ), input_shape=(img_length, img_width)))

# Dense layer of 8 neurons

model.add(Dense(8, activation='relu'))

# Output layer

model.add(Dense(total_classes, activation='softmax'))

# Compile model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(x_train, y_train, validation_split=0.33, epochs=epochs, batch_size=10, verbose=0)

# Prepare pandas DataFrame

df_history = pd.DataFrame(history.history)

print(df_history)

# Plot accuracy in Bokeh

p = figure(title="Training and validation accuracy",

x_axis_label="Epochs", y_axis_label="Accuracy")

epochs_array = np.arange(epochs)

p.line(epochs_array, df_history['accuracy'], legend_label="Training",

color="blue", line_width=2)

p.line(epochs_array, df_history['val_accuracy'], legend_label="Validation",

color="green")

p.legend.click_policy = "hide"

p.legend.location = 'bottom_right'

show(p)

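More on Visualization

Each of the tools introduced above has many more functions that let us control the finer details of a visualization. It is important to search their respective documentation to learn the ways you can polish your plots, and equally important to study the example code in that documentation to see how you might make your visualizations better.

Without going into too much detail, here are some ideas you may want to add to your visualizations: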

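- Add auxiliary lines, such as marking the training and validation portions of time series data. The axvline() function from matplotlib can draw a vertical line on a plot for this purpose.

- Add annotations, such as arrows and text labels, to identify key points on the plot. See the annotate() method of matplotlib axes objects.

- Control the transparency level when graphic elements overlap. All the plotting functions introduced above accept an alpha argument, a value between 0 and 1 that sets how opaque the graph is (0 is fully transparent, 1 is fully opaque).

- Show some of the axes on a logarithmic scale if the data are better illustrated that way. This is usually called a log plot or semi-log plot.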
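The ideas in the list above can be combined in one small matplotlib sketch. It uses the rounded loss values from the history table printed earlier in this tutorial and the Agg backend for headless use; marking the epoch with the lowest validation loss is a hypothetical use of axvline() chosen for illustration, not something the tutorial prescribes:

```python
import matplotlib
matplotlib.use("Agg")  # headless backend (assumption)
import matplotlib.pyplot as plt
import numpy as np

# Rounded training/validation loss from the tutorial's history table
loss = np.array([0.536215, 0.081841, 0.064002, 0.055695, 0.054693,
                 0.053512, 0.053916, 0.048681, 0.052084, 0.040484])
val_loss = np.array([0.098741, 0.078354, 0.080637, 0.064659, 0.070817,
                     0.069099, 0.068139, 0.064914, 0.080508, 0.111380])
epochs = np.arange(len(loss))

fig, ax = plt.subplots(figsize=(7, 4))
# alpha < 1 keeps overlapping lines visible through each other
ax.plot(epochs, loss, label="Training", alpha=0.7)
ax.plot(epochs, val_loss, label="Validation", alpha=0.7)

# Auxiliary vertical line at a (hypothetical) epoch of interest
best = int(np.argmin(val_loss))
ax.axvline(best, color="gray", linestyle="--")

# Arrow plus text label pointing at that point
ax.annotate("lowest val_loss", xy=(best, val_loss[best]),
            xytext=(best - 3, 0.3), arrowprops=dict(arrowstyle="->"))

# Log scale on y shows both the large early drop and the later small changes
ax.set_yscale("log")
ax.set_xlabel("Epochs")
ax.set_ylabel("Loss")
ax.legend()
```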

Before we wrap up this post, the following is an example of creating a side-by-side visualization in matplotlib, where one of the plots is created using Seaborn:

from tensorflow.keras.datasets import mnist

from tensorflow.keras import utils

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape

from tensorflow import dtypes, tensordot

from tensorflow import convert_to_tensor, linalg, transpose

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Convert the dataset into a 2D array of shape 18623 x 784

x = convert_to_tensor(np.reshape(x_train, (x_train.shape[0], -1)),

dtype=dtypes.float32)

# Eigen-decomposition from a 784 x 784 matrix

eigenvalues, eigenvectors = linalg.eigh(tensordot(transpose(x), x, axes=1))

# Print the three largest eigenvalues

print('3 largest eigenvalues: ', eigenvalues[-3:])

# Project the data to eigenvectors

x_pca = tensordot(x, eigenvectors, axes=1)

# Prepare for classifier network

epochs = 10

y_train = utils.to_categorical(train_labels)

input_dim = img_length*img_width

# Create a Sequential model

model = Sequential()

# First layer for reshaping input images from 2D to 1D

model.add(Reshape((input_dim, ), input_shape=(img_length, img_width)))

# Dense layer of 8 neurons

model.add(Dense(8, activation='relu'))

# Output layer

model.add(Dense(total_classes, activation='softmax'))

# Compile model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(x_train, y_train, validation_split=0.33, epochs=epochs, batch_size=10, verbose=0)

# Prepare pandas DataFrame

df_history = pd.DataFrame(history.history)

print(df_history)

# Plot side-by-side

fig, ax = plt.subplots(nrows=1, ncols=2, figsize=(15,6))

# left plot

scatter = ax[0].scatter(x_pca[:, -1], x_pca[:, -2], c=train_labels, s=5)

legend_plt = ax[0].legend(*scatter.legend_elements(),

loc="lower left", title="Digits")

ax[0].add_artist(legend_plt)

ax[0].set_title('First Two Dimensions of Projected Data After Applying PCA')

# right plot

my_plot = sns.lineplot(data=df_history[["loss","val_loss"]], ax=ax[1])

my_plot.set_xlabel('Epochs')

my_plot.set_ylabel('Loss')

ax[1].legend(labels=["Training", "Validation"])

ax[1].set_title('Training and Validation Loss')

plt.show()

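Side-by-side visualization created using matplotlib and Seaborn

The equivalent in Bokeh is to create each subplot separately and then specify the layout when we show it: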

from tensorflow.keras.datasets import mnist

from tensorflow.keras import utils

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Reshape

from tensorflow import dtypes, tensordot

from tensorflow import convert_to_tensor, linalg, transpose

import numpy as np

import pandas as pd

from bokeh.plotting import figure, show

from bokeh.layouts import row

# Load dataset

(x_train, train_labels), (_, _) = mnist.load_data()

# Choose only the digits 0, 1, 2

total_classes = 3

ind = np.where(train_labels < total_classes)

x_train, train_labels = x_train[ind], train_labels[ind]

# Verify the shape of training data

total_examples, img_length, img_width = x_train.shape

print('Training data has ', total_examples, 'images')

print('Each image is of size ', img_length, 'x', img_width)

# Convert the dataset into a 2D array of shape 18623 x 784

x = convert_to_tensor(np.reshape(x_train, (x_train.shape[0], -1)),

dtype=dtypes.float32)

# Eigen-decomposition from a 784 x 784 matrix

eigenvalues, eigenvectors = linalg.eigh(tensordot(transpose(x), x, axes=1))

# Print the three largest eigenvalues

print('3 largest eigenvalues: ', eigenvalues[-3:])

# Project the data to eigenvectors

x_pca = tensordot(x, eigenvectors, axes=1)

# Prepare for classifier network

epochs = 10

y_train = utils.to_categorical(train_labels)

input_dim = img_length*img_width

# Create a Sequential model

model = Sequential()

# First layer for reshaping input images from 2D to 1D

model.add(Reshape((input_dim, ), input_shape=(img_length, img_width)))

# Dense layer of 8 neurons

model.add(Dense(8, activation='relu'))

# Output layer

model.add(Dense(total_classes, activation='softmax'))

# Compile model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

history = model.fit(x_train, y_train, validation_split=0.33, epochs=epochs, batch_size=10, verbose=0)

# Prepare pandas DataFrame

df_history = pd.DataFrame(history.history)

print(df_history)

# Create scatter plot in Bokeh

colormap = {0: "red", 1:"green", 2:"blue"}

my_scatter = figure(title="First Two Dimensions of Projected Data After Applying PCA",

x_axis_label="Dimension 1",

y_axis_label="Dimension 2",

width=500, height=400)

for digit in [0, 1, 2]:
    # Select the projected points of one digit and draw them as a separate renderer
    selection = x_pca[train_labels == digit]
    my_scatter.scatter(selection[:,-1].numpy(), selection[:,-2].numpy(),
                       color=colormap[digit], size=5, alpha=0.5,
                       legend_label="Digit "+str(digit))

my_scatter.legend.click_policy = "hide"

# Plot accuracy in Bokeh

p = figure(title="Training and validation accuracy",

x_axis_label="Epochs", y_axis_label="Accuracy",

width=500, height=400)

epochs_array = np.arange(epochs)

p.line(epochs_array, df_history['accuracy'], legend_label="Training",

color="blue", line_width=2)

p.line(epochs_array, df_history['val_accuracy'], legend_label="Validation",

color="green")

p.legend.click_policy = "hide"

p.legend.location = 'bottom_right'

show(row(my_scatter, p))

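Side-by-side plots created in Bokeh

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Think Python: How to Think Like a Computer Scientist, by Allen B. Downey

- Programming in Python 3: A Complete Introduction to the Python Language, by Mark Summerfield

- Python Programming: An Introduction to Computer Science, by John Zelle

- Python for Data Analysis, 2nd edition, by Wes McKinney

Articles

API Reference

- matplotlib.pyplot.scatter

- matplotlib.pyplot.plot

- seaborn.scatterplot

- seaborn.lineplot

- Plotting with basic glyphs in Bokeh

- Bokeh scatter plots

- Bokeh line graphs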

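Summary

In this tutorial, you discovered various options for data visualization in Python.

Specifically, you learned:

- How to create subplots in different rows and columns

- How to render images using matplotlib

- How to generate 2D and 3D scatter plots using matplotlib

- How to create 2D plots using Seaborn and Bokeh

- How to create multi-line plots using matplotlib, Seaborn, and Bokeh

Do you have any questions about the data visualization options discussed in this post? Ask your questions in the comments below, and I will do my best to answer.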


The post Data Visualization in Python with matplotlib, Seaborn and Bokeh appeared first on Machine Learning Mastery.