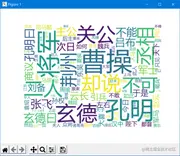

Scraping the novel Romance of the Three Kingdoms (《三国演义》) with Python, counting word frequencies, and generating a word cloud

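Notes:

- The regular expression that extracts the chapter text has to match across several lines, so the DOTALL flag (?s) must be enabled. With it, "." also matches newline characters. (Multiline mode, (?m), is a different flag that only changes how ^ and $ behave.)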

book_content_re = re.compile(r'(?s)<div.*?id="htmlContent">(.*?)</div>')
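To see what the flag changes, here is a minimal sketch that runs the same pattern with and without (?s). The `sample_html` string is a made-up fragment for illustration, not the real page.

```python
import re

# Hypothetical fragment: the chapter text spans two lines (note the \r\n).
sample_html = '<div class="x" id="htmlContent">line one<br />\r\nline two</div>'

with_dotall = re.compile(r'(?s)<div.*?id="htmlContent">(.*?)</div>')
without_dotall = re.compile(r'<div.*?id="htmlContent">(.*?)</div>')

# With (?s), "." can cross the newline, so the whole body is captured.
matched = with_dotall.findall(sample_html)
# Without it, "." stops at the newline and the pattern finds nothing.
not_matched = without_dotall.findall(sample_html)

print(matched)
print(not_matched)
```

The same effect can be had by passing `re.DOTALL` (or `re.S`) as the second argument to `re.compile` instead of writing the inline flag.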

Source code (practice)

import re
import codecs
from collections import Counter

import requests
import jieba
import jieba.analyse
import wordcloud
import matplotlib.pyplot as plt

url = 'http://www.janpn.com/book/sanguoyanyi2.html'

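def get_content(url):
    # Download the table-of-contents page.
    txt = requests.get(url).content.decode('utf-8')
    # Compiled but not used further below.
    book_title = re.compile(r'<h3 class="bookTitle">(.+)</h3>')
    # Each match is a (chapter_url, chapter_title) tuple.
    book_chapters_re = re.compile(r'<li><a href="(.*?\.html)".*?>(.+?)</a></li>')
    book_chapters = book_chapters_re.findall(txt)
    # (?s) lets "." match newlines, because the chapter text spans several lines.
    book_content_re = re.compile(r'(?s)<div.*?id="htmlContent">(.*?)</div>')
    m3 = re.compile(r'\r\n')
    m4 = re.compile(r' ')
    m5 = re.compile(r'<br />')
    print(book_chapters)
    # Write with an explicit encoding that matches the 'gbk' used when the file is read back.
    with open('三国演义.txt', 'a', encoding='gbk') as f:
        for i in book_chapters:
            print([i[0], i[1]])
            print(i[0])
            i_url = i[0]
            print("Downloading ---> %s" % i[1])
            content_html = requests.get(i_url).content.decode('utf-8')
            content = book_content_re.findall(content_html)[0]
            print(content)
            content = m3.sub('', content)
            # Remove <br /> before stripping spaces: the tag itself contains a space,
            # so stripping spaces first would leave "<br/>" and m5 would never match.
            content = m5.sub('', content)
            content = m4.sub('', content)
            print(content)
            f.write('\n' + i[1] + '\n')
            f.write(content)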
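The cleanup step can be tried in isolation. A minimal sketch, assuming a hypothetical helper `clean_chapter` (not part of the script) and a made-up sample string. It removes `<br />` before spaces because the tag itself contains a space.

```python
def clean_chapter(raw_text):
    """Strip CRLF line breaks, <br /> tags and spaces from one chapter's text."""
    cleaned = raw_text.replace('\r\n', '')
    cleaned = cleaned.replace('<br />', '')  # must run before spaces are removed
    cleaned = cleaned.replace(' ', '')
    return cleaned

# Made-up sample that mimics the shape of the scraped chapter text.
sample = '  第一回<br />\r\n  宴桃园豪杰三结义<br />'
cleaned_sample = clean_chapter(sample)
print(cleaned_sample)
```

Plain `str.replace` is enough here because all three patterns are fixed strings; compiled regular expressions only pay off when the pattern needs wildcards.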

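def stopwordlist():
    # Iterate over the file itself to get one stopword per line
    # (readline() would yield only the characters of the first line).
    with open('../结巴分词/hit_stopwords.txt', encoding='UTF-8') as f:
        stopwords = [line.strip() for line in f]
    return stopwords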
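The difference between `readline()` and iterating over the file's lines is easy to miss when loading a stopword list. The sketch below shows both on a tiny temporary file; the file contents are made up for illustration.

```python
import os
import tempfile

# Write a tiny, made-up stopword file with one word per line.
with tempfile.NamedTemporaryFile('w', suffix='.txt', delete=False,
                                 encoding='utf-8') as tmp:
    tmp.write('的\n了\n之\n')
    path = tmp.name

# readline() returns the first line as a string, so the loop walks its characters.
with open(path, encoding='utf-8') as f:
    per_char = [line.strip() for line in f.readline()]

# Iterating over the file object walks the lines, which is what a stopword list needs.
with open(path, encoding='utf-8') as f:
    per_line = [line.strip() for line in f]

os.remove(path)
print(per_char)
print(per_line)
```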

def seg_depart(sentence):
    print('Segmenting text')
    sentence_depart = jieba.cut(sentence.strip())
    # A set makes the membership test below fast for a whole novel.
    stopwords = set(stopwordlist())
    outstr = ''
    for word in sentence_depart:
        if word not in stopwords:
            if word != '\t':
                outstr += word
                outstr += ' '
    return outstr
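The filtering logic can be checked without jieba by feeding it an already segmented list. A minimal sketch, assuming a hypothetical helper `filter_tokens` and made-up tokens. Unlike `seg_depart`, it joins with single spaces and leaves no trailing space.

```python
def filter_tokens(tokens, stopwords):
    """Drop stopwords and tab tokens, then join the rest with spaces."""
    stopword_set = set(stopwords)  # constant-time lookups
    kept = [t for t in tokens if t not in stopword_set and t != '\t']
    return ' '.join(kept)

# Made-up tokens standing in for the output of a segmenter.
tokens = ['曹操', '的', '大军', '\t', '了']
filtered = filter_tokens(tokens, ['的', '了'])
print(filtered)
```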

filepath = '三国演义.txt'

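def create_word_cloud(filepath):
    with codecs.open(filepath, 'r', 'gbk') as f:
        content = f.read()
    # Remove stopwords, then segment again to build a space-separated string.
    content = seg_depart(content)
    wordlist = jieba.cut(content)
    wl = ' '.join(wordlist)
    print(wl)
    wc = wordcloud.WordCloud(
        background_color='white',
        max_words=100,
        # Raw string so the backslashes in the Windows path are taken literally.
        # A font with Chinese glyphs is needed to render the words.
        font_path=r'C:\Windows\Fonts\msyh.ttc',
        height=1200,
        width=1600,
        max_font_size=300,
        random_state=50
    )
    myword = wc.generate(wl)
    plt.imshow(myword)
    plt.axis('off')
    plt.show()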

create_word_cloud(filepath)

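def count_from_file(filepath, top_limit=0):
    with codecs.open(filepath, 'r', 'gbk') as f:
        content = f.read()
    # Collapse runs of whitespace and runs of dots into single spaces.
    content = re.sub(r'\s+', r' ', content)
    content = re.sub(r'\.+', r' ', content)
    content = seg_depart(content)
    # Pass top_limit through so the caller's value is respected.
    return count_from_str(content, top_limit)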

def count_from_str(content, top_limit=0):
    if top_limit <= 0:
        top_limit = 100
    # Extract up to 100 keywords, then count only those words.
    tags = jieba.analyse.extract_tags(content, topK=100)
    print("Keywords:")
    print(tags)
    tag_set = set(tags)
    words = jieba.cut(content)
    counter = Counter()
    for word in words:
        if word in tag_set:
            counter[word] += 1
    return counter.most_common(top_limit)
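The counting step relies only on `collections.Counter`, so it can be illustrated without jieba. A minimal sketch, assuming a hypothetical helper `count_keywords` and a made-up word list in place of the segmented novel:

```python
from collections import Counter

def count_keywords(words, keywords, top_limit=100):
    """Count only the words that appear in the keyword list."""
    keyword_set = set(keywords)
    counter = Counter(w for w in words if w in keyword_set)
    # most_common returns (word, count) pairs, highest count first.
    return counter.most_common(top_limit)

# Made-up segmented text; '曰' is not a keyword and is therefore ignored.
words = ['曹操', '刘备', '曹操', '曰', '关羽', '曹操', '刘备']
top = count_keywords(words, ['曹操', '刘备', '关羽'], top_limit=2)
print(top)
```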

print("Word frequency statistics")

result = count_from_file(filepath)

print(result)

def test(url):
    book_content_re = re.compile(r'(?s)<div.*?id="htmlContent">(.*?)</div>')
    content_html = requests.get(url).content.decode('gbk')
    print(content_html)
    content = book_content_re.findall(content_html)
    print(content)