Preparation (1 master, 2 nodes)

hostnamectl set-hostname master   # run on each host with its own name: master, node1 or node2

cat >> /etc/hosts << EOF
10.211.55.3 master
10.211.55.5 node1
10.211.55.6 node2
EOF
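If the `cat >> /etc/hosts` step runs twice, it appends duplicate entries. Below is a minimal sketch of an idempotent variant that only appends a mapping when no line already maps that IP to that name. The function name `add_host_entry` is hypothetical. It takes the file as an argument, so you can try it on a scratch copy before touching `/etc/hosts`.

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless a line already has IP as its first field
# and NAME among the remaining fields (hostname aliases).
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # awk exits 0 only when a matching line is found
    if ! awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root), safe to repeat:
# for entry in "10.211.55.3 master" "10.211.55.5 node1" "10.211.55.6 node2"; do
#     add_host_entry /etc/hosts $entry   # unquoted on purpose: splits into IP and NAME
# done
```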

swapoff -a

sed -i.bak '/swap/s/^/#/' /etc/fstab
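`sed '/swap/s/^/#/'` has two problems: it adds another `#` every time it runs, and it matches any line that merely mentions "swap". Below is a sketch of a narrower, repeatable alternative. It assumes the usual fstab layout, where the third field is the filesystem type, and the function name `comment_out_swap` is hypothetical.

```bash
#!/usr/bin/env bash
# comment_out_swap FSTAB  (hypothetical helper)
# Comments out active entries whose filesystem type (3rd field) is "swap".
# Lines that already start with "#" are printed unchanged, so a second run is a no-op.
comment_out_swap() {
    local fstab=$1
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$fstab.tmp" \
        && cat "$fstab.tmp" > "$fstab" \
        && rm -f "$fstab.tmp"   # cat > keeps the original file's owner and permissions
}

# Usage (as root): swapoff -a && cp /etc/fstab /etc/fstab.bak && comment_out_swap /etc/fstab
```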

yum install -y wget

mkdir /opt/centos-yum.bak

mv /etc/yum.repos.d/* /opt/centos-yum.bak/

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y net-tools vim gcc bash-completion

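# The kubectl completion lines below need kubectl, which is only installed in the Kubernetes step; run them after that step
echo 'source <(kubectl completion bash)' >>~/.bashrc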

kubectl completion bash >/etc/bash_completion.d/kubectl

source /usr/share/bash-completion/bash_completion

systemctl stop firewalld && systemctl disable firewalld

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

yum clean all && yum -y makecache

Install Docker

yum list installed | grep docker

yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

curl -sSL https://get.daocloud.io/docker | sh && systemctl start docker && systemctl enable docker

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://6v5jzbiz.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF

sudo systemctl daemon-reload && sudo systemctl restart docker
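The `exec-opts` entry matters for the following reason. From kubeadm v1.22 on, kubeadm defaults the kubelet's `cgroupDriver` to `systemd` when it is not set explicitly, and Docker has to use the same driver. Below is a small sketch that reads the configured driver back out of a `daemon.json`. It assumes the option is written as `native.cgroupdriver=<name>` as above, and the function name is hypothetical. On a running host, `docker info --format '{{.CgroupDriver}}'` reports the value Docker actually uses.

```bash
#!/usr/bin/env bash
# cgroup_driver_from_daemon_json FILE  (hypothetical helper)
# Prints the native.cgroupdriver value found in FILE; returns 1 if the option is absent.
cgroup_driver_from_daemon_json() {
    local value
    # grep -o prints only the matching "native.cgroupdriver=..." text; sed strips the key
    value=$(grep -o 'native\.cgroupdriver=[A-Za-z0-9_-]*' "$1" | head -n 1 | sed 's/^native\.cgroupdriver=//')
    [ -n "$value" ] || return 1
    printf '%s\n' "$value"
}

# Usage:
# [ "$(cgroup_driver_from_daemon_json /etc/docker/daemon.json)" = systemd ] || echo "set native.cgroupdriver=systemd"
```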

Install Kubernetes (run on all nodes)

yum list kubelet --showduplicates | sort -r

yum install -y kubelet-1.23.0 kubeadm-1.23.0 kubectl-1.23.0   # pin to v1.23.0 to match the images and kubeadm config below

systemctl enable kubelet && systemctl start kubelet

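vi k8s.sh

#!/bin/bash
# Pull the control-plane images from the Aliyun mirror and retag them with the names kubeadm expects
url=registry.cn-hangzhou.aliyuncs.com/google_containers
version=v1.23.0
for image in $(kubeadm config images list --kubernetes-version=$version); do
    name=${image##*/}            # last path component, e.g. coredns:v1.8.6 from k8s.gcr.io/coredns/coredns:v1.8.6
    docker pull $url/$name
    docker tag $url/$name $image  # retag with the full upstream name
    docker rmi -f $url/$name
done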

chmod 755 k8s.sh

./k8s.sh

docker images
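The retag step in `k8s.sh` depends on one mapping. The Aliyun `google_containers` mirror stores each image flat (repository name plus tag). kubeadm, however, expects the full upstream name, including nested paths such as `k8s.gcr.io/coredns/coredns:v1.8.6`. Splitting that name on `/` and taking the second field gives just `coredns`, without its tag. Below is a minimal sketch of the mapping as a testable function. The function name is hypothetical, and the flat layout of the mirror is an assumption carried over from the script.

```bash
#!/usr/bin/env bash
# mirror_image_for IMAGE [MIRROR]  (hypothetical helper)
# Maps an upstream image name to the flat mirror name by keeping only the last path component.
mirror_image_for() {
    local image=$1
    local mirror=${2:-registry.cn-hangzhou.aliyuncs.com/google_containers}
    printf '%s/%s\n' "$mirror" "${image##*/}"
}

# Usage (needs kubeadm and docker):
# for image in $(kubeadm config images list --kubernetes-version=v1.23.0); do
#     src=$(mirror_image_for "$image")
#     docker pull "$src" && docker tag "$src" "$image"
# done
```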

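Configure the kernel modules (run on the master; the worker nodes need the same bridge and IP-forwarding settings before they join)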

lsmod |grep br_netfilter

cat > /etc/rc.sysinit << 'EOF'
#!/bin/bash
for file in /etc/sysconfig/modules/*.modules ; do
    [ -x $file ] && $file
done
EOF

cat > /etc/sysconfig/modules/br_netfilter.modules << EOF
modprobe br_netfilter
EOF

chmod 755 /etc/sysconfig/modules/br_netfilter.modules

# Load the module now as well; the file above is only run at boot
modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl -p /etc/sysctl.d/k8s.conf
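A `sysctl.d` file takes one `key = value` pair per line. If two settings are pasted onto one line, they are not parsed as two settings. Below is a sketch of a checker that first flags any line containing more than one `=`, then verifies that each required key is set to 1. The function name `check_sysctl_conf` is hypothetical.

```bash
#!/usr/bin/env bash
# check_sysctl_conf FILE KEY...  (hypothetical helper)
# Fails if a non-comment line holds more than one "=", or if any KEY is not set to 1.
check_sysctl_conf() {
    local file=$1; shift
    # Two "=" on one line means two settings were merged together
    if grep -Ev '^[[:space:]]*([#;]|$)' "$file" | grep -q '=.*='; then
        echo "$file: more than one setting on a line" >&2
        return 1
    fi
    local key
    for key in "$@"; do
        # Split on "=", strip spaces/tabs, and look for KEY with value 1
        if ! awk -F= -v k="$key" '
            { gsub(/[ \t]+/, "", $1); gsub(/[ \t]+/, "", $2) }
            $1 == k && $2 == "1" { found = 1 }
            END { exit !found }' "$file"; then
            echo "$file: $key is not set to 1" >&2
            return 1
        fi
    done
}

# Usage: check_sysctl_conf /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables
```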

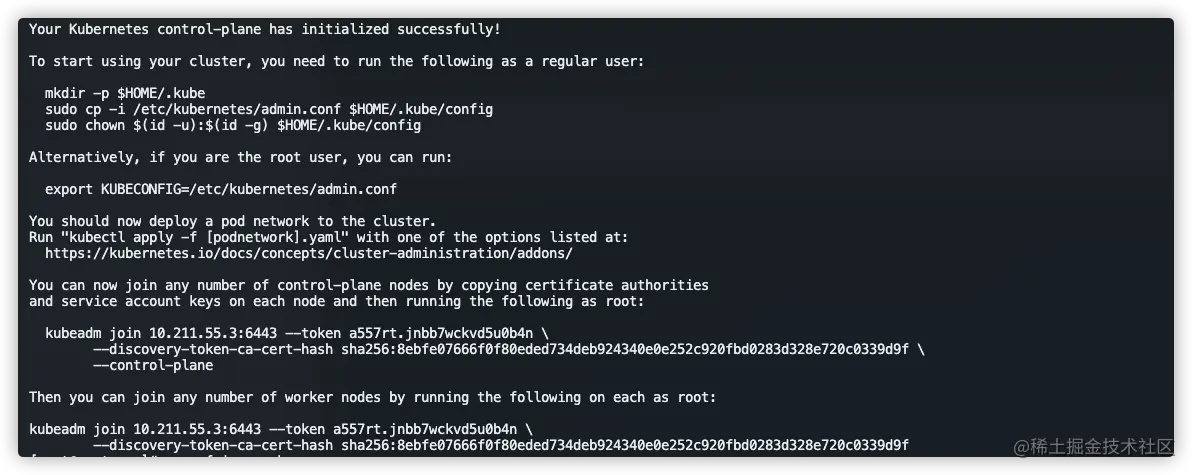

Initialize the master

kubeadm config print init-defaults > init.default.yaml   # full default configuration, for reference

vi kubeadm.conf.yaml

apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.23.0
apiServer:
  certSANs:
  - master
  - node1
  - node2
  - 10.211.55.3
  - 10.211.55.5
  - 10.211.55.6
controlPlaneEndpoint: "10.211.55.3:6443"
networking:
  # Must not overlap the Service network (default serviceSubnet 10.96.0.0/12)
  # and must match CALICO_IPV4POOL_CIDR in calico.yaml
  podSubnet: "10.244.0.0/16"
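`podSubnet` must not overlap the Service network, which kubeadm sets to `10.96.0.0/12` by default (`networking.serviceSubnet`). Using `10.96.0.0/12` as the pod subnet would collide with it exactly. Below is a sketch of an IPv4 overlap check in plain bash arithmetic. The function names are hypothetical, and only IPv4 CIDRs are handled.

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D: dotted-quad IPv4 address -> 32-bit integer (10# forces base 10)
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# cidr_range A.B.C.D/N: prints the first and last address of the block as integers
cidr_range() {
    local ip=${1%/*} bits=${1#*/}
    local n mask start end
    n=$(ip_to_int "$ip")
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    start=$(( n & mask ))
    end=$(( start | (~mask & 0xFFFFFFFF) ))
    echo "$start $end"
}

# cidr_overlap CIDR1 CIDR2: exit status 0 if the two blocks share any address
cidr_overlap() {
    local a1 a2 b1 b2
    read -r a1 a2 <<< "$(cidr_range "$1")"
    read -r b1 b2 <<< "$(cidr_range "$2")"
    # Two ranges overlap when each one starts before the other ends
    (( a1 <= b2 && b1 <= a2 ))
}

# Usage: cidr_overlap 10.244.0.0/16 10.96.0.0/12 && echo "podSubnet overlaps serviceSubnet"
```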

echo "1" > /proc/sys/net/ipv4/ip_forward && service network restart

kubeadm init --config=kubeadm.conf.yaml

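# If the preflight checks report that /proc/sys/net/bridge/bridge-nf-call-iptables or /proc/sys/net/ipv4/ip_forward "contents are not set to 1", set them and rerun kubeadm init:

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

echo "1" >/proc/sys/net/ipv4/ip_forward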

# To start over after a failed init, reset the node and remove the old kubeconfig:

kubeadm reset

rm -rf $HOME/.kube/config

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

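# kubeadm init ends by printing the full join command (kubeadm join 10.211.55.3:6443 --token ... --discovery-token-ca-cert-hash sha256:...); keep it for the worker nodes. If it is lost, print a new one on the master with: kubeadm token create --print-join-command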
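The join command printed at the end of `kubeadm init` is wrapped over several lines with trailing backslashes. When `controlPlaneEndpoint` is set, a `--control-plane` variant is printed before the worker-node command. Below is a minimal sketch that recovers the worker command as a single line. It assumes the init output was saved to a file, for example with `kubeadm init --config=kubeadm.conf.yaml | tee kubeadm-init.log` (the log file name and the function name are hypothetical). It relies on GNU sed.

```bash
#!/usr/bin/env bash
# extract_join_cmd  (hypothetical helper): reads kubeadm init output on stdin
extract_join_cmd() {
    # 1) sed: while a line ends with "\", append the next line and drop "\" + newline + indentation
    # 2) grep: keep only the rejoined "kubeadm join" commands
    # 3) tail: the last one is the worker-node command (the control-plane variant comes first)
    # 4) sed: trim leading/trailing whitespace
    sed -e ':a' -e '/\\$/N' -e 's/\\\n[[:space:]]*//' -e 'ta' \
        | grep -E '^[[:space:]]*kubeadm join' \
        | tail -n 1 \
        | sed -E 's/^[[:space:]]+//; s/[[:space:]]+$//'
}

# Usage: extract_join_cmd < kubeadm-init.log > join.sh
```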

Install the network plugin (run on the master)

# Calico (recommended)

wget https://docs.projectcalico.org/v3.20/manifests/calico.yaml

# Around line 3878: uncomment CALICO_IPV4POOL_CIDR and set it to the pod network CIDR defined earlier for kubeadm init (podSubnet in kubeadm.conf.yaml)

vim calico.yaml +3878

...
- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"
...

kubectl apply -f calico.yaml
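The manual `vim` edit can also be scripted. Below is a sketch using GNU `sed -i` that uncomments the `CALICO_IPV4POOL_CIDR` entry and sets its value in one pass. It assumes the manifest ships the two lines commented out as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "<cidr>"`. Check this against your copy of calico.yaml, since the exact text is an assumption. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# set_calico_cidr FILE CIDR  (hypothetical helper, GNU sed)
# Uncomments the CALICO_IPV4POOL_CIDR entry and replaces the value on the following line.
set_calico_cidr() {
    local file=$1 cidr=$2
    sed -i "/# - name: CALICO_IPV4POOL_CIDR/{
s|# - name:|- name:|
n
s|#   value: \"[0-9./]*\"|  value: \"${cidr}\"|
}" "$file"
}

# Usage: set_calico_cidr calico.yaml 10.244.0.0/16 && grep -A1 CALICO_IPV4POOL_CIDR calico.yaml
```

Replacing `# ` with nothing and `#   ` with two spaces keeps `name:` and `value:` aligned in the same column.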

# flannel (not recommended; an alternative to Calico, so install only one of the two)

# Download kube-flannel.yml yourself: https://github.com/flannel-io/flannel/blob/master/Documentation/kube-flannel.yml

# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

Join the nodes to the cluster (run on every node)

# If the node has already joined another cluster, leave that cluster first

kubeadm reset

# Join the cluster (use the command printed on the last lines of the master's kubeadm init output)

kubeadm join 10.211.55.3:6443 --token 2inyud.ly9di8k2cb1ofqr5 --discovery-token-ca-cert-hash sha256:b6252cb28e59516c96ac1fa3aac6a3b00448f8d83b62723f830b79d64bfea509

# Check the nodes from the master

kubectl get nodes

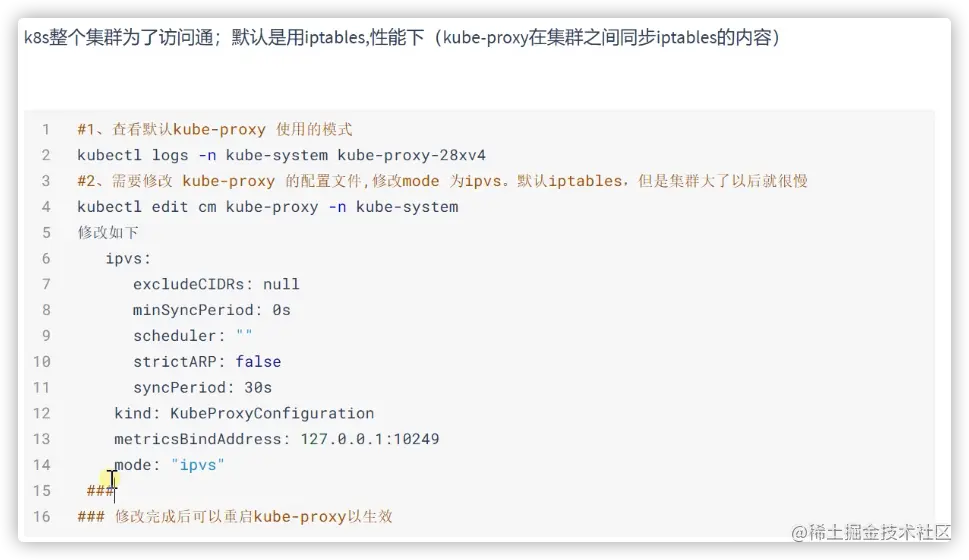

Change the kube-proxy mode

Verification

kubectl get nodes

kubectl get cs

kubectl get po -o wide -n kube-system
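Nodes typically report `NotReady` until the network plugin's pods are running. The small sketch below turns the `kubectl get nodes` check into a pass/fail test that a script can wait on. The function name is hypothetical. Cordoned nodes, which show `Ready,SchedulingDisabled`, are deliberately reported as not ready.

```bash
#!/usr/bin/env bash
# all_nodes_ready  (hypothetical helper): reads `kubectl get nodes --no-headers` on stdin,
# prints every node whose STATUS column is not exactly "Ready", and fails if any
# (or if the input is empty).
all_nodes_ready() {
    awk '$2 != "Ready" { print "not ready: " $1; bad = 1 }
         END { exit (bad || NR == 0) }'
}

# Usage: until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done
```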

Install the Dashboard

# Download recommended.yaml for the chosen release from https://github.com/kubernetes/dashboard/releases

# Replace the image registry

sed -i 's#kubernetesui#registry.cn-hangzhou.aliyuncs.com/loong576#g' recommended.yaml

# Pull the Dashboard image

docker pull kubernetesui/dashboard:v2.0.5

# Allow access from outside the cluster (NodePort 30001)

sed -i '/targetPort: 8443/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' recommended.yaml
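The `sed .../a` command appends two lines after `targetPort: 8443`: a `nodePort` and `type: NodePort`. Together they turn the Dashboard Service into a NodePort Service. Below is a sketch of the same edit in awk. It copies the indentation of the `targetPort` line instead of hard-coding six escaped spaces, which makes the result easier to check. It assumes the Service's `spec` keys are indented by two spaces, as in recommended.yaml. The function name and its optional port argument are hypothetical, and it writes to stdout rather than editing in place.

```bash
#!/usr/bin/env bash
# expose_nodeport FILE [PORT]  (hypothetical helper)
# Prints FILE with "nodePort: PORT" (same indent as targetPort) and "  type: NodePort"
# inserted after the "targetPort: 8443" line.
expose_nodeport() {
    awk -v port="${2:-30001}" '
        { print }
        $1 == "targetPort:" && $2 == "8443" {
            match($0, /^ */)                      # leading spaces of the targetPort line
            indent = substr($0, 1, RLENGTH)
            print indent "nodePort: " port
            print "  type: NodePort"              # assumes spec keys use a 2-space indent
        }' "$1"
}

# Usage: expose_nodeport recommended.yaml > recommended-nodeport.yaml
```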

kubectl apply -f recommended.yaml

kubectl get pods -n kubernetes-dashboard

kubectl get pods,svc -n kubernetes-dashboard

kubectl get all -n kubernetes-dashboard

# Create a service account

kubectl create serviceaccount dashboard-admin -n kube-system

# Bind it to the cluster-admin role

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

# Get the service account's token

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

# View the token (describe matches the dashboard-admin-token-xxxxx secret by name prefix)

kubectl describe secrets -n kube-system dashboard-admin

# Retrieve the dashboard-admin token again (prints only the token value)

kubectl describe secret -n kube-system $(kubectl get secret -n kube-system | grep dashboard-admin-token | awk '{print $1}') | grep '^token:' | awk '{print $2}'
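For a service-account token secret, filtering `kubectl describe secret` output with a plain `grep token` also matches the `Name:` and `Type:` lines, because both contain "token". Below is a small sketch that keeps only the value of the `token:` field. The function name is hypothetical. A jsonpath alternative is `kubectl -n kube-system get secret <name> -o jsonpath='{.data.token}' | base64 -d`.

```bash
#!/usr/bin/env bash
# token_from_describe  (hypothetical helper): reads `kubectl describe secret` output on stdin
# and prints the value of the "token:" field only.
token_from_describe() {
    awk '$1 == "token:" { print $2 }'
}

# Usage:
# kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret -o name | grep dashboard-admin-token | cut -d/ -f2)" \
#     | token_from_describe
```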