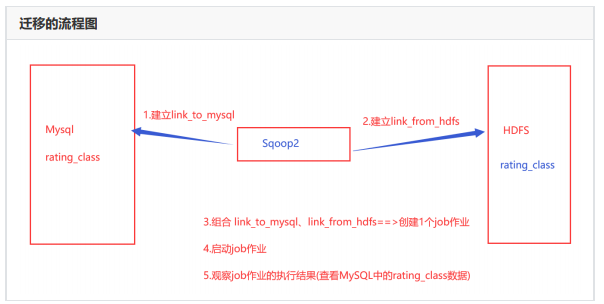

Sqoop2数据迁移

把分析完的结果迁移到mysql数据库里

sqoop是数据迁移工具,可以双向迁移,可以把大数据集群里的数据迁出到关系型数据库里,也可以把关系型数据库里的数据迁移到到大数据集群里。

是关系型数据库和大数据集群之前数据迁入迁出工具。

1.依赖环境

- 操作系统:centos8

- 大数据环境:hdfs,hadoop-3.2.1

- 数据库:MySQL-8.0.19

- Sqoop2版本:1.99.7蛇和新技术学习

- Sqoop1版本:1.4.7,稳定版本,用于生产环境

2.服务启动

-

hdfs服务 我们的数据在hive上面,归根到底就是在hdfs上面

我们要把这里的数据迁出去

- yarn服务 迁移不是剪切哈,底层默认基于mapreduce,n多个并发,reduce规约,要开着资源管理的服务

- sqoop2-server服务

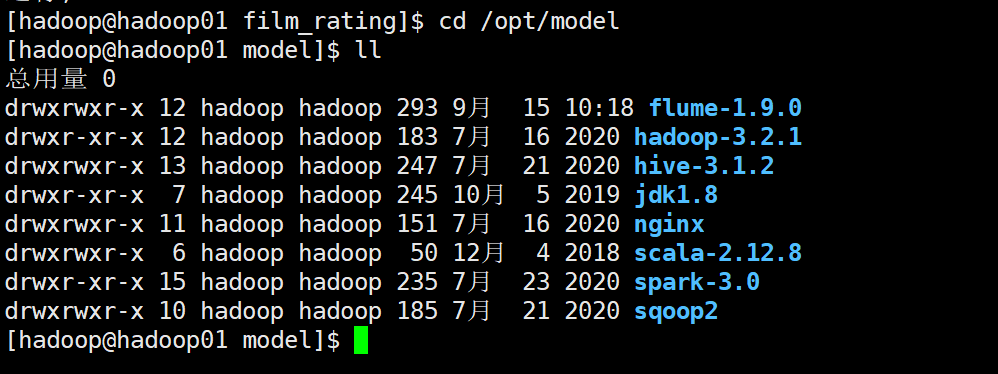

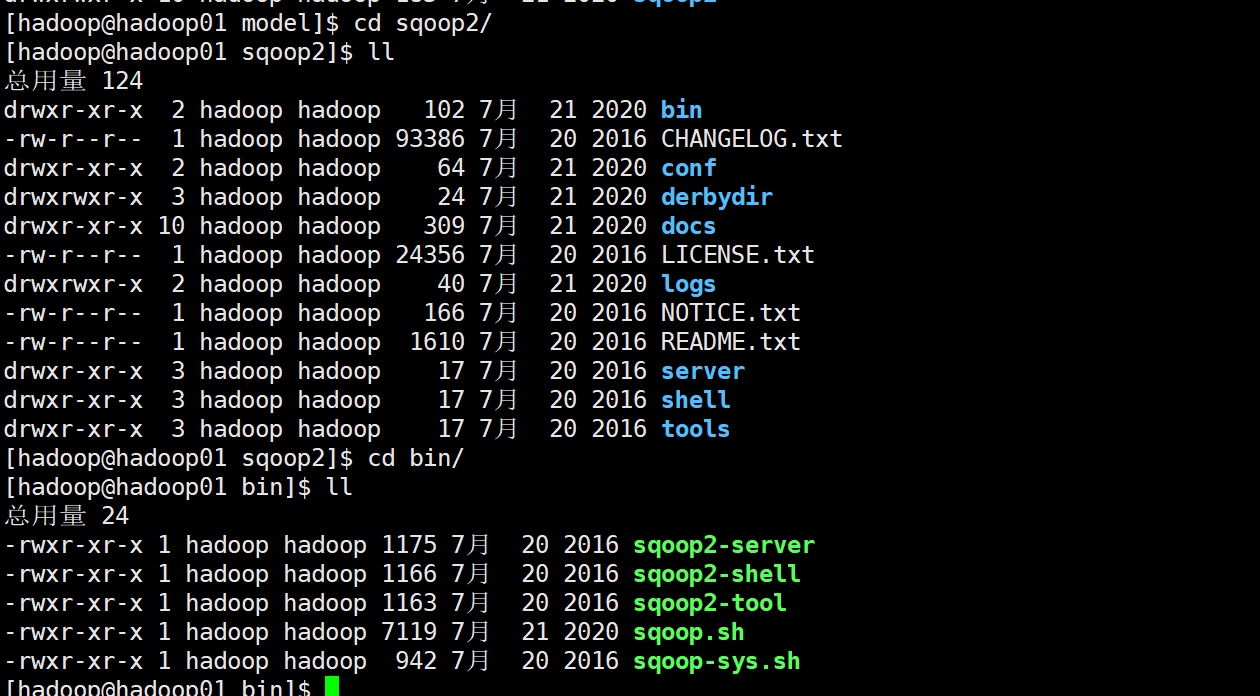

sqoop2依然在这个文件夹

sqoop2-server:启动服务

sqoop2-shell:进入sqoop2客户端

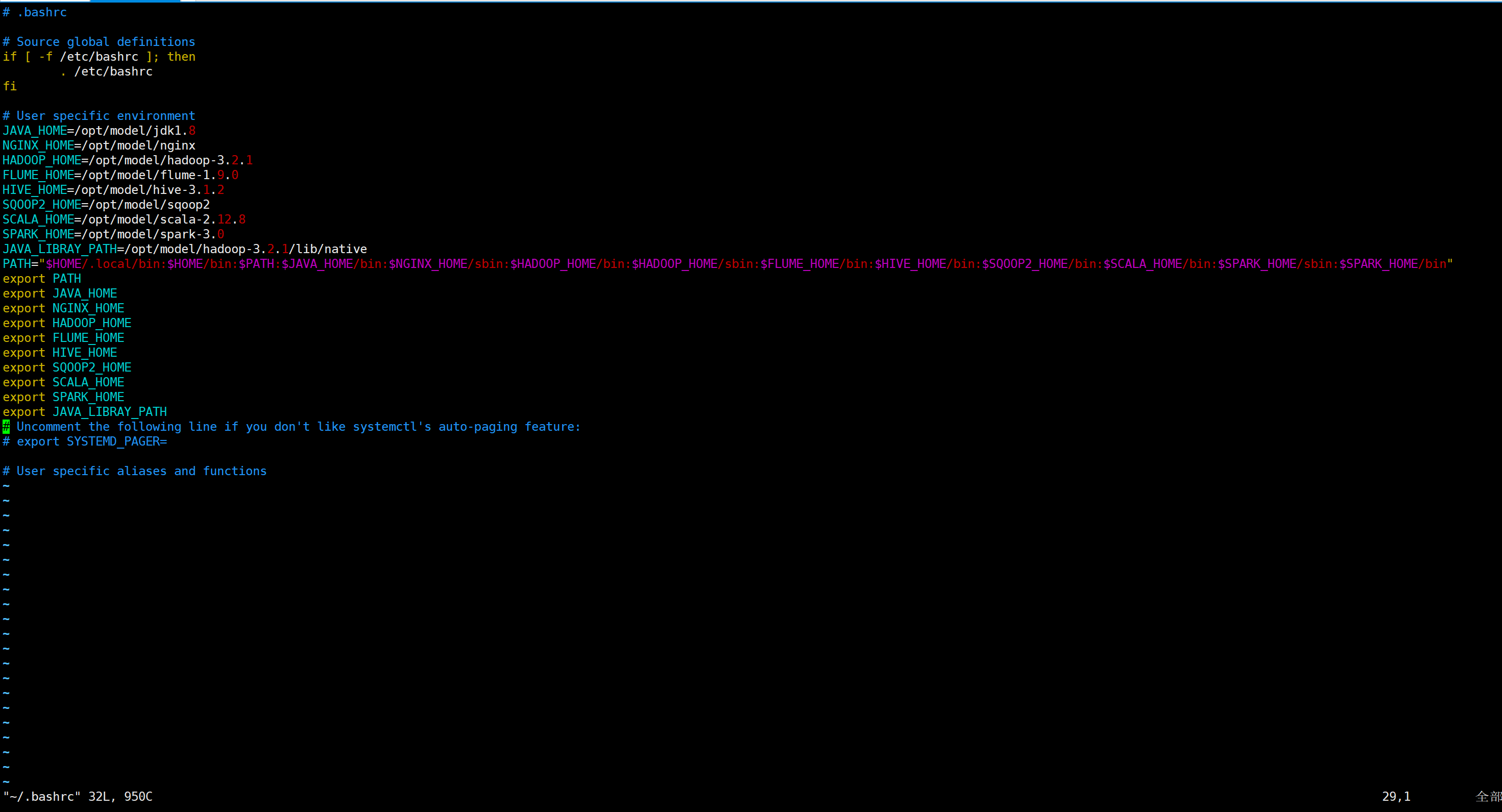

这些命令之所以能够直接使用,是因为配置了环境变量

[hadoop@hadoop01 sqoop2]$ vi ~/.bashrc

说法一:

~/是进入当前用户的主目录。比如我用的用户名是aaa

那么命令

cd ~/ 就进入了/home/aaa 目录。

.bashrc 是进入.bashrc文件夹,就是用户目录下的名字是.bashrc的目录。

2.1 启动服务进程

2.2 启动服务进程

[hadoop@hadoop01 ~]$ sqoop2-server start #启动服务

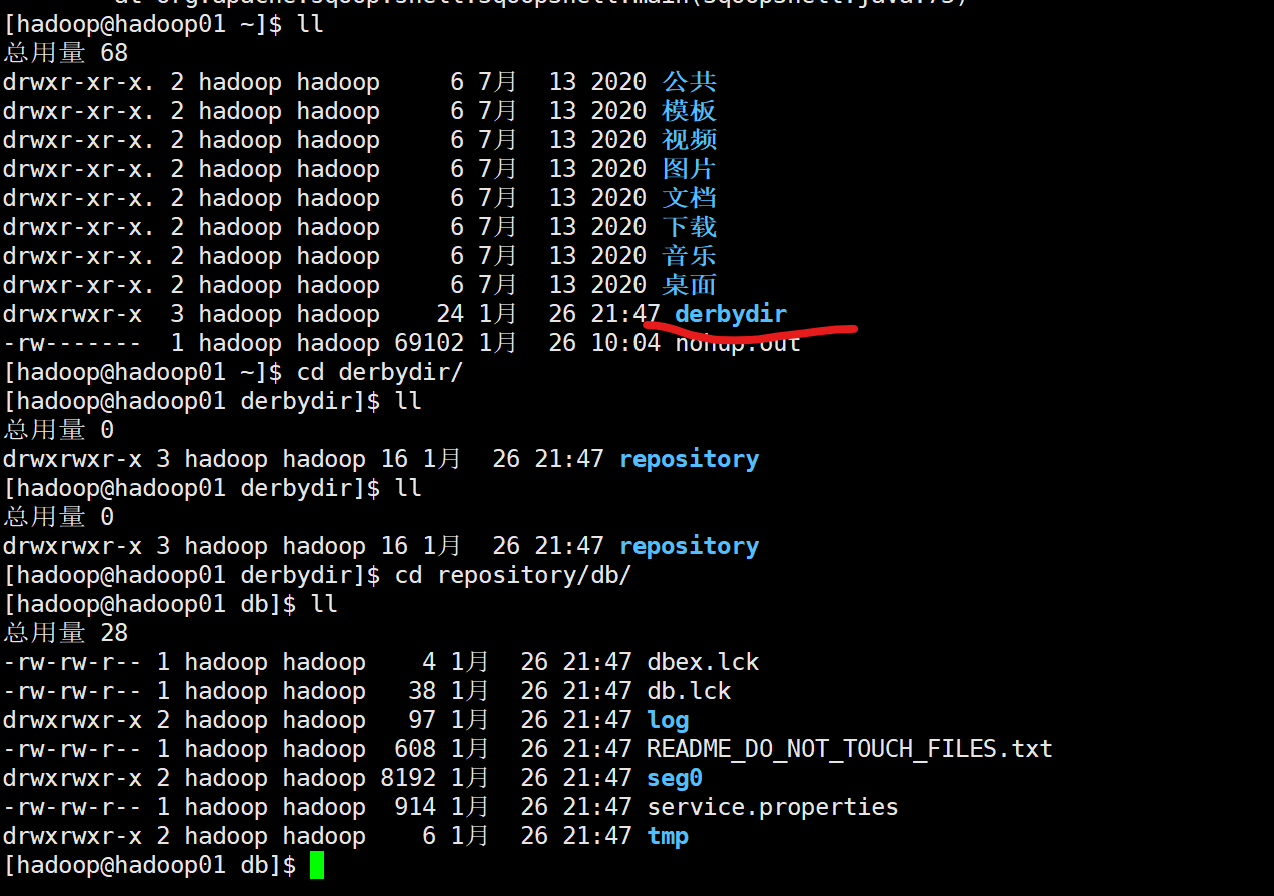

在哪里启动就会在哪里产生一个derbydir的文件夹【有点像clone git仓库?】

这次在哪里启动,下次还在哪里启动就行

[hadoop@hadoop01 data]$ sqoop2-server stop #停止Sqoop2-server

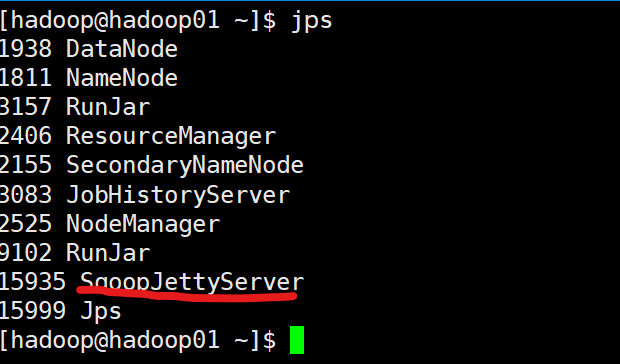

检查进程:

没问题

2.2启动sqoop2客户端

以及启动了服务端,要在客户端界面才能实现数据迁移

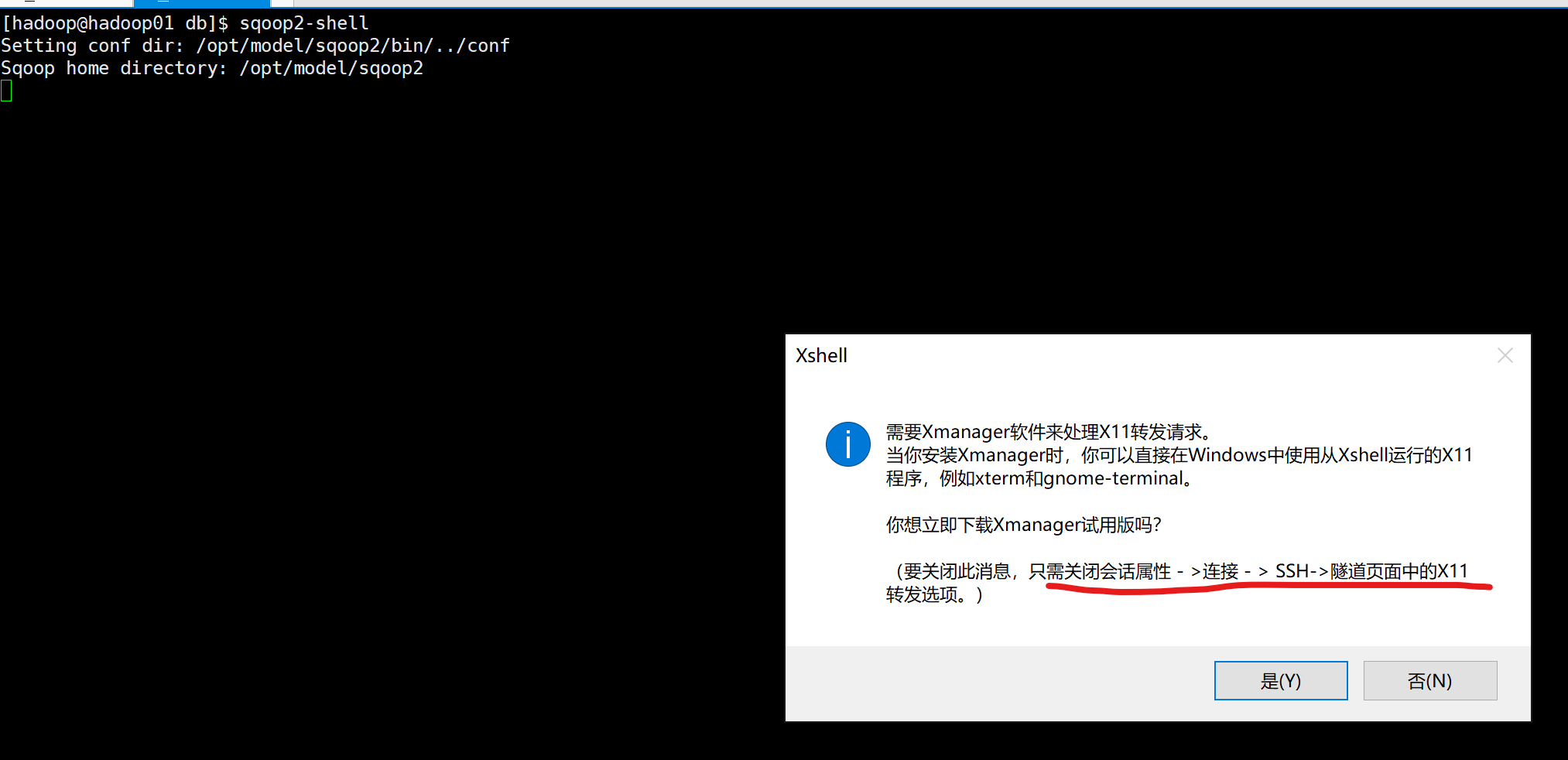

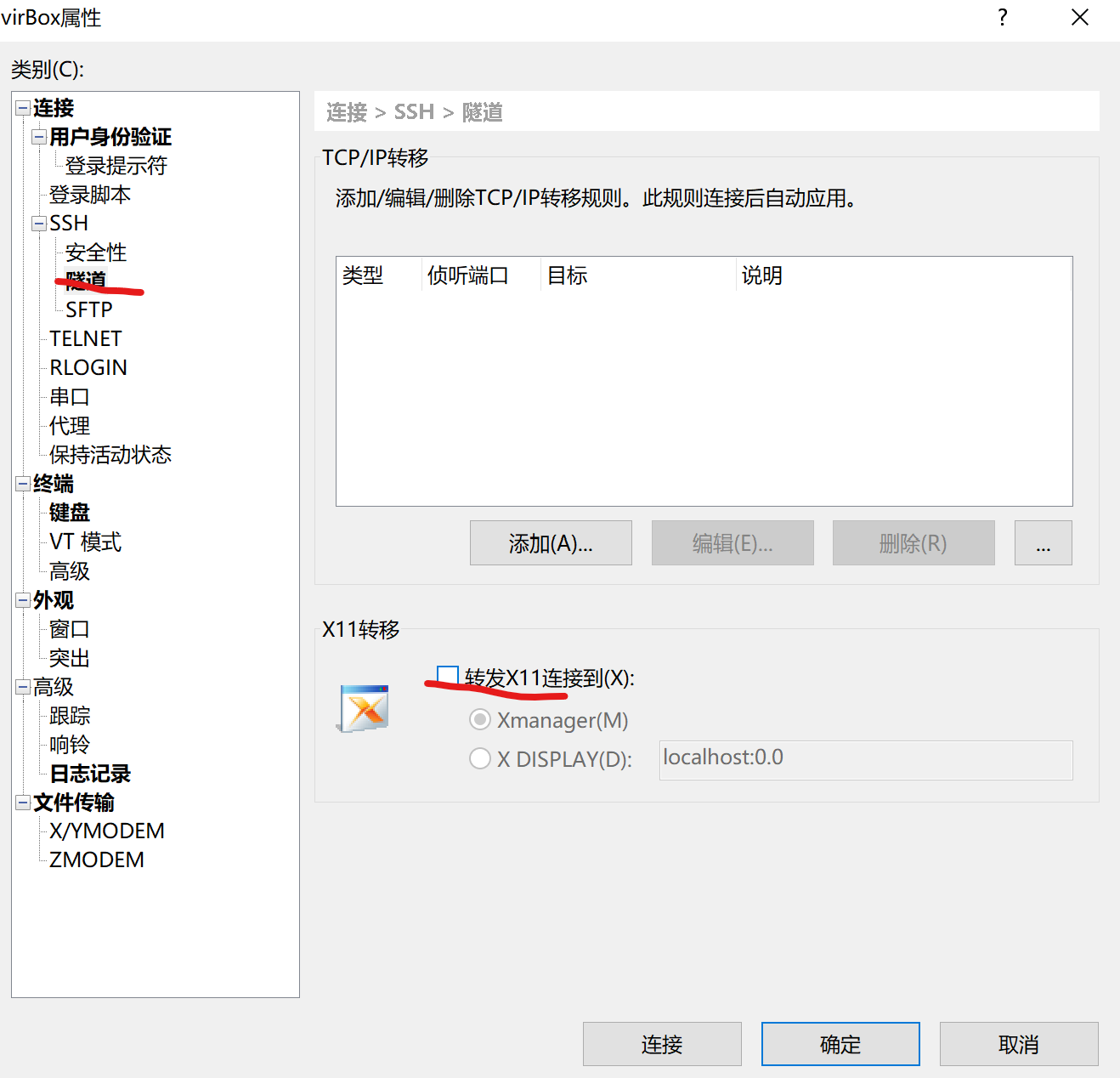

启动时,回出现这个错误,根据提示解决就行

[hadoop@hadoop01 ~]$ sqoop2-shell #1. 开启客户端

Setting conf dir: /opt/model/sqoop2/bin/../conf

Sqoop home directory: /opt/model/sqoop2

Sqoop Shell: Type 'help' or '\h' for help.

sqoop:000> set server --host hadoop01 --port 12000 #2.每次都必须设置服务器连接信息

Server is set successfully

sqoop:000> show server -all #查看服务器的配置信息

Server host: hadoop01

Server port: 12000

Server webapp: sqoop

sqoop:000> show connector #查看连接器

0 [main] WARN org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

+------------------------+---------+------------------------------------------------------------+----------------------+

| Name | Version | Class | Supported Directions |

+------------------------+---------+------------------------------------------------------------+----------------------+

#generic-jdbc-connector是sqoop和关系型数据库连接的驱动

| generic-jdbc-connector | 1.99.7 | org.apache.sqoop.connector.jdbc.GenericJdbcConnector | FROM/TO |

| kite-connector | 1.99.7 | org.apache.sqoop.connector.kite.KiteConnector | FROM/TO |

| oracle-jdbc-connector | 1.99.7 | org.apache.sqoop.connector.jdbc.oracle.OracleJdbcConnector | FROM/TO |

| ftp-connector | 1.99.7 | org.apache.sqoop.connector.ftp.FtpConnector | TO # hdfs-connector是sqoop和hdfs连接的驱动 |

| hdfs-connector | 1.99.7 | org.apache.sqoop.connector.hdfs.HdfsConnector | FROM/TO |

| kafka-connector | 1.99.7 | org.apache.sqoop.connector.kafka.KafkaConnector | TO |

| sftp-connector | 1.99.7 | org.apache.sqoop.connector.sftp.SftpConnector | TO |

+------------------------+---------+------------------------------------------------------------+----------------------+

sqoop:000> show link

+------+----------------+---------+

| Name | Connector Name | Enabled |

+------+----------------+---------+

+------+----------------+---------+

sqoop:000> show job

+----+------+----------------+--------------+---------+

| Id | Name | From Connector | To Connector | Enabled |

+----+------+----------------+--------------+---------+

+----+------+----------------+--------------+---------+

sqoop:000>

3.Sqoop数据迁移

3.1依赖的服务

- hdfs服务

- yarn服务

- sqoop2-server服务

- mysqld服务: sudo systemctl status mysqld

3.2 迁移HDFS数据到MySQL

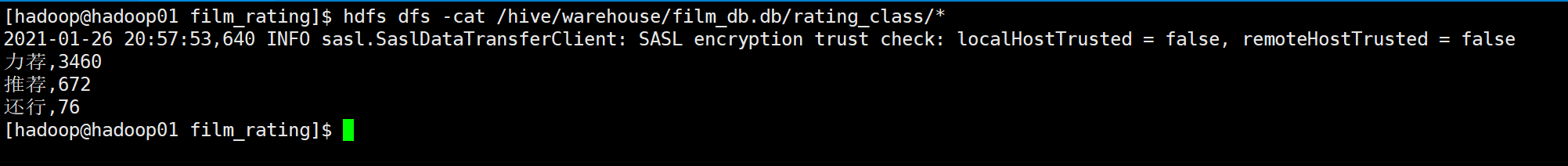

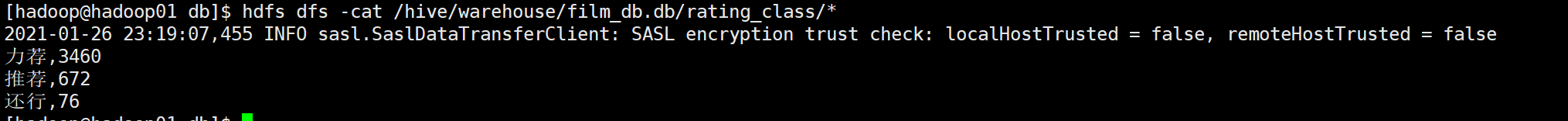

3.2.1 功能需求

- hive数据仓库(film_db),rating_class(文件)

- 将/hive/warehouse/film_db.db/rating_class/000000_0 文件 迁移到 MySQL数据库表中

3.2.2 在MySQL中创建rating_class表

[hadoop@hadoop01 data]$ mysql -uroot -p

数据密码: ROOTroot_1

mysql> use world; --1.引用该数据库

mysql> create table rating_class( --2.创建空表结构(没有数据)

-> rating_score varchar(20),

-> count int);

Query OK, 0 rows affected (0.38 sec)

mysql> select * from rating_class;

Empty set (0.00 sec)

3.2.3 Sqoop2-shell 创建job

3.2.3.1创建from_link(HDFS)

sqoop:000> create link -c hdfs-connector #1)命令

Creating link for connector with name hdfs-connector

Please fill following values to create new link object

Name: link_from_hdfs_rating_class #2)给link设置名字

HDFS cluster

URI: hdfs://hadoop01:9000 #3)hdfs集群文件的访问根路径

Conf directory: /opt/model/hadoop-3.2.1/etc/hadoop #4)hadoop本地的配置目录路径

Additional configs::

There are currently 0 values in the map:

entry#

New link was successfully created with validation status OK and name

link_from_hdfs_rating_class

【检查link创建列表】

sqoop:000> show link

+-----------------------------+----------------+---------+

| Name | Connector Name | Enabled |

+-----------------------------+----------------+---------+

| link_from_hdfs_rating_class | hdfs-connector | true |

+-----------------------------+----------------+---------+

3.2.3.2 创建to_link(MySQL)

sqoop:000> create link -c generic-jdbc-connector #1)命令 生成一个link 使用内置generic-jdbc-connector驱动

Creating link for connector with name generic-jdbc-connector

Please fill following values to create new link object

Name: link_to_mysql_rating_class #2)link名字

Database connection

Driver class: com.mysql.cj.jdbc.Driver #3)mysql8的驱动字符串

Connection String: jdbc:mysql://hadoop01:3306/world?serverTimezone=Asia/Shanghai #4)url 导入本机hadoop01的数据库

Username: root

Password: ********** #6)密码ROOTroot_1

Fetch Size:

Connection Properties:

There are currently 0 values in the map:

entry#

SQL Dialect

Identifier enclose: #7)注意:必须输入“1”个空格

New link was successfully created with validation status OK and name link_to_mysql_rating_class

sqoop:000> show link

+-----------------------------+------------------------+---------+

| Name | Connector Name | Enabled |

+-----------------------------+------------------------+---------+

| link_to_mysql_rating_class | generic-jdbc-connector | true |

| link_from_hdfs_rating_class | hdfs-connector | true |

3.2.33 创建job

sqoop:000> create job -f link_from_hdfs_rating_class -t link_to_mysql_rating_class #1)命令

Creating job for links with from name link_from_hdfs_rating_class and to name link_to_mysql_rating_class

Please fill following values to create new job object

Name: job_hdfs2mysql_rating_class #2)job的名字

Input configuration

Input directory: /hive/warehouse/film_db.db/rating_class #3)hdfs文件所在的目录位置

Override null value:

Null value:

Incremental import

Incremental type: #自增方式

0 : NONE

1 : NEW_FILES

Choose: 0 #4)输入0

Last imported date: #5)直接Enter

Database target

Schema name: world #6)输入mysql的数据库名

Table name: rating_class #7) 数据表的名字

Column names:

There are currently 0 values in the list:

element#

Staging table:

Clear stage table:

Throttling resources

Extractors:

Loaders:

Classpath configuration

Extra mapper jars:

There are currently 0 values in the list:

element#

New job was successfully created with validation status OK and name job_hdfs2mysql_rating_class

【查看效果】

sqoop:000> show job

:couple_with_heart: 怎么关闭连接?

修改连接

update link -n link_name

常用命令sqoop.apache.org/docs/1.99.7…

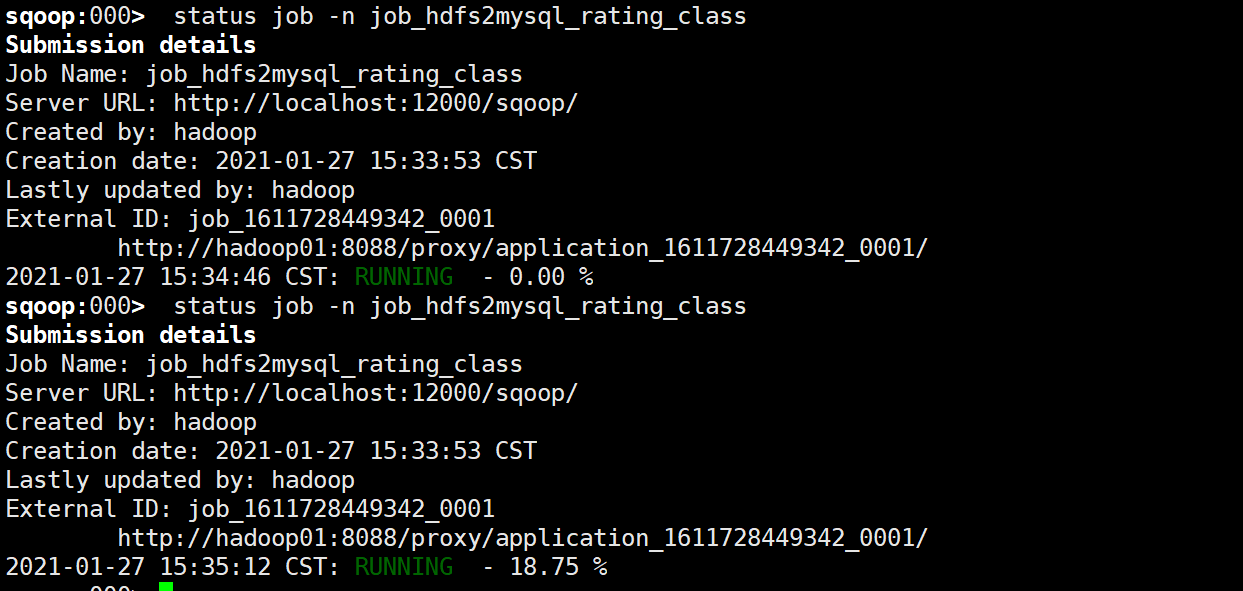

3.2.4 启动job

sqoop:000> start job -n job_hdfs2mysql_rating_class

Submission details

Job Name: job_hdfs2mysql_rating_class

Server URL: http://localhost:12000/sqoop/

Created by: hadoop

Creation date: 2021-01-27 15:33:53 CST

Lastly updated by: hadoop

External ID: job_1611728449342_0001

http://hadoop01:8088/proxy/application_1611728449342_0001/

2021-01-27 15:33:53 CST: BOOTING - Progress is not available

3.2.5 查看Job执行的状态

sqoop:000> status job -n job_hdfs2mysql_rating_class

查看mysql效果

结论:

现在已经完成了分析完的数据导出到mysql里,接下来就可以用springBoot访问mysql,然后再前台界面进行渲染