前言

Sqoop’s import tool’s main function is to upload your data into files in HDFS. If you have a Hive metastore associated with your HDFS cluster, Sqoop can also import the data into Hive by generating and executing a CREATE TABLE statement to define the data’s layout in Hive. Importing data into Hive is as simple as adding the --hive-import option to your Sqoop command line.

Sqoop的导入工具的主要功能是将您的数据上传到HDFS中的文件中。如果您拥有与HDFS群集关联的Hive元存储,Sqoop还可以通过生成并执行CREATE TABLE语句在Hive中定义数据的布局,将数据导入Hive。将数据导入Hive在Sqoop命令行中添加--hive-import选项。查询导入语句时,不必翻看官方文档,只需在shell中执行sqoop help命令即可。

如果你对SQOOP尚未了解,请移步至Apache SQOOP这篇文章。

导入过程中出现的Error你也可以在文章中寻找到解决方式

表结构与数据源

- Mysql表结构

create table mysql_student(

id int,

name varchar(10),

age int,

sex varchar(5),

create_date DATE,

primary key (id)

);

- Mysql插入数据

insert into mysql_student values

(1,'xinxing',22,'boy','2020-06-12'),

(2,'xiaohong',26,'girl','2020-06-17'),

(3,'xiaoming',22,'boy','2020-06-13'),

(4,'shishi',17,'girl','2020-05-12'),

(5,'xiaoli',30,'boy','2020-07-12');

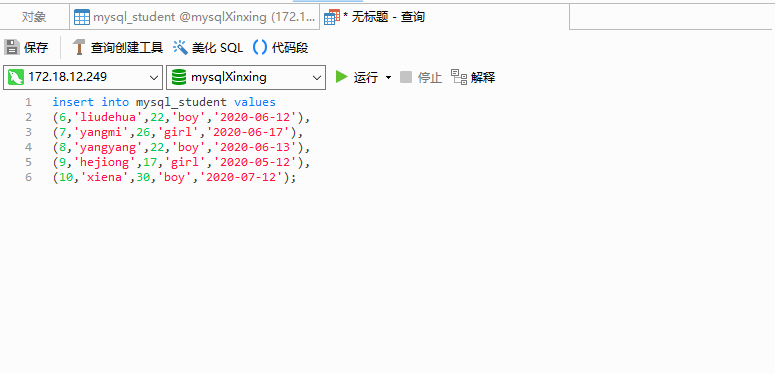

- Mysql更新数据源

insert into mysql_student values

(6,'liudehua',22,'boy','2020-06-12'),

(7,'yangmi',26,'girl','2020-06-17'),

(8,'yangyang',22,'boy','2020-06-13'),

(9,'hejiong',17,'girl','2020-05-12'),

(10,'xiena',30,'boy','2020-07-12');

- Hive表结构

create table hive_student(

id int,

name string,

age int,

sex string,

create_date string

)

comment '全量导入学生表'

row format delimited fields terminated by ',';

抽取MYSQL数据到(HDFS)Hive单分区中

全量抽取

sqoop import \

--connect jdbc:mysql://xinxingdata001:3306/mysqlXinxing \

--username root \

--password 123456 \

--hive-database xinxing \

--table mysql_student \

--hive-table hive_student \

--hive-import \

--delete-target-dir \

--fields-terminated-by ',' \

--mapreduce-job-name Xinxing00001Job;

查询hive_student表

hive (xinxing)> select * from hive_student;

OK

hive_student.id hive_student.name hive_student.age hive_student.sex hive_student.create_date

1 xinxing 22 boy 2020-06-12

2 xiaohong 26 girl 2020-06-17

3 xiaoming 22 boy 2020-06-13

4 shishi 17 girl 2020-05-12

5 xiaoli 30 boy 2020-07-12

Time taken: 0.04 seconds, Fetched: 5 row(s)

增量抽取

–check-column用来指定一些列,这些列在导入时用来检查做决定数据是否要被作为增量数据。注意:字符类型不能作为增量标识字段–incremental用来指定增量导入的模式(Mode),append和lastmodified–last-value指定上一次导入中检查列指定字段最大值

sqoop import \

--connect jdbc:mysql://xinxingdata001:3306/mysqlXinxing \

--username root \

--password 123456 \

--hive-database xinxing \

--table mysql_student \

--hive-table hive_student \

--hive-import \

--fields-terminated-by ',' \

--check-column id \

--incremental append \

--last-value 5 \

--mapreduce-job-name Xinxing00001Job \

查询hive_student表

hive (xinxing)> select * from hive_student;

OK

hive_student.id hive_student.name hive_student.age hive_student.sex hive_student.create_date

1 xinxing 22 boy 2020-06-12

6 liudehua 22 boy 2020-06-12

2 xiaohong 26 girl 2020-06-17

7 yangmi 26 girl 2020-06-17

3 xiaoming 22 boy 2020-06-13

8 yangyang 22 boy 2020-06-13

4 shishi 17 girl 2020-05-12

9 hejiong 17 girl 2020-05-12

5 xiaoli 30 boy 2020-07-12

10 xiena 30 boy 2020-07-12

Time taken: 0.031 seconds, Fetched: 10 row(s)

增量抽取十有问题所在的,如果第二条数据

xiaohong同学做了变性手术,性别更改为了boy,增量是无法实现数据更新的。后面会引伸出拉链表来解决此类问题。

抽取MYSQL数据到(HDFS)Hive多分区中

全量抽取

创建hive分区表结构

create table hive_Partition_student(

id int,

name string,

age int,

sex string,

create_date string

)

comment '全量导入学生表'

partitioned by (day string)

row format delimited fields terminated by ',';

抽取

sqoop import \

--connect jdbc:mysql://xinxingdata001:3306/mysqlXinxing \

--username root \

--password 123456 \

--hive-database xinxing \

--table mysql_student \

--hive-table hive_Partition_student \

--hive-import \

--delete-target-dir \

--fields-terminated-by ',' \

--hive-partition-key day \

--hive-partition-value 12 \

--mapreduce-job-name Xinxing00001Job;

查询hive_Partition_student表

hive (xinxing)> select * from hive_Partition_student where day=12;

OK

hive_partition_student.id hive_partition_student.name hive_partition_student.age hive_partition_student.sex hive_partition_student.create_date hive_partition_student.day

1 xinxing 22 boy 2020-06-12 12

2 xiaohong 26 girl 2020-06-17 12

3 xiaoming 22 boy 2020-06-13 12

4 shishi 17 girl 2020-05-12 12

增量抽取

sqoop import \

--connect jdbc:mysql://xinxingdata001:3306/mysqlXinxing \

--username root \

--password 123456 \

--hive-import \

--hive-database xinxing \

--table mysql_student \

--hive-table hive_Partition_student \

--fields-terminated-by ',' \

--check-column id \

--incremental append \

--last-value 4 \

--hive-partition-key day \

--hive-partition-value 13 \

--mapreduce-job-name Xinxing00001Job;

查询hive_Partition_student表

hive (xinxing)> select * from hive_partition_student;

OK

hive_partition_student.id hive_partition_student.name hive_partition_student.age hive_partition_student.sex hive_partition_student.create_date hive_partition_student.day

1 xinxing 22 boy 2020-06-12 12

2 xiaohong 26 girl 2020-06-17 12

3 xiaoming 22 boy 2020-06-13 12

4 shishi 17 girl 2020-05-12 12

6 liudehua 22 boy 2020-06-12 13

7 yangmi 26 girl 2020-06-17 13

8 yangyang 22 boy 2020-06-13 13

9 hejiong 17 girl 2020-05-12 13

10 xiena 30 boy 2020-07-12 13

Time taken: 0.03 seconds, Fetched: 9 row(s)

Hive增量更新数据

创建Hive表结构

create table hive_student(

id int,

name string,

age int,

sex string,

create_date string

)

comment '全量导入学生表'

row format delimited fields terminated by ',';

抽取全量数据

sqoop import \

--connect jdbc:mysql://xinxingdata001:3306/mysqlXinxing \

--username root \

--password 123456 \

--hive-database xinxing \

--table mysql_student \

--hive-table hive_student \

--hive-import \

--delete-target-dir \

--fields-terminated-by ',' \

--mapreduce-job-name Xinxing00001Job;

mysql插入新数据并update之前存在的数据字段值

把更新之后的Mysql_student导入到Hive_ods层中

sqoop import \

--connect jdbc:mysql://xinxingdata001:3306/mysqlXinxing \

--username root \

--password 123456 \

--hive-database xinxing \

--table mysql_student \

--hive-table hive_ods_student \

--hive-import \

--delete-target-dir \

--fields-terminated-by ',' \

--mapreduce-job-name Xinxing00001Job;

将两个表进行left join,将hive_ods_student表中发生修改的数据更新到hive_ods_student表中

insert overwrite table hive_student

select

a.*

from

(

select * from hive_student

) as a

left join

(

select * from hive_ods_student

) as b

on a.id = b.id

where b.id is null

union all

select

*

from hive_ods_student