Preface

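The previous article gave a brief introduction to AVFoundation. This one looks at how to use VideoToolbox and AudioToolbox to encode and decode audio and video. The video stream format covered is H264 and the audio stream format is AAC; if you are not familiar with these two formats, click here for a quick introduction.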

VideoToolbox

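VideoToolbox is a low-level framework that gives direct access to hardware encoders and decoders. It provides compression and decompression services for video, as well as format conversion for images stored in CoreVideo pixel buffers.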

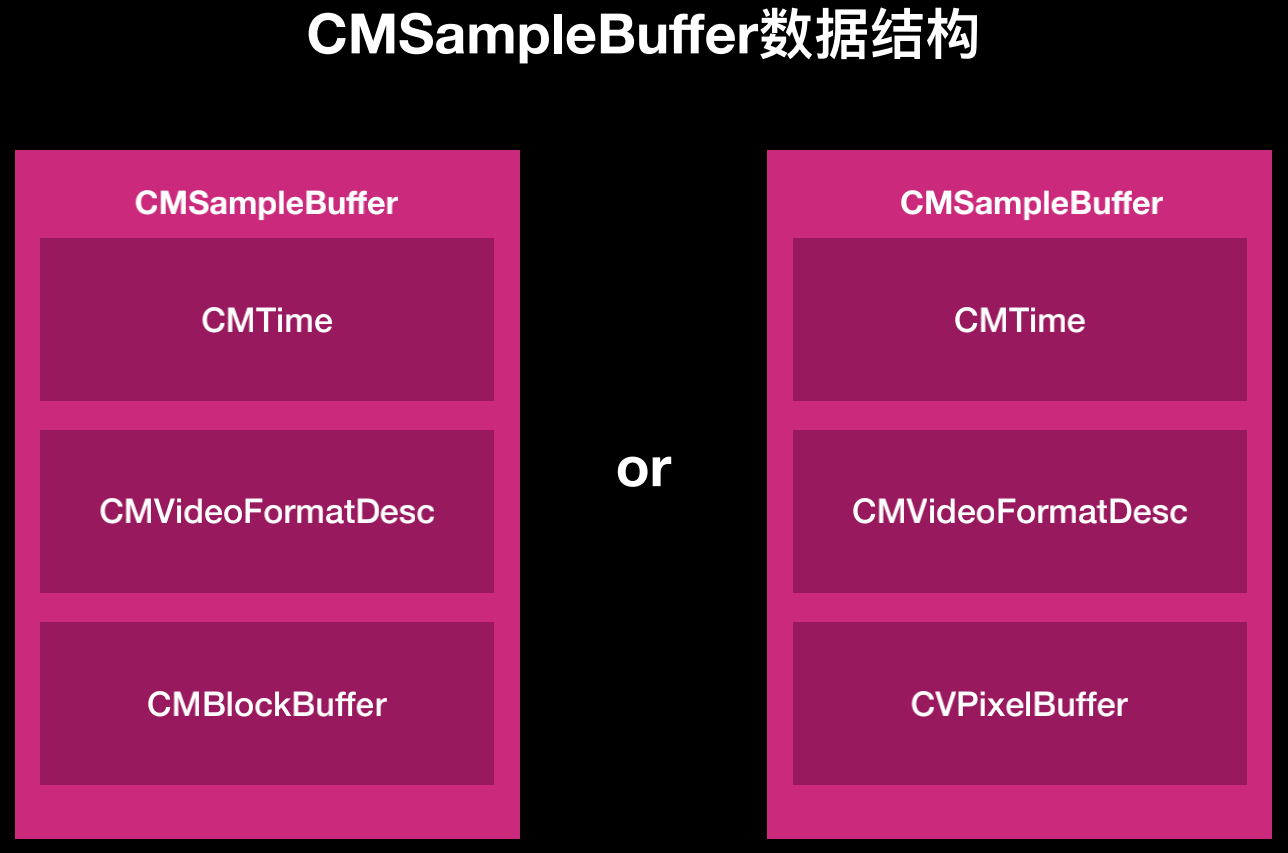

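First, let's look at the CMSampleBuffer data structure. In the video pipeline it comes in the two forms shown below; the difference is that one carries a CMBlockBuffer and the other a CVPixelBuffer.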

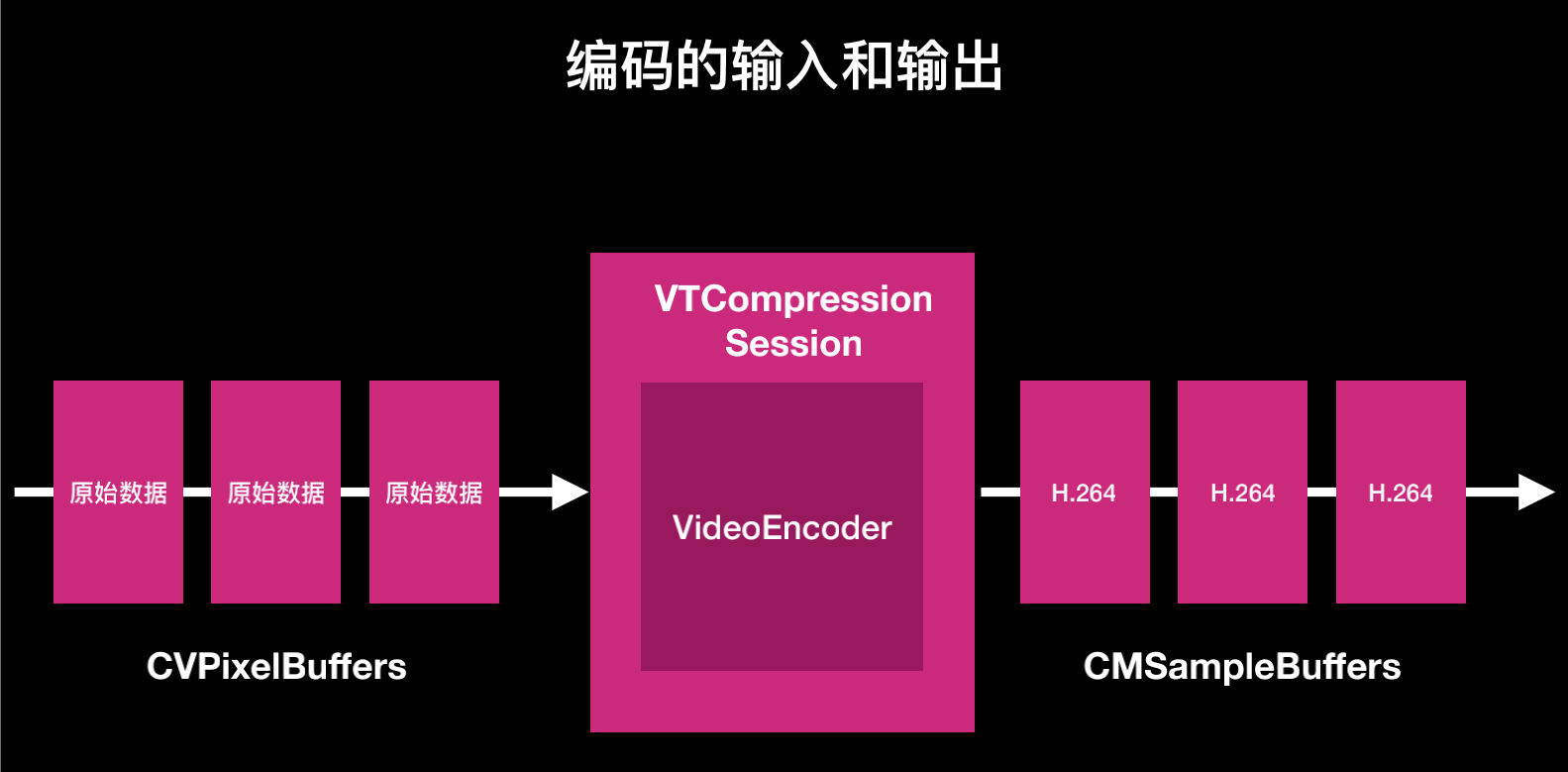

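A CVPixelBuffer holds raw, unencoded image data, while a CMBlockBuffer holds encoded H264 data; in the encoder's output each NALU is prefixed with its big-endian length rather than a start code, as the encode callback below shows. Let's implement this flow in code.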

Encoding

Initialization

#pragma mark - Encoding

- (void)encodeInit{

OSStatus status = VTCompressionSessionCreate(kCFAllocatorDefault, (uint32_t)_width, (uint32_t)_height, kCMVideoCodecType_H264, NULL, NULL, NULL, encodeCallBack, (__bridge void * _Nullable)(self), &_encoderSession);

if (status != noErr) {

return;

}

// Enable real-time encoding

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_RealTime, kCFBooleanTrue);

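// Baseline profile: it has no B-frames

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_ProfileLevel, kVTProfileLevel_H264_Baseline_AutoLevel);

// Also disallow frame reordering explicitly, so no B-frames are produced

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_AllowFrameReordering, kCFBooleanFalse);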

// Average bit rate in bits per second (height * 1000)

CFNumberRef bitRate = (__bridge CFNumberRef)(@(_height*1000));

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_AverageBitRate, bitRate);

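// Hard data-rate cap: DataRateLimits takes [bytes, seconds] pairs, so this allows
// at most 4x the average bit rate (converted from bits to bytes) in any 1-second window

CFArrayRef limits = (__bridge CFArrayRef)(@[@(_height*1000*4/8), @1]);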

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_DataRateLimits, limits);

// Expected frame rate (FPS)

CFNumberRef fps = (__bridge CFNumberRef)(@(25));

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_ExpectedFrameRate, fps);

// Maximum keyframe (I-frame) interval: 50 frames, i.e. 2 seconds at 25 fps

CFNumberRef maxKeyFrameInterval = (__bridge CFNumberRef)(@(25*2));

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_MaxKeyFrameInterval, maxKeyFrameInterval);

// Prepare to encode

status = VTCompressionSessionPrepareToEncodeFrames(_encoderSession);

}
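For reference, VideoToolbox documents kVTCompressionPropertyKey_DataRateLimits as alternating byte counts and durations in seconds rather than a min/max bit-rate pair, so a cap expressed in bits per second has to be converted to bytes per time window. The plain-C sketch below (C compiles as Objective-C) does that arithmetic, assuming the `height * 1000` average used in encodeInit and a hypothetical cap of four times that average over one second; the function names are illustrative only.

```c
#include <assert.h>
#include <stdint.h>

/* Average bit rate used in encodeInit: height * 1000 bits per second. */
static uint64_t average_bit_rate_bps(uint32_t height) {
    return (uint64_t)height * 1000u;
}

/* Byte budget for one DataRateLimits entry: `multiplier` times the
 * average bit rate, sustained over `seconds`, converted from bits to bytes. */
static uint64_t data_rate_limit_bytes(uint32_t height, uint32_t multiplier,
                                      uint32_t seconds) {
    uint64_t bits = average_bit_rate_bps(height) * multiplier * seconds;
    return bits / 8u; /* DataRateLimits counts bytes, not bits */
}
```

For the 1080x1920 preset this gives 960,000 bytes per 1-second window; in Objective-C such a pair would be written as `@[@(bytes), @(seconds)]`.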

VTCompressionSessionCreate parameters

/*

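* allocator: memory allocator for the session; NULL uses the default allocator

* width, height: frame dimensions in pixels; the encoder may change them if it does not support these values

* codecType: codec type, an enum value (e.g. kCMVideoCodecType_H264)

* encoderSpecification: a specific encoder that must be used; NULL lets VideoToolbox choose

* sourceImageBufferAttributes: required attributes for source pixel buffers, used to create a pixel buffer pool for source frames. If set, VideoToolbox creates a pool; pass NULL if you do not need one. Using pixel buffers not allocated by VideoToolbox may increase the chance that image data has to be copied.

* compressedDataAllocator: allocator for the compressed data; NULL uses the default allocator

* outputCallback: callback that receives the compressed frames. It may be called asynchronously, on a different thread from the one that calls VTCompressionSessionEncodeFrame. Pass NULL only if you encode frames with VTCompressionSessionEncodeFrameWithOutputHandler.

* outputCallbackRefCon: a custom pointer passed to the callback; we usually pass self so the callback can reach this class's methods and properties

* compressionSessionOut: receives the new compression session (pass a pointer to the session variable)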

*/

VT_EXPORT OSStatus

VTCompressionSessionCreate(

CM_NULLABLE CFAllocatorRef allocator,

int32_t width,

int32_t height,

CMVideoCodecType codecType,

CM_NULLABLE CFDictionaryRef encoderSpecification,

CM_NULLABLE CFDictionaryRef sourceImageBufferAttributes,

CM_NULLABLE CFAllocatorRef compressedDataAllocator,

CM_NULLABLE VTCompressionOutputCallback outputCallback,

void * CM_NULLABLE outputCallbackRefCon,

CM_RETURNS_RETAINED_PARAMETER CM_NULLABLE VTCompressionSessionRef * CM_NONNULL compressionSessionOut) API_AVAILABLE(macosx(10.8), ios(8.0), tvos(10.2));

Feeding frames to the encoder

- (void)encodeSampleBuffer:(CMSampleBufferRef)sampleBuffer{

if (!self.encoderSession) {

[self encodeInit];

}

CFRetain(sampleBuffer);

dispatch_async(self.encoderQueue, ^{

// Get the raw (unencoded) pixel buffer

CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);

// Presentation timestamp: one tick per frame at 25 fps, matching the expected frame rate

CMTime TimeStamp = CMTimeMake(self->frameID, 25);

self->frameID++;

// Frame duration (kCMTimeInvalid = not specified)

CMTime duration = kCMTimeInvalid;

VTEncodeInfoFlags infoFlagsOut;

// Encode

OSStatus status = VTCompressionSessionEncodeFrame(self.encoderSession, imageBuffer, TimeStamp, duration, NULL, NULL, &infoFlagsOut);

if (status != noErr) {

NSLog(@"VTCompressionSessionEncodeFrame failed, status = %d", (int)status);

}

CFRelease(sampleBuffer);

});

}
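The timestamp passed to VTCompressionSessionEncodeFrame tells the encoder how far apart frames are, which its rate control relies on. The sketch below models CMTime as a plain value/timescale pair (the `pts_t` struct is a hypothetical stand-in, not the CoreMedia type) and compares the spacing between consecutive frame IDs for a timescale of 1000 and for 25, the capture rate configured in this demo.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for CMTime: seconds = value / timescale. */
typedef struct {
    int64_t value;
    int32_t timescale;
} pts_t;

/* Mirrors CMTimeMake(frameID, timescale) as used in encodeSampleBuffer:. */
static pts_t pts_for_frame(int64_t frame_id, int32_t timescale) {
    pts_t t = { frame_id, timescale };
    return t;
}

/* Converts a timestamp to microseconds with integer arithmetic. */
static int64_t pts_to_us(pts_t t) {
    return t.value * 1000000 / t.timescale;
}

/* Spacing between two consecutive frame IDs, in microseconds. */
static int64_t frame_spacing_us(int32_t timescale) {
    return pts_to_us(pts_for_frame(1, timescale)) -
           pts_to_us(pts_for_frame(0, timescale));
}
```

A timescale of 1000 makes 25 fps capture look like 1000 fps (1 ms apart); a timescale matching the capture rate, or the capture buffer's own time from CMSampleBufferGetPresentationTimeStamp, keeps the spacing at 40 ms.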

Encode output callback

void encodeCallBack (

void * CM_NULLABLE outputCallbackRefCon,

void * CM_NULLABLE sourceFrameRefCon,

OSStatus status,

VTEncodeInfoFlags infoFlags,

CM_NULLABLE CMSampleBufferRef sampleBuffer ){

if (status != noErr) {

NSLog(@"encodeVideoCallBack: encode error, status = %d",(int)status);

return;

}

if (!CMSampleBufferDataIsReady(sampleBuffer)) {

NSLog(@"encodeVideoCallBack: data is not ready");

return;

}

Demo2ViewController *VC = (__bridge Demo2ViewController *)(outputCallbackRefCon);

// Check whether this is a keyframe

BOOL isKeyFrame = NO;

CFArrayRef attachArray = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, true);

isKeyFrame = !CFDictionaryContainsKey(CFArrayGetValueAtIndex(attachArray, 0), kCMSampleAttachmentKey_NotSync); // note the negation: a sample without the NotSync key is a keyframe

if (isKeyFrame && !VC->hasSpsPps) {

size_t spsSize, spsCount;

size_t ppsSize, ppsCount;

const uint8_t *spsData, *ppsData;

// Get the format description, which carries the SPS and PPS

CMFormatDescriptionRef formatDesc = CMSampleBufferGetFormatDescription(sampleBuffer);

OSStatus status1 = CMVideoFormatDescriptionGetH264ParameterSetAtIndex(formatDesc, 0, &spsData, &spsSize, &spsCount, 0);

OSStatus status2 = CMVideoFormatDescriptionGetH264ParameterSetAtIndex(formatDesc, 1, &ppsData, &ppsSize, &ppsCount, 0);

if (status1 == noErr && status2 == noErr) {

VC->hasSpsPps = true;

//sps data

VC->sps = [NSMutableData dataWithCapacity:4 + spsSize];

[VC->sps appendBytes:StartCode length:4];

[VC->sps appendBytes:spsData length:spsSize];

//pps data

VC->pps = [NSMutableData dataWithCapacity:4 + ppsSize];

[VC->pps appendBytes:StartCode length:4];

[VC->pps appendBytes:ppsData length:ppsSize];

[VC decodeH264Data:VC->sps];

[VC decodeH264Data:VC->pps];

}

}

// Get the NALU data

size_t lengthAtOffset, totalLength;

char *dataPoint;

// Get a pointer to the encoded data in dataPoint (no copy is made)

CMBlockBufferRef blockBuffer = CMSampleBufferGetDataBuffer(sampleBuffer);

OSStatus error = CMBlockBufferGetDataPointer(blockBuffer, 0, &lengthAtOffset, &totalLength, &dataPoint);

if (error != kCMBlockBufferNoErr) {

NSLog(@"VideoEncodeCallback: get datapoint failed, status = %d", (int)error);

return;

}

// Walk through the NALUs in the buffer

size_t offet = 0;

// The first 4 bytes of each returned NALU are not a 0001 start code but the NALU length in big-endian byte order (AVCC format)

const int lengthInfoSize = 4;

while (offet < totalLength - lengthInfoSize) {

uint32_t naluLength = 0;

// Read the NALU length

memcpy(&naluLength, dataPoint + offet, lengthInfoSize);

// Convert from big-endian to host byte order

naluLength = CFSwapInt32BigToHost(naluLength);

// Build the encoded NALU with a 4-byte start code in front (Annex B)

NSMutableData *data = [NSMutableData dataWithCapacity:4 + naluLength];

[data appendBytes:StartCode length:4];

[data appendBytes:dataPoint + offet + lengthInfoSize length:naluLength];

dispatch_async(VC.encoderCallbackQueue, ^{

[VC decodeH264Data:data];

});

// Advance to the next NALU

offet += lengthInfoSize + naluLength;

}

}
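The NALU loop above turns VideoToolbox's length-prefixed (AVCC) output into start-code-prefixed (Annex B) NALUs. The same conversion can be isolated in plain C and tested without a device; the sketch below is a minimal version with bounds checks added. The names are hypothetical, and only 4-byte length fields are handled, matching lengthInfoSize.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

static const uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};

/* Reads a 4-byte big-endian length (what memcpy + CFSwapInt32BigToHost does). */
static uint32_t read_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Converts AVCC data (4-byte length + NALU, repeated) into Annex B
 * (start code + NALU, repeated). Returns the number of bytes written,
 * or 0 if the input is malformed or `out` is too small. */
static size_t avcc_to_annexb(const uint8_t *in, size_t in_len,
                             uint8_t *out, size_t out_cap) {
    size_t offset = 0, written = 0;
    while (offset + 4 <= in_len) {
        uint32_t nalu_len = read_be32(in + offset);
        if (nalu_len > in_len - offset - 4) return 0;   /* truncated NALU */
        if (written + 4 + nalu_len > out_cap) return 0; /* output too small */
        memcpy(out + written, kStartCode, 4);
        memcpy(out + written + 4, in + offset + 4, nalu_len);
        written += 4 + nalu_len;
        offset += 4 + nalu_len;
    }
    return offset == in_len ? written : 0; /* reject trailing garbage */
}
```

Since both formats use a 4-byte prefix, the output is exactly as long as the input; only the prefixes change.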

VTCompressionOutputCallback parameters

/*

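* outputCallbackRefCon: the callback's reference value (the outputCallbackRefCon passed to VTCompressionSessionCreate).

* sourceFrameRefCon: the frame's reference value, copied from the sourceFrameRefCon argument of VTCompressionSessionEncodeFrame.

* status: noErr if compression succeeded; otherwise an error code.

* infoFlags: information about the encode operation. kVTEncodeInfo_Asynchronous is set if the encode ran asynchronously; kVTEncodeInfo_FrameDropped is set if the frame was dropped.

* sampleBuffer: the compressed frame if compression succeeded and the frame was not dropped; otherwise NULL.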

*/

typedef void (*VTCompressionOutputCallback)(

void *outputCallbackRefCon,

void *sourceFrameRefCon,

OSStatus status,

VTEncodeInfoFlags infoFlags,

CMSampleBufferRef sampleBuffer

);

Decoding

Initialization

- (void)decodeVideoInit {

const uint8_t * const parameterSetPointers[2] = {_sps, _pps};

const size_t parameterSetSizes[2] = {_spsSize, _ppsSize};

int naluHeaderLen = 4;

// Build the format description from the SPS and PPS

OSStatus status = CMVideoFormatDescriptionCreateFromH264ParameterSets(kCFAllocatorDefault, 2, parameterSetPointers, parameterSetSizes, naluHeaderLen, &_decodeDesc);

if (status != noErr) {

NSLog(@"Video hard DecodeSession create H264ParameterSets(sps, pps) failed status= %d", (int)status);

return;

}

// Attributes of the decoded output pixel buffers

NSDictionary *destinationPixBufferAttrs =

@{

(id)kCVPixelBufferPixelFormatTypeKey: [NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarFullRange], // on iOS this is NV12 (UVUV interleaved), not NV21 (VUVU)

(id)kCVPixelBufferWidthKey: [NSNumber numberWithInteger:_width],

(id)kCVPixelBufferHeightKey: [NSNumber numberWithInteger:_height],

(id)kCVPixelBufferOpenGLCompatibilityKey: [NSNumber numberWithBool:true]

};

VTDecompressionOutputCallbackRecord callbackRecord;

callbackRecord.decompressionOutputCallback = videoDecompressionOutputCallback;

callbackRecord.decompressionOutputRefCon = (__bridge void * _Nullable)(self);

// Create the decompression session

status = VTDecompressionSessionCreate(kCFAllocatorDefault, _decodeDesc, NULL, (__bridge CFDictionaryRef _Nullable)(destinationPixBufferAttrs), &callbackRecord, &_decoderSession);

if (status != noErr) {

NSLog(@"Video hard DecodeSession create failed status= %d", (int)status);

return;

}

// Enable real-time decoding

VTSessionSetProperty(_decoderSession, kVTDecompressionPropertyKey_RealTime, kCFBooleanTrue);

}
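The decoder is asked for kCVPixelFormatType_420YpCbCr8BiPlanarFullRange buffers, i.e. NV12: a full-resolution Y plane followed by a half-resolution plane of interleaved Cb/Cr pairs. As a rough sketch, the tight payload size of each plane can be computed as below. Real CVPixelBuffers may pad each row, so code that copies planes should use CVPixelBufferGetBytesPerRowOfPlane rather than these tight sizes; the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Tight byte sizes of the two planes of an 8-bit bi-planar 4:2:0 (NV12) image. */
typedef struct {
    size_t luma;   /* plane 0: one Y byte per pixel */
    size_t chroma; /* plane 1: one Cb,Cr byte pair per 2x2 pixel block */
} nv12_sizes_t;

static nv12_sizes_t nv12_plane_sizes(size_t width, size_t height) {
    nv12_sizes_t s;
    s.luma = width * height;
    /* Chroma is subsampled by 2 in each direction (rounded up for odd sizes),
     * and each chroma position stores two bytes: Cb then Cr. */
    s.chroma = ((width + 1) / 2) * ((height + 1) / 2) * 2;
    return s;
}
```

For the 1080x1920 preset the two planes add up to 1.5 bytes per pixel, the usual 4:2:0 ratio.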

CMVideoFormatDescriptionCreateFromH264ParameterSets parameters

/*

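* allocator: allocator; NULL or kCFAllocatorDefault for the default

* parameterSetCount: number of parameter sets (2 here: SPS and PPS)

* parameterSetPointers: pointers to the parameter sets

* parameterSetSizes: sizes of the parameter sets in bytes

* NALUnitHeaderLength: size in bytes of the length field that precedes each NALU in the sample data (it takes the place of the start code), here 4

* formatDescriptionOut: receives the format description used to create the decoder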

*/

CM_EXPORT

OSStatus CMVideoFormatDescriptionCreateFromH264ParameterSets(

CFAllocatorRef CM_NULLABLE allocator,

size_t parameterSetCount,

const uint8_t * CM_NONNULL const * CM_NONNULL parameterSetPointers,

const size_t * CM_NONNULL parameterSetSizes,

int NALUnitHeaderLength,

CM_RETURNS_RETAINED_PARAMETER CMFormatDescriptionRef CM_NULLABLE * CM_NONNULL formatDescriptionOut );
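The parameter sets passed here are raw SPS/PPS NALUs, with no start code or length prefix. In H.264 syntax the bytes right after an SPS's NAL header are profile_idc, the constraint flags and level_idc, so a quick sanity check (for instance, that the encoder really produced a Baseline stream, profile_idc 66) takes only a few lines. A sketch with hypothetical names:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t profile_idc; /* 66 = Baseline, 77 = Main, 100 = High */
    uint8_t constraints; /* constraint_set flags byte */
    uint8_t level_idc;   /* level x 10, e.g. 31 = level 3.1 */
} sps_info_t;

/* `sps` is a raw SPS NALU (NAL header byte first, no start code).
 * Returns 0 on success, -1 if the buffer is too short or not an SPS. */
static int parse_sps_header(const uint8_t *sps, size_t len, sps_info_t *out) {
    if (len < 4 || (sps[0] & 0x1F) != 7) return -1; /* nal_unit_type 7 = SPS */
    out->profile_idc = sps[1];
    out->constraints = sps[2];
    out->level_idc = sps[3];
    return 0;
}
```

Only these fixed-position leading bytes can be read this way; fields after level_idc are Exp-Golomb coded and need a bit reader.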

VTDecompressionOutputCallback parameters

/*

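* decompressionOutputRefCon: the callback's reference value (decompressionOutputRefCon from the callback record)

* sourceFrameRefCon: the frame's reference value (the sourceFrameRefCon passed to VTDecompressionSessionDecodeFrame)

* status: noErr if decompression succeeded, otherwise an error code

* infoFlags: flags indicating whether decoding ran synchronously or asynchronously, and whether the decoder dropped the frame

* imageBuffer: the decoded image, or NULL if decompression failed or the frame was dropped

* presentationTimeStamp: the frame's presentation timestamp

* presentationDuration: the frame's presentation duration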

*/

typedef void (*VTDecompressionOutputCallback)(

void * CM_NULLABLE decompressionOutputRefCon,

void * CM_NULLABLE sourceFrameRefCon,

OSStatus status,

VTDecodeInfoFlags infoFlags,

CM_NULLABLE CVImageBufferRef imageBuffer,

CMTime presentationTimeStamp,

CMTime presentationDuration );

VTDecompressionSessionCreate parameters

/*

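* allocator: allocator for the session; NULL uses the default allocator

* videoFormatDescription: describes the source video frames

* videoDecoderSpecification: a particular video decoder that must be used; NULL lets VideoToolbox choose

* destinationImageBufferAttributes: requirements for the output (decoded) pixel buffers

* outputCallback: the callback invoked with decompressed frames

* decompressionSessionOut: points to a variable that receives the new decompression session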

*/

VT_EXPORT OSStatus

VTDecompressionSessionCreate(

CM_NULLABLE CFAllocatorRef allocator,

CM_NONNULL CMVideoFormatDescriptionRef videoFormatDescription,

CM_NULLABLE CFDictionaryRef videoDecoderSpecification,

CM_NULLABLE CFDictionaryRef destinationImageBufferAttributes,

const VTDecompressionOutputCallbackRecord * CM_NULLABLE outputCallback,

CM_RETURNS_RETAINED_PARAMETER CM_NULLABLE VTDecompressionSessionRef * CM_NONNULL decompressionSessionOut) API_AVAILABLE(macosx(10.8), ios(8.0), tvos(10.2));

Feeding H264 data to the decoder

- (void)decodeH264Data:(NSData *)h264Data {

// Create the decoder only once both the SPS and the PPS have been received

if (!self.decoderSession && _sps && _pps) {

[self decodeVideoInit];

}

uint8_t *frame = (uint8_t *)h264Data.bytes;

uint32_t size = (uint32_t)h264Data.length;

int type = (frame[4] & 0x1F);

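// Replace the 4-byte start code with the NALU length as a 4-byte big-endian value (the byte reversal below assumes a little-endian host, as on iOS)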

uint32_t naluSize = size - 4;

uint8_t *pNaluSize = (uint8_t *)(&naluSize);

frame[0] = *(pNaluSize + 3);

frame[1] = *(pNaluSize + 2);

frame[2] = *(pNaluSize + 1);

frame[3] = *(pNaluSize);

switch (type) {

case 0x05: // IDR slice (keyframe)

[self decode:frame withSize:size];

break;

case 0x06:

//NSLog(@"SEI"); // supplemental enhancement information, ignored here

break;

case 0x07: //sps

_spsSize = naluSize;

free(_sps); // release any previously stored SPS

_sps = malloc(_spsSize);

memcpy(_sps, &frame[4], _spsSize);

break;

case 0x08: //pps

_ppsSize = naluSize;

free(_pps); // release any previously stored PPS

_pps = malloc(_ppsSize);

memcpy(_pps, &frame[4], _ppsSize);

break;

default: // other NALU types, e.g. non-IDR slices (1-4)

[self decode:frame withSize:size];

break;

}

}
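decodeH264Data: rewrites the 4-byte start code in place with the big-endian NALU length by reversing the bytes of a uint32_t through a pointer, which assumes a little-endian host (true of current iOS devices). A sketch of the same step using shifts, which produces big-endian bytes regardless of host byte order and also returns nal_unit_type (the low 5 bits of the NAL header) as the switch does; the function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Replaces the 4-byte start code at the front of `nalu` with the payload
 * length in big-endian order and returns nal_unit_type, or -1 if the
 * buffer holds no payload. */
static int annexb_to_avcc_inplace(uint8_t *nalu, size_t len) {
    if (len < 5) return -1;
    uint32_t payload = (uint32_t)(len - 4);
    nalu[0] = (uint8_t)(payload >> 24);
    nalu[1] = (uint8_t)(payload >> 16);
    nalu[2] = (uint8_t)(payload >> 8);
    nalu[3] = (uint8_t)payload;
    return nalu[4] & 0x1F; /* byte 4 is the NAL header, left untouched */
}
```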

// Decode one length-prefixed NALU

- (void)decode:(uint8_t *)frame withSize:(uint32_t)frameSize {

CVPixelBufferRef outputPixelBuffer = NULL;

CMBlockBufferRef blockBuffer = NULL;

CMBlockBufferFlags flag0 = 0;

OSStatus status = CMBlockBufferCreateWithMemoryBlock(kCFAllocatorDefault, frame, frameSize, kCFAllocatorNull, NULL, 0, frameSize, flag0, &blockBuffer);

if (status != kCMBlockBufferNoErr) {

NSLog(@"Video hard decode create blockBuffer error code=%d", (int)status);

return;

}

CMSampleBufferRef sampleBuffer = NULL;

const size_t sampleSizeArray[] = {frameSize};

status = CMSampleBufferCreateReady(kCFAllocatorDefault, blockBuffer, _decodeDesc, 1, 0, NULL, 1, sampleSizeArray, &sampleBuffer);

if (status != noErr || !sampleBuffer) {

NSLog(@"Video hard decode create sampleBuffer failed status=%d", (int)status);

CFRelease(blockBuffer);

return;

}

// Decode

// Hint that the decoder may use a low-power mode that decodes no faster than 1x real time

VTDecodeFrameFlags flag1 = kVTDecodeFrame_1xRealTimePlayback;

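// Output parameter: VideoToolbox reports here whether decoding ran asynchronously or the frame was dropped
// (asynchronous decoding is requested with kVTDecodeFrame_EnableAsynchronousDecompression in the decode flags)

VTDecodeInfoFlags infoFlag = 0;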

// Decode the sample

/*

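Parameter 1: the decompression session

Parameter 2: the source data, a CMSampleBuffer containing one or more video frames

Parameter 3: decode flags

Parameter 4: sourceFrameRefCon, handed to the callback; here the address of outputPixelBuffer, which the callback fills in

Parameter 5: receives information about the decode (synchronous/asynchronous, dropped frame)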

*/

status = VTDecompressionSessionDecodeFrame(_decoderSession, sampleBuffer, flag1, &outputPixelBuffer, &infoFlag);

if (status == kVTInvalidSessionErr) {

NSLog(@"Video hard decode InvalidSessionErr status =%d", (int)status);

} else if (status == kVTVideoDecoderBadDataErr) {

NSLog(@"Video hard decode BadData status =%d", (int)status);

} else if (status != noErr) {

NSLog(@"Video hard decode failed status =%d", (int)status);

}

CFRelease(sampleBuffer);

CFRelease(blockBuffer);

}
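decodeH264Data: expects exactly one NALU, with a 4-byte start code, per call. If the H264 comes from an Annex B file or network stream rather than from the encoder callback, it has to be split into NALUs first. A minimal splitter sketch (hypothetical helper names; it also accepts 3-byte start codes, which Annex B allows):

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Finds the next start code (00 00 01 or 00 00 00 01) at or after `from`.
 * Returns its offset and stores its length in *sc_len, or returns `len`
 * (with *sc_len = 0) if there is none. */
static size_t find_start_code(const uint8_t *buf, size_t len, size_t from,
                              size_t *sc_len) {
    for (size_t i = from; i + 3 <= len; i++) {
        if (buf[i] != 0 || buf[i + 1] != 0) continue;
        if (buf[i + 2] == 1) { *sc_len = 3; return i; }
        if (buf[i + 2] == 0 && i + 4 <= len && buf[i + 3] == 1) {
            *sc_len = 4;
            return i;
        }
    }
    *sc_len = 0;
    return len;
}

/* Yields the next non-empty NALU, searching from *pos. On success stores
 * the payload offset/length (start code excluded), advances *pos past it
 * and returns 1; returns 0 when nothing is left. */
static int next_nalu(const uint8_t *buf, size_t len, size_t *pos,
                     size_t *nalu_off, size_t *nalu_len) {
    size_t sc_len;
    size_t start = find_start_code(buf, len, *pos, &sc_len);
    while (start < len) {
        size_t payload = start + sc_len;
        size_t next_sc_len;
        size_t next = find_start_code(buf, len, payload, &next_sc_len);
        if (next > payload) {
            *nalu_off = payload;
            *nalu_len = next - payload;
            *pos = next;
            return 1;
        }
        start = next; /* empty NALU: move on to the following start code */
        sc_len = next_sc_len;
    }
    *pos = len;
    return 0;
}
```

Each payload found this way would then be copied behind a 4-byte start code before being passed to decodeH264Data:, since that method reads the NALU type from byte 4.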

Decode output callback

void videoDecompressionOutputCallback(void * CM_NULLABLE decompressionOutputRefCon,

void * CM_NULLABLE sourceFrameRefCon,

OSStatus status,

VTDecodeInfoFlags infoFlags,

CM_NULLABLE CVImageBufferRef imageBuffer,

CMTime presentationTimeStamp,

CMTime presentationDuration ) {

if (status != noErr || imageBuffer == NULL) { // imageBuffer is NULL when the frame was dropped

NSLog(@"Video hard decode callback error status=%d", (int)status);

return;

}

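// sourceFrameRefCon is the address of outputPixelBuffer from VTDecompressionSessionDecodeFrame; hand the decoded buffer back through it, retained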

CVPixelBufferRef *outputPixelBuffer = (CVPixelBufferRef *)sourceFrameRefCon;

*outputPixelBuffer = CVPixelBufferRetain(imageBuffer);

// Recover self

Demo2ViewController *decoder = (__bridge Demo2ViewController *)(decompressionOutputRefCon);

// Continue on the decoder callback queue

dispatch_async(decoder.decoderCallbackQueue, ^{

// Pass the decoded imageBuffer on from here asynchronously (e.g. to a renderer)

// Release the buffer: balances the CVPixelBufferRetain above

CVPixelBufferRelease(imageBuffer);

});

}


Complete code

//

// Demo2ViewController.m

// Demo

//

// Created by Noah on 2020/3/19.

// Copyright © 2020 Noah. All rights reserved.

//

#import "Demo2ViewController.h"

#import <AVFoundation/AVFoundation.h>

#import <VideoToolbox/VideoToolbox.h>

@interface Demo2ViewController ()<AVCaptureVideoDataOutputSampleBufferDelegate>

{

long frameID;

BOOL hasSpsPps; // whether the SPS and PPS have already been obtained

NSMutableData *sps;

NSMutableData *pps;

uint8_t *_sps;

NSUInteger _spsSize;

uint8_t *_pps;

NSUInteger _ppsSize;

CMVideoFormatDescriptionRef _decodeDesc;

}

@property (nonatomic, strong) AVCaptureSession *captureSession;

@property (nonatomic, strong) dispatch_queue_t captureQueue;

@property (nonatomic, strong) AVCaptureDeviceInput *videoDataInput;

@property (nonatomic, strong) AVCaptureDeviceInput *frontCamera;

@property (nonatomic, strong) AVCaptureDeviceInput *backCamera;

@property (nonatomic, strong) AVCaptureVideoDataOutput *videoDataOutput;

@property (nonatomic, strong) AVCaptureConnection *videoConnection;

@property (nonatomic, strong) AVCaptureVideoPreviewLayer *videoPreviewLayer;

@property (nonatomic) VTCompressionSessionRef encoderSession;

@property (nonatomic, strong) dispatch_queue_t encoderQueue;

@property (nonatomic, strong) dispatch_queue_t encoderCallbackQueue;

@property (nonatomic) VTDecompressionSessionRef decoderSession;

@property (nonatomic, strong) dispatch_queue_t decoderQueue;

@property (nonatomic, strong) dispatch_queue_t decoderCallbackQueue;

// Width of the captured video

@property (nonatomic, assign, readonly) NSUInteger width;

// Height of the captured video

@property (nonatomic, assign, readonly) NSUInteger height;

@end

const Byte StartCode[] = "\x00\x00\x00\x01";

@implementation Demo2ViewController

- (void)viewDidLoad {

[super viewDidLoad];

// Do any additional setup after loading the view.

[self setupVideo];

[self.captureSession startRunning];

}

#pragma mark - Video setup

- (void)setupVideo{

NSArray *devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];

for (AVCaptureDevice *device in devices) {

if (device.position == AVCaptureDevicePositionBack) {

self.backCamera = [AVCaptureDeviceInput deviceInputWithDevice:device error:nil];

}else{

self.frontCamera = [AVCaptureDeviceInput deviceInputWithDevice:device error:nil];

}

}

self.videoDataInput = self.backCamera;

self.videoDataOutput = [[AVCaptureVideoDataOutput alloc]init];

[self.videoDataOutput setSampleBufferDelegate:self queue:self.captureQueue];

[self.videoDataOutput setAlwaysDiscardsLateVideoFrames:YES];

[self.videoDataOutput setVideoSettings:@{(__bridge NSString *)kCVPixelBufferPixelFormatTypeKey:@(kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)}];

[self.captureSession beginConfiguration];

if ([self.captureSession canAddInput:self.videoDataInput]) {

[self.captureSession addInput:self.videoDataInput];

}

if ([self.captureSession canAddOutput:self.videoDataOutput]) {

[self.captureSession addOutput:self.videoDataOutput];

}

[self setVideoPreset];

[self.captureSession commitConfiguration];

self.videoConnection = [self.videoDataOutput connectionWithMediaType:AVMediaTypeVideo];

self.videoConnection.videoOrientation = AVCaptureVideoOrientationPortrait;

[self updateFps:25];

self.videoPreviewLayer = [AVCaptureVideoPreviewLayer layerWithSession:self.captureSession];

self.videoPreviewLayer.frame = self.view.bounds;

self.videoPreviewLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;

[self.view.layer addSublayer:self.videoPreviewLayer];

}

- (void)setVideoPreset {

if ([self.captureSession canSetSessionPreset:AVCaptureSessionPreset1920x1080]) {

[self.captureSession setSessionPreset:AVCaptureSessionPreset1920x1080];

_width = 1080; _height = 1920;

} else if ([self.captureSession canSetSessionPreset:AVCaptureSessionPreset1280x720]) {

[self.captureSession setSessionPreset:AVCaptureSessionPreset1280x720];

_width = 720; _height = 1280;

} else if ([self.captureSession canSetSessionPreset:AVCaptureSessionPreset640x480]) {

[self.captureSession setSessionPreset:AVCaptureSessionPreset640x480];

_width = 480; _height = 640;

}

}

-(void)updateFps:(NSInteger) fps{

// Get the capture devices

NSArray *videoDevices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];

// Iterate over all devices (front and back cameras)

for (AVCaptureDevice *vDevice in videoDevices) {

// Maximum frame rate supported by the active format

float maxRate = [(AVFrameRateRange *)[vDevice.activeFormat.videoSupportedFrameRateRanges objectAtIndex:0] maxFrameRate];

// Only apply the requested fps if it does not exceed the maximum

if (maxRate >= fps) {

// Apply the frame rate (frame duration = 1/fps)

if ([vDevice lockForConfiguration:NULL]) {

vDevice.activeVideoMinFrameDuration = CMTimeMake(10, (int)(fps * 10));

vDevice.activeVideoMaxFrameDuration = vDevice.activeVideoMinFrameDuration;

[vDevice unlockForConfiguration];

}

}

}

}

#pragma mark - Lazy initialization

- (AVCaptureSession *)captureSession{

if (!_captureSession) {

_captureSession = [[AVCaptureSession alloc]init];

}

return _captureSession;

}

- (dispatch_queue_t)captureQueue{

if (!_captureQueue) {

_captureQueue = dispatch_queue_create("capture queue", NULL);

}

return _captureQueue;

}

- (dispatch_queue_t)encoderQueue{

if (!_encoderQueue) {

_encoderQueue = dispatch_queue_create("encoder queue", NULL);

}

return _encoderQueue;

}

- (dispatch_queue_t)encoderCallbackQueue{

if (!_encoderCallbackQueue) {

_encoderCallbackQueue = dispatch_queue_create("encoder callback queue", NULL);

}

return _encoderCallbackQueue;

}

- (dispatch_queue_t)decoderQueue{

if (!_decoderQueue) {

_decoderQueue = dispatch_queue_create("decoder queue", NULL);

}

return _decoderQueue;

}

- (dispatch_queue_t)decoderCallbackQueue{

if (!_decoderCallbackQueue) {

_decoderCallbackQueue = dispatch_queue_create("decoder callback queue", NULL);

}

return _decoderCallbackQueue;

}

#pragma mark - Encoding

- (void)encodeInit{

OSStatus status = VTCompressionSessionCreate(kCFAllocatorDefault, (uint32_t)_width, (uint32_t)_height, kCMVideoCodecType_H264, NULL, NULL, NULL, encodeCallBack, (__bridge void * _Nullable)(self), &_encoderSession);

if (status != noErr) {

return;

}

// Enable real-time encoding

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_RealTime, kCFBooleanTrue);

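// Baseline profile: it has no B-frames

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_ProfileLevel, kVTProfileLevel_H264_Baseline_AutoLevel);

// Also disallow frame reordering explicitly, so no B-frames are produced

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_AllowFrameReordering, kCFBooleanFalse);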

// Average bit rate in bits per second (height * 1000)

CFNumberRef bitRate = (__bridge CFNumberRef)(@(_height*1000));

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_AverageBitRate, bitRate);

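// Hard data-rate cap: DataRateLimits takes [bytes, seconds] pairs, so this allows
// at most 4x the average bit rate (converted from bits to bytes) in any 1-second window

CFArrayRef limits = (__bridge CFArrayRef)(@[@(_height*1000*4/8), @1]);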

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_DataRateLimits, limits);

// Expected frame rate (FPS)

CFNumberRef fps = (__bridge CFNumberRef)(@(25));

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_ExpectedFrameRate, fps);

// Maximum keyframe (I-frame) interval: 50 frames, i.e. 2 seconds at 25 fps

CFNumberRef maxKeyFrameInterval = (__bridge CFNumberRef)(@(25*2));

status = VTSessionSetProperty(_encoderSession, kVTCompressionPropertyKey_MaxKeyFrameInterval, maxKeyFrameInterval);

// Prepare to encode

status = VTCompressionSessionPrepareToEncodeFrames(_encoderSession);

}

void encodeCallBack (

void * CM_NULLABLE outputCallbackRefCon,

void * CM_NULLABLE sourceFrameRefCon,

OSStatus status,

VTEncodeInfoFlags infoFlags,

CM_NULLABLE CMSampleBufferRef sampleBuffer ){

if (status != noErr) {

NSLog(@"encodeVideoCallBack: encode error, status = %d",(int)status);

return;

}

if (!CMSampleBufferDataIsReady(sampleBuffer)) {

NSLog(@"encodeVideoCallBack: data is not ready");

return;

}

Demo2ViewController *VC = (__bridge Demo2ViewController *)(outputCallbackRefCon);

// Check whether this is a keyframe

BOOL isKeyFrame = NO;

CFArrayRef attachArray = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, true);

isKeyFrame = !CFDictionaryContainsKey(CFArrayGetValueAtIndex(attachArray, 0), kCMSampleAttachmentKey_NotSync); // note the negation: a sample without the NotSync key is a keyframe

if (isKeyFrame && !VC->hasSpsPps) {

size_t spsSize, spsCount;

size_t ppsSize, ppsCount;

const uint8_t *spsData, *ppsData;

// Get the format description, which carries the SPS and PPS

CMFormatDescriptionRef formatDesc = CMSampleBufferGetFormatDescription(sampleBuffer);

OSStatus status1 = CMVideoFormatDescriptionGetH264ParameterSetAtIndex(formatDesc, 0, &spsData, &spsSize, &spsCount, 0);

OSStatus status2 = CMVideoFormatDescriptionGetH264ParameterSetAtIndex(formatDesc, 1, &ppsData, &ppsSize, &ppsCount, 0);

if (status1 == noErr && status2 == noErr) {

VC->hasSpsPps = true;

//sps data

VC->sps = [NSMutableData dataWithCapacity:4 + spsSize];

[VC->sps appendBytes:StartCode length:4];

[VC->sps appendBytes:spsData length:spsSize];

//pps data

VC->pps = [NSMutableData dataWithCapacity:4 + ppsSize];

[VC->pps appendBytes:StartCode length:4];

[VC->pps appendBytes:ppsData length:ppsSize];

[VC decodeH264Data:VC->sps];

[VC decodeH264Data:VC->pps];

}

}

// Get the NALU data

size_t lengthAtOffset, totalLength;

char *dataPoint;

// Get a pointer to the encoded data in dataPoint (no copy is made)

CMBlockBufferRef blockBuffer = CMSampleBufferGetDataBuffer(sampleBuffer);

OSStatus error = CMBlockBufferGetDataPointer(blockBuffer, 0, &lengthAtOffset, &totalLength, &dataPoint);

if (error != kCMBlockBufferNoErr) {

NSLog(@"VideoEncodeCallback: get datapoint failed, status = %d", (int)error);

return;

}

// Walk through the NALUs in the buffer

size_t offet = 0;

// The first 4 bytes of each returned NALU are not a 0001 start code but the NALU length in big-endian byte order (AVCC format)

const int lengthInfoSize = 4;

while (offet < totalLength - lengthInfoSize) {

uint32_t naluLength = 0;

// Read the NALU length

memcpy(&naluLength, dataPoint + offet, lengthInfoSize);

// Convert from big-endian to host byte order

naluLength = CFSwapInt32BigToHost(naluLength);

// Build the encoded NALU with a 4-byte start code in front (Annex B)

NSMutableData *data = [NSMutableData dataWithCapacity:4 + naluLength];

[data appendBytes:StartCode length:4];

[data appendBytes:dataPoint + offet + lengthInfoSize length:naluLength];

dispatch_async(VC.encoderCallbackQueue, ^{

[VC decodeH264Data:data];

});

// Advance to the next NALU

offet += lengthInfoSize + naluLength;

}

}

- (void)encodeSampleBuffer:(CMSampleBufferRef)sampleBuffer{

if (!self.encoderSession) {

[self encodeInit];

}

CFRetain(sampleBuffer);

dispatch_async(self.encoderQueue, ^{

CVImageBufferRef imageBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);

CMTime TimeStamp = CMTimeMake(self->frameID, 25); // one tick per frame at 25 fps, matching the expected frame rate

self->frameID++;

CMTime duration = kCMTimeInvalid;

VTEncodeInfoFlags infoFlagsOut;

OSStatus status = VTCompressionSessionEncodeFrame(self.encoderSession, imageBuffer, TimeStamp, duration, NULL, NULL, &infoFlagsOut);

if (status != noErr) {

NSLog(@"VTCompressionSessionEncodeFrame failed, status = %d", (int)status);

}

CFRelease(sampleBuffer);

});

}

#pragma mark - Video decoding

// Video decoder initialization

- (void)decodeVideoInit {

const uint8_t * const parameterSetPointers[2] = {_sps, _pps};

const size_t parameterSetSizes[2] = {_spsSize, _ppsSize};

int naluHeaderLen = 4;

// Build the format description from the SPS and PPS

OSStatus status = CMVideoFormatDescriptionCreateFromH264ParameterSets(kCFAllocatorDefault, 2, parameterSetPointers, parameterSetSizes, naluHeaderLen, &_decodeDesc);

if (status != noErr) {

NSLog(@"Video hard DecodeSession create H264ParameterSets(sps, pps) failed status= %d", (int)status);

return;

}

// Attributes of the decoded output pixel buffers

NSDictionary *destinationPixBufferAttrs =

@{

(id)kCVPixelBufferPixelFormatTypeKey: [NSNumber numberWithInt:kCVPixelFormatType_420YpCbCr8BiPlanarFullRange], // on iOS this is NV12 (UVUV interleaved), not NV21 (VUVU)

(id)kCVPixelBufferWidthKey: [NSNumber numberWithInteger:_width],

(id)kCVPixelBufferHeightKey: [NSNumber numberWithInteger:_height],

(id)kCVPixelBufferOpenGLCompatibilityKey: [NSNumber numberWithBool:true]

};

VTDecompressionOutputCallbackRecord callbackRecord;

callbackRecord.decompressionOutputCallback = videoDecompressionOutputCallback;

callbackRecord.decompressionOutputRefCon = (__bridge void * _Nullable)(self);

// Create the decompression session

status = VTDecompressionSessionCreate(kCFAllocatorDefault, _decodeDesc, NULL, (__bridge CFDictionaryRef _Nullable)(destinationPixBufferAttrs), &callbackRecord, &_decoderSession);

if (status != noErr) {

NSLog(@"Video hard DecodeSession create failed status= %d", (int)status);

return;

}

// Enable real-time decoding

VTSessionSetProperty(_decoderSession, kVTDecompressionPropertyKey_RealTime, kCFBooleanTrue);

}

/** Decode output callback */

void videoDecompressionOutputCallback(void * CM_NULLABLE decompressionOutputRefCon,

void * CM_NULLABLE sourceFrameRefCon,

OSStatus status,

VTDecodeInfoFlags infoFlags,

CM_NULLABLE CVImageBufferRef imageBuffer,

CMTime presentationTimeStamp,

CMTime presentationDuration ) {

if (status != noErr || imageBuffer == NULL) { // imageBuffer is NULL when the frame was dropped

NSLog(@"Video hard decode callback error status=%d", (int)status);

return;

}

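// sourceFrameRefCon is the address of outputPixelBuffer from VTDecompressionSessionDecodeFrame; hand the decoded buffer back through it, retained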

CVPixelBufferRef *outputPixelBuffer = (CVPixelBufferRef *)sourceFrameRefCon;

*outputPixelBuffer = CVPixelBufferRetain(imageBuffer);

// Recover self

Demo2ViewController *decoder = (__bridge Demo2ViewController *)(decompressionOutputRefCon);

// Continue on the decoder callback queue

dispatch_async(decoder.decoderCallbackQueue, ^{

// Pass the decoded imageBuffer on from here asynchronously (e.g. to a renderer)

// Release the buffer: balances the CVPixelBufferRetain above

CVPixelBufferRelease(imageBuffer);

});

}

// Decode

- (void)decodeH264Data:(NSData *)h264Data {

// Create the decoder only once both the SPS and the PPS have been received

if (!self.decoderSession && _sps && _pps) {

[self decodeVideoInit];

}

uint8_t *frame = (uint8_t *)h264Data.bytes;

uint32_t size = (uint32_t)h264Data.length;

int type = (frame[4] & 0x1F);

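// Replace the 4-byte start code with the NALU length as a 4-byte big-endian value (the byte reversal below assumes a little-endian host, as on iOS)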

uint32_t naluSize = size - 4;

uint8_t *pNaluSize = (uint8_t *)(&naluSize);

frame[0] = *(pNaluSize + 3);

frame[1] = *(pNaluSize + 2);

frame[2] = *(pNaluSize + 1);

frame[3] = *(pNaluSize);

switch (type) {

case 0x05: // IDR slice (keyframe)

[self decode:frame withSize:size];

break;

case 0x06:

//NSLog(@"SEI"); // supplemental enhancement information, ignored here

break;

case 0x07: //sps

_spsSize = naluSize;

free(_sps); // release any previously stored SPS

_sps = malloc(_spsSize);

memcpy(_sps, &frame[4], _spsSize);

break;

case 0x08: //pps

_ppsSize = naluSize;

free(_pps); // release any previously stored PPS

_pps = malloc(_ppsSize);

memcpy(_pps, &frame[4], _ppsSize);

break;

default: // other NALU types, e.g. non-IDR slices (1-4)

[self decode:frame withSize:size];

break;

}

}

// Decode one length-prefixed NALU

- (void)decode:(uint8_t *)frame withSize:(uint32_t)frameSize {

CVPixelBufferRef outputPixelBuffer = NULL;

CMBlockBufferRef blockBuffer = NULL;

CMBlockBufferFlags flag0 = 0;

OSStatus status = CMBlockBufferCreateWithMemoryBlock(kCFAllocatorDefault, frame, frameSize, kCFAllocatorNull, NULL, 0, frameSize, flag0, &blockBuffer);

if (status != kCMBlockBufferNoErr) {

NSLog(@"Video hard decode create blockBuffer error code=%d", (int)status);

return;

}

CMSampleBufferRef sampleBuffer = NULL;

const size_t sampleSizeArray[] = {frameSize};

status = CMSampleBufferCreateReady(kCFAllocatorDefault, blockBuffer, _decodeDesc, 1, 0, NULL, 1, sampleSizeArray, &sampleBuffer);

if (status != noErr || !sampleBuffer) {

NSLog(@"Video hard decode create sampleBuffer failed status=%d", (int)status);

CFRelease(blockBuffer);

return;

}

// Decode

// Hint that the decoder may use a low-power mode that decodes no faster than 1x real time

VTDecodeFrameFlags flag1 = kVTDecodeFrame_1xRealTimePlayback;

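// Output parameter: VideoToolbox reports here whether decoding ran asynchronously or the frame was dropped
// (asynchronous decoding is requested with kVTDecodeFrame_EnableAsynchronousDecompression in the decode flags)

VTDecodeInfoFlags infoFlag = 0;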

// Decode the sample

/*

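Parameter 1: the decompression session

Parameter 2: the source data, a CMSampleBuffer containing one or more video frames

Parameter 3: decode flags

Parameter 4: sourceFrameRefCon, handed to the callback; here the address of outputPixelBuffer, which the callback fills in

Parameter 5: receives information about the decode (synchronous/asynchronous, dropped frame)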

*/

status = VTDecompressionSessionDecodeFrame(_decoderSession, sampleBuffer, flag1, &outputPixelBuffer, &infoFlag);

if (status == kVTInvalidSessionErr) {

NSLog(@"Video hard decode InvalidSessionErr status =%d", (int)status);

} else if (status == kVTVideoDecoderBadDataErr) {

NSLog(@"Video hard decode BadData status =%d", (int)status);

} else if (status != noErr) {

NSLog(@"Video hard decode failed status =%d", (int)status);

}

CFRelease(sampleBuffer);

CFRelease(blockBuffer);

}

#pragma mark - AVCaptureVideoDataOutputSampleBufferDelegate

- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection{

if (connection == self.videoConnection) {

[self encodeSampleBuffer:sampleBuffer];

}

}

#pragma mark - Teardown

- (void)dealloc {

if (_decoderSession) {

VTDecompressionSessionInvalidate(_decoderSession);

CFRelease(_decoderSession);

_decoderSession = NULL;

}

if (_encoderSession) {

VTCompressionSessionInvalidate(_encoderSession);

CFRelease(_encoderSession);

_encoderSession = NULL;

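}

// Free the stored parameter sets and the format description

free(_sps);

free(_pps);

if (_decodeDesc) {

CFRelease(_decodeDesc);

}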

}

@end

AudioToolbox

Encoding

Initialization

- (void)setupAudioConverter:(CMSampleBufferRef)sampleBuffer{

// Read the input (PCM) format from the live sample buffer

AudioStreamBasicDescription inputDescription = *CMAudioFormatDescriptionGetStreamBasicDescription(CMSampleBufferGetFormatDescription(sampleBuffer));

// Define the AAC output format: AAC LC, 44.1 kHz, mono, 1024 frames per packet

AudioStreamBasicDescription outputDescription =

{

.mSampleRate = 44100,

.mFormatID = kAudioFormatMPEG4AAC,

.mFormatFlags = kMPEG4Object_AAC_LC,

.mBytesPerPacket = 0,

.mFramesPerPacket = 1024,

.mBytesPerFrame = 0,

.mChannelsPerFrame = 1,

.mBitsPerChannel = 0,

.mReserved = 0

};

// Create the converter (the AAC encoder)

OSStatus status = AudioConverterNew(&inputDescription, &outputDescription, &_audioConverter);

if (status != noErr) {

NSLog(@"AudioConverterNew failed, status = %d", (int)status);

return;

}

// Set the encoding quality

UInt32 temp = kAudioConverterQuality_High;

AudioConverterSetProperty(_audioConverter, kAudioConverterCodecQuality, sizeof(temp), &temp);

// Set the bit rate (96 kbps)

UInt32 bitRate = 96000;

AudioConverterSetProperty(_audioConverter, kAudioConverterEncodeBitRate, sizeof(bitRate), &bitRate);

}

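First, read the input format from the live audio data, then define the AAC output format, and create the converter (the encoder) from these two descriptions. Once it has been created, set a few of its properties: the encoding quality and the bit rate.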
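The output description fixes mFramesPerPacket at 1024, so every AAC packet consumes 1024 PCM frames. A small arithmetic sketch, assuming interleaved 16-bit integer PCM input (typical for microphone capture, but in this code the actual input format is read from the sample buffer); the names are hypothetical:

```c
#include <assert.h>
#include <stdint.h>

/* outputDescription sets mFramesPerPacket = 1024 for AAC LC. */
enum { kAACFramesPerPacket = 1024 };

/* PCM bytes that make up one AAC packet, for interleaved integer PCM. */
static uint32_t pcm_bytes_per_aac_packet(uint32_t channels, uint32_t bits_per_channel) {
    return kAACFramesPerPacket * channels * (bits_per_channel / 8);
}

/* Duration of one AAC packet in microseconds, rounded down. */
static uint64_t aac_packet_duration_us(uint32_t sample_rate) {
    return (uint64_t)kAACFramesPerPacket * 1000000u / sample_rate;
}
```

At 44.1 kHz one packet therefore covers about 23.2 ms of audio, and for 16-bit mono it needs 2048 bytes of PCM.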

Encoding function

- (void)setAudioSampleBuffer:(CMSampleBufferRef)sampleBuffer{

CFRetain(sampleBuffer);

if (!self.audioConverter) {

[self setupAudioConverter:sampleBuffer];

}

dispatch_async(self.encodeQueue, ^{

CMBlockBufferRef bufferRef = CMSampleBufferGetDataBuffer(sampleBuffer);

CFRetain(bufferRef);

CMBlockBufferGetDataPointer(bufferRef, 0, NULL, &self->_pcmBufferSize, &self->_pcmBuffer);

// Output buffer for the encoded AAC data, sized to match the PCM input

char *pcmbuffer = malloc(self->_pcmBufferSize);

memset(pcmbuffer, 0, self->_pcmBufferSize);

AudioBufferList audioBufferList = {0};

audioBufferList.mNumberBuffers = 1;

audioBufferList.mBuffers[0].mData = pcmbuffer;

audioBufferList.mBuffers[0].mDataByteSize = (uint32_t)self->_pcmBufferSize;

audioBufferList.mBuffers[0].mNumberChannels = 1;

// Number of AAC packets requested from the converter per call

UInt32 dataPacketSize = 1;

OSStatus status = AudioConverterFillComplexBuffer(self.audioConverter, aacEncodeInputDataProc, (__bridge void * _Nullable)(self), &dataPacketSize, &audioBufferList, NULL);

if (status == noErr) {

NSData *aacData = [NSData dataWithBytes:audioBufferList.mBuffers[0].mData length:audioBufferList.mBuffers[0].mDataByteSize];

// Prepend an ADTS header only when writing an .aac file; skip it to keep the raw AAC stream (the decoder below expects raw packets)

//NSData *adtsHeader = [self adtsDataForPacketLength:aacData.length];

//NSMutableData *fullData = [NSMutableData dataWithCapacity:adtsHeader.length + aacData.length];

//[fullData appendData:adtsHeader];

//[fullData appendData:aacData];

dispatch_async(self.encodeCallbackQueue, ^{

[self decodeAudioAACData:aacData];

});

}

// Free the output buffer on every path, not only on success

free(pcmbuffer);

CFRelease(bufferRef);

CFRelease(sampleBuffer);

});

}

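Audio encoding works differently from video encoding. First, get the PCM data and keep it in instance variables (_pcmBuffer and _pcmBufferSize), then create an AudioBufferList to receive the encoded output. The input itself is supplied in the input callback: pass the callback and the AudioBufferList to AudioConverterFillComplexBuffer, which calls the callback whenever it needs more PCM and writes the encoded AAC into the buffer list.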
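The commented-out lines in the encoding function prepend an ADTS header via adtsDataForPacketLength (listed in the full code below). Without a CRC, ADTS is a 7-byte header carrying the profile, sampling-frequency index, channel configuration, and total frame length, which is what lets a player find frame boundaries in an .aac file. Here is a plain-C sketch of the same bit packing with the parameters made explicit. The function name adts_write_header is hypothetical, and the sketch assumes frames shorter than 8192 bytes (the limit of the 13-bit length field).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: writes a 7-byte ADTS header (no CRC) for one raw AAC
 * packet of `payload_len` bytes. profile: 2 = AAC-LC; freq_idx: 4 = 44100 Hz;
 * chan_cfg: 1 = mono. Returns the header length. */
static size_t adts_write_header(uint8_t out[7], size_t payload_len,
                                int profile, int freq_idx, int chan_cfg) {
    size_t full_len = payload_len + 7;          /* frame length includes the header */
    assert(full_len < (1u << 13));              /* must fit the 13-bit length field */
    out[0] = 0xFF;                              /* syncword, high 8 bits */
    out[1] = 0xF9;                              /* syncword low 4 bits, ID=1 (MPEG-2), layer 00, no CRC */
    out[2] = (uint8_t)(((profile - 1) << 6) | (freq_idx << 2) | (chan_cfg >> 2));
    out[3] = (uint8_t)(((chan_cfg & 3) << 6) | (full_len >> 11));
    out[4] = (uint8_t)((full_len & 0x7FF) >> 3);
    out[5] = (uint8_t)(((full_len & 7) << 5) | 0x1F);  /* + buffer fullness 0x7FF, high 5 bits */
    out[6] = 0xFC;                              /* buffer fullness low 6 bits, 1 raw data block */
    return 7;
}
```

For a 100-byte AAC-LC packet at 44100 Hz in mono (profile 2, index 4, configuration 1), this produces FF F9 50 40 0D 7F FC, with the frame length field set to 107 (payload plus header).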

Encoding input callback

static OSStatus aacEncodeInputDataProc(

AudioConverterRef inAudioConverter,

UInt32 *ioNumberDataPackets,

AudioBufferList *ioData,

AudioStreamPacketDescription **outDataPacketDescription,

void *inUserData){

AudioViewController *audioVC = (__bridge AudioViewController *)(inUserData);

if (!audioVC.pcmBufferSize) {

*ioNumberDataPackets = 0;

return -1;

}

// Hand the captured PCM to the converter

ioData->mBuffers[0].mDataByteSize = (UInt32)audioVC.pcmBufferSize;

ioData->mBuffers[0].mData = audioVC.pcmBuffer;

ioData->mBuffers[0].mNumberChannels = 1;

audioVC.pcmBufferSize = 0;

*ioNumberDataPackets = 1;

return noErr;

}

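The input callback hands the PCM data to the converter: it supplies the buffer once, then sets pcmBufferSize to 0 so that the next call reports that no more data is available. With the input supplied, the converter can encode it.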
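The control flow is a pull model: the converter calls the input callback, and the callback signals "nothing more right now" by reporting 0 packets and a non-zero status. The plain-C sketch below mimics that contract with a hypothetical mock converter (mock_fill) and a hypothetical source struct standing in for _pcmBuffer/_pcmBufferSize. It makes no AudioToolbox calls and simplifies the real signature (no AudioBufferList); it only illustrates the hand-off-once-then-drain pattern. For linear PCM one packet is one frame, so packets and frames are counted interchangeably here.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for _pcmBuffer/_pcmBufferSize: frames still to hand over. */
typedef struct {
    const short *samples;
    size_t count;               /* 0 means the buffer has already been handed over */
} PcmSource;

/* Simplified input-proc contract: on return, *io_packets holds the number
 * of packets supplied (for PCM, one packet = one frame). */
typedef int (*InputProc)(void *user, const short **data, size_t *io_packets);

/* Mirrors aacEncodeInputDataProc: supply everything once, then report "no data". */
static int supply_once(void *user, const short **data, size_t *io_packets) {
    PcmSource *src = (PcmSource *)user;
    if (src->count == 0) {
        *io_packets = 0;        /* write through the pointer */
        return -1;              /* non-zero status: nothing available right now */
    }
    *data = src->samples;
    *io_packets = src->count;
    src->count = 0;             /* like setting pcmBufferSize = 0 */
    return 0;
}

/* Hypothetical mock of the converter's pull loop: keeps asking for input
 * until `needed` frames have been gathered or the callback has no data.
 * A real converter would also buffer any surplus for its next call. */
static size_t mock_fill(InputProc proc, void *user, size_t needed) {
    size_t got = 0;
    while (got < needed) {
        const short *data = NULL;
        size_t packets = needed - got;      /* request what is still missing */
        if (proc(user, &data, &packets) != 0 || packets == 0) {
            break;
        }
        assert(data != NULL);
        got += packets;                     /* a real converter would encode `data` here */
    }
    return got;
}
```

If a capture buffer holds fewer than the 1024 frames one AAC packet needs, the loop stops early with a partial count, which is why a real converter keeps leftover input between calls.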

Decoding

Initialization

- (void)setupEncoder {

// Output format: PCM

AudioStreamBasicDescription outputAudioDes = {0};

outputAudioDes.mSampleRate = (Float64)self.sampleRate; // sample rate

outputAudioDes.mChannelsPerFrame = (UInt32)self.channelCount; // output channel count

outputAudioDes.mFormatID = kAudioFormatLinearPCM; // output format

outputAudioDes.mFormatFlags = (kAudioFormatFlagIsSignedInteger | kAudioFormatFlagIsPacked); // flags: 4 | 8 = 12

outputAudioDes.mFramesPerPacket = 1; // frames per packet (always 1 for PCM)

outputAudioDes.mBitsPerChannel = 16; // bits per sample in each channel

outputAudioDes.mBytesPerFrame = outputAudioDes.mBitsPerChannel / 8 * outputAudioDes.mChannelsPerFrame; // bytes per frame (bits per sample / 8 * channels)

outputAudioDes.mBytesPerPacket = outputAudioDes.mBytesPerFrame * outputAudioDes.mFramesPerPacket; // bytes per packet (bytes per frame * frames per packet)

outputAudioDes.mReserved = 0; // reserved, must be 0

// Input format: AAC

AudioStreamBasicDescription inputAduioDes = {0};

inputAduioDes.mSampleRate = (Float64)self.sampleRate;

inputAduioDes.mFormatID = kAudioFormatMPEG4AAC;

inputAduioDes.mFormatFlags = kMPEG4Object_AAC_LC;

inputAduioDes.mFramesPerPacket = 1024;

inputAduioDes.mChannelsPerFrame = (UInt32)self.channelCount;

// Let AudioFormat fill in the remaining fields of the input (AAC) description

UInt32 inDesSize = sizeof(inputAduioDes);

AudioFormatGetProperty(kAudioFormatProperty_FormatInfo, 0, NULL, &inDesSize, &inputAduioDes);

OSStatus status = AudioConverterNew(&inputAduioDes, &outputAudioDes, &_audioDecodeConverter);

if (status != noErr) {

NSLog(@"Error: failed to create the hardware AAC decoder, status = %d", (int)status);

return;

}

}

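Decoder initialization mirrors encoder initialization with the formats swapped: the input is AAC and the output is 16-bit PCM, so it is not covered in detail. Note that the method is named setupEncoder even though it creates the decoder.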
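The PCM fields in the decoder's output description are derived from one another, and the "12" in the flags comment is simply 4 | 8. The plain-C sketch below reproduces that derivation with a hypothetical struct that mirrors only the fields involved; it is not Apple's AudioStreamBasicDescription, and the two flag macros are assumed to carry the same bit values as kAudioFormatFlagIsSignedInteger and kAudioFormatFlagIsPacked.

```c
#include <assert.h>
#include <stdint.h>

/* Same bit values as kAudioFormatFlagIsSignedInteger and kAudioFormatFlagIsPacked. */
#define PCM_FLAG_SIGNED_INTEGER (1u << 2)
#define PCM_FLAG_PACKED         (1u << 3)

/* Hypothetical mirror of the PCM-related AudioStreamBasicDescription fields. */
typedef struct {
    double   sample_rate;
    uint32_t format_flags;
    uint32_t bytes_per_packet;
    uint32_t frames_per_packet;
    uint32_t bytes_per_frame;
    uint32_t channels_per_frame;
    uint32_t bits_per_channel;
} PcmFormat;

/* Packed, interleaved signed-integer PCM, derived the same way as in setupEncoder. */
static PcmFormat make_packed_pcm(double sample_rate, uint32_t channels, uint32_t bits) {
    assert(channels > 0 && bits % 8 == 0);
    PcmFormat f;
    f.sample_rate        = sample_rate;
    f.format_flags       = PCM_FLAG_SIGNED_INTEGER | PCM_FLAG_PACKED; /* 4 | 8 = 12 */
    f.channels_per_frame = channels;
    f.bits_per_channel   = bits;
    f.frames_per_packet  = 1;                                        /* PCM: one frame per packet */
    f.bytes_per_frame    = bits / 8 * channels;
    f.bytes_per_packet   = f.bytes_per_frame * f.frames_per_packet;
    return f;
}

/* Bytes needed to hold `frames` frames of this format. */
static uint32_t pcm_buffer_bytes(const PcmFormat *f, uint32_t frames) {
    return f->bytes_per_frame * frames;
}
```

One decoded AAC packet is 1024 frames, so pcm_buffer_bytes(&f, 1024) gives 2048 × channel count bytes for 16-bit PCM, the same size the decoding function allocates.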

Decoding function

- (void)decodeAudioAACData:(NSData *)aacData {

if (!_audioDecodeConverter) {

[self setupEncoder];

}

dispatch_async(self.decodeQueue, ^{

// Wrap the AAC packet so it can be passed to the decoder input callback

CCAudioUserData userData = {0};

userData.channelCount = (UInt32)self.channelCount;

userData.data = (char *)[aacData bytes];

userData.size = (UInt32)aacData.length;

userData.packetDesc.mDataByteSize = (UInt32)aacData.length;

userData.packetDesc.mStartOffset = 0;

userData.packetDesc.mVariableFramesInPacket = 0;

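// Output buffer size and capacity in packets: one AAC packet decodes to 1024 PCM frames of 2 bytes per channel

UInt32 pcmBufferSize = (UInt32)(2048 * self.channelCount);

UInt32 pcmDataPacketSize = 1024;

// Temporary PCM buffer

uint8_t *pcmBuffer = malloc(pcmBufferSize);

memset(pcmBuffer, 0, pcmBufferSize);

// Output buffer list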

AudioBufferList outAudioBufferList = {0};

outAudioBufferList.mNumberBuffers = 1;

outAudioBufferList.mBuffers[0].mNumberChannels = (uint32_t)self.channelCount;

outAudioBufferList.mBuffers[0].mDataByteSize = (UInt32)pcmBufferSize;

outAudioBufferList.mBuffers[0].mData = pcmBuffer;

// Output packet description

AudioStreamPacketDescription outputPacketDesc = {0};

// Run the converter: it pulls AAC through the input callback and writes PCM into outAudioBufferList

OSStatus status = AudioConverterFillComplexBuffer(self->_audioDecodeConverter, AudioDecoderConverterComplexInputDataProc, &userData, &pcmDataPacketSize, &outAudioBufferList, &outputPacketDesc);

if (status != noErr) {

NSLog(@"Error: AAC Decoder error, status=%d",(int)status);

// Release the temporary buffer before bailing out

free(pcmBuffer);

return;

}

// If any PCM data was produced

if (outAudioBufferList.mBuffers[0].mDataByteSize > 0) {

NSData *rawData = [NSData dataWithBytes:outAudioBufferList.mBuffers[0].mData length:outAudioBufferList.mBuffers[0].mDataByteSize];

dispatch_async(self.decodeCallbackQueue, ^{

// Process the decoded PCM data here

NSLog(@"%@",rawData);

});

}

free(pcmBuffer);

});

}

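Audio decoding also goes through AudioConverterFillComplexBuffer, with the same function signature as encoding. The difference lies in the converter's formats: here the input is AAC, described by the packet description supplied in the callback, and the output is PCM data.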
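The decoder here receives raw AAC packets straight from the encoder. If the AAC instead came from a file or stream carrying ADTS headers (which, as noted in the encoding section, a file needs), each header would have to be stripped before the packet is handed to the decoder. A minimal plain-C sketch of that step, assuming CRC-less 7-byte headers like the ones adtsDataForPacketLength writes; the function name adts_payload is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: if `p` starts with a CRC-less ADTS header, sets
 * *payload to the raw AAC packet after it and returns the packet length.
 * Returns 0 if there is no valid header or the frame is incomplete. */
static size_t adts_payload(const uint8_t *p, size_t avail, const uint8_t **payload) {
    assert(p != NULL && payload != NULL);
    if (avail < 7 || p[0] != 0xFF || (p[1] & 0xF0) != 0xF0) return 0; /* 12-bit syncword 0xFFF */
    if ((p[1] & 0x01) == 0) return 0;   /* CRC present (9-byte header): not handled here */
    /* 13-bit frame_length: 2 bits of p[3], 8 bits of p[4], top 3 bits of p[5] */
    size_t frame_len = ((size_t)(p[3] & 0x03) << 11) | ((size_t)p[4] << 3) | (p[5] >> 5);
    if (frame_len < 7 || frame_len > avail) return 0;
    *payload = p + 7;
    return frame_len - 7;
}
```

Advancing the read position by 7 plus the returned length moves to the next ADTS frame, and each payload can then be wrapped and decoded the way decodeAudioAACData wraps its input.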

Decoding input callback

static OSStatus AudioDecoderConverterComplexInputDataProc( AudioConverterRef inAudioConverter, UInt32 *ioNumberDataPackets, AudioBufferList *ioData, AudioStreamPacketDescription **outDataPacketDescription, void *inUserData) {

CCAudioUserData *audioDecoder = (CCAudioUserData *)(inUserData);

if (audioDecoder->size <= 0) {

*ioNumberDataPackets = 0;

return -1;

}

// Supply exactly one AAC packet and describe it

*ioNumberDataPackets = 1;

if (outDataPacketDescription) {

*outDataPacketDescription = &audioDecoder->packetDesc;

(*outDataPacketDescription)[0].mStartOffset = 0;

(*outDataPacketDescription)[0].mDataByteSize = audioDecoder->size;

(*outDataPacketDescription)[0].mVariableFramesInPacket = 0;

}

ioData->mBuffers[0].mData = audioDecoder->data;

ioData->mBuffers[0].mDataByteSize = audioDecoder->size;

ioData->mBuffers[0].mNumberChannels = audioDecoder->channelCount;

return noErr;

}

Full code

//

// AudioViewController.m

// Demo

//

// Created by Noah on 2020/3/21.

// Copyright © 2020 Noah. All rights reserved.

//

#import "AudioViewController.h"

#import <AVFoundation/AVFoundation.h>

#import <AudioToolbox/AudioToolbox.h>

typedef struct {

char * data;

UInt32 size;

UInt32 channelCount;

AudioStreamPacketDescription packetDesc;

} CCAudioUserData;

@interface AudioViewController ()<AVCaptureAudioDataOutputSampleBufferDelegate>

@property (nonatomic, strong) AVCaptureSession *captureSession;

@property (nonatomic, strong) dispatch_queue_t captureQueue;

@property (nonatomic, strong) AVCaptureInput *audioDeviceInput;

@property (nonatomic, strong) AVCaptureAudioDataOutput *audioDeviceOutput;

@property (nonatomic, strong) dispatch_queue_t encodeQueue;

@property (nonatomic, strong) dispatch_queue_t encodeCallbackQueue;

@property (nonatomic, strong) AVCaptureConnection *audioConnettion;

@property (nonatomic, unsafe_unretained) AudioConverterRef audioConverter;

@property (nonatomic) char *pcmBuffer;

@property (nonatomic) size_t pcmBufferSize;

@property (nonatomic, strong) dispatch_queue_t decodeQueue;

@property (nonatomic, strong) dispatch_queue_t decodeCallbackQueue;

@property (nonatomic) AudioConverterRef audioDecodeConverter;

@property (nonatomic) char *aacBuffer;

@property (nonatomic) UInt32 aacBufferSize;

/** Bit rate */

@property (nonatomic, assign) NSInteger bitrate;//(96000)

/** Channel count */

@property (nonatomic, assign) NSInteger channelCount;//(1)

/** Sample rate */

@property (nonatomic, assign) NSInteger sampleRate;//(default 44100)

/** Bits per sample */

@end

@implementation AudioViewController

- (void)viewDidLoad {

[super viewDidLoad];

// Do any additional setup after loading the view.

self.bitrate = 96000;

self.channelCount = 1;

self.sampleRate = 44100;

self.sampleSize = 16;

[self setupAudio];

[self.captureSession startRunning];

}

#pragma mark - Lazy loading

- (AVCaptureSession *)captureSession{

if (!_captureSession) {

_captureSession = [[AVCaptureSession alloc]init];

}

return _captureSession;

}

- (dispatch_queue_t)captureQueue{

if (!_captureQueue) {

_captureQueue = dispatch_queue_create("capture queue", NULL);

}

return _captureQueue;

}

- (dispatch_queue_t)encodeQueue{

if (!_encodeQueue) {

_encodeQueue = dispatch_queue_create("encode queue", NULL);

}

return _encodeQueue;

}

- (dispatch_queue_t)encodeCallbackQueue{

if (!_encodeCallbackQueue) {

_encodeCallbackQueue = dispatch_queue_create("encode callback queue", NULL);

}

return _encodeCallbackQueue;

}

- (dispatch_queue_t)decodeQueue{

if (!_decodeQueue) {

_decodeQueue = dispatch_queue_create("decode queue", NULL);

}

return _decodeQueue;

}

- (dispatch_queue_t)decodeCallbackQueue{

if (!_decodeCallbackQueue) {

_decodeCallbackQueue = dispatch_queue_create("decode callback queue", NULL);

}

return _decodeCallbackQueue;

}

#pragma mark - Audio capture setup

- (void)setupAudio{

AVCaptureDevice *audioDevice = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeAudio];

self.audioDeviceInput = [AVCaptureDeviceInput deviceInputWithDevice:audioDevice error:nil];

self.audioDeviceOutput = [[AVCaptureAudioDataOutput alloc]init];

[self.audioDeviceOutput setSampleBufferDelegate:self queue:self.captureQueue];

[self.captureSession beginConfiguration];

if ([self.captureSession canAddInput:self.audioDeviceInput]) {

[self.captureSession addInput:self.audioDeviceInput];

}

if ([self.captureSession canAddOutput:self.audioDeviceOutput]) {

[self.captureSession addOutput:self.audioDeviceOutput];

}

[self.captureSession commitConfiguration];

self.audioConnettion = [self.audioDeviceOutput connectionWithMediaType:AVMediaTypeAudio];

}

#pragma mark - Audio encoding

- (void)setupAudioConverter:(CMSampleBufferRef)sampleBuffer{

AudioStreamBasicDescription inputDescription = *CMAudioFormatDescriptionGetStreamBasicDescription(CMSampleBufferGetFormatDescription(sampleBuffer));

AudioStreamBasicDescription outputDescription =

{

.mSampleRate = 44100,

.mFormatID = kAudioFormatMPEG4AAC,

.mFormatFlags = kMPEG4Object_AAC_LC,

.mBytesPerPacket = 0,

.mFramesPerPacket = 1024,

.mBytesPerFrame = 0,

.mChannelsPerFrame = 1,

.mBitsPerChannel = 0,

.mReserved = 0

};

OSStatus status = AudioConverterNew(&inputDescription, &outputDescription, &_audioConverter);

if (status != noErr) {

NSLog(@"AudioConverterNew (encoder) failed, status = %d", (int)status);

return;

}

UInt32 temp = kAudioConverterQuality_High;

AudioConverterSetProperty(_audioConverter, kAudioConverterCodecQuality, sizeof(temp), &temp);

UInt32 bitRate = 96000;

AudioConverterSetProperty(_audioConverter, kAudioConverterEncodeBitRate, sizeof(bitRate), &bitRate);

}

static OSStatus aacEncodeInputDataProc(

AudioConverterRef inAudioConverter,

UInt32 *ioNumberDataPackets,

AudioBufferList *ioData,

AudioStreamPacketDescription **outDataPacketDescription,

void *inUserData){

AudioViewController *audioVC = (__bridge AudioViewController *)(inUserData);

if (!audioVC.pcmBufferSize) {

*ioNumberDataPackets = 0;

return -1;

}

ioData->mBuffers[0].mDataByteSize = (UInt32)audioVC.pcmBufferSize;

ioData->mBuffers[0].mData = audioVC.pcmBuffer;

ioData->mBuffers[0].mNumberChannels = 1;

audioVC.pcmBufferSize = 0;

*ioNumberDataPackets = 1;

return noErr;

}

- (void)setAudioSampleBuffer:(CMSampleBufferRef)sampleBuffer{

CFRetain(sampleBuffer);

if (!self.audioConverter) {

[self setupAudioConverter:sampleBuffer];

}

dispatch_async(self.encodeQueue, ^{

CMBlockBufferRef bufferRef = CMSampleBufferGetDataBuffer(sampleBuffer);

CFRetain(bufferRef);

CMBlockBufferGetDataPointer(bufferRef, 0, NULL, &self->_pcmBufferSize, &self->_pcmBuffer);

char *pcmbuffer = malloc(self->_pcmBufferSize);

memset(pcmbuffer, 0, self->_pcmBufferSize);

AudioBufferList audioBufferList = {0};

audioBufferList.mNumberBuffers = 1;

audioBufferList.mBuffers[0].mData = pcmbuffer;

audioBufferList.mBuffers[0].mDataByteSize = (uint32_t)self->_pcmBufferSize;

audioBufferList.mBuffers[0].mNumberChannels = 1;

UInt32 dataPacketSize = 1;

OSStatus status = AudioConverterFillComplexBuffer(self.audioConverter, aacEncodeInputDataProc, (__bridge void * _Nullable)(self), &dataPacketSize, &audioBufferList, NULL);

if (status == noErr) {

NSData *aacData = [NSData dataWithBytes:audioBufferList.mBuffers[0].mData length:audioBufferList.mBuffers[0].mDataByteSize];

// Prepend an ADTS header only when writing an .aac file; skip it to keep the raw AAC stream (the decoder below expects raw packets)

// NSData *adtsHeader = [self adtsDataForPacketLength:aacData.length];

// NSMutableData *fullData = [NSMutableData dataWithCapacity:adtsHeader.length + aacData.length];

// [fullData appendData:adtsHeader];

// [fullData appendData:aacData];

dispatch_async(self.encodeCallbackQueue, ^{

[self decodeAudioAACData:aacData];

});

}

// Free the output buffer on every path, not only on success

free(pcmbuffer);

CFRelease(bufferRef);

CFRelease(sampleBuffer);

});

}

// Build a 7-byte AAC ADTS header (no CRC) for a raw AAC packet of packetLength bytes

- (NSData*)adtsDataForPacketLength:(NSUInteger)packetLength {

int adtsLength = 7;

char *packet = malloc(sizeof(char) * adtsLength);

int profile = 2; // AAC LC

int freqIdx = 4; // 3: 48000 Hz, 4: 44100 Hz, 8: 16000 Hz, 11: 8000 Hz

int chanCfg = 1; // MPEG-4 Audio Channel Configuration: 1 = front-center (mono)

NSUInteger fullLength = adtsLength + packetLength;

// fill in ADTS data

packet[0] = (char)0xFF; // 11111111 = syncword

packet[1] = (char)0xF9; // 1111 1 00 1 = syncword, ID=1 (MPEG-2), layer 00, protection_absent=1 (no CRC)

packet[2] = (char)(((profile-1)<<6) + (freqIdx<<2) +(chanCfg>>2));

packet[3] = (char)(((chanCfg&3)<<6) + (fullLength>>11));

packet[4] = (char)((fullLength&0x7FF) >> 3);

packet[5] = (char)(((fullLength&7)<<5) + 0x1F);

packet[6] = (char)0xFC;

NSData *data = [NSData dataWithBytesNoCopy:packet length:adtsLength freeWhenDone:YES];

return data;

}

#pragma mark - Audio decoding

// Initialization (creates the AAC decoder despite the method name)

- (void)setupEncoder {

// Output format: PCM

AudioStreamBasicDescription outputAudioDes = {0};

outputAudioDes.mSampleRate = (Float64)self.sampleRate; // sample rate

outputAudioDes.mChannelsPerFrame = (UInt32)self.channelCount; // output channel count

outputAudioDes.mFormatID = kAudioFormatLinearPCM; // output format

outputAudioDes.mFormatFlags = (kAudioFormatFlagIsSignedInteger | kAudioFormatFlagIsPacked); // flags: 4 | 8 = 12

outputAudioDes.mFramesPerPacket = 1; // frames per packet (always 1 for PCM)

outputAudioDes.mBitsPerChannel = 16; // bits per sample in each channel

outputAudioDes.mBytesPerFrame = outputAudioDes.mBitsPerChannel / 8 * outputAudioDes.mChannelsPerFrame; // bytes per frame (bits per sample / 8 * channels)

outputAudioDes.mBytesPerPacket = outputAudioDes.mBytesPerFrame * outputAudioDes.mFramesPerPacket; // bytes per packet (bytes per frame * frames per packet)

outputAudioDes.mReserved = 0; // reserved, must be 0

// Input format: AAC

AudioStreamBasicDescription inputAduioDes = {0};

inputAduioDes.mSampleRate = (Float64)self.sampleRate;

inputAduioDes.mFormatID = kAudioFormatMPEG4AAC;

inputAduioDes.mFormatFlags = kMPEG4Object_AAC_LC;

inputAduioDes.mFramesPerPacket = 1024;

inputAduioDes.mChannelsPerFrame = (UInt32)self.channelCount;

// Let AudioFormat fill in the remaining fields of the input (AAC) description

UInt32 inDesSize = sizeof(inputAduioDes);

AudioFormatGetProperty(kAudioFormatProperty_FormatInfo, 0, NULL, &inDesSize, &inputAduioDes);

OSStatus status = AudioConverterNew(&inputAduioDes, &outputAudioDes, &_audioDecodeConverter);

if (status != noErr) {

NSLog(@"Error: failed to create the hardware AAC decoder, status = %d", (int)status);

return;

}

}

// Decoder input callback

static OSStatus AudioDecoderConverterComplexInputDataProc( AudioConverterRef inAudioConverter, UInt32 *ioNumberDataPackets, AudioBufferList *ioData, AudioStreamPacketDescription **outDataPacketDescription, void *inUserData) {

CCAudioUserData *audioDecoder = (CCAudioUserData *)(inUserData);

if (audioDecoder->size <= 0) {

*ioNumberDataPackets = 0;

return -1;

}

// Supply exactly one AAC packet and describe it

*ioNumberDataPackets = 1;

if (outDataPacketDescription) {

*outDataPacketDescription = &audioDecoder->packetDesc;

(*outDataPacketDescription)[0].mStartOffset = 0;

(*outDataPacketDescription)[0].mDataByteSize = audioDecoder->size;

(*outDataPacketDescription)[0].mVariableFramesInPacket = 0;

}

ioData->mBuffers[0].mData = audioDecoder->data;

ioData->mBuffers[0].mDataByteSize = audioDecoder->size;

ioData->mBuffers[0].mNumberChannels = audioDecoder->channelCount;

return noErr;

}

- (void)decodeAudioAACData:(NSData *)aacData {

if (!_audioDecodeConverter) {

[self setupEncoder];

}

dispatch_async(self.decodeQueue, ^{

// Wrap the AAC packet so it can be passed to the decoder input callback

CCAudioUserData userData = {0};

userData.channelCount = (UInt32)self.channelCount;

userData.data = (char *)[aacData bytes];

userData.size = (UInt32)aacData.length;

userData.packetDesc.mDataByteSize = (UInt32)aacData.length;

userData.packetDesc.mStartOffset = 0;

userData.packetDesc.mVariableFramesInPacket = 0;

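// Output buffer size and capacity in packets: one AAC packet decodes to 1024 PCM frames of 2 bytes per channel

UInt32 pcmBufferSize = (UInt32)(2048 * self.channelCount);

UInt32 pcmDataPacketSize = 1024;

// Temporary PCM buffer

uint8_t *pcmBuffer = malloc(pcmBufferSize);

memset(pcmBuffer, 0, pcmBufferSize);

// Output buffer list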

AudioBufferList outAudioBufferList = {0};

outAudioBufferList.mNumberBuffers = 1;

outAudioBufferList.mBuffers[0].mNumberChannels = (uint32_t)self.channelCount;

outAudioBufferList.mBuffers[0].mDataByteSize = (UInt32)pcmBufferSize;

outAudioBufferList.mBuffers[0].mData = pcmBuffer;

// Output packet description

AudioStreamPacketDescription outputPacketDesc = {0};

// Run the converter: it pulls AAC through the input callback and writes PCM into outAudioBufferList

OSStatus status = AudioConverterFillComplexBuffer(self->_audioDecodeConverter, AudioDecoderConverterComplexInputDataProc, &userData, &pcmDataPacketSize, &outAudioBufferList, &outputPacketDesc);

if (status != noErr) {

NSLog(@"Error: AAC Decoder error, status=%d",(int)status);

// Release the temporary buffer before bailing out

free(pcmBuffer);

return;

}

// If any PCM data was produced

if (outAudioBufferList.mBuffers[0].mDataByteSize > 0) {

NSData *rawData = [NSData dataWithBytes:outAudioBufferList.mBuffers[0].mData length:outAudioBufferList.mBuffers[0].mDataByteSize];

dispatch_async(self.decodeCallbackQueue, ^{

// Process the decoded PCM data here

NSLog(@"%@",rawData);

});

}

free(pcmBuffer);

});

}

#pragma mark - AVCaptureAudioDataOutputSampleBufferDelegate

- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection{

if (connection == self.audioConnettion) {

[self setAudioSampleBuffer:sampleBuffer];

}

}

#pragma mark - Teardown

- (void)dealloc {

if (_audioConverter) {

AudioConverterDispose(_audioConverter);

_audioConverter = NULL;

}

if (_audioDecodeConverter) {

AudioConverterDispose(_audioDecodeConverter);

_audioDecodeConverter = NULL;

}

}

@end