前言

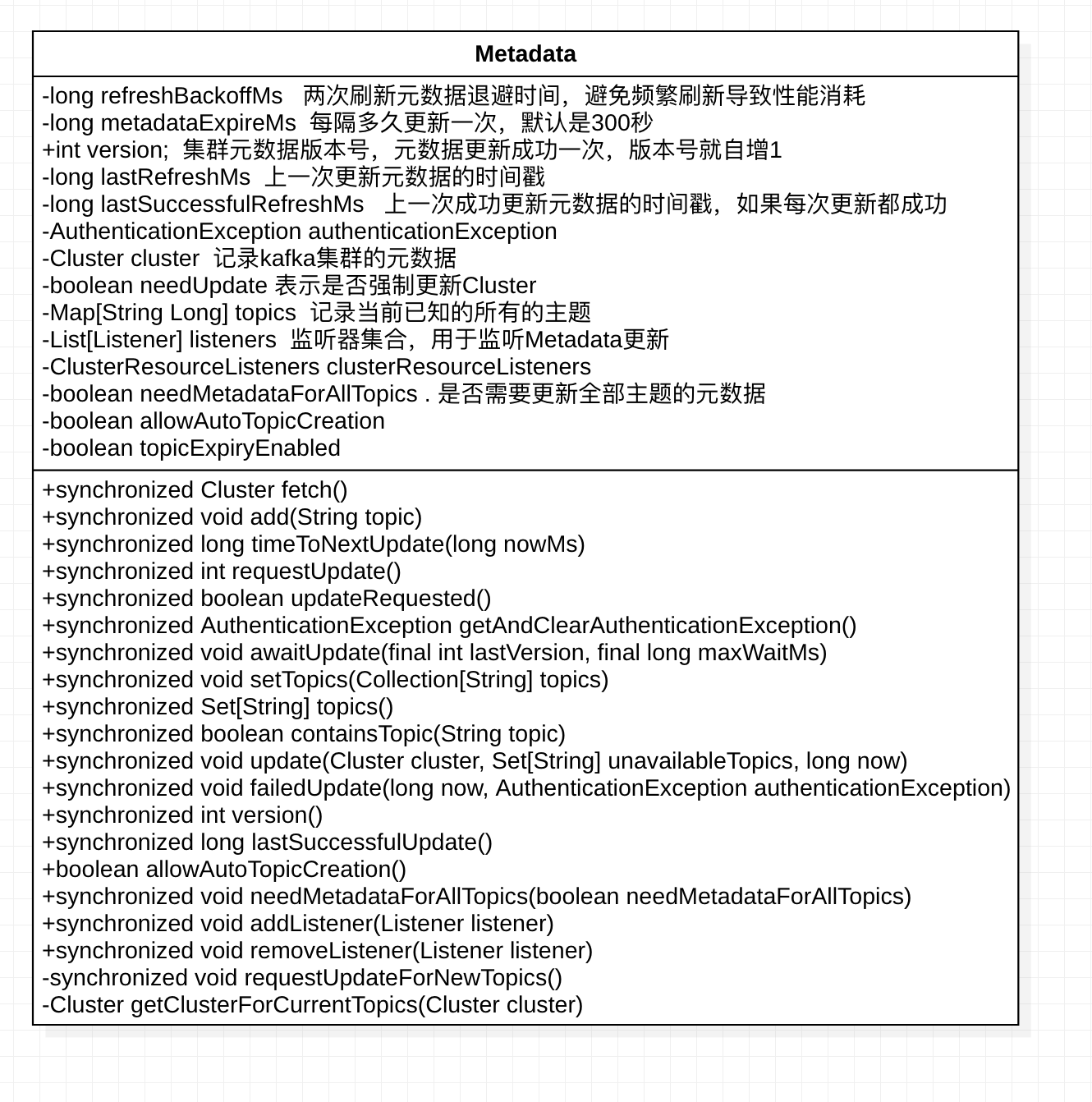

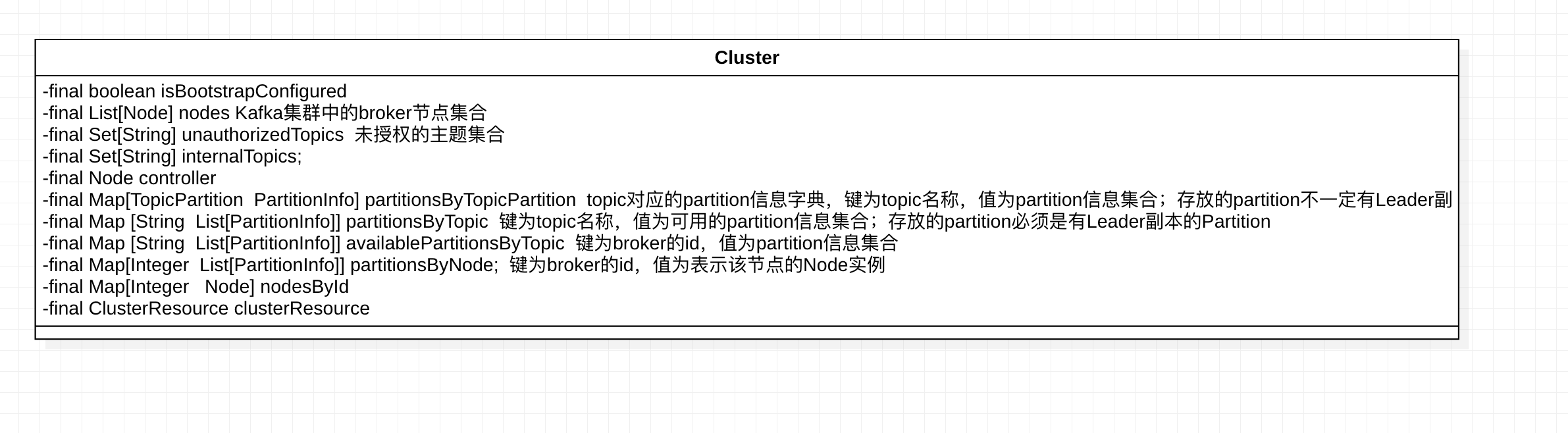

kafka客户端通过CLuster类来封装整个kafka集群的信息,Metadata类可以认为是Cluster类的上一层封装,主要功能是集群信息更新的管理。本文主要介绍3个部分的内容:MetaData是什么,MetaData如何更新数据的流程,MetaData更新触发的场景

MetaData是什么

MetaData的类的介绍

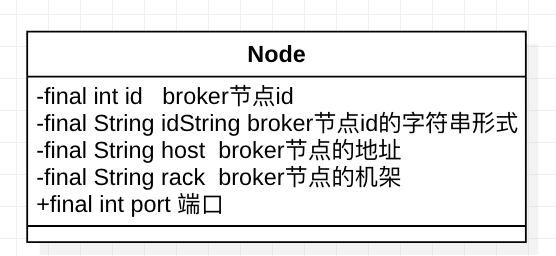

- Node: broker节点的相关的信息:

- host,port等相关信息,用于socket的连接

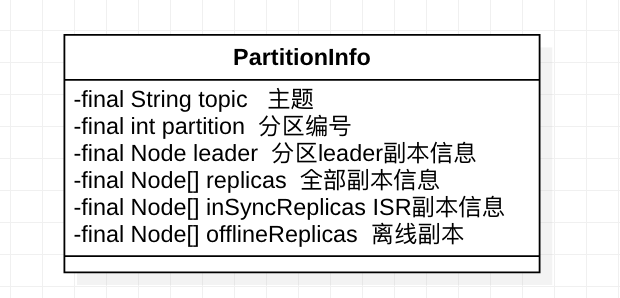

- PartitionInfo: 分区信息

- topic名称,partition 分区序号

- leader: 当前分区的leader,唯一进行通信的节点

- inSyncReplicas: ISR的节点(follower角色)

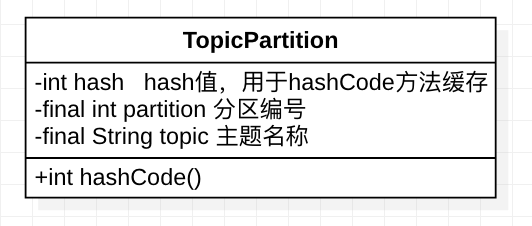

- TopicPartition: 每个topic和每个分区组成的唯一索引。代表一个分区标识

从上面的映射关系,可以看到,kafka是以分区为最小管理粒度,然后分区中存在一个Leader负责交互。Cluster代表整个kafka集群的一个实体类,MetaData的角色相当于Cluster的在客户端的维护者

MetaData如何进行更新

在讲解Producer的时候已经描述过,每个Producer新建的时候,都会创建一个新的metaData,在第一次调用doSend()时会存在一个阻塞等待更新的函数:

private Future<RecordMetadata> doSend(ProducerRecord<K, V> record, Callback callback) {

...

// first make sure the metadata for the topic is available

ClusterAndWaitTime clusterAndWaitTime = waitOnMetadata(record.topic(), record.partition(), maxBlockTimeMs);

long remainingWaitMs = Math.max(0, maxBlockTimeMs - clusterAndWaitTime.waitedOnMetadataMs);

Cluster cluster = clusterAndWaitTime.cluster;

...

}

来看一下waitOnMetadata方法:

//等待更新metaData

private ClusterAndWaitTime waitOnMetadata(String topic, Integer partition, long maxWaitMs) throws InterruptedException {

//如果metadata不存在当前topic的元数据,会触发一次强制刷新,metaData中的needUpdate置为true

metadata.add(topic);

Cluster cluster = metadata.fetch();

Integer partitionsCount = cluster.partitionCountForTopic(topic);

// Return cached metadata if we have it, and if the record's partition is either undefined

// or within the known partition range

if (partitionsCount != null && (partition == null || partition < partitionsCount))

return new ClusterAndWaitTime(cluster, 0);

long begin = time.milliseconds();

long remainingWaitMs = maxWaitMs;

long elapsed;

// Issue metadata requests until we have metadata for the topic or maxWaitTimeMs is exceeded.

// In case we already have cached metadata for the topic, but the requested partition is greater

// than expected, issue an update request only once. This is necessary in case the metadata

// is stale and the number of partitions for this topic has increased in the meantime.

do {

log.trace("Requesting metadata update for topic {}.", topic);

metadata.add(topic);

//该标记位用于保存当前的版本号,如果大于这个版本,表示更新成功了

int version = metadata.requestUpdate();

//唤醒sender线程,立即执行更新命令

sender.wakeup();

try {

// 阻塞等待更新成功

metadata.awaitUpdate(version, remainingWaitMs);

} catch (TimeoutException ex) {

throw new TimeoutException("Failed to update metadata after " + maxWaitMs + " ms.");

}

//到这里,应该是当前cluster更新成功了

cluster = metadata.fetch();

elapsed = time.milliseconds() - begin;

if (elapsed >= maxWaitMs)

throw new TimeoutException("Failed to update metadata after " + maxWaitMs + " ms.");

if (cluster.unauthorizedTopics().contains(topic))

throw new TopicAuthorizationException(topic);

remainingWaitMs = maxWaitMs - elapsed;

partitionsCount = cluster.partitionCountForTopic(topic);

//一直等到topic信息更新成功,或者超时抛出异常

} while (partitionsCount == null);

// 这里表示前面的消息投递分配策略存在问题

if (partition != null && partition >= partitionsCount) {

throw new KafkaException(

String.format("Invalid partition given with record: %d is not in the range [0...%d).", partition, partitionsCount));

}

return new ClusterAndWaitTime(cluster, elapsed);

}

从上面的代码中,可以看到执行真正更新流程的是sender类,顾名思义,他的作用就是不断的触发消息的发送,而真正执行发送的是NetworkClient。NetworkClient负责实际元数据更新命令的发送和响应处理,来看一下 。NetworkClient.poll(pollTimeout, now)方法

@Override

public List<ClientResponse> poll(long timeout, long now) {

...

long metadataTimeout = metadataUpdater.maybeUpdate(now);

try {

this.selector.poll(Utils.min(timeout, metadataTimeout, requestTimeoutMs));

} catch (IOException e) {

log.error("Unexpected error during I/O", e);

}

// process completed actions

long updatedNow = this.time.milliseconds();

List<ClientResponse> responses = new ArrayList<>();

handleCompletedSends(responses, updatedNow);

handleCompletedReceives(responses, updatedNow);

handleDisconnections(responses, updatedNow);

handleConnections();

handleInitiateApiVersionRequests(updatedNow);

handleTimedOutRequests(responses, updatedNow);

completeResponses(responses);

return responses;

- metadataUpdater.maybeUpdate(now) 该方法主要是判断是否需要更新元数据,如果需要,则发送更新命令,然后返回最大等待时间

- handleCompletedReceives(responses, updatedNow) 在这个方法中处理更新命令的返回

来看一下maybeUpdate

@Override

public long maybeUpdate(long now) {

// 这里表示定时刷新的策略下还需要等多久

long timeToNextMetadataUpdate = metadata.timeToNextUpdate(now);

//如果已经在更新中,等待结果时,则返回请求超时时间

long waitForMetadataFetch = this.metadataFetchInProgress ? requestTimeoutMs : 0;

//取两个最大值

long metadataTimeout = Math.max(timeToNextMetadataUpdate, waitForMetadataFetch);

if (metadataTimeout > 0) {

return metadataTimeout;

}

//到这里表示需要立即更新,取最空闲的节点

Node node = leastLoadedNode(now);

if (node == null) {

log.debug("Give up sending metadata request since no node is available");

return reconnectBackoffMs;

}

//执行更新操作

return maybeUpdate(now, node);

}

private long maybeUpdate(long now, Node node) {

String nodeConnectionId = node.idString();

//判断当前node的状态

if (canSendRequest(nodeConnectionId)) {

//标记正在处理,防止并发请求更新

this.metadataFetchInProgress = true;

//这里是构建不同的请求帧

MetadataRequest.Builder metadataRequest;

if (metadata.needMetadataForAllTopics())

metadataRequest = MetadataRequest.Builder.allTopics();

else

metadataRequest = new MetadataRequest.Builder(new ArrayList<>(metadata.topics()),

metadata.allowAutoTopicCreation());

log.debug("Sending metadata request {} to node {}", metadataRequest, node);

//发送具体爹请求信息

sendInternalMetadataRequest(metadataRequest, nodeConnectionId, now);

返回请求超时间

return requestTimeoutMs;

}

//判断是否Node正在连接

if (isAnyNodeConnecting()) {

//重连超时时间

return reconnectBackoffMs;

}

//如果存在可用的Node,则尝试初始化连接

if (connectionStates.canConnect(nodeConnectionId, now)) {

initiateConnect(node, now);

return reconnectBackoffMs;

}

//到这里就表示game off,阻塞等待有新的节点使用

return Long.MAX_VALUE;

}

上面的更新中包含了所有的情况:

- 如果 node 可以发送请求,则直接发送请求;

- 如果该 node 正在建立连接,则直接返回重新连接超时时间,等待更新成功;

- 如果该 node 还没建立连接,则向 broker 初始化链接。 而 KafkaProducer 线程之前是一直阻塞在两个 while 循环中,直到 metadata 更新

从上述的代码中可以看出:整个流程会一直重试。知道Cluster数据更新成功

- sender 线程第一次调用 poll() 方法时,初始化与 node 的连接;

- sender 线程第二次调用 poll() 方法时,发送 Metadata 请求;

- sender阻塞等待一定时间,如果有响应返回,则获取 metadataResponse,并更新 metadata

如果cluster更新成功后,producer就不会被阻塞,可以顺畅工作了,NetworkClient 接收到 Server 端对 Metadata 请求的响应后,更新 Metadata 信息。

private void handleCompletedReceives(List<ClientResponse> responses, long now) {

for (NetworkReceive receive : this.selector.completedReceives()) {

...

//如果是MetadataResponse类的响应,交由metadataUpdater来处理

if (req.isInternalRequest && body instanceof MetadataResponse)

metadataUpdater.handleCompletedMetadataResponse(req.header, now, (MetadataResponse) body);

...

}

}

那么继续看metadataUpdater

@Override

public void handleCompletedMetadataResponse(RequestHeader requestHeader, long now, MetadataResponse response) {

this.metadataFetchInProgress = false;

//从响应中返回集群信息

Cluster cluster = response.cluster();

Map<String, Errors> errors = response.errors();

if (cluster.nodes().size() > 0) {

//然后更新到metadata中

this.metadata.update(cluster, response.unavailableTopics(), now);

} else {

log.trace("Ignoring empty metadata response with correlation id {}.", requestHeader.correlationId());

this.metadata.failedUpdate(now, null);

}

}

具体response如何解析,有兴趣的同学可以自行看一下。然后看一下metadata的成功处理和失败处理

public synchronized void update(Cluster cluster, Set<String> unavailableTopics, long now) {

Objects.requireNonNull(cluster, "cluster should not be null");

//更新成功更新时间

this.needUpdate = false;

this.lastRefreshMs = now;

this.lastSuccessfulRefreshMs = now;

//增加版本号

this.version += 1;

//如果topic存在过期刷新的配置,则刷新时间

if (topicExpiryEnabled) {

for (Iterator<Map.Entry<String, Long>> it = topics.entrySet().iterator(); it.hasNext(); ) {

Map.Entry<String, Long> entry = it.next();

long expireMs = entry.getValue();

if (expireMs == TOPIC_EXPIRY_NEEDS_UPDATE)

entry.setValue(now + TOPIC_EXPIRY_MS);

else if (expireMs <= now) {

it.remove();

log.debug("Removing unused topic {} from the metadata list, expiryMs {} now {}", entry.getKey(), expireMs, now);

}

}

}

//通知事件监听者

for (Listener listener: listeners)

listener.onMetadataUpdate(cluster, unavailableTopics);

String previousClusterId = cluster.clusterResource().clusterId();

//全局更新和局部更新的差别

if (this.needMetadataForAllTopics) {

this.needUpdate = false;

this.cluster = getClusterForCurrentTopics(cluster);

} else {

this.cluster = cluster;

}

if (!cluster.isBootstrapConfigured()) {

String clusterId = cluster.clusterResource().clusterId();

if (clusterId == null ? previousClusterId != null : !clusterId.equals(previousClusterId))

log.info("Cluster ID: {}", cluster.clusterResource().clusterId());

clusterResourceListeners.onUpdate(cluster.clusterResource());

}

//唤醒所有的wait

notifyAll();

log.debug("Updated cluster metadata version {} to {}", this.version, this.cluster);

}

public synchronized void failedUpdate(long now, AuthenticationException authenticationException) {

//只刷新最后更新的时间

this.lastRefreshMs = now;

this.authenticationException = authenticationException;

if (authenticationException != null)

this.notifyAll();

}

到这里,更新就结束了

MetaData更新触发的场景

metaData的默认机制是定时更新, 可以看一下metadata.timeToNextUpdate(now)的实现

public synchronized long timeToNextUpdate(long nowMs) {

long timeToExpire = needUpdate ? 0 : Math.max(this.lastSuccessfulRefreshMs + this.metadataExpireMs - nowMs, 0);

long timeToAllowUpdate = this.lastRefreshMs + this.refreshBackoffMs - nowMs;

return Math.max(timeToExpire, timeToAllowUpdate);

}

- 如果needUpdate为true,则表示触发立即刷新

- metadataExpireMs 表示metadata信息有效的周期,由配置项

metadataExpireMs决定。默认是5分钟 - refreshBackoffMs 失败重试时间,防止在某些场景下,做无谓的尝试。

来看一下强制进行刷新的场景:

- 在NetworkClient调用poll存在超时请求时:handleTimedOutRequests

- 在NetworkClient调用poll处理断开连接时:handleDisconnections

- 初始化一个Node连接时,会进行强制刷新:

- 发送消息时,如果无法找到 partition 的 leader;

- 处理 Producer 响应时,如果返回关于 Metadata 过期的异常(InvalidMetadataException) 和 topic/partition不存在时(UnknownTopicOrPartitionException)

- 发送消息时,如果无法找到 partition 的 leader