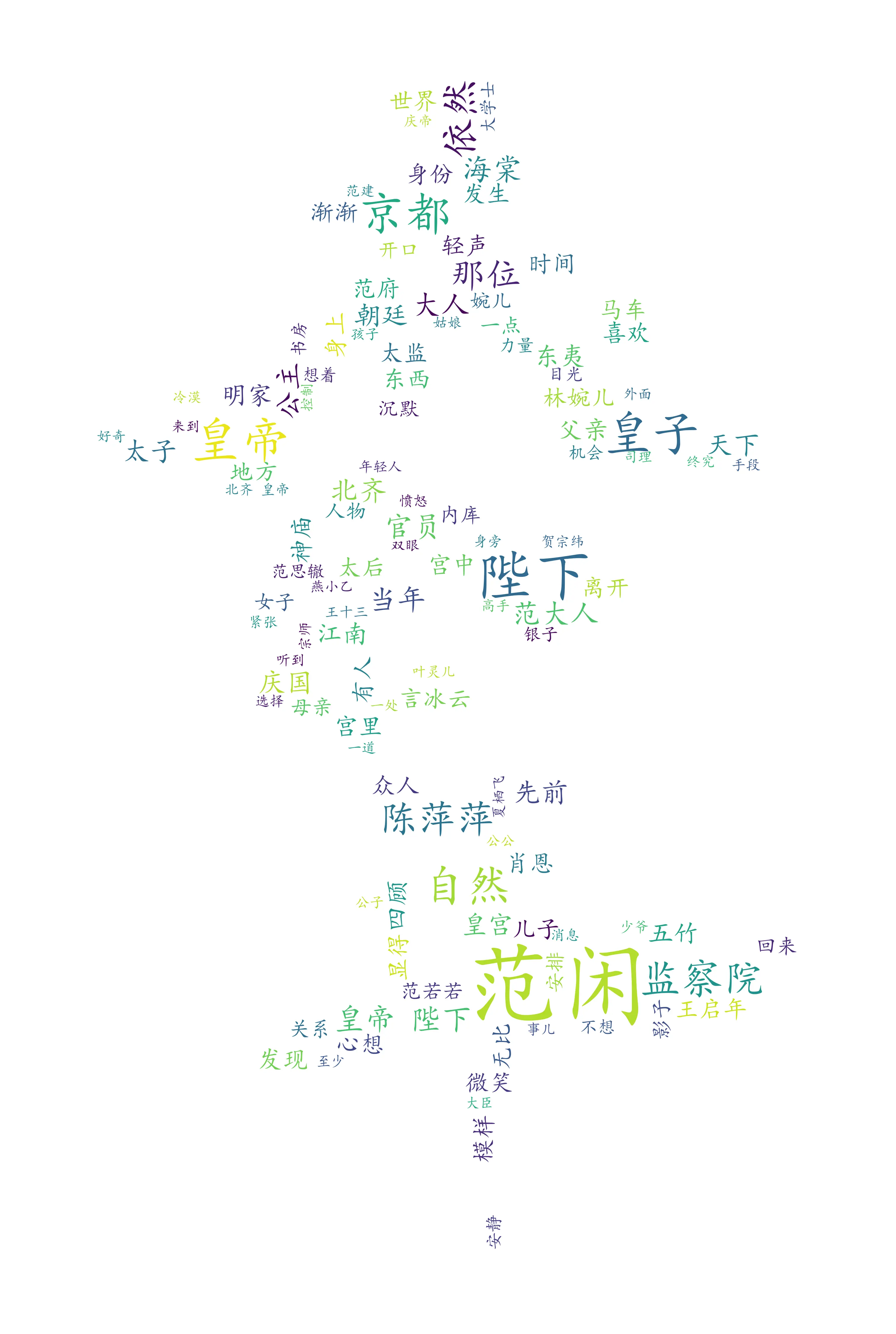

I. Overview

Use jieba for Chinese word segmentation and wordcloud to generate a word cloud from a novel (here, 庆余年).

II. Result

III. Procedure

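```python
import os

import jieba
import numpy
import PIL.Image as Image
from wordcloud import WordCloud

# Directory containing this script; input and output files are resolved relative to it.
cur_path = os.path.dirname(os.path.abspath(__file__))

# Shape mask: WordCloud draws words only where the mask is not pure white (255).
mask_pic = numpy.array(Image.open(os.path.join(cur_path, 'aa.jpg')))
```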
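If `aa.jpg` is not at hand, a mask can also be built directly with NumPy. The sketch below uses a hypothetical helper, `circle_mask` (not part of wordcloud), that relies on the documented mask rule: entries equal to 255 are masked out, and words are placed everywhere else. Here that means words only fill the inside of a circle. The same rule explains a pitfall with JPEG masks: compression can leave near-white values such as 254 in the background, and those pixels are not masked.

```python
import numpy as np


def circle_mask(size=300, radius=130):
    """Hypothetical helper: a square mask with a drawable circle in the middle."""
    # Row and column coordinates as broadcastable (size, 1) and (1, size) arrays.
    y, x = np.ogrid[:size, :size]
    center = size / 2
    outside = (x - center) ** 2 + (y - center) ** 2 > radius ** 2
    # Pixels outside the circle become 255 (masked out); inside stays 0 (drawable).
    return (255 * outside).astype(np.uint8)


mask = circle_mask()
print(mask.shape, mask[0, 0], mask[150, 150])
```

To try it, pass `mask=circle_mask()` to `WordCloud` in place of the image-based `mask_pic`; the canvas then takes the 300×300 shape of the array.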

1. Word segmentation function

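```python
def myjieba(txt):
    # Register character names as dictionary words so jieba keeps them whole
    # instead of splitting them into shorter pieces.
    jieba.add_word('范若若')
    jieba.add_word('林婉儿')
    jieba.add_word('长公主')
    # jieba.cut returns a generator of tokens. Joining them with spaces lets
    # WordCloud, which splits its input into words with a regular expression,
    # see each jieba token as a separate word.
    wordlist = jieba.cut(txt)
    return " ".join(wordlist)
```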
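Why `add_word` matters can be seen with a deliberately simplified segmenter. The sketch below is a toy forward maximum matching segmenter, not jieba's algorithm (jieba builds a DAG of dictionary words, picks the most probable path with dynamic programming, and uses an HMM for unknown words). `fmm_segment` and the tiny dictionary are hypothetical; they only illustrate the general effect that a name missing from the dictionary can be broken apart, while a registered name stays whole.

```python
def fmm_segment(text, dictionary):
    """Toy forward maximum matching: greedily take the longest dictionary word."""
    max_len = max((len(w) for w in dictionary), default=1)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first and shrink until a dictionary hit;
        # a single character is always accepted as a fallback.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in dictionary:
                tokens.append(piece)
                i += size
                break
    return tokens


base = {"林婉儿", "长公主"}
print(fmm_segment("范若若和林婉儿", base))              # name split into characters
print(fmm_segment("范若若和林婉儿", base | {"范若若"}))  # name kept whole
```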

2. Load the Chinese stopword list (to filter out uninformative words)

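```python
def get_stopwords():
    dir_name_path = os.path.join(cur_path, '停用词库.txt')
    # One stopword per line. strip() also removes a trailing '\r' left by
    # Windows line endings, and blank lines are skipped.
    with open(dir_name_path, 'r', encoding='utf-8') as f:
        stopwords = {line.strip() for line in f if line.strip()}
    return stopwords
```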
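The stopword file is plain text with one word per line. The hypothetical `parse_stopwords` below takes a string instead of a file so it can be tried in isolation. It shows why each line should be stripped: splitting on `'\n'` alone keeps a trailing `'\r'` on every word of a file saved with Windows line endings (so `'的\r'` never matches the token `'的'`), and blank lines become empty-string "stopwords". `remove_stopwords` is likewise a hypothetical illustration of the filtering step.

```python
def parse_stopwords(text):
    """Return the set of stopwords in `text`, one word per line."""
    # splitlines() handles '\n', '\r\n' and '\r'; strip() drops stray spaces.
    return {line.strip() for line in text.splitlines() if line.strip()}


def remove_stopwords(tokens, stopwords):
    """Keep only tokens that are not stopwords, preserving their order."""
    return [t for t in tokens if t not in stopwords]


raw = "的\r\n了\r\n\r\n一个\r\n"
naive = raw.split("\n")        # entries keep '\r', plus '\r' and '' for blank/end lines
clean = parse_stopwords(raw)   # only the three real words
print(remove_stopwords(["范闲", "的", "一个", "林婉儿"], clean))
```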

3. Configure the word cloud and generate the image

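```python
with open(os.path.join(cur_path, '庆余年.txt'), encoding='utf-8') as fp:
    txt = fp.read()

txt = myjieba(txt)

wordcloud = WordCloud(
    # A font with Chinese glyphs is required, otherwise words render as boxes.
    # Raw string so the Windows backslashes are not read as escape sequences.
    font_path=r'C:\Windows\Fonts\simkai.TTF',
    background_color='white',
    max_words=120,         # keep at most 120 words
    max_font_size=66,      # font size of the largest word
    scale=6,               # output image is 6x the mask size
    stopwords=get_stopwords(),
    mask=mask_pic,         # canvas shape comes from the mask
).generate(txt)

image = wordcloud.to_image()
image.show()

# os.path.join inserts the separator between directory and file name.
wordcloud.to_file(os.path.join(cur_path, "temp.png"))
```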
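To inspect which words will dominate the picture before rendering, the counts can be computed directly. The sketch below uses a hypothetical helper, `top_words`, that only approximates what WordCloud does internally: it splits the space-joined output of `myjieba`, drops stopwords and (as an assumption of this sketch) single-character tokens, and keeps the `max_words` most frequent words. WordCloud's own processing differs in details; for example, with its default `collocations=True` it also counts two-word phrases.

```python
from collections import Counter


def top_words(segmented_text, stopwords, max_words=120, min_len=2):
    """Count words in space-separated text and return the most frequent ones.

    min_len=2 is an assumption: it discards single characters, which in
    Chinese are mostly particles such as 的 or 了.
    """
    counts = Counter(
        token
        for token in segmented_text.split()
        if len(token) >= min_len and token not in stopwords
    )
    # most_common returns (word, count) pairs sorted by descending count.
    return dict(counts.most_common(max_words))


sample = "范闲 的 范闲 林婉儿 了 范若若 林婉儿 范闲 一个"
print(top_words(sample, stopwords={"一个"}, max_words=2))
# {'范闲': 3, '林婉儿': 2}
```

A dict like this can be passed to `WordCloud.generate_from_frequencies` instead of calling `generate`, which gives full control over how the words are counted.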