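In the previous article we briefly went through the components of k8s and saw that pods are started by the kubelet running on each node. This time we read the source code (version 1.10) to see how the kubelet does its main work.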

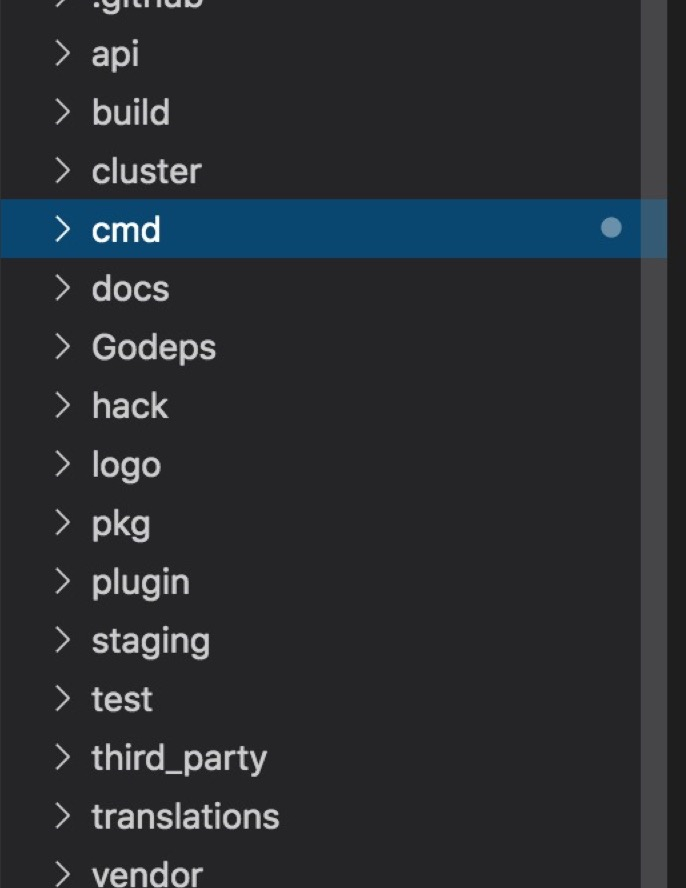

The k8s code base is laid out fairly clearly, as the figure above shows.

We start with cmd/kubelet/kubelet.go, which holds the kubelet's main entry point:

    func main() {
    	rand.Seed(time.Now().UnixNano())

    	command := app.NewKubeletCommand()
    	logs.InitLogs()
    	defer logs.FlushLogs()

    	if err := command.Execute(); err != nil {
    		os.Exit(1)
    	}
    }

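command.Execute() eventually reaches RunKubelet (in cmd/kubelet/app/server.go), and its implementation is what we care about here: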

    func RunKubelet(kubeServer *options.KubeletServer, kubeDeps *kubelet.Dependencies, runOnce bool) error {
    	hostname, err := nodeutil.GetHostname(kubeServer.HostnameOverride)
    	if err != nil {
    		return err
    	}
    	// Query the cloud provider for our node name, default to hostname if kubeDeps.Cloud == nil
    	nodeName, err := getNodeName(kubeDeps.Cloud, hostname)
    	if err != nil {
    		return err
    	}
    	// Setup event recorder if required.
    	makeEventRecorder(kubeDeps, nodeName)

    	capabilities.Initialize(capabilities.Capabilities{
    		AllowPrivileged: true,
    	})

    	credentialprovider.SetPreferredDockercfgPath(kubeServer.RootDirectory)
    	klog.V(2).Infof("Using root directory: %v", kubeServer.RootDirectory)

    	if kubeDeps.OSInterface == nil {
    		kubeDeps.OSInterface = kubecontainer.RealOS{}
    	}

    	k, err := createAndInitKubelet(&kubeServer.KubeletConfiguration,
    		kubeDeps,
    		&kubeServer.ContainerRuntimeOptions,
    		kubeServer.ContainerRuntime,
    		kubeServer.RuntimeCgroups,
    		kubeServer.HostnameOverride,
    		kubeServer.NodeIP,
    		kubeServer.ProviderID,
    		kubeServer.CloudProvider,
    		kubeServer.CertDirectory,
    		kubeServer.RootDirectory,
    		kubeServer.RegisterNode,
    		kubeServer.RegisterWithTaints,
    		kubeServer.AllowedUnsafeSysctls,
    		kubeServer.RemoteRuntimeEndpoint,
    		kubeServer.RemoteImageEndpoint,
    		kubeServer.ExperimentalMounterPath,
    		kubeServer.ExperimentalKernelMemcgNotification,
    		kubeServer.ExperimentalCheckNodeCapabilitiesBeforeMount,
    		kubeServer.ExperimentalNodeAllocatableIgnoreEvictionThreshold,
    		kubeServer.MinimumGCAge,
    		kubeServer.MaxPerPodContainerCount,
    		kubeServer.MaxContainerCount,
    		kubeServer.MasterServiceNamespace,
    		kubeServer.RegisterSchedulable,
    		kubeServer.NonMasqueradeCIDR,
    		kubeServer.KeepTerminatedPodVolumes,
    		kubeServer.NodeLabels,
    		kubeServer.SeccompProfileRoot,
    		kubeServer.BootstrapCheckpointPath,
    		kubeServer.NodeStatusMaxImages)
    	if err != nil {
    		return fmt.Errorf("failed to create kubelet: %v", err)
    	}

    	// NewMainKubelet should have set up a pod source config if one didn't exist
    	// when the builder was run. This is just a precaution.
    	if kubeDeps.PodConfig == nil {
    		return fmt.Errorf("failed to create kubelet, pod source config was nil")
    	}
    	podCfg := kubeDeps.PodConfig

    	rlimit.RlimitNumFiles(uint64(kubeServer.MaxOpenFiles))

    	// process pods and exit.
    	if runOnce {
    		if _, err := k.RunOnce(podCfg.Updates()); err != nil {
    			return fmt.Errorf("runonce failed: %v", err)
    		}
    		klog.Info("Started kubelet as runonce")
    	} else {
    		startKubelet(k, podCfg, &kubeServer.KubeletConfiguration, kubeDeps, kubeServer.EnableCAdvisorJSONEndpoints, kubeServer.EnableServer)
    		klog.Info("Started kubelet")
    	}
    	return nil
    }
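RunKubelet hands podCfg.Updates() to the kubelet as a single channel, even though pods can come from several sources. The sketch below illustrates that fan-in idea only. The types and helpers (PodUpdate, mergeSources, emit) are hypothetical stand-ins written for this example, not the kubelet's actual PodConfig code.

```go
package main

import (
	"fmt"
	"sync"
)

// PodUpdate is a simplified, hypothetical stand-in for kubetypes.PodUpdate.
type PodUpdate struct {
	Op     string   // e.g. "ADD", "UPDATE", "REMOVE"
	Source string   // which source produced the update
	Pods   []string // pod names only, for brevity
}

// mergeSources fans several per-source channels into one channel.
// The returned channel is closed once every source channel is closed.
func mergeSources(sources ...<-chan PodUpdate) <-chan PodUpdate {
	merged := make(chan PodUpdate)
	var wg sync.WaitGroup
	for _, src := range sources {
		wg.Add(1)
		go func(src <-chan PodUpdate) {
			defer wg.Done()
			for u := range src {
				merged <- u
			}
		}(src)
	}
	go func() {
		wg.Wait()
		close(merged)
	}()
	return merged
}

// emit returns a pre-filled, already closed channel that plays one source.
func emit(updates ...PodUpdate) <-chan PodUpdate {
	ch := make(chan PodUpdate, len(updates))
	for _, u := range updates {
		ch <- u
	}
	close(ch)
	return ch
}

func main() {
	file := emit(PodUpdate{Op: "ADD", Source: "file", Pods: []string{"static-web"}})
	api := emit(
		PodUpdate{Op: "ADD", Source: "api", Pods: []string{"nginx"}},
		PodUpdate{Op: "UPDATE", Source: "api", Pods: []string{"nginx"}},
	)
	// The consumer sees one stream; ordering across sources is not guaranteed.
	for u := range mergeSources(file, api) {
		fmt.Printf("%s from %s: %v\n", u.Op, u.Source, u.Pods)
	}
}
```

Each update carries its Source, so the consumer can still tell the origins apart after merging, which is what the sync loop relies on when it marks a source as ready.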

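- createAndInitKubelet: this function initializes a kubelet instance. The makePodSourceConfig function called along the way (inside NewMainKubelet, which the comment in the code above also mentions) deserves attention: it ends up calling NewListWatchFromClient (a later article will cover the list-watch implementation) and then watches the pod updates coming from the apiserver.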

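- startKubelet: its most important call is k.Run(podCfg.Updates()). It also starts a few HTTP servers, which we skip for now. Let's look at k.Run first; its implementation is as follows.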

    // Run starts the kubelet reacting to config updates
    func (kl *Kubelet) Run(updates <-chan kubetypes.PodUpdate) {
    	if kl.logServer == nil {
    		kl.logServer = http.StripPrefix("/logs/", http.FileServer(http.Dir("/var/log/")))
    	}
    	if kl.kubeClient == nil {
    		klog.Warning("No api server defined - no node status update will be sent.")
    	}

    	// Start the cloud provider sync manager
    	if kl.cloudResourceSyncManager != nil {
    		go kl.cloudResourceSyncManager.Run(wait.NeverStop)
    	}

    	if err := kl.initializeModules(); err != nil {
    		kl.recorder.Eventf(kl.nodeRef, v1.EventTypeWarning, events.KubeletSetupFailed, err.Error())
    		klog.Fatal(err)
    	}

    	// Start volume manager
    	go kl.volumeManager.Run(kl.sourcesReady, wait.NeverStop)

    	if kl.kubeClient != nil {
    		// Start syncing node status immediately, this may set up things the runtime needs to run.
    		go wait.Until(kl.syncNodeStatus, kl.nodeStatusUpdateFrequency, wait.NeverStop)
    		go kl.fastStatusUpdateOnce()

    		// start syncing lease
    		if utilfeature.DefaultFeatureGate.Enabled(features.NodeLease) {
    			go kl.nodeLeaseController.Run(wait.NeverStop)
    		}
    	}
    	go wait.Until(kl.updateRuntimeUp, 5*time.Second, wait.NeverStop)

    	// Start loop to sync iptables util rules
    	if kl.makeIPTablesUtilChains {
    		go wait.Until(kl.syncNetworkUtil, 1*time.Minute, wait.NeverStop)
    	}

    	// Start a goroutine responsible for killing pods (that are not properly
    	// handled by pod workers).
    	go wait.Until(kl.podKiller, 1*time.Second, wait.NeverStop)

    	// Start component sync loops.
    	kl.statusManager.Start()
    	kl.probeManager.Start()

    	// Start syncing RuntimeClasses if enabled.
    	if kl.runtimeClassManager != nil {
    		kl.runtimeClassManager.Start(wait.NeverStop)
    	}

    	// Start the pod lifecycle event generator.
    	kl.pleg.Start()
    	kl.syncLoop(updates, kl)
    }

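This method carries out the kubelet's main flow. It mainly includes the following:

- volumeManager: manages volumes;

- syncNodeStatus: periodically syncs the node status (every nodeStatusUpdateFrequency, and only when an apiserver client is configured);

- fastStatusUpdateOnce: checks the node indexer cache during startup and updates the related status as early as possible;

- nodeLeaseController: its Run method keeps the node lease in sync (only when the NodeLease feature gate is enabled);

- updateRuntimeUp: refreshes the runtime status every 5 seconds;

- syncNetworkUtil: syncs the iptables util rules every minute (only when makeIPTablesUtilChains is set);

- podKiller: kills pods that the pod workers did not handle properly;

- statusManager: manages pod status;

- probeManager: manages pod probes;

- pleg: the pod lifecycle event generator, whose events feed the sync loop;

- syncLoop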
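Several of the items above are started as `go wait.Until(f, period, wait.NeverStop)`. To illustrate the pattern, here is a minimal sketch of such a periodic loop built only on the standard library. The until function is a simplified stand-in written for this example, not the real wait.Until from apimachinery, and the counter merely plays the role of something like syncNodeStatus.

```go
package main

import (
	"fmt"
	"sync/atomic"
	"time"
)

// until calls f immediately and then again after each period,
// returning once stop is closed. Simplified stand-in for wait.Until.
func until(f func(), period time.Duration, stop <-chan struct{}) {
	for {
		// Check for a stop request before every run.
		select {
		case <-stop:
			return
		default:
		}
		f()
		// Sleep for one period, but wake up early if stop is closed.
		select {
		case <-stop:
			return
		case <-time.After(period):
		}
	}
}

func main() {
	var syncs int64
	stop := make(chan struct{})
	done := make(chan struct{})

	// Run the loop in its own goroutine, as Run does for each periodic task.
	go func() {
		defer close(done)
		until(func() { atomic.AddInt64(&syncs, 1) }, 10*time.Millisecond, stop)
	}()

	time.Sleep(55 * time.Millisecond)
	close(stop) // the kubelet passes wait.NeverStop, so its loops never end
	<-done
	fmt.Println("status syncs:", atomic.LoadInt64(&syncs))
}
```

Because every task gets its own goroutine and its own period, a slow runtime check cannot delay the node status sync, and vice versa.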

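syncLoop is the main loop here. It listens for PodUpdate data coming from the different sources (HTTP, file, apiserver), which all arrive on the updates channel, alongside PLEG events, periodic sync and housekeeping ticks, and liveness probe results. When it sees a change, it syncs the pod to the desired state. The main work is done by syncLoopIteration, shown below.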

    func (kl *Kubelet) syncLoopIteration(configCh <-chan kubetypes.PodUpdate, handler SyncHandler,
    	syncCh <-chan time.Time, housekeepingCh <-chan time.Time, plegCh <-chan *pleg.PodLifecycleEvent) bool {
    	select {
    	case u, open := <-configCh:
    		// Update from a config source; dispatch it to the right handler
    		// callback.
    		if !open {
    			klog.Errorf("Update channel is closed. Exiting the sync loop.")
    			return false
    		}

    		switch u.Op {
    		case kubetypes.ADD:
    			klog.V(2).Infof("SyncLoop (ADD, %q): %q", u.Source, format.Pods(u.Pods))
    			// After restarting, kubelet will get all existing pods through
    			// ADD as if they are new pods. These pods will then go through the
    			// admission process and *may* be rejected. This can be resolved
    			// once we have checkpointing.
    			handler.HandlePodAdditions(u.Pods)
    		case kubetypes.UPDATE:
    			klog.V(2).Infof("SyncLoop (UPDATE, %q): %q", u.Source, format.PodsWithDeletionTimestamps(u.Pods))
    			handler.HandlePodUpdates(u.Pods)
    		case kubetypes.REMOVE:
    			klog.V(2).Infof("SyncLoop (REMOVE, %q): %q", u.Source, format.Pods(u.Pods))
    			handler.HandlePodRemoves(u.Pods)
    		case kubetypes.RECONCILE:
    			klog.V(4).Infof("SyncLoop (RECONCILE, %q): %q", u.Source, format.Pods(u.Pods))
    			handler.HandlePodReconcile(u.Pods)
    		case kubetypes.DELETE:
    			klog.V(2).Infof("SyncLoop (DELETE, %q): %q", u.Source, format.Pods(u.Pods))
    			// DELETE is treated as a UPDATE because of graceful deletion.
    			handler.HandlePodUpdates(u.Pods)
    		case kubetypes.RESTORE:
    			klog.V(2).Infof("SyncLoop (RESTORE, %q): %q", u.Source, format.Pods(u.Pods))
    			// These are pods restored from the checkpoint. Treat them as new
    			// pods.
    			handler.HandlePodAdditions(u.Pods)
    		case kubetypes.SET:
    			// TODO: Do we want to support this?
    			klog.Errorf("Kubelet does not support snapshot update")
    		}

    		if u.Op != kubetypes.RESTORE {
    			// If the update type is RESTORE, it means that the update is from
    			// the pod checkpoints and may be incomplete. Do not mark the
    			// source as ready.

    			// Mark the source ready after receiving at least one update from the
    			// source. Once all the sources are marked ready, various cleanup
    			// routines will start reclaiming resources. It is important that this
    			// takes place only after kubelet calls the update handler to process
    			// the update to ensure the internal pod cache is up-to-date.
    			kl.sourcesReady.AddSource(u.Source)
    		}
    	case e := <-plegCh:
    		if isSyncPodWorthy(e) {
    			// PLEG event for a pod; sync it.
    			if pod, ok := kl.podManager.GetPodByUID(e.ID); ok {
    				klog.V(2).Infof("SyncLoop (PLEG): %q, event: %#v", format.Pod(pod), e)
    				handler.HandlePodSyncs([]*v1.Pod{pod})
    			} else {
    				// If the pod no longer exists, ignore the event.
    				klog.V(4).Infof("SyncLoop (PLEG): ignore irrelevant event: %#v", e)
    			}
    		}

    		if e.Type == pleg.ContainerDied {
    			if containerID, ok := e.Data.(string); ok {
    				kl.cleanUpContainersInPod(e.ID, containerID)
    			}
    		}
    	case <-syncCh:
    		// Sync pods waiting for sync
    		podsToSync := kl.getPodsToSync()
    		if len(podsToSync) == 0 {
    			break
    		}
    		klog.V(4).Infof("SyncLoop (SYNC): %d pods; %s", len(podsToSync), format.Pods(podsToSync))
    		handler.HandlePodSyncs(podsToSync)
    	case update := <-kl.livenessManager.Updates():
    		if update.Result == proberesults.Failure {
    			// The liveness manager detected a failure; sync the pod.

    			// We should not use the pod from livenessManager, because it is never updated after
    			// initialization.
    			pod, ok := kl.podManager.GetPodByUID(update.PodUID)
    			if !ok {
    				// If the pod no longer exists, ignore the update.
    				klog.V(4).Infof("SyncLoop (container unhealthy): ignore irrelevant update: %#v", update)
    				break
    			}
    			klog.V(1).Infof("SyncLoop (container unhealthy): %q", format.Pod(pod))
    			handler.HandlePodSyncs([]*v1.Pod{pod})
    		}
    	case <-housekeepingCh:
    		if !kl.sourcesReady.AllReady() {
    			// If the sources aren't ready or volume manager has not yet synced the states,
    			// skip housekeeping, as we may accidentally delete pods from unready sources.
    			klog.V(4).Infof("SyncLoop (housekeeping, skipped): sources aren't ready yet.")
    		} else {
    			klog.V(4).Infof("SyncLoop (housekeeping)")
    			if err := handler.HandlePodCleanups(); err != nil {
    				klog.Errorf("Failed cleaning pods: %v", err)
    			}
    		}
    	}
    	return true
    }
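Stripped of logging and most of the cases, the function above is a single select that handles one event per call and tells its caller whether to keep looping. Below is a minimal sketch of that shape. PodUpdate, handler and iteration are hypothetical names made up for this example; only the config and housekeeping channels are modeled.

```go
package main

import (
	"fmt"
	"time"
)

// PodUpdate is a simplified, hypothetical stand-in for kubetypes.PodUpdate.
type PodUpdate struct {
	Op   string
	Pods []string
}

// handler records what the loop dispatched; it plays the role of SyncHandler.
type handler struct{ log []string }

func (h *handler) add(pods []string)    { h.log = append(h.log, fmt.Sprintf("add %v", pods)) }
func (h *handler) update(pods []string) { h.log = append(h.log, fmt.Sprintf("update %v", pods)) }
func (h *handler) remove(pods []string) { h.log = append(h.log, fmt.Sprintf("remove %v", pods)) }
func (h *handler) cleanup()             { h.log = append(h.log, "cleanup") }

// iteration handles exactly one event and reports whether the loop should go on.
func iteration(configCh <-chan PodUpdate, housekeepingCh <-chan time.Time, h *handler) bool {
	select {
	case u, open := <-configCh:
		if !open {
			// A closed update channel ends the loop.
			return false
		}
		switch u.Op {
		case "ADD":
			h.add(u.Pods)
		case "UPDATE", "DELETE":
			// DELETE takes the update path, mirroring the graceful-deletion case above.
			h.update(u.Pods)
		case "REMOVE":
			h.remove(u.Pods)
		}
	case <-housekeepingCh:
		h.cleanup()
	}
	return true
}

func main() {
	configCh := make(chan PodUpdate, 3)
	configCh <- PodUpdate{Op: "ADD", Pods: []string{"nginx"}}
	configCh <- PodUpdate{Op: "DELETE", Pods: []string{"nginx"}}
	configCh <- PodUpdate{Op: "REMOVE", Pods: []string{"nginx"}}
	close(configCh)

	h := &handler{}
	// A nil channel never becomes ready in a select, so housekeeping is off here.
	for iteration(configCh, nil, h) {
	}
	for _, line := range h.log {
		fmt.Println(line)
	}
}
```

Handling one event per call keeps every case short and lets the outer loop decide what happens between iterations, such as backing off when the runtime reports errors.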

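By this point the overall flow should be reasonably clear. What comes next is calling into the runtime to carry out the corresponding operations.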