Preface:

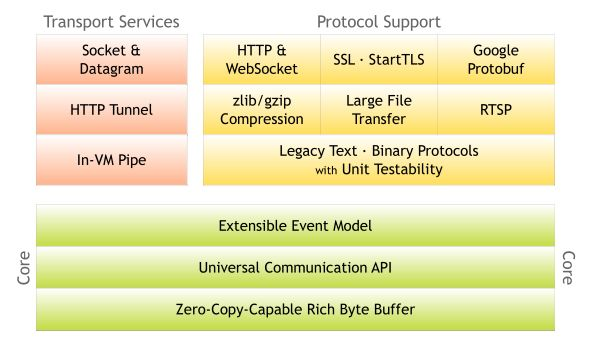

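Netty is an asynchronous, event-driven network application framework for rapidly developing maintainable, high-performance protocol servers and clients.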

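Features:

- A unified API across transport types (blocking and non-blocking sockets)
- Built on a flexible, extensible event-driven model
- A highly customizable thread model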

Environment setup:

Check out the 3.9 branch: https://github.com/netty/netty/tree/3.9

Commands:

    git clone https://github.com/netty/netty.git
    cd netty
    git checkout -b 3.9 origin/3.9

Netty overview:

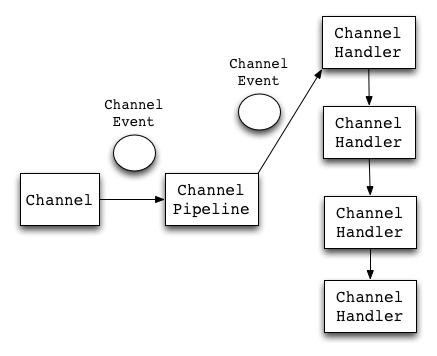

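In Netty, a Channel is the carrier of communication, a ChannelHandler is what actually processes the data, and a ChannelPipeline is the conduit the data travels through from handler to handler.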

Data flow analysis:

Taking DefaultChannelPipeline as the example: how do the ChannelHandlers registered on a pipeline process data?

    public void sendDownstream(ChannelEvent e) {
        DefaultChannelHandlerContext tail = getActualDownstreamContext(this.tail);
        if (tail == null) {
            try {
                getSink().eventSunk(this, e);
                return;
            } catch (Throwable t) {
                notifyHandlerException(e, t);
                return;
            }
        }

        sendDownstream(tail, e);
    }

    void sendDownstream(DefaultChannelHandlerContext ctx, ChannelEvent e) {
        if (e instanceof UpstreamMessageEvent) {
            throw new IllegalArgumentException("cannot send an upstream event to downstream");
        }

        try {
            ((ChannelDownstreamHandler) ctx.getHandler()).handleDownstream(ctx, e);
        } catch (Throwable t) {
            // Unlike an upstream event, a downstream event usually has an
            // incomplete future which is supposed to be updated by ChannelSink.
            // However, if an exception is raised before the event reaches at
            // ChannelSink, the future is not going to be updated, so we update
            // here.
            e.getFuture().setFailure(t);
            notifyHandlerException(e, t);
        }
    }

Downstream direction: business object => byte stream

Starting from the pipeline's tail, each registered ChannelDownstreamHandler is invoked in turn. At the end of the chain, the ChannelSink's eventSunk hands the task to a boss or worker thread, depending on the event.

    public void sendUpstream(ChannelEvent e) {
        DefaultChannelHandlerContext head = getActualUpstreamContext(this.head);
        if (head == null) {
            if (logger.isWarnEnabled()) {
                logger.warn(
                        "The pipeline contains no upstream handlers; discarding: " + e);
            }

            return;
        }

        sendUpstream(head, e);
    }

    void sendUpstream(DefaultChannelHandlerContext ctx, ChannelEvent e) {
        try {
            ((ChannelUpstreamHandler) ctx.getHandler()).handleUpstream(ctx, e);
        } catch (Throwable t) {
            notifyHandlerException(e, t);
        }
    }

Upstream direction: byte stream => business object

Starting from the pipeline's head, each registered ChannelUpstreamHandler is invoked in turn, eventually turning the byte stream into a business object for the business layer.

This matters, because it determines the order in which we should register our handlers.
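A toy model can make the ordering concrete. The sketch below is not Netty code: `ToyPipeline` and `Entry` are hypothetical names, and it assumes the decoder is an upstream-only handler, the encoder a downstream-only handler, and the business handler an upstream handler. It also visits every matching handler unconditionally, whereas a real handler decides for itself whether to pass the event on.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model only, NOT Netty classes. It mimics the traversal order described
// above: upstream events walk head -> tail, downstream events walk tail -> head.
final class ToyPipeline {
    /** Hypothetical stand-in for a named handler and the directions it handles. */
    static final class Entry {
        final String name;
        final boolean upstream;   // plays the role of a ChannelUpstreamHandler
        final boolean downstream; // plays the role of a ChannelDownstreamHandler

        Entry(String name, boolean upstream, boolean downstream) {
            this.name = name;
            this.upstream = upstream;
            this.downstream = downstream;
        }
    }

    private final List<Entry> entries = new ArrayList<>();

    ToyPipeline addLast(String name, boolean upstream, boolean downstream) {
        entries.add(new Entry(name, upstream, downstream));
        return this;
    }

    /** Inbound data: visit upstream handlers from head to tail. */
    List<String> sendUpstream() {
        List<String> visited = new ArrayList<>();
        for (Entry e : entries) {
            if (e.upstream) {
                visited.add(e.name);
            }
        }
        return visited;
    }

    /** Outbound data: visit downstream handlers from tail to head, then the sink. */
    List<String> sendDownstream() {
        List<String> visited = new ArrayList<>();
        for (int i = entries.size() - 1; i >= 0; i--) {
            if (entries.get(i).downstream) {
                visited.add(entries.get(i).name);
            }
        }
        visited.add("sink");
        return visited;
    }

    public static void main(String[] args) {
        ToyPipeline p = new ToyPipeline()
                .addLast("decoder", true, false)
                .addLast("encoder", false, true)
                .addLast("handler", true, false);
        System.out.println("upstream:   " + p.sendUpstream());
        System.out.println("downstream: " + p.sendDownstream());
    }
}
```

With the registration order decoder, encoder, handler, inbound data visits the decoder and then the handler, while an outbound write visits the encoder and then reaches the sink. Under these assumptions, a decoder has to be registered ahead of the handler that consumes its output.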

    bootstrap.setPipelineFactory(new ChannelPipelineFactory() {
        public ChannelPipeline getPipeline() throws Exception {
            ChannelPipeline pipeline = new DefaultChannelPipeline();
            pipeline.addLast("decoder", new NettyProtocolDecoder());
            pipeline.addLast("encoder", new NettyProtocolEncoder());
            pipeline.addLast("handler", new NettyServerHandler(threadPool));
            return pipeline;
        }
    });
    // Source: https://github.com/bluedavy/McQueenRPC

How does the ChannelSink actually get the data written out? Considering only MessageEvent for now, the message is offered to the channel's write queue (writeBufferQueue), and writeFromUserCode is then called to make the channel's worker send the data.

    // Source: org.jboss.netty.channel.socket.nio.NioServerSocketPipelineSink
    public void eventSunk(
            ChannelPipeline pipeline, ChannelEvent e) throws Exception {
        Channel channel = e.getChannel();
        if (channel instanceof NioServerSocketChannel) {
            handleServerSocket(e);
        } else if (channel instanceof NioSocketChannel) {
            handleAcceptedSocket(e);
        }
    }

    private static void handleAcceptedSocket(ChannelEvent e) {
        // ChannelStateEvent handling omitted ...
        // else
        if (e instanceof MessageEvent) {
            MessageEvent event = (MessageEvent) e;
            NioSocketChannel channel = (NioSocketChannel) event.getChannel();
            boolean offered = channel.writeBufferQueue.offer(event);
            assert offered;
            channel.worker.writeFromUserCode(channel);
        }
    }

Write model:

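How is the data sitting in the queue sent out in order? And what happens when the channel is no longer writable (writes arrive faster than they can be sent, so the buffer fills up)?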

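    // Source: org.jboss.netty.channel.socket.nio.AbstractNioWorker#write0
    synchronized (channel.writeLock) {
        channel.inWriteNowLoop = true;
        // 1. This loop takes events off the queue.
        for (;;) {
            MessageEvent evt = channel.currentWriteEvent;
            SendBuffer buf = null;
            ChannelFuture future = null;
            try {
                if (evt == null) {
                    if ((channel.currentWriteEvent = evt = writeBuffer.poll()) == null) {
                        removeOpWrite = true;
                        channel.writeSuspended = false;
                        break;
                    }
                    future = evt.getFuture();

                    channel.currentWriteBuffer = buf = sendBufferPool.acquire(evt.getMessage());
                } else {
                    future = evt.getFuture();
                    buf = channel.currentWriteBuffer;
                }

                long localWrittenBytes = 0;
                for (int i = writeSpinCount; i > 0; i --) {
                    // The actual write.
                    localWrittenBytes = buf.transferTo(ch);
                    // If any bytes were written, leave the spin loop. If none were,
                    // retry up to writeSpinCount times; a non-zero result during
                    // the retries means the channel is writable again.
                    if (localWrittenBytes != 0) {
                        writtenBytes += localWrittenBytes;
                        break;
                    }
                    if (buf.finished()) {
                        break;
                    }
                }
                // ... (result handling and catch blocks omitted in this excerpt)
            }
        }
    }

As the excerpt shows, the queued messages are handled one after another. For each message, buf.transferTo(ch) sends the data. If the returned localWrittenBytes is greater than 0, the spin loop exits; if it is 0, the write is retried up to writeSpinCount times, and a non-zero result during those retries means the channel has become writable again. fireWriteComplete is then called to notify upstream; the result handling is left aside for now.

Note: this is not the only path that triggers a write. When the channel becomes writable, the queue is flushed as well.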
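The spin-and-suspend idea can be tried in isolation with plain `java.nio`. The following is a simplified sketch, not Netty's implementation: `WriteLoopSketch`, `LimitedChannel` and `drain` are hypothetical names, the channel is a fake that accepts only a fixed number of bytes, and returning `false` stands in for the point where a real worker would stop and wait until the channel is writable again.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;
import java.util.ArrayDeque;
import java.util.Queue;

// Simplified sketch, NOT Netty's code: drain a queue of buffers into a channel,
// retrying a zero-byte write up to writeSpinCount times before giving up.
final class WriteLoopSketch {
    /** Hypothetical channel that accepts at most `capacity` bytes in total. */
    static final class LimitedChannel implements WritableByteChannel {
        private int capacity;

        LimitedChannel(int capacity) {
            this.capacity = capacity;
        }

        @Override
        public int write(ByteBuffer src) {
            int n = Math.min(capacity, src.remaining());
            src.position(src.position() + n); // pretend n bytes reached the socket
            capacity -= n;
            return n;
        }

        @Override
        public boolean isOpen() {
            return true;
        }

        @Override
        public void close() {
        }
    }

    /**
     * Returns true if the queue was fully drained, false if the head buffer
     * could not be written completely (it stays at the head of the queue).
     */
    static boolean drain(Queue<ByteBuffer> queue, WritableByteChannel ch, int writeSpinCount) {
        ByteBuffer current;
        while ((current = queue.peek()) != null) {
            try {
                for (int i = writeSpinCount; i > 0; i--) {
                    int written = ch.write(current);
                    if (written != 0) {
                        break; // progress was made, stop spinning
                    }
                    if (!current.hasRemaining()) {
                        break; // nothing left to write in this buffer
                    }
                }
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
            if (current.hasRemaining()) {
                return false; // channel stopped accepting data: suspend writing
            }
            queue.poll(); // buffer fully written, move on to the next one
        }
        return true;
    }

    public static void main(String[] args) {
        Queue<ByteBuffer> queue = new ArrayDeque<>();
        queue.add(ByteBuffer.wrap(new byte[4]));
        queue.add(ByteBuffer.wrap(new byte[4]));
        boolean drained = drain(queue, new LimitedChannel(6), 16);
        System.out.println("drained=" + drained + ", left in queue=" + queue.size());
    }
}
```

In this run the fake channel takes 6 of the 8 queued bytes, so `drain` returns `false` and the second buffer stays queued with 2 bytes remaining. Calling `drain` again with a channel that has room finishes the job, which mirrors the note that a writable channel also causes the queue to be flushed.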

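Read model analysis:

With the write path covered, the read model is comparatively simple: when a read event is detected, the worker reads the data into a buffer and invokes the handlers to process it.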
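The core of such a read, filling a scratch buffer and then copying out exactly the bytes received, can be sketched with plain `java.nio`. This is an illustration under stated assumptions rather than Netty's code: `ReadLoopSketch` and `readOnce` are hypothetical names, the buffer size is a fixed argument instead of coming from a size predictor, and the channel wraps an in-memory stream rather than a non-blocking socket.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Channels;
import java.nio.channels.ReadableByteChannel;
import java.nio.charset.StandardCharsets;

// Illustration only, NOT Netty's code: one "read event" worth of work.
final class ReadLoopSketch {
    /**
     * Reads until the channel has no more data for now or the scratch buffer
     * is full, then returns a right-sized copy of what was read.
     */
    static byte[] readOnce(ReadableByteChannel ch, int predictedSize) {
        ByteBuffer bb = ByteBuffer.allocate(predictedSize);
        int readBytes = 0;
        try {
            int ret;
            while ((ret = ch.read(bb)) > 0) {
                readBytes += ret;
                if (!bb.hasRemaining()) {
                    break; // scratch buffer is full, stop for this round
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        bb.flip(); // switch the buffer from filling to draining
        byte[] message = new byte[readBytes];
        bb.get(message);
        return message; // a real worker would pass this to the handlers
    }

    public static void main(String[] args) {
        byte[] data = "hello netty".getBytes(StandardCharsets.US_ASCII);
        ReadableByteChannel ch = Channels.newChannel(new ByteArrayInputStream(data));
        System.out.println(new String(readOnce(ch, 8), StandardCharsets.US_ASCII));
        System.out.println(new String(readOnce(ch, 8), StandardCharsets.US_ASCII));
    }
}
```

With an 8-byte scratch buffer, the 11-byte input arrives as two messages, which is one reason a decoder that reassembles frames is usually placed before the business handler.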

    protected boolean read(SelectionKey k) {
        final SocketChannel ch = (SocketChannel) k.channel();
        final NioSocketChannel channel = (NioSocketChannel) k.attachment();

        final ReceiveBufferSizePredictor predictor =
                channel.getConfig().getReceiveBufferSizePredictor();
        final int predictedRecvBufSize = predictor.nextReceiveBufferSize();
        final ChannelBufferFactory bufferFactory = channel.getConfig().getBufferFactory();

        int ret = 0;
        int readBytes = 0;
        boolean failure = true;

        ByteBuffer bb = recvBufferPool.get(predictedRecvBufSize).order(bufferFactory.getDefaultOrder());
        try {
            while ((ret = ch.read(bb)) > 0) {
                readBytes += ret;
                if (!bb.hasRemaining()) {
                    break;
                }
            }
            failure = false;
        } catch (ClosedChannelException e) {
            // Can happen, and does not need a user attention.
        } catch (Throwable t) {
            fireExceptionCaught(channel, t);
        }

        if (readBytes > 0) {
            bb.flip();

            final ChannelBuffer buffer = bufferFactory.getBuffer(readBytes);
            buffer.setBytes(0, bb);
            buffer.writerIndex(readBytes);

            // Update the predictor.
            predictor.previousReceiveBufferSize(readBytes);

            // Fire the upstream callback.
            fireMessageReceived(channel, buffer);
        }
        // ... (remainder of the method, including the return statement, omitted in this excerpt)
    }

What's next:

Follow-up posts will first analyze Netty's features at the source level, and then design a convenient, configurable load-testing model so that different communication implementations can be stress-tested quickly during development.

The "read the original" link leads to 《Nip trick and trip》, which should help deepen your understanding of Netty.