An illustrative introduction to Fisher’s Linear Discriminant

Many Machine Learning (ML) algorithms tackle classification problems with two or more classes in a similar way.

Usually, they apply some kind of transformation to the input data that reduces the original number of input dimensions. The goal is to project the data into a new space. Once the data is projected, the algorithm tries to classify the points by finding a linear separation: a line, plane, or hyperplane that puts each class on its own side.

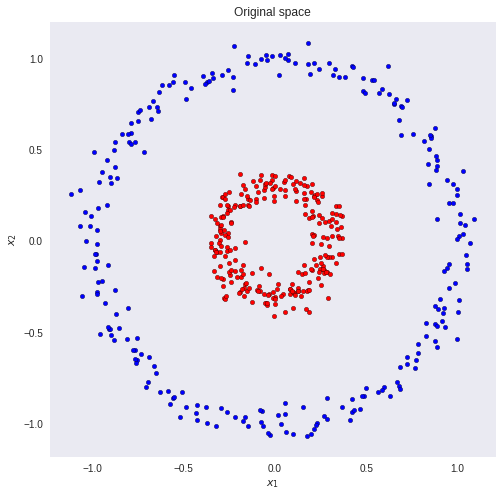
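To make the two-step recipe concrete, here is a minimal NumPy sketch on hypothetical data: two Gaussian blobs in 2-D (the centres, spread, and seed are made up for illustration). It projects every point onto a single direction `w`, reducing two dimensions to one, and then separates the classes with a single threshold. The direction used here, the difference of the class means, is an assumption chosen for simplicity; it is not Fisher's criterion.

```python
import numpy as np

rng = np.random.default_rng(42)

# Two hypothetical 2-D classes (Gaussian blobs); centres and spread are illustrative.
X0 = rng.normal(loc=[0.0, 0.0], scale=0.5, size=(100, 2))
X1 = rng.normal(loc=[4.0, 4.0], scale=0.5, size=(100, 2))
X = np.vstack([X0, X1])
y = np.concatenate([np.zeros(100, dtype=int), np.ones(100, dtype=int)])

# Step 1: project every 2-D point onto one direction w (2 dimensions -> 1).
# Assumption: w is simply the difference of the class means, not Fisher's direction.
w = X1.mean(axis=0) - X0.mean(axis=0)
z = X @ w  # one scalar per point

# Step 2: separate the classes linearly in the projected space, using a
# threshold halfway between the two projected class means.
threshold = 0.5 * (z[y == 0].mean() + z[y == 1].mean())
y_pred = (z > threshold).astype(int)

accuracy = np.mean(y_pred == y)
print(z.shape, accuracy)
```

Because these blobs are far apart relative to their spread, even this naive direction works; when classes overlap or are elongated, the choice of `w` matters much more.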

For problems with low-dimensional inputs, the task is somewhat easier. Take the following dataset as an example.

Suppose we want to classify the red and blue circles correctly.

Clearly, a simple linear model will not give a good result here: no weighted linear combination of the two inputs (that is, no straight line in the input plane) maps every point to its correct class. But what if we could transform the data so that we could draw a line that separates the two classes?

That is exactly what happens if we square each of the two input features. In the transformed space, a linear model can easily separate the blue and red points.
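The squaring trick can be checked numerically. The sketch below assumes NumPy and a synthetic dataset of two noisy concentric rings; the radii, noise level, and the helper names `make_rings`, `train_perceptron`, and `accuracy` are hypothetical. It trains a classic perceptron, used here as a stand-in for "a simple linear model", once on the raw coordinates and once on the squared ones. Since `x1**2 + x2**2` equals the squared radius, the squared features place the inner ring on one side of a straight line and the outer ring on the other.

```python
import numpy as np

def make_rings(n_per_class=100, seed=0):
    """Hypothetical data: inner ring (label -1) near radius 1, outer ring (+1) near radius 3."""
    rng = np.random.default_rng(seed)
    angles = np.linspace(0.0, 2.0 * np.pi, n_per_class, endpoint=False)
    r_inner = 1.0 + rng.uniform(-0.5, 0.5, n_per_class)
    r_outer = 3.0 + rng.uniform(-0.5, 0.5, n_per_class)
    X = np.vstack([
        np.column_stack([r_inner * np.cos(angles), r_inner * np.sin(angles)]),
        np.column_stack([r_outer * np.cos(angles), r_outer * np.sin(angles)]),
    ])
    y = np.concatenate([-np.ones(n_per_class), np.ones(n_per_class)])
    return X, y

def add_bias(X):
    """Append a constant column so the bias is learned as an ordinary weight."""
    return np.hstack([X, np.ones((len(X), 1))])

def train_perceptron(X, y, max_epochs=1000):
    """Classic perceptron: a purely linear model predicting sign(w . x + b)."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(max_epochs):
        errors = 0
        for xi, yi in zip(Xb, y):
            if yi * (xi @ w) <= 0:  # misclassified or exactly on the boundary
                w += yi * xi
                errors += 1
        if errors == 0:  # every point is on the correct side: stop
            break
    return w

def accuracy(w, X, y):
    return float(np.mean(np.sign(add_bias(X) @ w) == y))

X, y = make_rings()
acc_raw = accuracy(train_perceptron(X, y), X, y)           # below 1.0: not linearly separable
acc_sq = accuracy(train_perceptron(X ** 2, y), X ** 2, y)  # 1.0 after squaring
print(acc_raw, acc_sq)
```

On the raw coordinates the perceptron never converges, because no straight line can cut the inner ring off from the outer ring that surrounds it; on the squared features it stops as soon as every point lies on the correct side of the learned line.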