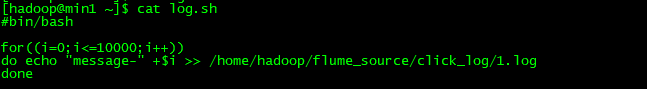

1. Write a shell script, log.sh, to generate data

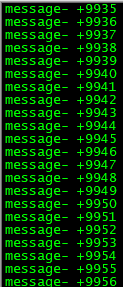

    #!/bin/bash
    # Append message-0 through message-10000 to the log file that Flume tails.
    for ((i = 0; i <= 10000; i++))
    do
        echo "message-$i" >> /home/hadoop/flume_source/click_log/1.log
    done
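The loop in log.sh assumes that the target directory already exists, and it reopens the log file for every line. As a minimal sketch (not part of the original script), the same idea can be wrapped in a function. The name `generate_log` and its two arguments are hypothetical, introduced only for illustration.

```shell
#!/bin/bash
# generate_log FILE [COUNT]
# Hypothetical helper: appends "message-0" .. "message-COUNT" to FILE.
generate_log() {
  local file="$1"
  local count="${2:-10000}"        # default matches the loop bound in log.sh
  local i

  mkdir -p "$(dirname "$file")"    # create the target directory if it is missing

  for ((i = 0; i <= count; i++)); do
    echo "message-$i"
  done >> "$file"                  # open the file once for the whole loop
}
```

Calling `generate_log /home/hadoop/flume_source/click_log/1.log` would then produce the same lines as log.sh. A small count, for example `generate_log /tmp/demo/1.log 5`, is convenient for a quick trial run.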

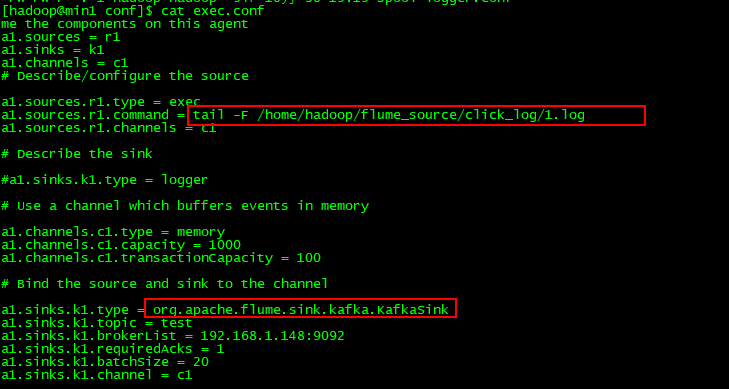

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    a1.sources.r1.type = exec
    a1.sources.r1.command = tail -F /home/hadoop/flume_source/click_log/1.log
    a1.sources.r1.channels = c1

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Describe the sink and bind it to the channel
    a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink
    a1.sinks.k1.topic = test
    a1.sinks.k1.brokerList = 192.168.1.148:9092
    a1.sinks.k1.requiredAcks = 1
    a1.sinks.k1.batchSize = 20
    a1.sinks.k1.channel = c1
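To try the configuration, the agent has to be started and the `test` topic read back. The following is a hedged sketch that only prints the two commands, so nothing is launched by accident. It makes several assumptions: the config is saved as `conf/flume-kafka.conf` (a hypothetical file name), `flume-ng` and `kafka-console-consumer.sh` are on the `PATH`, and the Kafka version is recent enough for its console consumer to accept `--bootstrap-server` (older releases take `--zookeeper` instead).

```shell
#!/bin/bash
# Assumed locations; adjust them to your installation.
FLUME_CONF_DIR="${FLUME_CONF_DIR:-conf}"                      # Flume's own conf directory
AGENT_CONF_FILE="${AGENT_CONF_FILE:-conf/flume-kafka.conf}"   # hypothetical file holding the a1 config
AGENT_NAME="a1"                                               # must match the property prefix in the config

# Print the command that starts the agent in the foreground with console logging.
start_agent_cmd() {
  echo "flume-ng agent --conf $FLUME_CONF_DIR --conf-file $AGENT_CONF_FILE --name $AGENT_NAME -Dflume.root.logger=INFO,console"
}

# Print the command that reads the topic from the broker named in the sink config.
consume_topic_cmd() {
  echo "kafka-console-consumer.sh --bootstrap-server 192.168.1.148:9092 --topic test --from-beginning"
}

start_agent_cmd
consume_topic_cmd
```

Running each printed command in its own terminal and then executing log.sh should make the `message-` lines appear in the consumer, provided the broker is reachable and the topic exists or auto-creation is enabled.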