Environment

Dissecting the actors

According to the official ThingsBoard documentation, its rule engine is built on the actor model. The description of the actors below is quoted from that documentation.

Actor model enables high performance concurrent processing of messages from devices as well as server-side API calls.

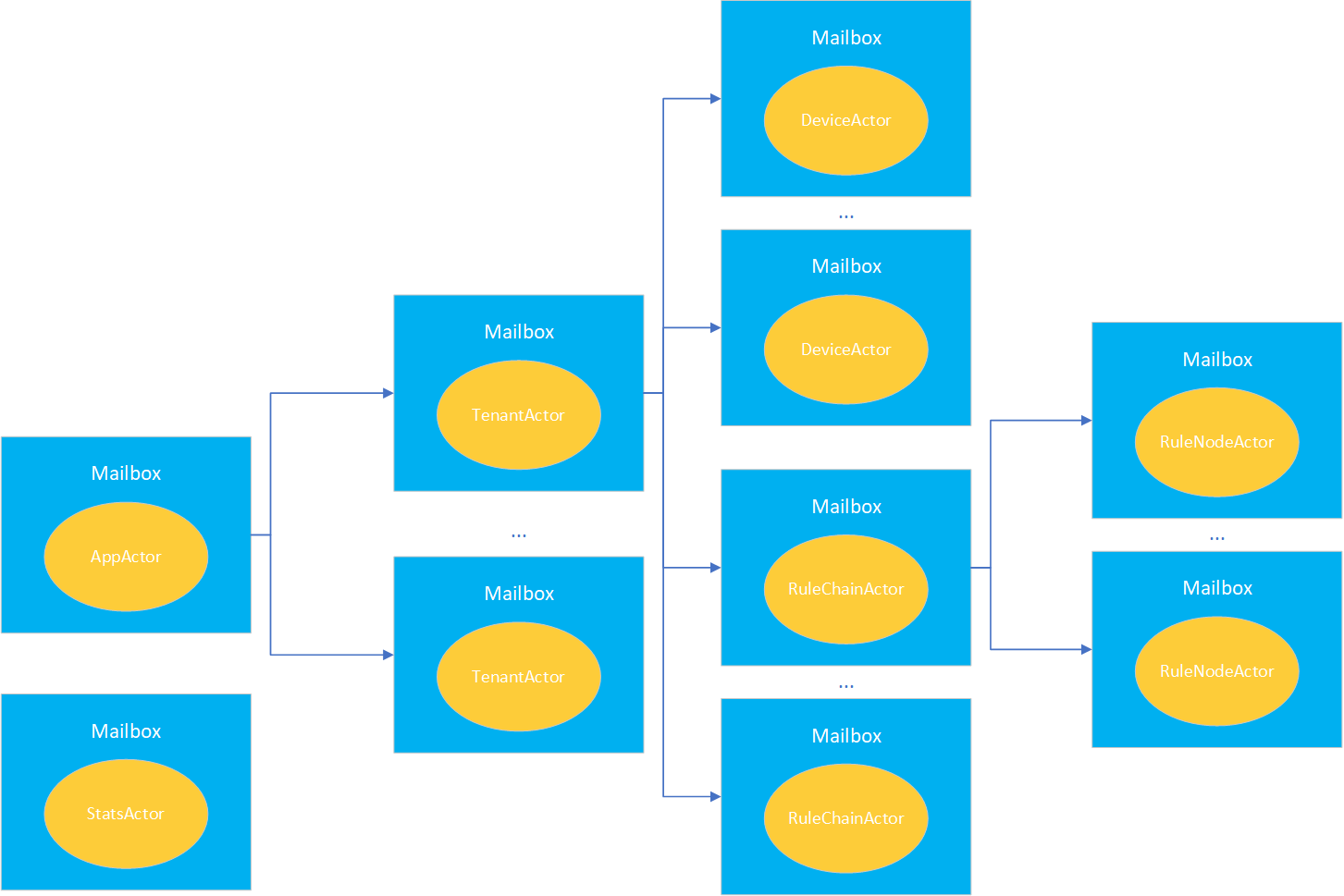

ThingsBoard uses own Actor System implementation (sharpened for our use cases) with following actor hierarchies

The brief description of each actor’s functionality is listed below

- App Actor - responsible for management of tenant actors. An instance of this actor is always present in memory.

- Tenant Actor - responsible for management of tenant device & rule chain actors. An instance of this actor is always present in memory.

- Device Actor - maintain state of the device: active sessions, subscriptions, pending RPC commands, etc. Caches current device attributes in memory for performance reasons. An actor is created when the first message from the device is processed. The actor is stopped when there is no messages from devices for a certain time.

- Rule Chain Actor - process incoming messages and dispatches them to rule node actors. An instance of this actor is always present in memory.

- Rule Node Actor - process incoming messages, and report results back to rule chain actor. An instance of this actor is always present in memory.

This article focuses on how the core actors are implemented.

Actor creation flow

Creating the AppActor

The code that creates the AppActor:

// The actor system is initialized as soon as the DefaultActorService bean has been constructed

@PostConstruct

public void initActorSystem() {

log.info("Initializing actor system.");

actorContext.setActorService(this);

TbActorSystemSettings settings = new TbActorSystemSettings(actorThroughput, schedulerPoolSize, maxActorInitAttempts);

system = new DefaultTbActorSystem(settings);

// Create one dispatcher (thread pool) each for app, tenant, device and rule actors, sized 1, 2, 4 and 4 respectively

system.createDispatcher(APP_DISPATCHER_NAME, initDispatcherExecutor(APP_DISPATCHER_NAME, appDispatcherSize));

system.createDispatcher(TENANT_DISPATCHER_NAME, initDispatcherExecutor(TENANT_DISPATCHER_NAME, tenantDispatcherSize));

system.createDispatcher(DEVICE_DISPATCHER_NAME, initDispatcherExecutor(DEVICE_DISPATCHER_NAME, deviceDispatcherSize));

system.createDispatcher(RULE_DISPATCHER_NAME, initDispatcherExecutor(RULE_DISPATCHER_NAME, ruleDispatcherSize));

actorContext.setActorSystem(system);

// Create the AppActor as a root actor

appActor = system.createRootActor(APP_DISPATCHER_NAME, new AppActor.ActorCreator(actorContext));

actorContext.setAppActor(appActor);

// Create the StatsActor as a second root actor; it runs on the tenant dispatcher

TbActorRef statsActor = system.createRootActor(TENANT_DISPATCHER_NAME, new StatsActor.ActorCreator(actorContext, "StatsActor"));

actorContext.setStatsActor(statsActor);

log.info("Actor system initialized.");

}
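To make the role of the four createDispatcher calls concrete, here is a minimal sketch of a registry that maps a dispatcher name to its thread pool. It is not ThingsBoard's implementation: the class DispatcherRegistrySketch, its methods and the dispatcher names are hypothetical, and only the pool sizes (1, 2, 4, 4) come from the comment above.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical, simplified registry of named dispatchers (thread pools). */
class DispatcherRegistrySketch {
    private final Map<String, ExecutorService> dispatchers = new ConcurrentHashMap<>();

    /** Registers a pool under a name; registering the same name twice is an error. */
    void createDispatcher(String name, ExecutorService executor) {
        if (dispatchers.putIfAbsent(name, executor) != null) {
            throw new IllegalStateException("Dispatcher [" + name + "] is already registered");
        }
    }

    /** Looks up the pool that actors of the given kind should run on. */
    ExecutorService dispatcherFor(String name) {
        ExecutorService executor = dispatchers.get(name);
        if (executor == null) {
            throw new IllegalArgumentException("Unknown dispatcher: " + name);
        }
        return executor;
    }

    int size() {
        return dispatchers.size();
    }

    void shutdownAll() {
        dispatchers.values().forEach(ExecutorService::shutdown);
    }

    /** Builds four pools with the sizes mentioned in the walkthrough (assumed names). */
    static DispatcherRegistrySketch withDefaultPools() {
        DispatcherRegistrySketch registry = new DispatcherRegistrySketch();
        registry.createDispatcher("app", Executors.newFixedThreadPool(1));
        registry.createDispatcher("tenant", Executors.newFixedThreadPool(2));
        registry.createDispatcher("device", Executors.newFixedThreadPool(4));
        registry.createDispatcher("rule", Executors.newFixedThreadPool(4));
        return registry;
    }

    public static void main(String[] args) {
        DispatcherRegistrySketch registry = withDefaultPools();
        System.out.println("registered dispatchers: " + registry.size());
        registry.shutdownAll();
    }
}
```

Keeping one pool per actor kind means a burst of device traffic cannot starve the single thread that serves the root actor.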

AppActor's doProcess method contains the code that creates the TenantActors:

// This code lives in AppActor

@Override

protected boolean doProcess(TbActorMsg msg) {

if (!ruleChainsInitialized) {

// Create the TenantActors

initTenantActors();

ruleChainsInitialized = true;

if (msg.getMsgType() != MsgType.APP_INIT_MSG && msg.getMsgType() != MsgType.PARTITION_CHANGE_MSG) {

log.warn("Rule Chains initialized by unexpected message: {}", msg);

}

}

switch (msg.getMsgType()) {

// ...

}

return true;

}

// ...

private void initTenantActors() {

log.info("Starting main system actor.");

try {

if (systemContext.isTenantComponentsInitEnabled()) {

PageDataIterable<Tenant> tenantIterator = new PageDataIterable<>(tenantService::findTenants, ENTITY_PACK_LIMIT);

// Iterate over all tenants and create a TenantActor for each

for (Tenant tenant : tenantIterator) {

log.debug("[{}] Creating tenant actor", tenant.getId());

getOrCreateTenantActor(tenant.getId());

log.debug("[{}] Tenant actor created.", tenant.getId());

}

}

log.info("Main system actor started.");

} catch (Exception e) {

log.warn("Unknown failure", e);

}

}

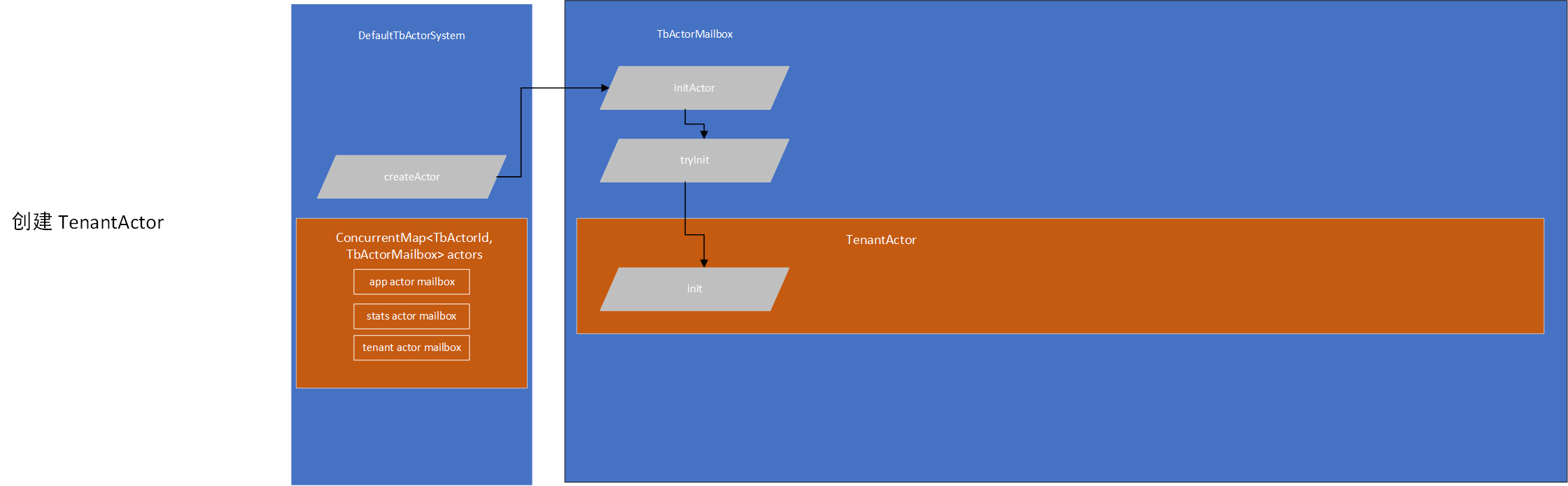

Creating the TenantActor

TenantActor's init method contains the code that creates the RuleChainActors:

@Override

public void init(TbActorCtx ctx) throws TbActorException {

super.init(ctx);

log.debug("[{}] Starting tenant actor.", tenantId);

try {

Tenant tenant = systemContext.getTenantService().findTenantById(tenantId);

if (tenant == null) {

// ...

} else {

// ...

if (isRuleEngine) {

try {

if (getApiUsageState().isReExecEnabled()) {

log.debug("[{}] Going to init rule chains", tenantId);

// Create the RuleChainActors

initRuleChains();

} else {

log.info("[{}] Skip init of the rule chains due to API limits", tenantId);

}

} catch (Exception e) {

cantFindTenant = true;

}

}

log.debug("[{}] Tenant actor started.", tenantId);

}

} catch (Exception e) {

log.warn("[{}] Unknown failure", tenantId, e);

}

}

Creating the RuleChainActor

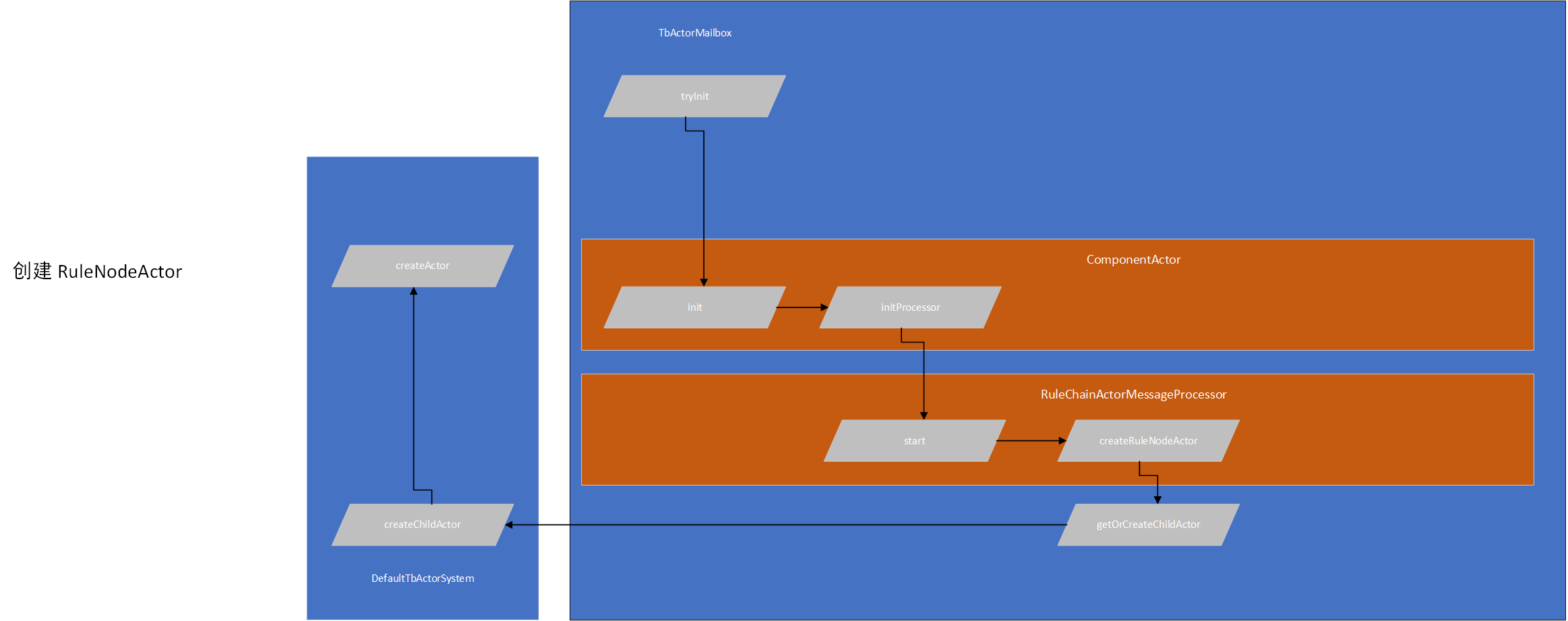

The createActor method ends up calling tryInit, the method through which the RuleNodeActors are created

Creating the RuleNodeActor

The code that creates the RuleNodeActors:

// This code lives in RuleChainActorMessageProcessor

@Override

public void start(TbActorCtx context) {

if (!started) {

RuleChain ruleChain = service.findRuleChainById(tenantId, entityId);

if (ruleChain != null && RuleChainType.CORE.equals(ruleChain.getType())) {

List<RuleNode> ruleNodeList = service.getRuleChainNodes(tenantId, entityId);

log.trace("[{}][{}] Starting rule chain with {} nodes", tenantId, entityId, ruleNodeList.size());

// Creating and starting the actors;

for (RuleNode ruleNode : ruleNodeList) {

log.trace("[{}][{}] Creating rule node [{}]: {}", entityId, ruleNode.getId(), ruleNode.getName(), ruleNode);

TbActorRef ruleNodeActor = createRuleNodeActor(context, ruleNode);

nodeActors.put(ruleNode.getId(), new RuleNodeCtx(tenantId, self, ruleNodeActor, ruleNode));

}

initRoutes(ruleChain, ruleNodeList);

started = true;

}

} else {

onUpdate(context);

}

}

Creating the DeviceActor

Summary

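When the application starts, the AppActor and the StatsActor are created first. While the AppActor is brought up (on the first message it handles, as its doProcess shows), every TenantActor is created. While each TenantActor is initialized, a RuleChainActor is created for every RuleChain the tenant owns, and while each RuleChainActor is started, a RuleNodeActor is created for every RuleNode in that chain.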

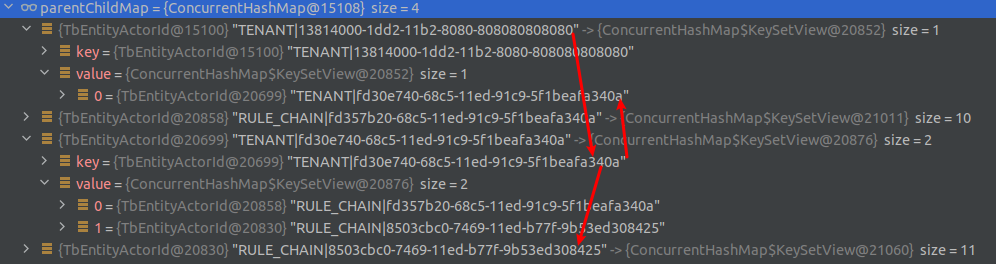
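The cascade summarized above can be sketched with a tiny parent/child tree. This is an illustration under stated assumptions, not ThingsBoard code: SketchActor, CreationCascadeDemo, getOrCreateChild and the sample ids (tenant_1, rule_chain_1, node_a and so on) are all hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical actor node that knows its parent and creates children on demand. */
class SketchActor {
    final String id;
    final SketchActor parent;
    final Map<String, SketchActor> children = new LinkedHashMap<>();

    SketchActor(String id, SketchActor parent) {
        this.id = id;
        this.parent = parent;
    }

    /** Returns the existing child with this id, or creates and registers it. */
    SketchActor getOrCreateChild(String childId) {
        SketchActor child = children.get(childId);
        if (child == null) {
            child = new SketchActor(childId, this);
            children.put(childId, child);
        }
        return child;
    }

    String path() {
        return parent == null ? id : parent.path() + "/" + id;
    }

    /** Depth-first listing of this actor and everything below it. */
    void collectPaths(List<String> out) {
        out.add(path());
        for (SketchActor child : children.values()) {
            child.collectPaths(out);
        }
    }
}

class CreationCascadeDemo {
    /** App creates tenants, each tenant its rule chains, each chain its rule nodes. */
    static SketchActor build(Map<String, Map<String, List<String>>> tenants) {
        SketchActor app = new SketchActor("app", null);
        tenants.forEach((tenantId, chains) -> {
            SketchActor tenant = app.getOrCreateChild(tenantId);
            chains.forEach((chainId, nodeIds) -> {
                SketchActor chain = tenant.getOrCreateChild(chainId);
                nodeIds.forEach(chain::getOrCreateChild);
            });
        });
        return app;
    }

    /** Builds a made-up tenant with two rule chains and lists the resulting tree. */
    static List<String> samplePaths() {
        Map<String, List<String>> chains = new LinkedHashMap<>();
        chains.put("rule_chain_1", List.of("node_a", "node_b"));
        chains.put("rule_chain_2", List.of("node_c"));
        Map<String, Map<String, List<String>>> tenants = new LinkedHashMap<>();
        tenants.put("tenant_1", chains);
        List<String> paths = new ArrayList<>();
        build(tenants).collectPaths(paths);
        return paths;
    }

    public static void main(String[] args) {
        samplePaths().forEach(System.out::println);
    }
}
```

Device actors are deliberately absent from this sketch: according to the quoted documentation they are created only when the first message from a device is processed.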

Relationships between actors

While debugging, the parent-child relationships between the different actors can be observed

This matches the setup in the project exactly:

- app

- tenant__1

- rule_chain_1

- several rule nodes

- rule_chain_2

- several rule nodes

- rule_chain_1

- tenant__1

The relationships between the actors can therefore be summarized in the following diagram:

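A mailbox acts as a message queue that stores an actor's messages; the actual processing happens when a thread from the thread pool associated with that mailbox invokes the corresponding actor. For example, the TenantActor's mailbox holds its messages in a queue, and the tenant thread pool (the pools for the different kinds of mailbox were already created together with the AppActor) assigns a thread that calls the TenantActor's methods to process them.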
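The mailbox mechanism described above can be sketched as a queue plus a "busy" flag, drained on a shared thread pool. This is a simplified illustration, not ThingsBoard's mailbox class: SketchMailbox, MailboxDemo and their methods are hypothetical, messages are plain strings, and features such as message priorities or throughput limits are left out.

```java
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.Consumer;

/** Hypothetical mailbox: stores messages and drains them on a shared dispatcher. */
class SketchMailbox {
    private final Queue<String> queue = new ConcurrentLinkedQueue<>();
    private final AtomicBoolean busy = new AtomicBoolean(false);
    private final ExecutorService dispatcher; // pool shared by all actors of one kind
    private final Consumer<String> actor;     // stands in for the actor's doProcess

    SketchMailbox(ExecutorService dispatcher, Consumer<String> actor) {
        this.dispatcher = dispatcher;
        this.actor = actor;
    }

    /** Enqueues a message and makes sure a drain task is scheduled. */
    void tell(String msg) {
        queue.add(msg);
        tryProcess();
    }

    private void tryProcess() {
        // The flag guarantees that at most one thread drains this mailbox at a time.
        if (!queue.isEmpty() && busy.compareAndSet(false, true)) {
            dispatcher.execute(this::drain);
        }
    }

    private void drain() {
        try {
            String msg;
            while ((msg = queue.poll()) != null) {
                actor.accept(msg);
            }
        } finally {
            busy.set(false);
            tryProcess(); // pick up messages that arrived while the loop was ending
        }
    }
}

class MailboxDemo {
    /** Sends messageCount messages through one mailbox and returns them in handling order. */
    static List<String> run(int messageCount) {
        ExecutorService tenantDispatcher = Executors.newFixedThreadPool(2);
        List<String> handled = new CopyOnWriteArrayList<>();
        CountDownLatch done = new CountDownLatch(messageCount);
        SketchMailbox mailbox = new SketchMailbox(tenantDispatcher, msg -> {
            handled.add(msg);
            done.countDown();
        });
        try {
            for (int i = 0; i < messageCount; i++) {
                mailbox.tell("msg-" + i);
            }
            done.await(5, TimeUnit.SECONDS); // wait until every message was handled
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            tenantDispatcher.shutdown();
        }
        return handled;
    }

    public static void main(String[] args) {
        System.out.println(run(5));
    }
}
```

Because only one drain task runs per mailbox at any moment, the actor never processes two messages concurrently even though the pool has several threads.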

Message flow

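Now that the relationships between the actors are known, which messages does each actor handle? The message-handling logic lives in each actor's doProcess method. The AppActor's message-handling logic: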

@Override

protected boolean doProcess(TbActorMsg msg) {

// ...

switch (msg.getMsgType()) {

case APP_INIT_MSG:

break;

case PARTITION_CHANGE_MSG:

ctx.broadcastToChildren(msg);

break;

case COMPONENT_LIFE_CYCLE_MSG:

onComponentLifecycleMsg((ComponentLifecycleMsg) msg);

break;

case QUEUE_TO_RULE_ENGINE_MSG:

onQueueToRuleEngineMsg((QueueToRuleEngineMsg) msg);

break;

case TRANSPORT_TO_DEVICE_ACTOR_MSG:

onToDeviceActorMsg((TenantAwareMsg) msg, false);

break;

case DEVICE_ATTRIBUTES_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_CREDENTIALS_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_NAME_OR_TYPE_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_EDGE_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_RPC_REQUEST_TO_DEVICE_ACTOR_MSG:

case DEVICE_RPC_RESPONSE_TO_DEVICE_ACTOR_MSG:

case SERVER_RPC_RESPONSE_TO_DEVICE_ACTOR_MSG:

case REMOVE_RPC_TO_DEVICE_ACTOR_MSG:

// Forward the message to the DeviceActor

onToDeviceActorMsg((TenantAwareMsg) msg, true);

break;

case EDGE_EVENT_UPDATE_TO_EDGE_SESSION_MSG:

// Forward the message to the TenantActor

onToTenantActorMsg((EdgeEventUpdateMsg) msg);

break;

case SESSION_TIMEOUT_MSG:

ctx.broadcastToChildrenByType(msg, EntityType.TENANT);

break;

default:

return false;

}

return true;

}

As shown, the AppActor can forward messages to DeviceActors, TenantActors and the rule engine, and can broadcast messages to its child actors.

The TenantActor's message-handling logic:

@Override

protected boolean doProcess(TbActorMsg msg) {

// ...

switch (msg.getMsgType()) {

case PARTITION_CHANGE_MSG:

PartitionChangeMsg partitionChangeMsg = (PartitionChangeMsg) msg;

ServiceType serviceType = partitionChangeMsg.getServiceType();

if (ServiceType.TB_RULE_ENGINE.equals(serviceType)) {

//To Rule Chain Actors

broadcast(msg);

} else if (ServiceType.TB_CORE.equals(serviceType)) {

List<TbActorId> deviceActorIds = ctx.filterChildren(new TbEntityTypeActorIdPredicate(EntityType.DEVICE) {

@Override

protected boolean testEntityId(EntityId entityId) {

return super.testEntityId(entityId) && !isMyPartition(entityId);

}

});

deviceActorIds.forEach(id -> ctx.stop(id));

}

break;

case COMPONENT_LIFE_CYCLE_MSG:

onComponentLifecycleMsg((ComponentLifecycleMsg) msg);

break;

case QUEUE_TO_RULE_ENGINE_MSG:

onQueueToRuleEngineMsg((QueueToRuleEngineMsg) msg);

break;

case TRANSPORT_TO_DEVICE_ACTOR_MSG:

onToDeviceActorMsg((DeviceAwareMsg) msg, false);

break;

case DEVICE_ATTRIBUTES_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_CREDENTIALS_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_NAME_OR_TYPE_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_EDGE_UPDATE_TO_DEVICE_ACTOR_MSG:

case DEVICE_RPC_REQUEST_TO_DEVICE_ACTOR_MSG:

case DEVICE_RPC_RESPONSE_TO_DEVICE_ACTOR_MSG:

case SERVER_RPC_RESPONSE_TO_DEVICE_ACTOR_MSG:

case REMOVE_RPC_TO_DEVICE_ACTOR_MSG:

// Forward the message to the DeviceActor

onToDeviceActorMsg((DeviceAwareMsg) msg, true);

break;

case SESSION_TIMEOUT_MSG:

ctx.broadcastToChildrenByType(msg, EntityType.DEVICE);

break;

case RULE_CHAIN_INPUT_MSG:

case RULE_CHAIN_OUTPUT_MSG:

case RULE_CHAIN_TO_RULE_CHAIN_MSG:

onRuleChainMsg((RuleChainAwareMsg) msg);

break;

case EDGE_EVENT_UPDATE_TO_EDGE_SESSION_MSG:

onToEdgeSessionMsg((EdgeEventUpdateMsg) msg);

break;

default:

return false;

}

return true;

}

The TenantActor forwards messages to DeviceActors and to the rule engine.
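The two doProcess methods above pass device-bound messages one level down the tree at a time. A minimal sketch of that routing, assuming a message that carries a tenant id and a device id, could look like the following. All names here (RoutingSketch, DeviceMsg and the nested actor classes) are hypothetical stand-ins, and the real actors communicate through mailboxes rather than direct method calls.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class RoutingSketch {
    /** Hypothetical message carrying the ids used for routing. */
    static final class DeviceMsg {
        final String tenantId;
        final String deviceId;
        final String payload;

        DeviceMsg(String tenantId, String deviceId, String payload) {
            this.tenantId = tenantId;
            this.deviceId = deviceId;
            this.payload = payload;
        }
    }

    /** Leaf of the tree: this is where the message is finally handled. */
    static final class DeviceActor {
        final List<String> received = new ArrayList<>();

        void process(DeviceMsg msg) {
            received.add(msg.payload);
        }
    }

    /** Routes by device id; the device actor is created lazily on its first message. */
    static final class TenantActor {
        final Map<String, DeviceActor> devices = new HashMap<>();

        void process(DeviceMsg msg) {
            devices.computeIfAbsent(msg.deviceId, id -> new DeviceActor()).process(msg);
        }
    }

    /** Root of the tree: routes by tenant id. */
    static final class AppActor {
        final Map<String, TenantActor> tenants = new HashMap<>();

        void process(DeviceMsg msg) {
            tenants.computeIfAbsent(msg.tenantId, id -> new TenantActor()).process(msg);
        }
    }

    public static void main(String[] args) {
        AppActor app = new AppActor();
        app.process(new DeviceMsg("tenant_1", "device_1", "hello"));
        System.out.println(app.tenants.get("tenant_1").devices.get("device_1").received);
    }
}
```

Each level only needs to know its direct children, which is why the routing path follows the actor tree.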

The DeviceActor's message-handling logic:

@Override

protected boolean doProcess(TbActorMsg msg) {

switch (msg.getMsgType()) {

case TRANSPORT_TO_DEVICE_ACTOR_MSG: // 📌

processor.process(ctx, (TransportToDeviceActorMsgWrapper) msg);

break;

case DEVICE_ATTRIBUTES_UPDATE_TO_DEVICE_ACTOR_MSG:

processor.processAttributesUpdate(ctx, (DeviceAttributesEventNotificationMsg) msg);

break;

case DEVICE_CREDENTIALS_UPDATE_TO_DEVICE_ACTOR_MSG:

processor.processCredentialsUpdate(msg);

break;

case DEVICE_NAME_OR_TYPE_UPDATE_TO_DEVICE_ACTOR_MSG:

processor.processNameOrTypeUpdate((DeviceNameOrTypeUpdateMsg) msg);

break;

case DEVICE_RPC_REQUEST_TO_DEVICE_ACTOR_MSG:

processor.processRpcRequest(ctx, (ToDeviceRpcRequestActorMsg) msg);

break;

case DEVICE_RPC_RESPONSE_TO_DEVICE_ACTOR_MSG:

processor.processRpcResponsesFromEdge(ctx, (FromDeviceRpcResponseActorMsg) msg);

break;

case DEVICE_ACTOR_SERVER_SIDE_RPC_TIMEOUT_MSG:

processor.processServerSideRpcTimeout(ctx, (DeviceActorServerSideRpcTimeoutMsg) msg);

break;

case SESSION_TIMEOUT_MSG:

processor.checkSessionsTimeout();

break;

case DEVICE_EDGE_UPDATE_TO_DEVICE_ACTOR_MSG:

processor.processEdgeUpdate((DeviceEdgeUpdateMsg) msg);

break;

case REMOVE_RPC_TO_DEVICE_ACTOR_MSG:

processor.processRemoveRpc(ctx, (RemoveRpcActorMsg) msg);

break;

default:

return false;

}

return true;

}

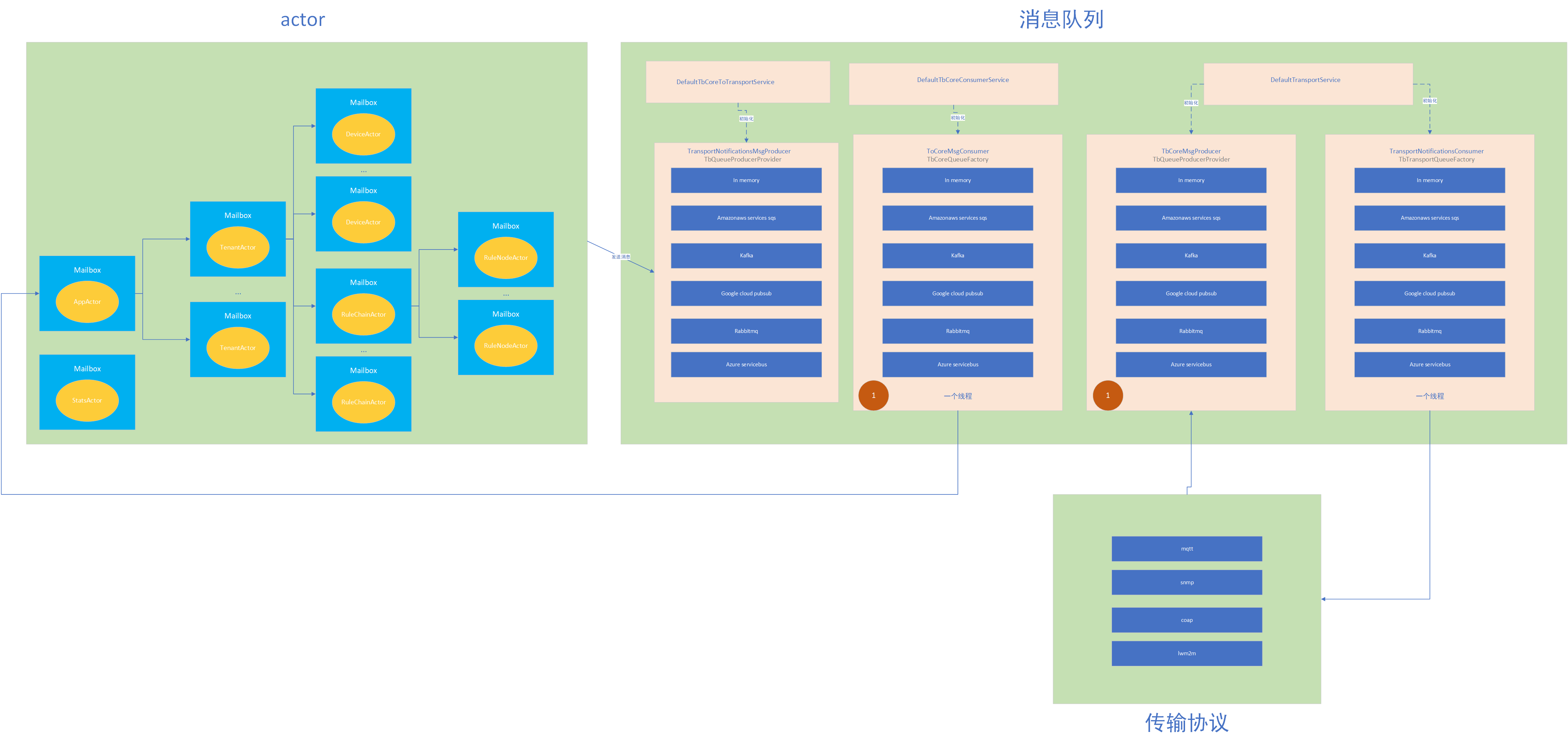

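In custom development, the most common task is sending messages to a device. Combining the handling logic above, a message bound for a device first passes through the AppActor, which forwards it to the TenantActor of the tenant that owns the device, and it finally reaches the DeviceActor for that device, which processes it. The direction in which messages travel between actors thus mirrors the actors' tree structure. In the end, the DeviceActor calls the sendToTransport() method of its processor, an instance of DeviceActorMessageProcessor: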

private void sendToTransport(GetAttributeResponseMsg responseMsg, SessionInfoProto sessionInfo) {

ToTransportMsg msg = ToTransportMsg.newBuilder()

.setSessionIdMSB(sessionInfo.getSessionIdMSB())

.setSessionIdLSB(sessionInfo.getSessionIdLSB())

.setGetAttributesResponse(responseMsg).build();

systemContext.getTbCoreToTransportService().process(sessionInfo.getNodeId(), msg); // 📌

}

getTbCoreToTransportService() returns an instance of DefaultTbCoreToTransportService, whose process method hands the message to a message queue producer.

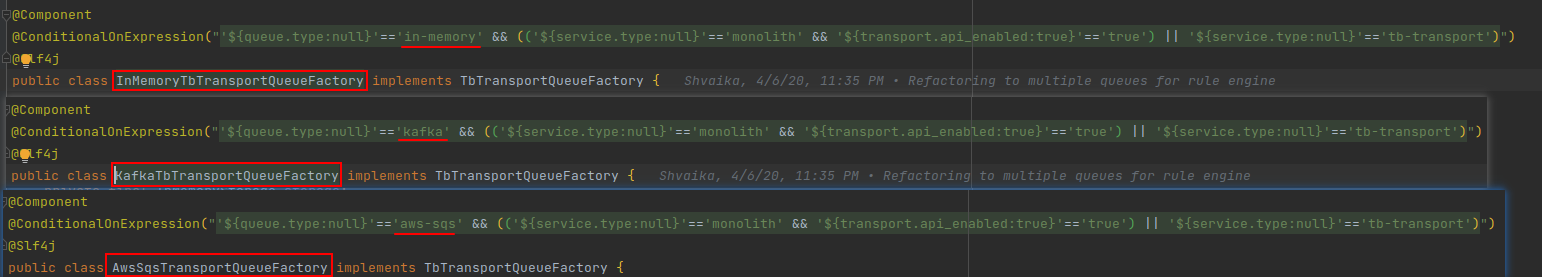

ThingsBoard integrates several message queues, so how does it switch between the different implementations?

public interface TbTransportQueueFactory extends TbUsageStatsClientQueueFactory {

TbQueueRequestTemplate<TbProtoQueueMsg<TransportApiRequestMsg>, TbProtoQueueMsg<TransportApiResponseMsg>> createTransportApiRequestTemplate();

TbQueueProducer<TbProtoQueueMsg<ToRuleEngineMsg>> createRuleEngineMsgProducer();

TbQueueProducer<TbProtoQueueMsg<ToCoreMsg>> createTbCoreMsgProducer();

TbQueueConsumer<TbProtoQueueMsg<ToTransportMsg>> createTransportNotificationsConsumer();

}

The factory pattern is used: each message queue has its own factory class

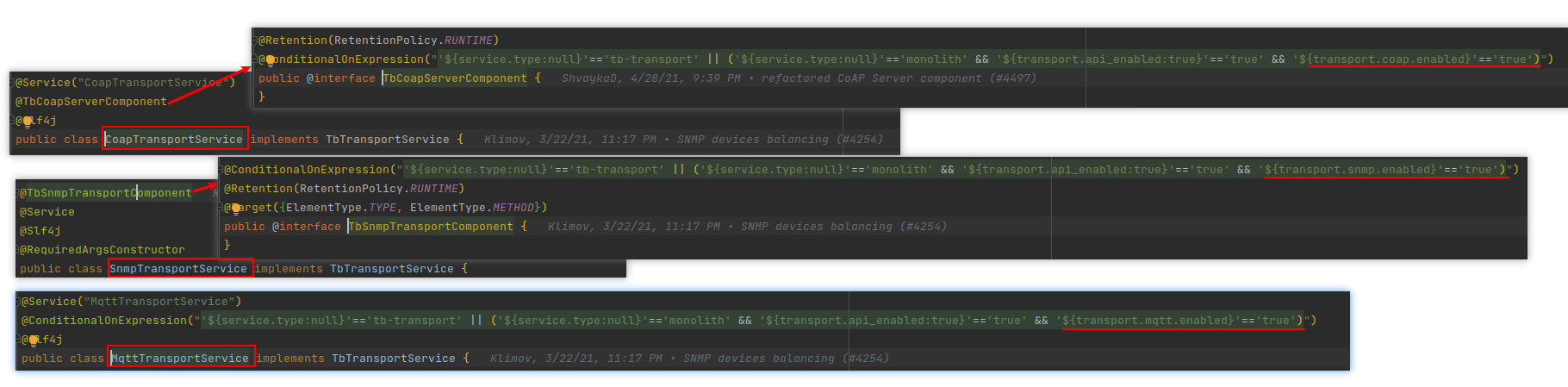

Looking at the annotations on each implementation class shows that each bean is created conditionally

The concrete message queue is selected in the project's configuration file, thingsboard.yml:

Once the message queue is determined, the corresponding factory class creates the queue's producers and consumers
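The idea of picking one factory from a configuration value can be sketched in plain Java with a lookup table. In ThingsBoard the selection is done through conditionally created beans, so this is only an analogy: QueueFactorySketch, the QueueFactory interface, its two implementations and the type values "in-memory" and "kafka" are assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

class QueueFactorySketch {
    /** Hypothetical factory interface; a real one would return producer/consumer objects. */
    interface QueueFactory {
        String createProducer(String topic);
    }

    static final class InMemoryFactory implements QueueFactory {
        @Override
        public String createProducer(String topic) {
            return "in-memory producer for " + topic;
        }
    }

    static final class KafkaFactory implements QueueFactory {
        @Override
        public String createProducer(String topic) {
            return "kafka producer for " + topic;
        }
    }

    // One entry per supported queue type; only the selected factory is ever instantiated.
    private static final Map<String, Supplier<QueueFactory>> FACTORIES = new HashMap<>();

    static {
        FACTORIES.put("in-memory", InMemoryFactory::new);
        FACTORIES.put("kafka", KafkaFactory::new);
    }

    /** Returns the factory for the configured queue type (the value read from the config file). */
    static QueueFactory forType(String queueType) {
        Supplier<QueueFactory> supplier = FACTORIES.get(queueType);
        if (supplier == null) {
            throw new IllegalArgumentException("Unsupported queue type: " + queueType);
        }
        return supplier.get();
    }

    public static void main(String[] args) {
        System.out.println(forType("kafka").createProducer("tb_core"));
    }
}
```

Callers depend only on the QueueFactory interface, so switching the queue implementation is a configuration change rather than a code change.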

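So far, the message flow is roughly actor -> message queue, but many details are still missing. For example, where do the actors receive their data from? Which consumers and producers does the message queue have?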

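Since the AppActor is known to be the entry point of the actor system, the next step is to find where its message-handling logic is invoked. That logic lives in doProcess, so start from AppActor's doProcess method and keep tracing upward through the possible callers. As expected, the call site turns up in the ActorSystemContext class: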

public void tell(TbActorMsg tbActorMsg) {

appActor.tell(tbActorMsg);

}

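Tracing further up eventually leads to DefaultTbCoreConsumerService, which assigns a message queue consumer to read data and deliver it to the AppActor. Applying this tracing approach throughout the code base yields the following flow chart: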

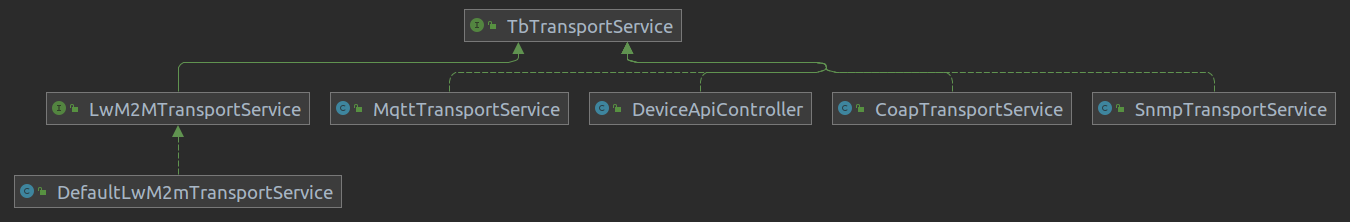
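The step "a queue consumer reads data and delivers it to the AppActor" can be sketched as a polling loop that forwards every message to the root actor's tell. This is a simplified illustration: ConsumerLoopSketch and pump are hypothetical names, a BlockingQueue stands in for the message queue, and the loop stops once the queue stays empty for the poll timeout, whereas a real consumer service keeps polling until it is shut down.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

class ConsumerLoopSketch {
    /**
     * Polls the queue and hands each message to appActorTell.
     * Returns the number of forwarded messages once the queue has been
     * empty for pollTimeoutMs milliseconds.
     */
    static int pump(BlockingQueue<String> queue, Consumer<String> appActorTell, long pollTimeoutMs) {
        int forwarded = 0;
        try {
            String msg;
            while ((msg = queue.poll(pollTimeoutMs, TimeUnit.MILLISECONDS)) != null) {
                appActorTell.accept(msg); // equivalent of telling the root actor
                forwarded++;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // stop pumping, keep the interrupt flag
        }
        return forwarded;
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>(List.of("telemetry", "attributes"));
        List<String> deliveredToAppActor = new ArrayList<>();
        int count = pump(queue, deliveredToAppActor::add, 10);
        System.out.println(count + " messages forwarded: " + deliveredToAppActor);
    }
}
```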

The diagram above shows that ThingsBoard supports several communication protocols, so how does it switch between the transport protocols?

First, ThingsBoard supports the following transport protocols:

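Next, the annotations at the top of each implementation class show that transport protocols are switched in much the same way as message queues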