Starting my Juejin growth journey! This is day 31 of my participation in the "Juejin Daily New Plan · December Writing Challenge".

Step into the register method in BPServiceActor.java:

void register(NamespaceInfo nsInfo) throws IOException {
  // Create the registration info
  DatanodeRegistration newBpRegistration = bpos.createRegistration();

  LOG.info(this + " beginning handshake with NN");

  while (shouldRun()) {
    try {
      // Use returned registration from namenode with updated fields
      // Send the registration info to the NN (the DN invokes the RPC
      // interface method, which actually executes on the NN)
      newBpRegistration = bpNamenode.registerDatanode(newBpRegistration);
      newBpRegistration.setNamespaceInfo(nsInfo);
      bpRegistration = newBpRegistration;
      break;
    } catch(EOFException e) { // namenode might have just restarted
      LOG.info("Problem connecting to server: " + nnAddr + " :"
          + e.getLocalizedMessage());
      sleepAndLogInterrupts(1000, "connecting to server");
    } catch(SocketTimeoutException e) { // namenode is busy
      LOG.info("Problem connecting to server: " + nnAddr);
      sleepAndLogInterrupts(1000, "connecting to server");
    }
  }
  … …
}
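The retry logic in register() boils down to a small loop: call the NN, return on success, and treat EOFException (the NN may have just restarted) and SocketTimeoutException (the NN is busy) as transient by sleeping before the next attempt. The following is a minimal sketch of that pattern under simplifying assumptions: NameNodeStub and RegistrationLoop are hypothetical names, a String stands in for DatanodeRegistration, and Thread.sleep stands in for sleepAndLogInterrupts.

```java
import java.io.EOFException;
import java.io.IOException;
import java.net.SocketTimeoutException;
import java.util.function.BooleanSupplier;

// Hypothetical stand-in for the NN RPC proxy (bpNamenode); not a Hadoop type.
interface NameNodeStub {
    String registerDatanode(String registration) throws IOException;
}

// Hypothetical sketch of the register() retry loop.
class RegistrationLoop {
    // Keeps calling the NN until registration succeeds or shouldRun turns false.
    // EOFException and SocketTimeoutException are retried after a fixed sleep;
    // any other IOException propagates to the caller.
    static String register(NameNodeStub nn, String registration,
                           BooleanSupplier shouldRun, long sleepMillis) throws IOException {
        while (shouldRun.getAsBoolean()) {
            try {
                return nn.registerDatanode(registration);
            } catch (EOFException | SocketTimeoutException e) {
                try {
                    Thread.sleep(sleepMillis);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    return null; // interrupted while waiting: give up
                }
            }
        }
        return null; // stopped before a successful registration
    }

    public static void main(String[] args) throws IOException {
        int[] calls = {0};
        // Fails twice with "NN busy", then accepts the registration.
        NameNodeStub flaky = reg -> {
            calls[0]++;
            if (calls[0] < 3) {
                throw new SocketTimeoutException("busy");
            }
            return reg + "-accepted";
        };
        System.out.println(register(flaky, "dn1", () -> true, 10)); // dn1-accepted
    }
}
```

Any other IOException escapes the loop, which matches the original, where only those two exception types are caught inside while (shouldRun()).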

Press Ctrl + N to find NameNodeRpcServer, open NameNodeRpcServer.java, and press Ctrl + F to search for registerDatanode:

public DatanodeRegistration registerDatanode(DatanodeRegistration nodeReg)
    throws IOException {
  checkNNStartup();
  verifySoftwareVersion(nodeReg);
  // Register the DN
  namesystem.registerDatanode(nodeReg);
  return nodeReg;
}

FSNamesystem.java

void registerDatanode(DatanodeRegistration nodeReg) throws IOException {
  writeLock();
  try {
    blockManager.registerDatanode(nodeReg);
  } finally {
    writeUnlock("registerDatanode");
  }
}

BlockManager.java

public void registerDatanode(DatanodeRegistration nodeReg)
    throws IOException {
  assert namesystem.hasWriteLock();
  datanodeManager.registerDatanode(nodeReg);
  bmSafeMode.checkSafeMode();
}
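On the NN side, FSNamesystem.registerDatanode holds the namesystem write lock for the whole registration, BlockManager.registerDatanode asserts that the lock is held, and handleHeartbeat (further on) runs under the read lock. Below is a rough sketch of that locking discipline using java.util.concurrent.locks.ReentrantReadWriteLock; MiniNamesystem and its methods are hypothetical, and FSNamesystem's real lock wraps more than this (writeUnlock takes an operation name, for example).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical miniature of the FSNamesystem locking discipline.
class MiniNamesystem {
    private final ReentrantReadWriteLock fsLock = new ReentrantReadWriteLock();
    private final Map<String, String> datanodes = new HashMap<>(); // uuid -> host

    // Like FSNamesystem.registerDatanode: mutate only under the write lock.
    void registerDatanode(String uuid, String host) {
        fsLock.writeLock().lock();
        try {
            addDatanodeLocked(uuid, host);
        } finally {
            fsLock.writeLock().unlock(); // always released, even on exceptions
        }
    }

    // Like the assert in BlockManager.registerDatanode: caller must hold the write lock.
    void addDatanodeLocked(String uuid, String host) {
        if (!fsLock.isWriteLockedByCurrentThread()) {
            throw new IllegalStateException("write lock not held");
        }
        datanodes.put(uuid, host);
    }

    // Lookup under the shared read lock (handleHeartbeat also runs under the read lock).
    boolean isRegistered(String uuid) {
        fsLock.readLock().lock();
        try {
            return datanodes.containsKey(uuid);
        } finally {
            fsLock.readLock().unlock();
        }
    }

    public static void main(String[] args) {
        MiniNamesystem ns = new MiniNamesystem();
        ns.registerDatanode("dn-1", "host-a");
        System.out.println(ns.isRegistered("dn-1")); // true
    }
}
```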

DatanodeManager.java

public void registerDatanode(DatanodeRegistration nodeReg)
    throws DisallowedDatanodeException, UnresolvedTopologyException {
  ... ...
  // register new datanode
  addDatanode(nodeDescr);
  blockManager.getBlockReportLeaseManager().register(nodeDescr);
  // also treat the registration message as a heartbeat
  // no need to update its timestamp
  // because it is done when the descriptor is created
  // Add the DN to heartbeat management
  heartbeatManager.addDatanode(nodeDescr);
  heartbeatManager.updateDnStat(nodeDescr);
  incrementVersionCount(nodeReg.getSoftwareVersion());
  startAdminOperationIfNecessary(nodeDescr);
  success = true;
  ... ...
}

void addDatanode(final DatanodeDescriptor node) {
  // To keep host2DatanodeMap consistent with datanodeMap,
  // remove from host2DatanodeMap the datanodeDescriptor removed
  // from datanodeMap before adding node to host2DatanodeMap.
  synchronized(this) {
    host2DatanodeMap.remove(datanodeMap.put(node.getDatanodeUuid(), node));
  }
  networktopology.add(node); // may throw InvalidTopologyException
  host2DatanodeMap.add(node);
  checkIfClusterIsNowMultiRack(node);
  resolveUpgradeDomain(node);
  … …
}
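The key line in addDatanode is host2DatanodeMap.remove(datanodeMap.put(...)): Map.put returns the value previously stored under the key, so when a DN with the same UUID registers again, its stale descriptor is removed from the host index before the new one is added. A minimal sketch of the same idea with plain HashMaps; DnNode and DnRegistry are hypothetical, and for simplicity the whole update is synchronized, whereas the original only synchronizes the put/remove pair.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical stand-in for DatanodeDescriptor; identity equality is enough here.
class DnNode {
    final String uuid;
    final String host;

    DnNode(String uuid, String host) {
        this.uuid = uuid;
        this.host = host;
    }
}

// Hypothetical registry with two indexes, like datanodeMap and host2DatanodeMap.
class DnRegistry {
    private final Map<String, DnNode> datanodeMap = new HashMap<>();     // uuid -> node
    private final Map<String, Set<DnNode>> host2Nodes = new HashMap<>(); // host -> nodes

    synchronized void addDatanode(DnNode node) {
        // put() returns the descriptor previously stored under this uuid (or null),
        // so a re-registering DN's stale entry can be dropped from the host index.
        DnNode previous = datanodeMap.put(node.uuid, node);
        if (previous != null) {
            Set<DnNode> onHost = host2Nodes.get(previous.host);
            if (onHost != null) {
                onHost.remove(previous);
                if (onHost.isEmpty()) {
                    host2Nodes.remove(previous.host);
                }
            }
        }
        host2Nodes.computeIfAbsent(node.host, h -> new HashSet<>()).add(node);
    }

    synchronized int nodesOnHost(String host) {
        Set<DnNode> onHost = host2Nodes.get(host);
        return onHost == null ? 0 : onHost.size();
    }

    synchronized int size() {
        return datanodeMap.size();
    }

    public static void main(String[] args) {
        DnRegistry r = new DnRegistry();
        r.addDatanode(new DnNode("u1", "hostA"));
        r.addDatanode(new DnNode("u1", "hostB")); // same DN re-registers from a new host
        System.out.println(r.nodesOnHost("hostA") + " " + r.nodesOnHost("hostB")); // 0 1
    }
}
```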

3.7 Sending Heartbeats to the NN

In BPServiceActor.java, step into the offerService method called from the run method:

private void offerService() throws Exception {
  while (shouldRun()) {
    ... ...
    HeartbeatResponse resp = null;
    if (sendHeartbeat) {
      boolean requestBlockReportLease = (fullBlockReportLeaseId == 0) &&
          scheduler.isBlockReportDue(startTime);
      if (!dn.areHeartbeatsDisabledForTests()) {
        // Send the heartbeat
        resp = sendHeartBeat(requestBlockReportLease);
        assert resp != null;
        if (resp.getFullBlockReportLeaseId() != 0) {
          if (fullBlockReportLeaseId != 0) {
            ... ...
          }
          fullBlockReportLeaseId = resp.getFullBlockReportLeaseId();
        }
        ... ...
      }
    }
    ... ...
  }
}
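The lease handling in offerService reduces to two rules: ask for a full block report lease only when none is held (fullBlockReportLeaseId == 0) and a block report is due, and adopt any non-zero lease ID the NN returns in the heartbeat response. A sketch of just that bookkeeping; BlockReportLease is a hypothetical class, and the parts of offerService elided above are not modelled.

```java
// Hypothetical model of the block-report lease bookkeeping in offerService().
class BlockReportLease {
    private long fullBlockReportLeaseId = 0; // 0 means "no lease held"

    // Mirrors: (fullBlockReportLeaseId == 0) && scheduler.isBlockReportDue(startTime)
    boolean shouldRequestLease(boolean blockReportDue) {
        return fullBlockReportLeaseId == 0 && blockReportDue;
    }

    // Mirrors the handling of resp.getFullBlockReportLeaseId(): 0 means nothing
    // was granted; a non-zero ID replaces whatever was held before.
    void onHeartbeatResponse(long grantedLeaseId) {
        if (grantedLeaseId != 0) {
            fullBlockReportLeaseId = grantedLeaseId;
        }
    }

    long leaseId() {
        return fullBlockReportLeaseId;
    }

    public static void main(String[] args) {
        BlockReportLease lease = new BlockReportLease();
        boolean ask = lease.shouldRequestLease(true); // no lease held and a report is due
        lease.onHeartbeatResponse(7L);
        System.out.println(ask + " " + lease.leaseId() + " " + lease.shouldRequestLease(true)); // true 7 false
    }
}
```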

HeartbeatResponse sendHeartBeat(boolean requestBlockReportLease)
    throws IOException {
  ... ...
  // Send the heartbeat to the NN through the NN's RPC client proxy
  HeartbeatResponse response = bpNamenode.sendHeartbeat(bpRegistration,
      reports,
      dn.getFSDataset().getCacheCapacity(),
      dn.getFSDataset().getCacheUsed(),
      dn.getXmitsInProgress(),
      dn.getXceiverCount(),
      numFailedVolumes,
      volumeFailureSummary,
      requestBlockReportLease,
      slowPeers,
      slowDisks);
  ... ...
}

Press Ctrl + N to find NameNodeRpcServer, open NameNodeRpcServer.java, and press Ctrl + F to search for sendHeartbeat:

public HeartbeatResponse sendHeartbeat(DatanodeRegistration nodeReg,
    StorageReport[] report, long dnCacheCapacity, long dnCacheUsed,
    int xmitsInProgress, int xceiverCount,
    int failedVolumes, VolumeFailureSummary volumeFailureSummary,
    boolean requestFullBlockReportLease,
    @Nonnull SlowPeerReports slowPeers,
    @Nonnull SlowDiskReports slowDisks) throws IOException {
  checkNNStartup();
  verifyRequest(nodeReg);
  // Handle the heartbeat sent by the DN
  return namesystem.handleHeartbeat(nodeReg, report,
      dnCacheCapacity, dnCacheUsed, xceiverCount, xmitsInProgress,
      failedVolumes, volumeFailureSummary, requestFullBlockReportLease,
      slowPeers, slowDisks);
}

FSNamesystem.java

HeartbeatResponse handleHeartbeat(DatanodeRegistration nodeReg,
    StorageReport[] reports, long cacheCapacity, long cacheUsed,
    int xceiverCount, int xmitsInProgress, int failedVolumes,
    VolumeFailureSummary volumeFailureSummary,
    boolean requestFullBlockReportLease,
    @Nonnull SlowPeerReports slowPeers,
    @Nonnull SlowDiskReports slowDisks) throws IOException {
  readLock();
  try {
    //get datanode commands
    final int maxTransfer = blockManager.getMaxReplicationStreams()
        - xmitsInProgress;
    // Process the heartbeat sent by the DN
    DatanodeCommand[] cmds = blockManager.getDatanodeManager().handleHeartbeat(
        nodeReg, reports, getBlockPoolId(), cacheCapacity, cacheUsed,
        xceiverCount, maxTransfer, failedVolumes, volumeFailureSummary,
        slowPeers, slowDisks);
    long blockReportLeaseId = 0;
    if (requestFullBlockReportLease) {
      blockReportLeaseId = blockManager.requestBlockReportLeaseId(nodeReg);
    }
    //create ha status
    final NNHAStatusHeartbeat haState = new NNHAStatusHeartbeat(
        haContext.getState().getServiceState(),
        getFSImage().getCorrectLastAppliedOrWrittenTxId());
    // Reply to the DN's heartbeat
    return new HeartbeatResponse(cmds, haState, rollingUpgradeInfo,
        blockReportLeaseId);
  } finally {
    readUnlock("handleHeartbeat");
  }
}
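handleHeartbeat computes how much replication work the DN may still take on as maxTransfer = getMaxReplicationStreams() - xmitsInProgress, and passes it to DatanodeManager.handleHeartbeat. The sketch below shows that arithmetic plus a consumer that hands out at most that many pending items. How DatanodeManager actually uses maxTransfers is elided in the code above, so TransferBudget and pollUpTo are hypothetical, for illustration only.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

// Hypothetical helper illustrating the maxTransfer budget.
class TransferBudget {
    // Same arithmetic as FSNamesystem.handleHeartbeat.
    static int maxTransfer(int maxReplicationStreams, int xmitsInProgress) {
        return maxReplicationStreams - xmitsInProgress;
    }

    // Hypothetical consumer: hand out at most maxTransfer pending items.
    // A zero or negative budget (DN already saturated) yields nothing.
    static <T> List<T> pollUpTo(Deque<T> pending, int maxTransfer) {
        List<T> out = new ArrayList<>();
        while (out.size() < maxTransfer && !pending.isEmpty()) {
            out.add(pending.poll());
        }
        return out;
    }

    public static void main(String[] args) {
        Deque<String> pending = new ArrayDeque<>(Arrays.asList("blk_1", "blk_2", "blk_3"));
        int budget = maxTransfer(2, 1); // 2 allowed streams, 1 already in progress
        System.out.println(pollUpTo(pending, budget)); // [blk_1]
    }
}
```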

Step into handleHeartbeat in DatanodeManager.java:

public DatanodeCommand[] handleHeartbeat(DatanodeRegistration nodeReg,
    StorageReport[] reports, final String blockPoolId,
    long cacheCapacity, long cacheUsed, int xceiverCount,
    int maxTransfers, int failedVolumes,
    VolumeFailureSummary volumeFailureSummary,
    @Nonnull SlowPeerReports slowPeers,
    @Nonnull SlowDiskReports slowDisks) throws IOException {
  ... ...
  heartbeatManager.updateHeartbeat(nodeinfo, reports, cacheCapacity,
      cacheUsed, xceiverCount, failedVolumes, volumeFailureSummary);
  ... ...
}

HeartbeatManager.java

synchronized void updateHeartbeat(final DatanodeDescriptor node,
    StorageReport[] reports, long cacheCapacity, long cacheUsed,
    int xceiverCount, int failedVolumes,
    VolumeFailureSummary volumeFailureSummary) {
  stats.subtract(node);
  blockManager.updateHeartbeat(node, reports, cacheCapacity, cacheUsed,
      xceiverCount, failedVolumes, volumeFailureSummary);
  stats.add(node);
}
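HeartbeatManager.updateHeartbeat keeps the cluster-wide totals consistent with a subtract, update, add sequence: the node's previous numbers are removed from stats, the new report is applied, and the node's new numbers are added back, all inside one synchronized method. A minimal sketch of that pattern; NodeUsage, ClusterUsage and MiniHeartbeatManager are hypothetical and track only two counters.

```java
// Hypothetical per-node counters (the real ones live on DatanodeDescriptor).
class NodeUsage {
    long capacity;
    long dfsUsed;
}

// Hypothetical cluster-wide totals (the real ones are HeartbeatManager's stats).
class ClusterUsage {
    long capacity;
    long dfsUsed;

    void add(NodeUsage n) {
        capacity += n.capacity;
        dfsUsed += n.dfsUsed;
    }

    void subtract(NodeUsage n) {
        capacity -= n.capacity;
        dfsUsed -= n.dfsUsed;
    }
}

class MiniHeartbeatManager {
    final ClusterUsage stats = new ClusterUsage();

    synchronized void addDatanode(NodeUsage n) {
        stats.add(n);
    }

    // Take the node's old numbers out of the totals, apply the new report,
    // then add the new numbers back, so the totals never double-count.
    synchronized void updateHeartbeat(NodeUsage n, long capacity, long dfsUsed) {
        stats.subtract(n);
        n.capacity = capacity;
        n.dfsUsed = dfsUsed;
        stats.add(n);
    }

    public static void main(String[] args) {
        MiniHeartbeatManager hm = new MiniHeartbeatManager();
        NodeUsage dn = new NodeUsage();
        dn.capacity = 100;
        dn.dfsUsed = 10;
        hm.addDatanode(dn);
        hm.updateHeartbeat(dn, 100, 40);
        System.out.println(hm.stats.capacity + " " + hm.stats.dfsUsed); // 100 40
    }
}
```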

BlockManager.java

void updateHeartbeat(DatanodeDescriptor node, StorageReport[] reports,
    long cacheCapacity, long cacheUsed, int xceiverCount, int failedVolumes,
    VolumeFailureSummary volumeFailureSummary) {
  for (StorageReport report: reports) {
    providedStorageMap.updateStorage(node, report.getStorage());
  }
  node.updateHeartbeat(reports, cacheCapacity, cacheUsed, xceiverCount,
      failedVolumes, volumeFailureSummary);
}

DatanodeDescriptor.java

void updateHeartbeat(StorageReport[] reports, long cacheCapacity,
    long cacheUsed, int xceiverCount, int volFailures,
    VolumeFailureSummary volumeFailureSummary) {
  updateHeartbeatState(reports, cacheCapacity, cacheUsed, xceiverCount,
      volFailures, volumeFailureSummary);
  heartbeatedSinceRegistration = true;
}

void updateHeartbeatState(StorageReport[] reports, long cacheCapacity,
    long cacheUsed, int xceiverCount, int volFailures,
    VolumeFailureSummary volumeFailureSummary) {
  // Update storage statistics
  updateStorageStats(reports, cacheCapacity, cacheUsed, xceiverCount,
      volFailures, volumeFailureSummary);
  // Update the heartbeat timestamps
  setLastUpdate(Time.now());
  setLastUpdateMonotonic(Time.monotonicNow());
  rollBlocksScheduled(getLastUpdateMonotonic());
}
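updateHeartbeatState records two timestamps: setLastUpdate(Time.now()) stores wall-clock time, while setLastUpdateMonotonic(Time.monotonicNow()) stores a monotonic reading, which is the one suited to measuring elapsed time because the wall clock can jump when the system time is adjusted. The sketch below shows how such a monotonic timestamp could back a liveness check; HeartbeatClock and the expireIntervalMs parameter are hypothetical, and times are passed in explicitly so the check is easy to test.

```java
// Hypothetical model of the two timestamps recorded by updateHeartbeatState.
class HeartbeatClock {
    private long lastUpdate;          // wall-clock ms (like Time.now()), for display
    private long lastUpdateMonotonic; // monotonic ms (like Time.monotonicNow()), for interval math

    void onHeartbeat(long wallNowMs, long monotonicNowMs) {
        lastUpdate = wallNowMs;
        lastUpdateMonotonic = monotonicNowMs;
    }

    // Expired once more than expireIntervalMs has passed on the monotonic clock.
    boolean isExpired(long monotonicNowMs, long expireIntervalMs) {
        return monotonicNowMs - lastUpdateMonotonic > expireIntervalMs;
    }

    long getLastUpdate() {
        return lastUpdate;
    }

    // Monotonic milliseconds derived from System.nanoTime().
    static long monotonicNowMs() {
        return System.nanoTime() / 1_000_000L;
    }

    public static void main(String[] args) {
        HeartbeatClock clock = new HeartbeatClock();
        clock.onHeartbeat(System.currentTimeMillis(), monotonicNowMs());
        System.out.println(clock.isExpired(monotonicNowMs(), 30_000L)); // false right after a heartbeat
    }
}
```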

private void updateStorageStats(StorageReport[] reports, long cacheCapacity,
    long cacheUsed, int xceiverCount, int volFailures,
    VolumeFailureSummary volumeFailureSummary) {
  long totalCapacity = 0;
  long totalRemaining = 0;
  long totalBlockPoolUsed = 0;
  long totalDfsUsed = 0;
  long totalNonDfsUsed = 0;
  … …
  setCacheCapacity(cacheCapacity);
  setCacheUsed(cacheUsed);
  setXceiverCount(xceiverCount);
  this.volumeFailures = volFailures;
  this.volumeFailureSummary = volumeFailureSummary;
  for (StorageReport report : reports) {
    DatanodeStorageInfo storage =
        storageMap.get(report.getStorage().getStorageID());
    if (checkFailedStorages) {
      failedStorageInfos.remove(storage);
    }
    storage.receivedHeartbeat(report);
    // skip accounting for capacity of PROVIDED storages!
    if (StorageType.PROVIDED.equals(storage.getStorageType())) {
      continue;
    }
    totalCapacity += report.getCapacity();
    totalRemaining += report.getRemaining();
    totalBlockPoolUsed += report.getBlockPoolUsed();
    totalDfsUsed += report.getDfsUsed();
    totalNonDfsUsed += report.getNonDfsUsed();
  }
  // Update total metrics for the node.
  setCapacity(totalCapacity);
  setRemaining(totalRemaining);
  setBlockPoolUsed(totalBlockPoolUsed);
  setDfsUsed(totalDfsUsed);
  setNonDfsUsed(totalNonDfsUsed);
  if (checkFailedStorages) {
    updateFailedStorage(failedStorageInfos);
  }
  long storageMapSize;
  synchronized (storageMap) {
    storageMapSize = storageMap.size();
  }
  if (storageMapSize != reports.length) {
    pruneStorageMap(reports);
  }
}
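updateStorageStats sums capacity, remaining and used space over all storage reports of a DN, skipping PROVIDED storages, and then stores the totals on the descriptor. A minimal sketch of that aggregation; StorageKind is a hypothetical subset of Hadoop's StorageType enum, StorageReportLite is a trimmed-down stand-in for StorageReport, and only three of the five totals are kept.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical subset of Hadoop's StorageType values.
enum StorageKind { DISK, SSD, ARCHIVE, PROVIDED }

// Hypothetical, trimmed-down stand-in for StorageReport.
class StorageReportLite {
    final StorageKind kind;
    final long capacity;
    final long remaining;
    final long dfsUsed;

    StorageReportLite(StorageKind kind, long capacity, long remaining, long dfsUsed) {
        this.kind = kind;
        this.capacity = capacity;
        this.remaining = remaining;
        this.dfsUsed = dfsUsed;
    }
}

class StorageTotals {
    long capacity;
    long remaining;
    long dfsUsed;

    static StorageTotals sum(List<StorageReportLite> reports) {
        StorageTotals t = new StorageTotals();
        for (StorageReportLite r : reports) {
            // Skip PROVIDED storages, as updateStorageStats does.
            if (r.kind == StorageKind.PROVIDED) {
                continue;
            }
            t.capacity += r.capacity;
            t.remaining += r.remaining;
            t.dfsUsed += r.dfsUsed;
        }
        return t;
    }

    public static void main(String[] args) {
        List<StorageReportLite> reports = Arrays.asList(
                new StorageReportLite(StorageKind.DISK, 100, 40, 50),
                new StorageReportLite(StorageKind.SSD, 50, 10, 30),
                new StorageReportLite(StorageKind.PROVIDED, 1000, 1000, 0));
        StorageTotals t = sum(reports);
        System.out.println(t.capacity + " " + t.remaining + " " + t.dfsUsed); // 150 50 80
    }
}
```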

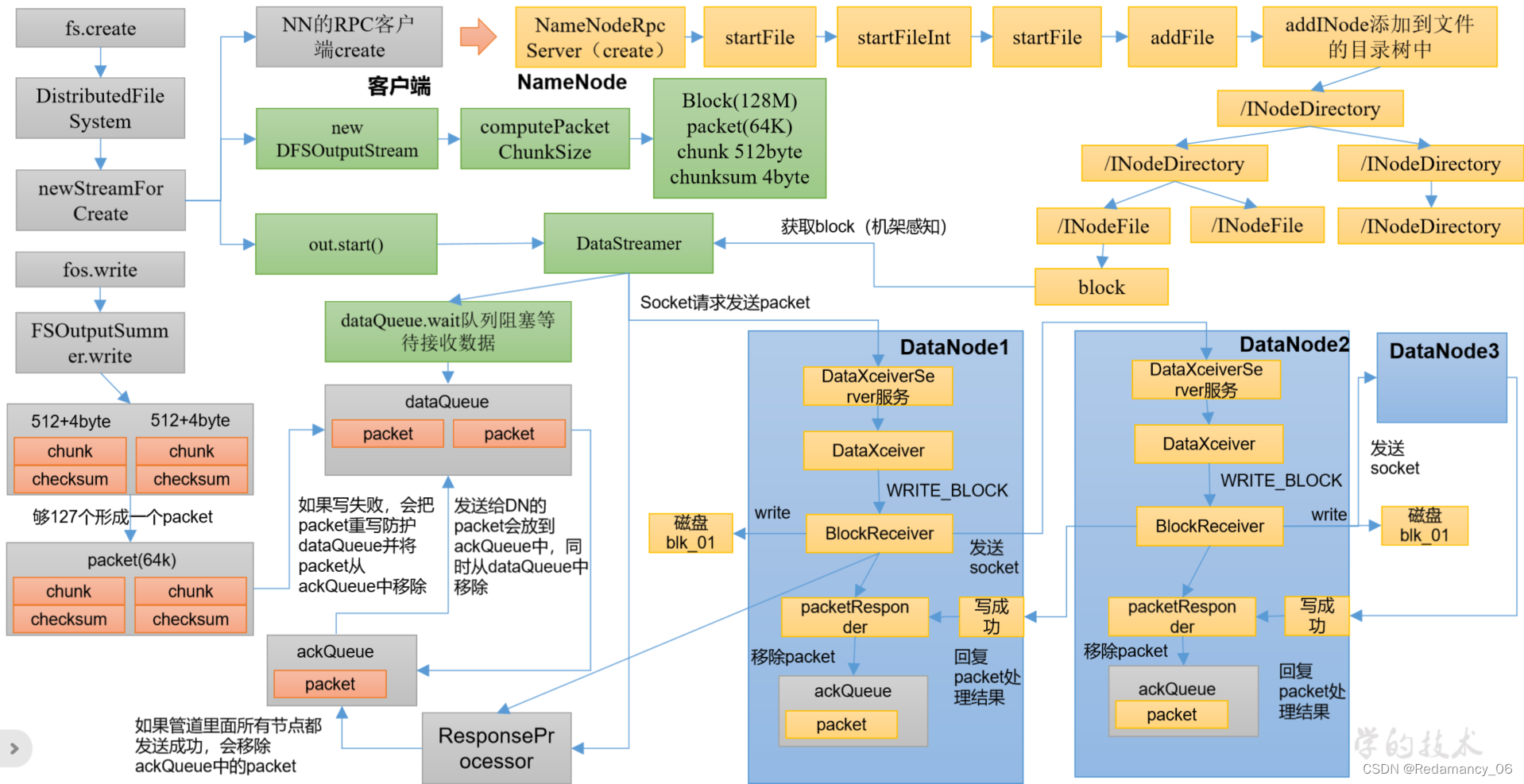

Chapter 4 HDFS Upload Source Code Analysis

4.1 HDFS Write Data Flow

4.2 HDFS Upload Source Code Analysis

4.3 The create Process

Add the dependencies:

<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>3.1.3</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>3.1.3</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs-client</artifactId>
        <version>3.1.3</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>1.7.30</version>
    </dependency>
</dependencies>

4.3.1 The Client Sends a Create Request to the NN

User-written code:

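@Test
public void testPut2() throws IOException {
  // fs is a FileSystem field initialized elsewhere in the test class (not shown)
  // try-with-resources closes the stream; without close() the buffered data may not reach HDFS
  try (FSDataOutputStream fos = fs.create(new Path("/input"))) {
    fos.write("hello world".getBytes());
  }
}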

FileSystem.java

public FSDataOutputStream create(Path f) throws IOException {
  return create(f, true);
}

public FSDataOutputStream create(Path f, boolean overwrite)
    throws IOException {
  return create(f, overwrite,
      getConf().getInt(IO_FILE_BUFFER_SIZE_KEY,
          IO_FILE_BUFFER_SIZE_DEFAULT),
      getDefaultReplication(f),
      getDefaultBlockSize(f));
}

public FSDataOutputStream create(Path f,
    boolean overwrite,
    int bufferSize,
    short replication,
    long blockSize) throws IOException {
  return create(f, overwrite, bufferSize, replication, blockSize, null);
}

public FSDataOutputStream create(Path f,
    boolean overwrite,
    int bufferSize,
    short replication,
    long blockSize,
    Progressable progress
    ) throws IOException {
  return this.create(f, FsCreateModes.applyUMask(
      FsPermission.getFileDefault(), FsPermission.getUMask(getConf())),
      overwrite, bufferSize, replication, blockSize, progress);
}

public abstract FSDataOutputStream create(Path f,
    FsPermission permission,
    boolean overwrite,
    int bufferSize,
    short replication,
    long blockSize,
    Progressable progress) throws IOException;
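The create overloads in FileSystem form a telescoping chain: each one fills in a default (overwrite = true, buffer size, replication, block size, a null Progressable, the umask-adjusted default permission) and delegates to a longer overload, ending at the abstract method that a concrete file system such as DistributedFileSystem implements. Here is a sketch of the same chain under stated assumptions: MiniFileSystem and DescribingFileSystem are hypothetical, the Progressable step is folded away, permissions are plain ints, and 4096 bytes, 3 replicas and 128 MB are hard-coded as typical HDFS defaults instead of being read from the configuration. The umask step clears the permission bits set in the umask, so 0666 with the common umask 022 gives 0644.

```java
// Hypothetical mini file system reproducing the telescoping create(...) overloads.
abstract class MiniFileSystem {
    // Assumed defaults for illustration; the real FileSystem reads these from config.
    static final int DEFAULT_BUFFER_SIZE = 4096;
    static final short DEFAULT_REPLICATION = 3;
    static final long DEFAULT_BLOCK_SIZE = 128L * 1024 * 1024;
    static final int FILE_DEFAULT_PERMISSION = 0666; // rw-rw-rw- before the umask

    private final int umask;

    MiniFileSystem(int umask) {
        this.umask = umask;
    }

    String create(String path) {
        return create(path, true); // default: overwrite
    }

    String create(String path, boolean overwrite) {
        return create(path, overwrite, DEFAULT_BUFFER_SIZE, DEFAULT_REPLICATION, DEFAULT_BLOCK_SIZE);
    }

    String create(String path, boolean overwrite, int bufferSize,
                  short replication, long blockSize) {
        // Clear the bits set in the umask, e.g. 0666 & ~022 == 0644.
        return create(path, FILE_DEFAULT_PERMISSION & ~umask,
                overwrite, bufferSize, replication, blockSize);
    }

    // Terminal overload: abstract, as in FileSystem; subclasses do the real work.
    abstract String create(String path, int permission, boolean overwrite,
                           int bufferSize, short replication, long blockSize);
}

// Hypothetical subclass that just describes the fully resolved request.
class DescribingFileSystem extends MiniFileSystem {
    DescribingFileSystem(int umask) {
        super(umask);
    }

    @Override
    String create(String path, int permission, boolean overwrite,
                  int bufferSize, short replication, long blockSize) {
        return String.format("%s perm=%o overwrite=%b buffer=%d replication=%d blockSize=%d",
                path, permission, overwrite, bufferSize, replication, blockSize);
    }

    public static void main(String[] args) {
        System.out.println(new DescribingFileSystem(0022).create("/input"));
        // /input perm=644 overwrite=true buffer=4096 replication=3 blockSize=134217728
    }
}
```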