itoken Project Overview

Development Environment

- Operating system: Windows 10 Enterprise

- IDE: IntelliJ IDEA

- Database: MySQL 5.7.22

- Java SDK: Oracle JDK 1.8.152

Deployment Environment

- Operating system: Linux Ubuntu Server 16.04 x64

- Virtualization: VMware + Docker

Project Management Tools

- Build: Maven + Nexus

- Source control: Git + GitLab

- Image management: Docker Registry

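Main Backend Technology Stack

- Core frameworks: Spring Boot + Spring Cloud

- View framework: Spring MVC

- Template engine: Thymeleaf

- ORM framework: tk.mybatis (simplifies MyBatis development)

- Database connection pool: Alibaba Druid

- Database cache: Redis Sentinel

- Message broker: RabbitMQ

- API documentation engine: Swagger2 (generates RESTful API documentation)

- Full-text search engine: Elasticsearch

- Distributed tracing: Zipkin

- Distributed file system: Alibaba FastDFS

- Distributed service monitoring: Spring Boot Admin

- Distributed coordination (service registry): Spring Cloud Eureka

- Distributed configuration center: Spring Cloud Config

- Distributed logging: ELK (Elasticsearch + Logstash + Kibana)

- Reverse proxy and load balancing: Nginx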

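Main Frontend Technology Stack

- Frontend framework: Bootstrap + jQuery

- Frontend template: AdminLTE

Automated Operations

- Continuous integration: GitLab

- Continuous delivery: Jenkins

- Container orchestration: Kubernetes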

Project Architecture

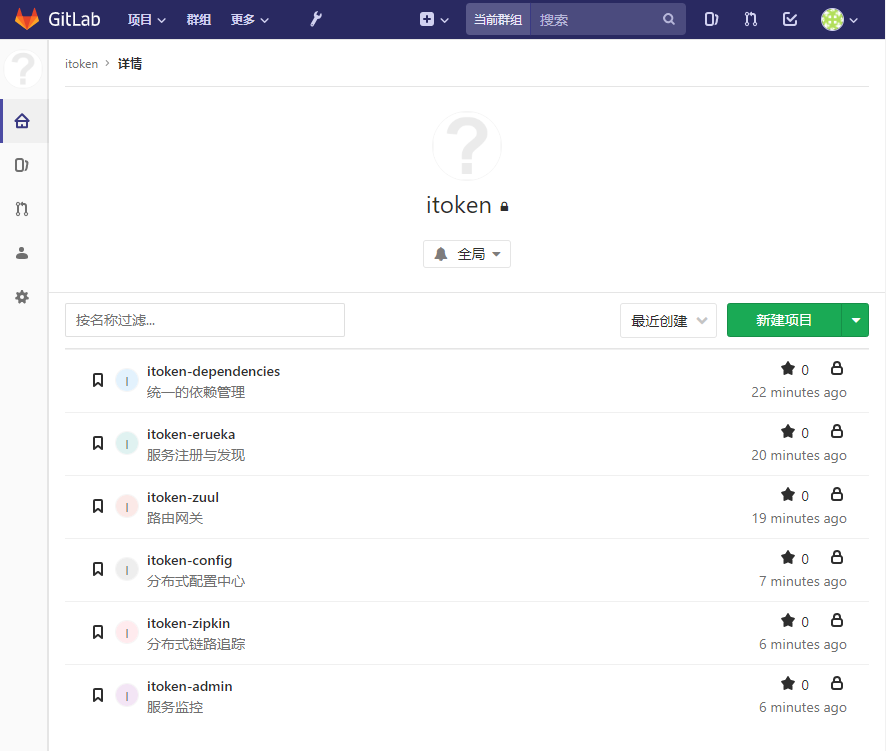

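Create the Project Group and Projects

Deploying the Spring Cloud Base Services

- A cluster needs at least 3 machines

- Pull the project onto the Linux server

- Package the project:

mvn clean package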

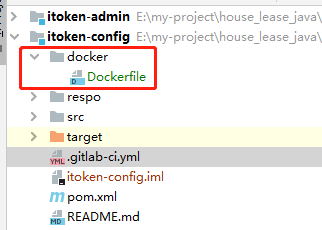

- Write the Dockerfile for the image

FROM openjdk:8-jre
RUN mkdir /app
COPY itoken-config-1.0.0-SNAPSHOT.jar /app/
CMD java -jar /app/itoken-config-1.0.0-SNAPSHOT.jar --spring.profiles.active=prod
EXPOSE 8888

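- Build the project image and push it to the registry

docker build -t 47.112.215.6:5000/itoken-config .
docker push 47.112.215.6:5000/itoken-config

- To check that the image is correct, either open a shell in a container and start the jar by hand:

docker run -it 47.112.215.6:5000/itoken-config /bin/bash
java -jar /app/itoken-config-1.0.0-SNAPSHOT.jar --spring.profiles.active=prod

or run the image directly with its port published:

docker run -p 8888:8888 47.112.215.6:5000/itoken-config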

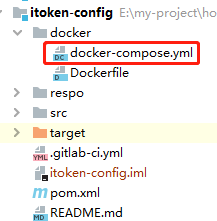

- Create the docker-compose.yml file

- vim docker-compose.yml

- Write the docker-compose file contents

## Configuring a single application

version: '3.1'
services:
  itoken-config:
    restart: always
    image: 47.112.215.6:5000/itoken-config
    container_name: itoken-config
    ports:
      - 8888:8888

## Configuring a cluster

version: '3.1'
services:
  itoken-eureka-1:
    restart: always
    image: 47.112.215.6:5000/itoken-eureka
    container_name: itoken-eureka-1
    ports:
      - 8761:8761
  itoken-eureka-2:
    restart: always
    image: 47.112.215.6:5000/itoken-eureka
    container_name: itoken-eureka-2
    ports:
      - 8861:8761
  itoken-eureka-3:
    restart: always
    image: 47.112.215.6:5000/itoken-eureka
    container_name: itoken-eureka-3
    ports:
      - 8961:8761

- Follow the container's startup logs (use your own container ID or name)

docker logs -f ac70f745fbbc

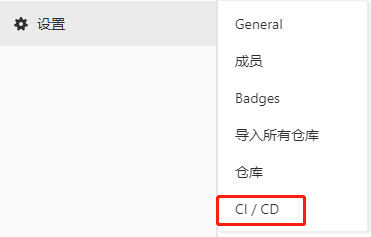

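Setting Up Continuous Integration

- Continuous integration means integrating code into the mainline frequently (several times a day). It has two benefits:

## Errors are found quickly. Each small update is integrated into the mainline as soon as it is done, so errors surface early and are easier to locate.

## Branches do not drift far from the mainline. If integration is infrequent while the mainline keeps changing, later integration becomes harder and can become impossible.

- Pipeline

A pipeline is essentially one build run. It can contain several steps, such as installing dependencies, running tests, compiling, deploying to a test server, and deploying to production.

Any commit, or the merge of a merge request, can trigger a pipeline.

- Stages

Stages are the build phases, in other words the steps described above. A pipeline can define multiple stages, which behave as follows:

1. They run in order

2. The build succeeds only when every stage completes

3. If a stage fails, the later stages do not run and the build fails.

- Jobs

Jobs are the units of work executed inside a stage. A stage can define multiple jobs, which behave as follows:

1. Jobs in the same stage run in parallel

2. A stage succeeds only when all of its jobs succeed

3. If any job fails, its stage fails, and therefore the build fails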

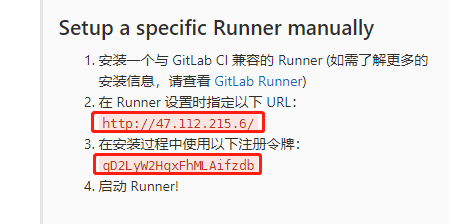
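To make the stage and job rules above concrete, here is a minimal Java sketch that simulates them; it is not GitLab Runner code. Stages run one after another, the jobs of one stage run in parallel on a thread pool, and the first failing stage stops the build. The names PipelineSketch, Job, Stage and run are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.BooleanSupplier;

class PipelineSketch {
    /** A job: a named unit of work that returns true on success. */
    static final class Job {
        final String name;
        final BooleanSupplier task;
        Job(String name, BooleanSupplier task) { this.name = name; this.task = task; }
    }

    /** A stage: a named group of jobs. */
    static final class Stage {
        final String name;
        final List<Job> jobs;
        Stage(String name, List<Job> jobs) { this.name = name; this.jobs = jobs; }
    }

    /**
     * Runs stages strictly in order; the jobs of one stage run in parallel.
     * Records each started stage in executedStages and stops at the first failing stage.
     * Returns true only if every stage succeeded.
     */
    static boolean run(List<Stage> stages, List<String> executedStages) {
        ExecutorService pool = Executors.newCachedThreadPool();
        try {
            for (Stage stage : stages) {
                executedStages.add(stage.name);
                List<Callable<Boolean>> calls = new ArrayList<>();
                for (Job job : stage.jobs) {
                    calls.add(job.task::getAsBoolean);
                }
                boolean stageOk = true;
                // invokeAll blocks until every job of this stage has finished
                for (Future<Boolean> result : pool.invokeAll(calls)) {
                    try {
                        stageOk &= result.get();
                    } catch (ExecutionException e) {
                        stageOk = false; // a job that throws counts as failed
                    }
                }
                if (!stageOk) {
                    return false; // a failed stage fails the build; later stages never start
                }
            }
            return true;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            pool.shutdown();
        }
    }
}
```

With the .gitlab-ci.yml used later in these notes, a failing build job would likewise keep the push, run and clean stages from starting.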

Running CI with GitLab (GitLab Runner)

- Create the docker-compose.yml for the GitLab Runner CI container

version: '3.1'
services:
  gitlab-runner:
    # Build the image from a directory (build: <directory name>)
    build: environment
    restart: always
    container_name: gitlab-runner
    # Run the container in privileged mode (full root access)
    privileged: true
    volumes:
      - /usr/local/docker/runner/config:/etc/gitlab-runner
      - /var/run/docker.sock:/var/run/docker.sock

- Create the Dockerfile for the GitLab Runner CI image

FROM gitlab/gitlab-runner:v11.0.2
MAINTAINER mrchen <365984197@qq.com>

# Switch the package sources to the Aliyun mirror
RUN echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial main restricted universe multiverse' > /etc/apt/sources.list && \
    echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial-security main restricted universe multiverse' >> /etc/apt/sources.list && \
    echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial-updates main restricted universe multiverse' >> /etc/apt/sources.list && \
    echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial-backports main restricted universe multiverse' >> /etc/apt/sources.list && \
    apt-get update -y && \
    apt-get clean

# Install Docker
RUN apt-get -y install apt-transport-https ca-certificates curl software-properties-common && \
    apt-get update && apt-get install -y gnupg2 && \
    curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | apt-key add - && \
    curl -fsSL get.docker.com -o get-docker.sh && \
    sh get-docker.sh --mirror Aliyun
COPY daemon.json /etc/docker/daemon.json

# Install Docker Compose
WORKDIR /usr/local/bin
RUN curl -L https://get.daocloud.io/docker/compose/releases/download/1.25.0/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose
RUN chmod +x docker-compose

# Install Java
RUN mkdir -p /usr/local/java
WORKDIR /usr/local/java
COPY jdk-8u251-linux-x64.tar.gz /usr/local/java
RUN tar -zxvf jdk-8u251-linux-x64.tar.gz && \
    rm -fr jdk-8u251-linux-x64.tar.gz

# Install Maven
RUN mkdir -p /usr/local/maven
WORKDIR /usr/local/maven
#RUN wget https://raw.githubusercontent.com/topsale/resources/master/maven/apache-maven-3.5.3-bin.tar.gz
COPY apache-maven-3.6.3-bin.zip /usr/local/maven
RUN apt-get install -y unzip
RUN unzip apache-maven-3.6.3-bin.zip && \
    rm -fr apache-maven-3.6.3-bin.zip
# COPY settings.xml /usr/local/maven/apache-maven-3.6.3/conf/settings.xml

# Configure environment variables
ENV JAVA_HOME /usr/local/java/jdk1.8.0_251
ENV MAVEN_HOME /usr/local/maven/apache-maven-3.6.3
ENV PATH $PATH:$JAVA_HOME/bin:$MAVEN_HOME/bin
WORKDIR /

- Register the runner

docker exec -it gitlab-runner gitlab-runner register

# Enter the GitLab URL
Please enter the gitlab-ci coordinator URL (e.g. https://gitlab.com/):
http://47.112.215.6/

# Enter the GitLab registration token
Please enter the gitlab-ci token for this runner:
1Lxq_f1NRfCfeNbE5WRh

# Enter a description for the runner
Please enter the gitlab-ci description for this runner:
(may be left empty)

# Set tags; they can be used to trigger CI only for builds with the specified tags
Please enter the gitlab-ci tags for this runner (comma separated):

# Choose the runner executor; shell is used here
Please enter the executor: virtualbox, docker+machine, parallels, shell, ssh, docker-ssh+machine, kubernetes, docker, docker-ssh:
shell

- Create a .gitlab-ci.yml file in the project

- Add a Dockerfile

FROM openjdk:8-jre
MAINTAINER mrchen <365984197@qq.com>
ENV APP_VERSION 1.0.0-SNAPSHOT

# Tool for waiting until other applications are online (dockerize, currently disabled)
#ENV DOCKERIZE_VERSION v0.6.1
#RUN wget https://github.com/jwilder/dockerize/releases/download/$DOCKERIZE_VERSION/dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz \
#    && tar -C /usr/local/bin -xzvf dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz \
#    && rm dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz

RUN mkdir /app
COPY itoken-config-$APP_VERSION.jar /app/app.jar

# Run several initialization commands (wait for a dependency, then start the app; disabled)
#ENTRYPOINT ["dockerize", "-timeout", "5m", "-wait", "tcp://192.168.75.128:8888", "java", "-Djava.security.egd=file:/dev/./urandom", "-jar", ""]
ENTRYPOINT ["java", "-Djava.security.egd=file:/dev/./urandom", "-jar", "/app/app.jar", "--spring.profiles.active=prod"]
EXPOSE 8888

- Add a docker-compose.yml file

version: '3.1'
services:
  itoken-config:
    restart: always
    image: 47.112.215.6:5000/itoken-config
    container_name: itoken-config
    ports:
      - 8888:8888

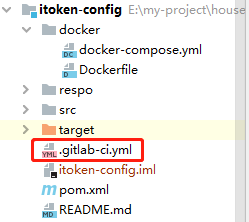

- Add the .gitlab-ci.yml file

stages:
  - build
  - push
  - run
  - clean

build:
  stage: build
  script:
    - /usr/local/maven/apache-maven-3.6.3/bin/mvn clean package
    - cp target/itoken-config-1.0.0-SNAPSHOT.jar docker/
    - cd docker
    - docker build -t 47.112.215.6:5000/itoken-config .

push:
  stage: push
  script:
    - docker push 47.112.215.6:5000/itoken-config

run:
  stage: run
  script:
    - cd docker
    - docker-compose down
    - docker-compose up -d

clean:
  stage: clean
  script:
    # Remove dangling images; unlike docker rmi $(docker images -q -f dangling=true), this does not fail when there are none
    - docker image prune -f

- Commit the files above to GitLab to trigger the pipeline

- When the runner starts several projects, change the networks so that each project uses its own:

version: '3.1'
services:
  itoken-eureka:
    restart: always
    image: 47.112.215.6:5000/itoken-eureka
    container_name: itoken-eureka
    ports:
      - 8761:8761
    networks:
      - eureka-network
networks:
  eureka-network:

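Instant Rollback in Production

- Use symbolic links created with the Linux ln command

- Roll back to an earlier version by restarting from an earlier Docker image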
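The symbolic-link approach can be sketched in Java as follows, assuming a POSIX file system (Linux, as on the Ubuntu servers above) where each release lives in its own directory and a link named current points at the active one. Deploying and rolling back both just repoint the link, which is why the switch is nearly instant. The directory layout and the SymlinkRollback names are hypothetical; the link is replaced by creating a temporary link and renaming it over the old one, which Linux does atomically.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

class SymlinkRollback {
    /**
     * Makes `current` point at `releaseDir` (deploy and rollback alike).
     * A temporary link is created first and then renamed over `current`;
     * on Linux the rename replaces the old link atomically, so `current`
     * always points at one complete release.
     */
    static void activate(Path current, Path releaseDir) throws IOException {
        Path tmp = current.resolveSibling(current.getFileName() + ".tmp");
        Files.deleteIfExists(tmp); // leftover link from an interrupted switch
        Files.createSymbolicLink(tmp, releaseDir);
        Files.move(tmp, current, StandardCopyOption.ATOMIC_MOVE);
    }

    /** Returns the release directory the link currently points at. */
    static Path activeRelease(Path current) throws IOException {
        return Files.readSymbolicLink(current);
    }
}
```

Rolling back is simply activate(current, previousRelease), so the previous release directory must still be on disk.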

Developing the Admin Service as a Microservice

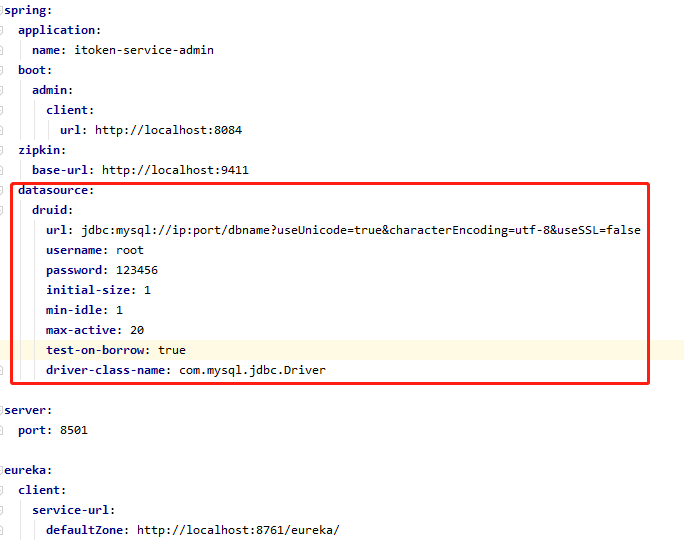

- Add the Druid connection pool and the MySQL configuration

- Add the pom.xml dependencies

<properties>
    <spring-boot-alibaba-druid.version>1.1.10</spring-boot-alibaba-druid.version>
    <mysql.version>5.1.46</mysql.version>
</properties>

<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid-spring-boot-starter</artifactId>
    <version>${spring-boot-alibaba-druid.version}</version>
</dependency>
<dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <version>${mysql.version}</version>
    <scope>runtime</scope>
</dependency>

- Add the datasource configuration to application.yml

spring:
  datasource:
    druid:
      url: jdbc:mysql://ip:port/dbname?useUnicode=true&characterEncoding=utf-8&useSSL=false
      username: root
      password: 123456
      initial-size: 1
      min-idle: 1
      max-active: 20
      test-on-borrow: true
      driver-class-name: com.mysql.jdbc.Driver

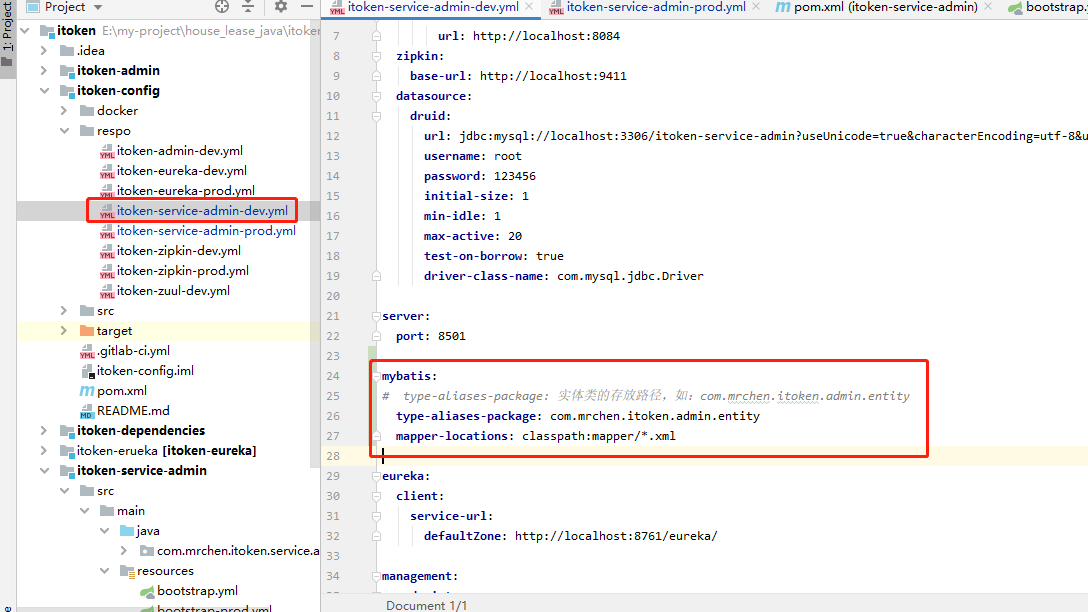

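- Configure tk.mybatis to simplify MyBatis development

- Add the dependency

<dependency>
    <groupId>tk.mybatis</groupId>
    <artifactId>mapper-spring-boot-starter</artifactId>
    <version>2.0.2</version>
</dependency>

- Add the MyBatis configuration

- Add the MyMapper interface

- Add PageHelper for pagination

- Configure the code-generation plugin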
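PageHelper turns a page number and page size into a row range on the query. The arithmetic involved is sketched below with hypothetical helper names; this is not PageHelper's API, only the offset and page-count calculation behind a MySQL-style LIMIT offset, count clause.

```java
class PageMath {
    /** Zero-based row offset of a 1-based page, e.g. page 3 of size 10 starts at row 20. */
    static long offset(int pageNum, int pageSize) {
        if (pageNum < 1 || pageSize < 1) {
            throw new IllegalArgumentException("pageNum and pageSize must be at least 1");
        }
        return (long) (pageNum - 1) * pageSize;
    }

    /** Number of pages needed for totalRows rows (ceiling division). */
    static int pages(long totalRows, int pageSize) {
        if (totalRows < 0 || pageSize < 1) {
            throw new IllegalArgumentException("totalRows must be >= 0 and pageSize >= 1");
        }
        return (int) ((totalRows + pageSize - 1) / pageSize);
    }

    /** MySQL-style clause: LIMIT <offset>, <row count>. */
    static String limitClause(int pageNum, int pageSize) {
        return "LIMIT " + offset(pageNum, pageSize) + ", " + pageSize;
    }
}
```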

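Agile Development with Test-Driven Development (TDD)

- Write the test cases first (login and registration)

- Write the implementation to satisfy the test methods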
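A minimal illustration of the TDD loop for login and registration: the checks in UserServiceTest are written first, then UserService is given just enough code to pass them. Plain checks replace JUnit so the block runs on its own, and UserService with its in-memory map is a hypothetical stand-in for the real database-backed service.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory user service, written to make the tests below pass. */
class UserService {
    // Real code would store a password hash in the database, not the plain password
    private final Map<String, String> passwordsByLoginCode = new HashMap<>();

    /** Registers a user; returns false for empty input or an existing login code. */
    boolean register(String loginCode, String password) {
        if (loginCode == null || loginCode.isEmpty() || password == null || password.isEmpty()) {
            return false;
        }
        return passwordsByLoginCode.putIfAbsent(loginCode, password) == null;
    }

    /** Returns true only if the user exists and the password matches. */
    boolean login(String loginCode, String password) {
        String stored = passwordsByLoginCode.get(loginCode);
        return stored != null && stored.equals(password);
    }
}

class UserServiceTest {
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    // Step 1 of TDD: these checks existed before UserService was implemented
    public static void main(String[] args) {
        UserService service = new UserService();
        check(service.register("admin", "secret"), "first registration succeeds");
        check(!service.register("admin", "other"), "duplicate login code is rejected");
        check(!service.register("", "secret"), "empty login code is rejected");
        check(service.login("admin", "secret"), "correct password logs in");
        check(!service.login("admin", "wrong"), "wrong password is rejected");
        check(!service.login("nobody", "secret"), "unknown user is rejected");
        System.out.println("all tests passed");
    }
}
```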

Deploying Static Files (Nginx as a CDN Content Server)

- Port-based virtual host configuration

- Add the docker-compose.yml file

version: '3.1'
services:
  nginx:
    restart: always
    image: nginx
    container_name: nginx
    ports:
      - 81:80
      - 8080:8080
    volumes:
      - ./conf/nginx.conf:/etc/nginx/nginx.conf
      - ./wwwroot:/usr/share/nginx/wwwroot

- Add the nginx.conf configuration file

worker_processes 1;
events {
    worker_connections 1024;
}
http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;

    # Virtual host 192.168.75.145 on port 80 (disabled)
    # server {
    #     # IP and port to listen on: 192.168.75.145:80
    #     listen 80;
    #     # Virtual host name; an IP address is used here
    #     server_name 192.168.75.145;
    #     # Every request starts with /, so every request matches this location
    #     location / {
    #         # root sets the virtual host's directory, i.e. where the web pages are stored
    #         # e.g. http://ip/index.html maps to /usr/local/docker/nginx/wwwroot/html80/index.html
    #         # e.g. http://ip/item/index.html maps to /usr/local/docker/nginx/wwwroot/html80/item/index.html
    #         root /usr/share/nginx/wwwroot/html80;
    #         # Welcome pages, searched from left to right
    #         index index.html index.htm;
    #     }
    # }

    # Virtual host 192.168.75.145 on port 8080
    server {
        listen 8080;
        server_name 192.168.75.145;
        location / {
            root /usr/share/nginx/wwwroot/html8080;
            index index.html index.htm;
        }
    }
}

- Nginx reverse proxy configuration

server {
    listen 80;
    server_name itoken.mrchen.com;
    location / {
        proxy_pass http://192.168.75.128:9090;
        index index.html index.htm;
    }
}

- Nginx load balancing

upstream myapp1 {
    server 192.168.94.132:9090 weight=10;
    server 192.168.94.132:9091 weight=10;
}
server {
    listen 80;
    server_name 192.168.94.132;
    location / {
        proxy_pass http://myapp1;
        index index.jsp index.html index.htm;
    }
}
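With the upstream above, Nginx spreads requests across the two servers according to their weights (equal here, so they alternate). Below is a minimal Java sketch of the smooth weighted round-robin algorithm that Nginx's default round-robin balancer is based on. It is a simplified model (no health checks, max_fails or backup servers) and the class name is hypothetical.

```java
class SmoothWeightedRoundRobin {
    private final String[] servers;
    private final int[] weights;
    private final int[] currentWeights; // running score per server, starts at 0
    private final int totalWeight;

    SmoothWeightedRoundRobin(String[] servers, int[] weights) {
        if (servers.length == 0 || servers.length != weights.length) {
            throw new IllegalArgumentException("need one weight per server");
        }
        int sum = 0;
        for (int w : weights) {
            if (w <= 0) {
                throw new IllegalArgumentException("weights must be positive");
            }
            sum += w;
        }
        this.servers = servers.clone();
        this.weights = weights.clone();
        this.currentWeights = new int[servers.length];
        this.totalWeight = sum;
    }

    /**
     * Every server's score grows by its weight; the highest score wins (the first one on ties)
     * and is then lowered by the total weight. Heavier servers win more often, but their picks
     * are interleaved with the others instead of sent in bursts. Not thread-safe.
     */
    String next() {
        int best = 0;
        for (int i = 0; i < servers.length; i++) {
            currentWeights[i] += weights[i];
            if (currentWeights[i] > currentWeights[best]) {
                best = i;
            }
        }
        currentWeights[best] -= totalWeight;
        return servers[best];
    }
}
```

For example, servers a, b and c with weights 5, 1 and 1 are picked in the order a a b a c a a, rather than five a's in a row.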

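- Use Nginx reverse proxying and load balancing to build a pseudo-CDN server

Caching Data with Redis

- Caching (query results, short links, news content, product content, and so on)

- Deploy Redis in high-availability mode (Redis HA)

## Technologies for Redis HA

1. keepalived

2. ZooKeeper

3. Sentinel (officially recommended)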
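Caching query results like these usually follows the cache-aside pattern: read from the cache first, fall back to the database on a miss and store the result with an expiry, and invalidate the entry when the data changes. The sketch below uses a ConcurrentHashMap as a stand-in for Redis and a loader function as a stand-in for the database query; both, and the class name CacheAside, are assumptions for illustration.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;
import java.util.function.LongSupplier;

class CacheAside<K, V> {
    private static final class Entry<T> {
        final T value;
        final long expiresAtMillis;
        Entry(T value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<K, Entry<V>> store = new ConcurrentHashMap<>(); // stand-in for Redis
    private final Function<K, V> loader; // stand-in for the database query
    private final long ttlMillis;
    private final LongSupplier clock;    // injectable so expiry can be tested

    CacheAside(Function<K, V> loader, long ttlMillis, LongSupplier clock) {
        this.loader = loader;
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    /** A hit returns the cached value; a miss or an expired entry reloads from the "database". */
    V get(K key) {
        long now = clock.getAsLong();
        Entry<V> cached = store.get(key);
        if (cached != null && cached.expiresAtMillis > now) {
            return cached.value;
        }
        V value = loader.apply(key);
        if (value != null) {
            store.put(key, new Entry<>(value, now + ttlMillis));
        }
        return value;
    }

    /** Call after the underlying row is updated or deleted, so the next read reloads it. */
    void invalidate(K key) {
        store.remove(key);
    }
}
```

Concurrent misses on the same key may each hit the database once; that is usually acceptable for read-mostly data such as news or product content.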

- Set up the Redis cluster (one master, two replicas)

- Edit the docker-compose.yml file

version: '3.1'
services:
  master:
    image: redis
    container_name: redis-master
    ports:
      - 6379:6379
  slave1:
    image: redis
    container_name: redis-slave-1
    ports:
      - 6380:6379
    command: redis-server --slaveof redis-master 6379
  slave2:
    image: redis
    container_name: redis-slave-2
    ports:
      - 6381:6379
    command: redis-server --slaveof redis-master 6379

- Set up the Sentinel high-availability monitoring service

- Edit the docker-compose.yml configuration

version: '3.1'
services:
  sentinel1:
    image: redis
    container_name: redis-sentinel-1
    ports:
      - 26379:26379
    command: redis-sentinel /usr/local/etc/redis/sentinel.conf
    volumes:
      - ./sentinel1.conf:/usr/local/etc/redis/sentinel.conf
  sentinel2:
    image: redis
    container_name: redis-sentinel-2
    ports:
      - 26380:26379
    command: redis-sentinel /usr/local/etc/redis/sentinel.conf
    volumes:
      - ./sentinel2.conf:/usr/local/etc/redis/sentinel.conf
  sentinel3:
    image: redis
    container_name: redis-sentinel-3
    ports:
      - 26381:26379
    command: redis-sentinel /usr/local/etc/redis/sentinel.conf
    volumes:
      - ./sentinel3.conf:/usr/local/etc/redis/sentinel.conf

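* Add the sentinel.conf configuration (one copy per Sentinel: sentinel1.conf, sentinel2.conf and sentinel3.conf)

port 26379
dir /tmp
# Custom master group name: 127.0.0.1 is the redis-master IP, 6379 is the redis-master port,
# and 2 is the quorum, the minimum number of Sentinels that must agree (with 3 Sentinels, 2 works).
# Inside a container 127.0.0.1 refers to that container itself, so use an address of redis-master the Sentinels can reach.
sentinel monitor mymaster 127.0.0.1 6379 2
sentinel down-after-milliseconds mymaster 30000
sentinel parallel-syncs mymaster 1
sentinel failover-timeout mymaster 180000
sentinel deny-scripts-reconfig yes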
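The quorum of 2 only decides when the master counts as objectively down. According to the Redis Sentinel documentation, actually starting a failover also requires one Sentinel to be elected leader by a majority of the Sentinels (and by at least quorum of them when the quorum is larger). The simplified model below, with hypothetical names and no Redis code, captures both conditions.

```java
class SentinelQuorum {
    /** Majority of all configured Sentinels, e.g. 2 of 3 or 3 of 5. */
    static int majority(int totalSentinels) {
        return totalSentinels / 2 + 1;
    }

    /** Objectively down: enough Sentinels agree that the master is unreachable. */
    static boolean objectivelyDown(int sentinelsReportingDown, int quorum) {
        return sentinelsReportingDown >= quorum;
    }

    /**
     * A failover can start only if the master is objectively down and a leader
     * can collect votes from max(majority, quorum) reachable Sentinels.
     */
    static boolean canFailover(int sentinelsReportingDown, int reachableSentinels,
                               int totalSentinels, int quorum) {
        int votesNeeded = Math.max(majority(totalSentinels), quorum);
        return objectivelyDown(sentinelsReportingDown, quorum) && reachableSentinels >= votesNeeded;
    }
}
```

With the three Sentinels configured above (quorum 2), failover still works after losing one Sentinel, but not after losing two.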

- Connect to the Redis service with Lettuce

Building the Redis Service Provider (Cache Service)

- Add the Redis dependencies to pom.xml (Lettuce's connection pool needs commons-pool2)

<dependency>
    <groupId>org.apache.commons</groupId>
    <artifactId>commons-pool2</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>

- Add the Redis configuration to application.yml

spring:
  redis:
    lettuce:
      pool:
        max-active: 8
        max-idle: 8
        max-wait: -1ms
        min-idle: 0
    sentinel:
      master: mymaster
      nodes: 47.112.215.6:26379,47.112.215.6:26380,47.112.215.6:26381

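Single Sign-On with Redis (the Same-Origin Policy Must Be Handled)

## Sharing cookies

* First, all applications must use a unified domain

* Second, all systems must use the same technology

* Third, cookies themselves are not secure

## Recording login information in Redis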
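One way to implement the Redis approach: after a successful login, the login service generates a random token, stores token to user in Redis with an expiry, and hands the token to the browser (for example as a cookie); every other system resolves the token through the same Redis. The sketch below replaces Redis with a ConcurrentHashMap and an explicit clock so it runs on its own; SsoTokenStore and its methods are hypothetical names.

```java
import java.security.SecureRandom;
import java.util.Base64;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.LongSupplier;

class SsoTokenStore {
    /** What would be stored in Redis under the token key. */
    private static final class Session {
        final String loginCode;
        final long expiresAtMillis;
        Session(String loginCode, long expiresAtMillis) {
            this.loginCode = loginCode;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    // Stand-in for Redis, where this would be a key per token with an expiry
    private final Map<String, Session> redis = new ConcurrentHashMap<>();
    private final SecureRandom random = new SecureRandom();
    private final long ttlMillis;
    private final LongSupplier clock;

    SsoTokenStore(long ttlMillis, LongSupplier clock) {
        this.ttlMillis = ttlMillis;
        this.clock = clock;
    }

    /** Called by the login service after the password check: issues a random token. */
    String login(String loginCode) {
        byte[] bytes = new byte[24];
        random.nextBytes(bytes);
        String token = Base64.getUrlEncoder().withoutPadding().encodeToString(bytes);
        redis.put(token, new Session(loginCode, clock.getAsLong() + ttlMillis));
        return token; // returned to the browser, e.g. as a cookie
    }

    /** Called by any system in the group: which user, if any, owns this token? */
    Optional<String> currentUser(String token) {
        Session session = token == null ? null : redis.get(token);
        if (session == null) {
            return Optional.empty();
        }
        if (session.expiresAtMillis <= clock.getAsLong()) {
            redis.remove(token); // Redis would expire the key by itself
            return Optional.empty();
        }
        return Optional.of(session.loginCode);
    }

    /** Logging out in one system removes the shared login state for all of them. */
    void logout(String token) {
        if (token != null) {
            redis.remove(token);
        }
    }
}
```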

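Solving Cross-Origin Problems (requests to content served by Nginx are cross-origin)

- Use CORS (Cross-Origin Resource Sharing)

* Internet Explorer must be version 10 or later

The server only needs to implement CORS, i.e. set the Access-Control-Allow-Origin response header.

- Use JSONP

- Use Nginx (the configuration below adds the CORS headers in Nginx)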

worker_processes 1;
events {
    worker_connections 1024;
}
http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;

    # Virtual host 47.112.215.6
    server {
        listen 80;
        server_name 47.112.215.6;
        location / {
            add_header Access-Control-Allow-Origin *;
            add_header Access-Control-Allow-Headers X-Requested-With;
            add_header Access-Control-Allow-Methods GET,POST,OPTIONS;
            root /usr/share/nginx;
            index index.html index.htm;
        }
    }
}
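The Nginx configuration above allows every origin with *. When the headers are set in application code instead (the CORS option), a common refinement is to echo the request's Origin only if it is on an allowlist. The sketch below shows that decision, plus how a JSONP response wraps JSON in the callback named by the page, with the callback name validated so it cannot inject script. The method names and the allowlist are hypothetical, and wiring them into Spring MVC or a servlet filter is left out; note that JSONP only works for GET requests.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.regex.Pattern;

class CrossOriginHelpers {
    /** CORS: response headers for a request from `origin`, or none if the origin is not allowed. */
    static Map<String, String> corsHeaders(String origin, Set<String> allowedOrigins) {
        Map<String, String> headers = new LinkedHashMap<>();
        if (origin != null && allowedOrigins.contains(origin)) {
            headers.put("Access-Control-Allow-Origin", origin);
            headers.put("Access-Control-Allow-Methods", "GET,POST,OPTIONS");
            headers.put("Access-Control-Allow-Headers", "X-Requested-With");
            headers.put("Vary", "Origin"); // the response differs per Origin, so caches must key on it
        }
        return headers;
    }

    // A JavaScript identifier, optionally dotted (e.g. app.handle); anything else is rejected
    private static final Pattern CALLBACK = Pattern.compile("[A-Za-z_$][A-Za-z0-9_$.]*");

    /** JSONP: wraps a JSON payload in a call to the callback named by the page. */
    static String jsonp(String callback, String json) {
        if (callback == null || !CALLBACK.matcher(callback).matches()) {
            throw new IllegalArgumentException("invalid callback name");
        }
        return callback + "(" + json + ");";
    }
}
```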

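Second-Level Cache with Spring Boot, MyBatis and Redis (for data that rarely changes, to reduce database load)

- MyBatis caching overview

## First-level cache

MyBatis keeps a simple cache in the SqlSession object, which represents a session, and stores the result of each query there. If an identical query was already run in the same session, the next one returns the data straight from this in-memory cache.

## Second-level cache (third-party cache): a cache shared across sessions

- Configure the MyBatis second-level cache

- Enable the MyBatis second-level cache

mybatis:
  configuration:
    cache-enabled: true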

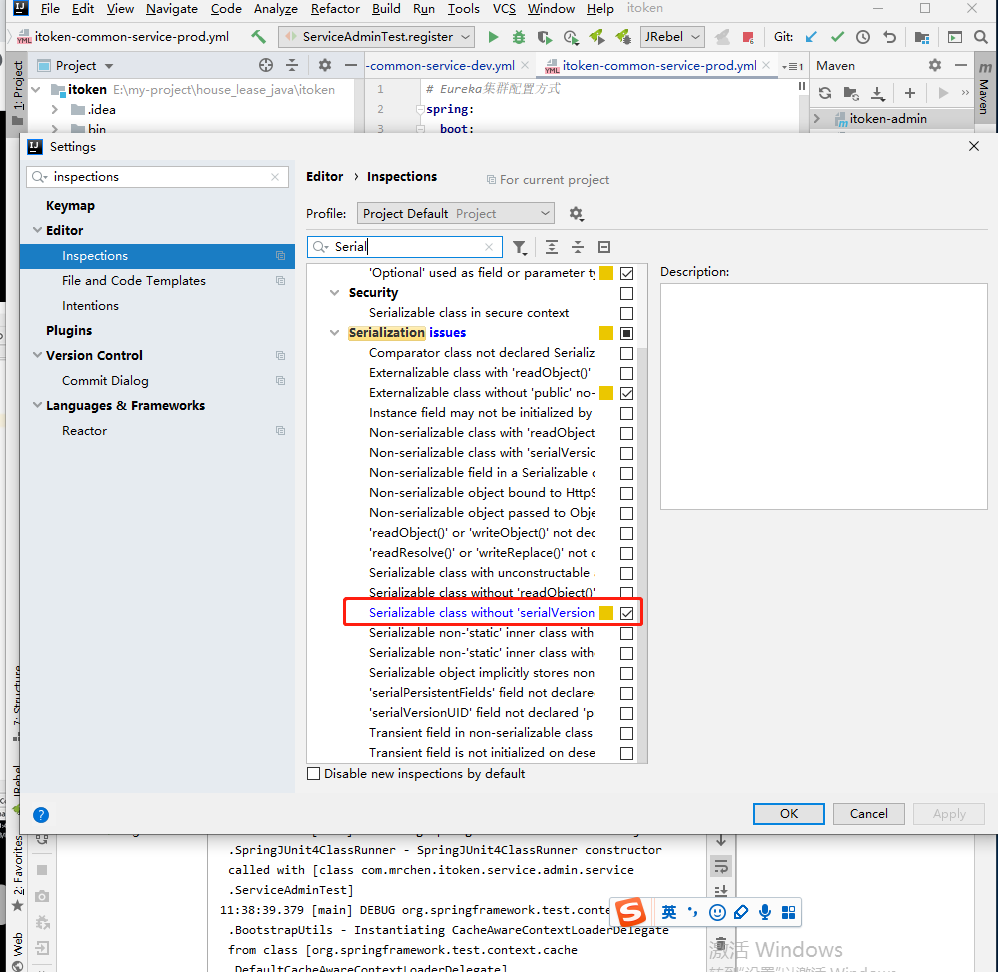

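- Enable the serialVersionUID inspection hint in IDEA

- Make the entity classes implement Serializable and declare a serialVersionUID

private static final long serialVersionUID = 8461546412131L;

- Create the helper class

Implement Spring's ApplicationContextAware interface so that beans can be obtained manually:

ApplicationContextHolder

- Implement the MyBatis Cache interface to use Redis as a custom cache

- Add the cache annotation to the Mapper interface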

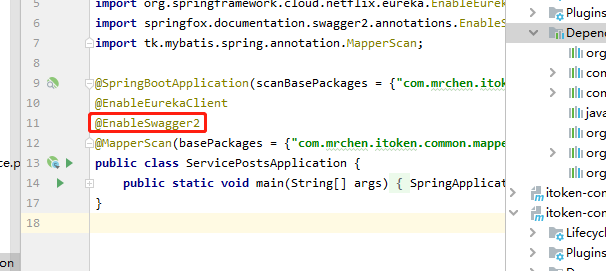
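The pieces above fit together roughly like this: the second-level cache is scoped to a mapper namespace, cached query results are stored in Redis in serialized form (which is why the entities must implement Serializable), and an insert, update or delete in that namespace clears its cached results. The sketch below models this with a map of byte arrays standing in for Redis. It does not implement MyBatis's actual Cache interface, and the class, entity and key names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class NamespaceCacheSketch {
    /** Example entity: implements Serializable with an explicit serialVersionUID. */
    static class User implements Serializable {
        private static final long serialVersionUID = 8461546412131L;
        final String loginCode;
        User(String loginCode) { this.loginCode = loginCode; }
    }

    private final String namespace; // e.g. the mapper interface name
    private final Map<String, byte[]> redis = new ConcurrentHashMap<>(); // stand-in for this namespace's Redis keys

    NamespaceCacheSketch(String namespace) { this.namespace = namespace; }

    void put(Object queryKey, Serializable value) {
        redis.put(namespace + ":" + queryKey, serialize(value));
    }

    /** Returns a deserialized copy of the cached result, or null on a miss. */
    Object get(Object queryKey) {
        byte[] bytes = redis.get(namespace + ":" + queryKey);
        return bytes == null ? null : deserialize(bytes);
    }

    /** Called after an insert/update/delete in this namespace: cached results may be stale. */
    void clear() { redis.clear(); }

    static byte[] serialize(Serializable value) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    static Object deserialize(byte[] data) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

An entity that does not implement Serializable would make serialize throw (as a NotSerializableException wrapped in UncheckedIOException), which is the failure the serialVersionUID step above avoids.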

Configuring the Swagger2 API Documentation Engine in Spring Boot

- Add the Maven dependencies

<dependency>
    <groupId>io.springfox</groupId>
    <artifactId>springfox-swagger2</artifactId>
    <version>2.8.0</version>
</dependency>
<dependency>
    <groupId>io.springfox</groupId>
    <artifactId>springfox-swagger-ui</artifactId>
    <version>2.8.0</version>
</dependency>

- Configure Swagger2 (Java configuration)

- Add the annotation to the application class (for example @EnableSwagger2)

Using FastDFS

- Create the docker-compose.yml file

version: '3.1'
services:
  fastdfs:
    build: environment
    restart: always
    container_name: fastdfs
    volumes:
      - ./storage:/fastdfs/storage
    network_mode: host

- Create the Dockerfile

FROM ubuntu:xenial
MAINTAINER mrchen@qq.com

# Update the package sources
WORKDIR /etc/apt
RUN echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial main restricted universe multiverse' > /etc/apt/sources.list
RUN echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial-security main restricted universe multiverse' >> /etc/apt/sources.list
RUN echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial-updates main restricted universe multiverse' >> /etc/apt/sources.list
RUN echo 'deb http://mirrors.aliyun.com/ubuntu/ xenial-backports main restricted universe multiverse' >> /etc/apt/sources.list
RUN apt-get update

# Install dependencies
RUN apt-get install make gcc libpcre3-dev zlib1g-dev --assume-yes

# Copy the source packages
ADD fastdfs.tar.gz /usr/local/src
ADD fastdfs-nginx-module.tar.gz /usr/local/src
ADD libfastcommon.tar.gz /usr/local/src
ADD nginx-1.15.4.tar.gz /usr/local/src

# Install libfastcommon
WORKDIR /usr/local/src/libfastcommon
RUN ./make.sh && ./make.sh install

# Install FastDFS
WORKDIR /usr/local/src/fastdfs
RUN ./make.sh && ./make.sh install

# Configure the FastDFS tracker
ADD tracker.conf /etc/fdfs
RUN mkdir -p /fastdfs/tracker

# Configure FastDFS storage
ADD storage.conf /etc/fdfs
RUN mkdir -p /fastdfs/storage

# Configure the FastDFS client
ADD client.conf /etc/fdfs

# Configure fastdfs-nginx-module
ADD config /usr/local/src/fastdfs-nginx-module/src

# Integrate FastDFS with Nginx
WORKDIR /usr/local/src/nginx-1.15.4
RUN ./configure --add-module=/usr/local/src/fastdfs-nginx-module/src
RUN make && make install
ADD mod_fastdfs.conf /etc/fdfs
WORKDIR /usr/local/src/fastdfs/conf
RUN cp http.conf mime.types /etc/fdfs/

# Configure Nginx
ADD nginx.conf /usr/local/nginx/conf
COPY entrypoint.sh /usr/local/bin/
ENTRYPOINT ["/usr/local/bin/entrypoint.sh"]
WORKDIR /
EXPOSE 8888
CMD ["/bin/bash"]

Setting Up the FastDFS Client

- Install the FastDFS client (add the fastdfs-client-java dependency)

<dependency>
    <groupId>org.csource</groupId>
    <artifactId>fastdfs-client-java</artifactId>
    <version>1.29-SNAPSHOT</version>
</dependency>

- Add the configuration to the cloud configuration center

fastdfs.base.url: http://192.168.94.132:8887/
storage:
  type: fastdfs
  fastdfs:
    tracker_server: 192.168.94.132:22122
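With fastdfs.base.url configured, the public address of an uploaded file is typically the base URL followed by the group name and the remote file name that the upload returns (for example group1 and a path starting with M00/), served by the Nginx module in the FastDFS container. The helper below is a hypothetical sketch of that string joining only; the example file name in the usage is made up.

```java
class FastDfsUrls {
    /**
     * Joins the configured base URL (fastdfs.base.url) with the group name and the
     * remote file name of an uploaded file, avoiding duplicate or missing slashes.
     */
    static String fileUrl(String baseUrl, String groupName, String remoteFileName) {
        String base = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
        String path = remoteFileName.startsWith("/") ? remoteFileName.substring(1) : remoteFileName;
        return base + "/" + groupName + "/" + path;
    }
}
```

For example, fileUrl("http://192.168.94.132:8887/", "group1", "M00/00/00/abc.jpg") yields http://192.168.94.132:8887/group1/M00/00/00/abc.jpg.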

Using RabbitMQ

- Installing RabbitMQ

- Write the docker-compose.yml file

version: '3.1'
services:
  rabbitmq:
    restart: always
    image: rabbitmq:management
    container_name: rabbitmq
    ports:
      - 5672:5672
      - 15672:15672
    environment:
      TZ: Asia/Shanghai
      RABBITMQ_DEFAULT_USER: rabbit
      RABBITMQ_DEFAULT_PASS: 123456
    volumes:
      - ./data:/var/lib/rabbitmq

- Using RabbitMQ