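This is the follow-up to the previous article on device control. It also uses Flutter's external Texture and does software decoding on the Flutter side.

Related earlier articles

Reference articles

Scrcpy server

Let's start from the source again. The scrcpy server has a main function, which is what allows app_process to launch it directly, so we go straight to main.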

public static void main(String... args) throws Exception {

System.out.println("service starting...");

Thread.setDefaultUncaughtExceptionHandler(new Thread.UncaughtExceptionHandler() {

@Override

public void uncaughtException(Thread t, Throwable e) {

Ln.e("Exception on thread " + t, e);

suggestFix(e);

}

});

// unlinkSelf();

Options options = createOptions(args);

scrcpy(options);

}

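The source has no comments (a headache), so I added a few print calls for debugging. unlinkSelf is the method the server uses to delete itself; I commented it out to make debugging easier. main itself is simple. Options is scrcpy's own parameter object: it holds the arguments passed on the command line at startup.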

CLASSPATH=/data/local/tmp/scrcpy-server.jar app_process ./ com.genymobile.scrcpy.Server 1.12.1 0 8000000 0 true - true true

In other words, the arguments are:

1.12.1 0 8000000 0 true - true true
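To make the positional arguments easier to read, here is a minimal C++ sketch that maps them onto named fields. `ServerOptions` and `parseServerArgs` are hypothetical names, and the field order is my reading of the 1.12.1 command line together with the getters used in the server code, so treat it as an assumption and check it against the server version you actually run.

```cpp
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical client-side mirror of the server's Options object.
// The field order is an assumption based on the 1.12.1 command line.
struct ServerOptions {
    std::string clientVersion;   // "1.12.1"
    int maxSize = 0;             // 0 in the example command
    int bitRate = 0;             // bits per second, 8000000 in the example
    int maxFps = 0;              // 0 in the example command
    bool tunnelForward = false;  // true: the server listens and the client connects
    std::string crop;            // "-" in the example command
    bool sendFrameMeta = false;
    bool control = false;        // true: the control socket is used
};

// Only the literal string "true" counts as true in this sketch.
static bool parseBool(const std::string &value) {
    return value == "true";
}

ServerOptions parseServerArgs(const std::vector<std::string> &args) {
    if (args.size() != 8) {
        throw std::invalid_argument("expected 8 positional arguments");
    }
    ServerOptions options;
    options.clientVersion = args[0];
    options.maxSize = std::stoi(args[1]);
    options.bitRate = std::stoi(args[2]);
    options.maxFps = std::stoi(args[3]);
    options.tunnelForward = parseBool(args[4]);
    options.crop = args[5];
    options.sendFrameMeta = parseBool(args[6]);
    options.control = parseBool(args[7]);
    return options;
}
```

With the example command, `tunnelForward` and `control` both come out as true, which is why the server below waits for two incoming connections.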

createOptions returns the Options object built from these arguments. main then calls scrcpy and passes the parsed object in. The scrcpy function:

private static void scrcpy(Options options) throws IOException {

final Device device = new Device(options);

boolean tunnelForward = options.isTunnelForward();

try (DesktopConnection connection = DesktopConnection.open(device, tunnelForward)) {

ScreenEncoder screenEncoder = new ScreenEncoder(options.getSendFrameMeta(), options.getBitRate(), options.getMaxFps());

if (options.getControl()) {

Controller controller = new Controller(device, connection);

// asynchronous

//

startController(controller);

startDeviceMessageSender(controller.getSender());

}

try {

// synchronous

screenEncoder.streamScreen(device, connection.getVideoFd());

} catch (IOException e) {

// this is expected on close

Ln.d("Screen streaming stopped");

}

}

}

The function called by the try statement above:

public static DesktopConnection open(Device device, boolean tunnelForward) throws IOException {

LocalSocket videoSocket;

LocalSocket controlSocket;

if (tunnelForward) {

LocalServerSocket localServerSocket = new LocalServerSocket(SOCKET_NAME);

try {

System.out.println("Waiting for video socket connection...");

videoSocket = localServerSocket.accept();

System.out.println("video socket is connected.");

// send one byte so the client may read() to detect a connection error

videoSocket.getOutputStream().write(0);

try {

System.out.println("Waiting for input socket connection...");

controlSocket = localServerSocket.accept();

System.out.println("input socket is connected.");

} catch (IOException | RuntimeException e) {

videoSocket.close();

throw e;

}

} finally {

localServerSocket.close();

}

} else {

videoSocket = connect(SOCKET_NAME);

try {

controlSocket = connect(SOCKET_NAME);

} catch (IOException | RuntimeException e) {

videoSocket.close();

throw e;

}

}

DesktopConnection connection = new DesktopConnection(videoSocket, controlSocket);

Size videoSize = device.getScreenInfo().getVideoSize();

connection.send(Device.getDeviceName(), videoSize.getWidth(), videoSize.getHeight());

return connection;

}

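open creates the two sockets and blocks until both are connected. It then sends the device name, width, and height, which the client needs in order to parse the mirrored video stream. The function that sends the device name and size: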

private void send(String deviceName, int width, int height) throws IOException {

byte[] buffer = new byte[DEVICE_NAME_FIELD_LENGTH + 4];

byte[] deviceNameBytes = deviceName.getBytes(StandardCharsets.UTF_8);

int len = StringUtils.getUtf8TruncationIndex(deviceNameBytes, DEVICE_NAME_FIELD_LENGTH - 1);

System.arraycopy(deviceNameBytes, 0, buffer, 0, len);

// byte[] are always 0-initialized in java, no need to set '\0' explicitly

buffer[DEVICE_NAME_FIELD_LENGTH] = (byte) (width >> 8);

buffer[DEVICE_NAME_FIELD_LENGTH + 1] = (byte) width;

buffer[DEVICE_NAME_FIELD_LENGTH + 2] = (byte) (height >> 8);

buffer[DEVICE_NAME_FIELD_LENGTH + 3] = (byte) height;

IO.writeFully(videoFd, buffer, 0, buffer.length);

}

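DEVICE_NAME_FIELD_LENGTH is the constant 64. So once the video socket is connected, the server first sends a single 0 byte (apparently so the client can detect that the connection succeeded, a bit like a handshake), then sends 68 bytes: 64 bytes for the device name and 4 bytes for the width and height. Each dimension can exceed 255, so it is split with a shift into two bytes, high byte first, and the client has to reassemble it the same way.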
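The layout described above can be sketched as a small parser. This is only an illustration under the assumptions stated in the text (64-byte NUL-padded name, then width and height as two big-endian bytes each); `DeviceInfo` and `parseDeviceInfo` are hypothetical names, not part of scrcpy.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Mirrors DEVICE_NAME_FIELD_LENGTH on the server side.
constexpr std::size_t kDeviceNameFieldLength = 64;
// 64 bytes of name + 2 bytes of width + 2 bytes of height.
constexpr std::size_t kDeviceInfoLength = kDeviceNameFieldLength + 4;

struct DeviceInfo {
    std::string name;
    int width = 0;
    int height = 0;
};

// buf must point at kDeviceInfoLength bytes that have already been received.
DeviceInfo parseDeviceInfo(const uint8_t *buf) {
    DeviceInfo info;
    // The name field is NUL-padded: stop at the first NUL or at the field end.
    std::size_t len = 0;
    while (len < kDeviceNameFieldLength && buf[len] != 0) {
        ++len;
    }
    info.name.assign(reinterpret_cast<const char *>(buf), len);
    // High byte first, matching (width >> 8) followed by (byte) width.
    info.width = (buf[kDeviceNameFieldLength] << 8) | buf[kDeviceNameFieldLength + 1];
    info.height = (buf[kDeviceNameFieldLength + 2] << 8) | buf[kDeviceNameFieldLength + 3];
    return info;
}
```

Bounding the name by the field length means a missing terminator cannot make the parser read past the 64 bytes.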

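Flutter client

The rough idea: the first socket carries the video output and the second carries device control. Flutter cannot decode video directly, which is why the previous article mattered. So the plan is:

- Flutter --> Plugin --> native Android code that connects to the first socket and decodes it with ffmpeg

- Dart connects to the second socket to control the device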

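In C++

So we connect to the first socket in native code and start decoding the server's video stream right away.

A custom C++ socket class (a wheel found online, slightly modified)

SocketConnection.cpp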

//

// Created by Cry on 2018-12-20.

//

#include "SocketConnection.h"

#include <string.h>

#include <unistd.h>

bool SocketConnection::connect_server() {

// Create the socket; socket() returns -1 on failure

client_conn = socket(PF_INET, SOCK_STREAM, 0);

if (client_conn < 0) {

perror("can not create socket!!");

return false;

}

struct sockaddr_in in_addr;

memset(&in_addr, 0, sizeof(sockaddr_in));

in_addr.sin_port = htons(5005);

in_addr.sin_family = AF_INET;

in_addr.sin_addr.s_addr = inet_addr("127.0.0.1");

int ret = connect(client_conn, (struct sockaddr *) &in_addr, sizeof(struct sockaddr));

if (ret < 0) {

perror("socket connect error!!");

return false;

}

return true;

}

void SocketConnection::close_client() {

if (client_conn >= 0) {

shutdown(client_conn, SHUT_RDWR);

close(client_conn);

// 0 is a valid descriptor, so use -1 to mark the socket as closed

client_conn = -1;

}

}

int SocketConnection::send_to_(uint8_t *buf, int len) {

if (client_conn < 0) {

return 0;

}

return send(client_conn, buf, len, 0);

}

int SocketConnection::recv_from_(uint8_t *buf, int len) {

if (client_conn < 0) {

return 0;

}

// Difference between recv and read: https://blog.csdn.net/superbfly/article/details/72782264

return recv(client_conn, buf, len, 0);

}

Header file

//

// Created by Cry on 2018-12-20.

//

#ifndef ASREMOTE_SOCKETCONNECTION_H

#define ASREMOTE_SOCKETCONNECTION_H

#include <sys/socket.h>

#include <arpa/inet.h>

#include <cstdint>

#include <cstdio>

/**

* All methods here are blocking

*/

class SocketConnection {

public:

// -1 means "not connected"

int client_conn = -1;

/**

* Connect the socket

*/

bool connect_server();

/**

* Close the socket

*/

void close_client();

/**

* Send over the socket

*/

int send_to_(uint8_t *buf, int len);

/**

* Receive from the socket

*/

int recv_from_(uint8_t *buf, int len);

};

#endif //ASREMOTE_SOCKETCONNECTION_H

This class makes it easier to connect to the socket from C++.

The function that connects and decodes:

SocketConnection *socketConnection;

LOGD("Connecting");

socketConnection = new SocketConnection();

if (!socketConnection->connect_server()) {

return;

}

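LOGD("Connected");

LOGD ends up calling Java's Log.d.

After connecting, the single 0 byte that the server sent earlier has to be read off first:

uint8_t zeroChar[1];

// This receive consumes the zero byte sent by the server

socketConnection->recv_from_(reinterpret_cast<uint8_t *>(zeroChar), 1);

Then receive the device info: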

uint8_t deviceInfo[68];

socketConnection->recv_from_(reinterpret_cast<uint8_t *>(deviceInfo), 68);

LOGD("Device name===========>%s", deviceInfo);

int width=deviceInfo[64]<<8|deviceInfo[65];

int height=deviceInfo[66]<<8|deviceInfo[67];

LOGD("Device width: %d",width);

LOGD("Device height: %d",height);

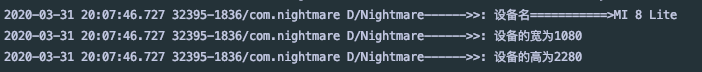

看一下调试图

SocketConnection *socketConnection;

LOGD("Connecting");

socketConnection = new SocketConnection();

if (!socketConnection->connect_server()) {

return;

}

LOGD("Connected");

uint8_t zeroChar[1];

// This receive consumes the zero byte sent by the server

socketConnection->recv_from_(reinterpret_cast<uint8_t *>(zeroChar), 1);

uint8_t deviceInfo[68];

socketConnection->recv_from_(reinterpret_cast<uint8_t *>(deviceInfo), 68);

LOGD("Device name===========>%s", deviceInfo);

int width=deviceInfo[64]<<8|deviceInfo[65];

int height=deviceInfo[66]<<8|deviceInfo[67];

LOGD("Device width: %d",width);

LOGD("Device height: %d",height);

// std::cout<<

// Initialize ffmpeg's network module

avformat_network_init();

AVFormatContext *formatContext = avformat_alloc_context();

unsigned char *buffer = static_cast<unsigned char *>(av_malloc(BUF_SIZE));

AVIOContext *avio_ctx = avio_alloc_context(buffer, BUF_SIZE,

0, socketConnection,

read_socket_buffer, NULL,

NULL);

formatContext->pb = avio_ctx;

int ret = avformat_open_input(&formatContext, NULL, NULL, NULL);

if (ret < 0) {

LOGD("avformat_open_input error :%s\n", av_err2str(ret));

return;

}

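LOGD("Input opened");

// The following is mandatory when decoding an ordinary video, but here it never gets the info and blocks the thread

// Fill the allocated AVFormatContext with stream data

// if (avformat_find_stream_info(formatContext, NULL) < 0) {

// LOGD("Failed to read the stream info of the input.");

// return;

// }

LOGD("Number of streams in the current video data: %d", formatContext->nb_streams);

// Below is the usual way to obtain the decoder; here the decoder is simply set to h264

// Find the video stream. The nb_streams field of AVFormatContext holds the total number of streams in the file:

// video streams, audio streams, subtitle streams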

// for (int i = 0; i < formatContext->nb_streams; i++) {

//

// // A stream whose codec type is AVMEDIA_TYPE_VIDEO is the video stream.

// if (formatContext->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {

// video_stream_index = i; // remember the video stream index

// break;

// }

// }

// if (video_stream_index == -1) {

// LOGD("No video stream found.");

// return;

// }

// Get the (video) stream decoder for the given codec_id

AVCodec *videoDecoder = avcodec_find_decoder(AV_CODEC_ID_H264);

if (videoDecoder == NULL) {

LOGD("No matching stream decoder found.");

return;

}

LOGD("Decoder found.");

// Allocate a decoder context for the decoder (initialized with default values)

AVCodecContext *codecContext = avcodec_alloc_context3(videoDecoder);

if (codecContext == NULL) {

LOGD("Failed to allocate the decoder context.");

return;

}

LOGD("Decoder context allocated.");

// Fill the codec context from the given codec parameters

// if (avcodec_parameters_to_context(codecContext, codecParameters) < 0) {

// LOGD("Failed to fill the codec context.");

// return;

// }

//

// LOGD("Codec context filled.");

// Initialize the codec context with the given codec

if (avcodec_open2(codecContext, videoDecoder, NULL) < 0) {

LOGD("Failed to initialize the decoder context.");

return;

}

LOGD("Decoder context initialized.");

AVPixelFormat dstFormat = AV_PIX_FMT_RGBA;

codecContext->pix_fmt = AV_PIX_FMT_YUV420P;

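// Allocate the AVPacket struct that holds compressed data

// For a video stream, an AVPacket contains one frame of compressed data.

// For audio it may contain several frames of compressed data

AVPacket *packet = av_packet_alloc();

// Allocate the struct that holds each decoded frame

AVFrame *frame = av_frame_alloc();

// Allocate the struct for the destination frame that is finally displayed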

AVFrame *outFrame = av_frame_alloc();

codecContext->width = width;

codecContext->height = height;

uint8_t *out_buffer = (uint8_t *) av_malloc(

(size_t) av_image_get_buffer_size(dstFormat, codecContext->width, codecContext->height,

1));

// Set up the frame's data pointers and line sizes from the given buffer

av_image_fill_arrays(outFrame->data, outFrame->linesize, out_buffer, dstFormat,

codecContext->width, codecContext->height, 1);

// Initialize SwsContext

SwsContext *swsContext = sws_getContext(

codecContext->width // source width

, codecContext->height // source height

, codecContext->pix_fmt // source pixel format

, codecContext->width // destination width

, codecContext->height // destination height

, dstFormat, SWS_BICUBIC, NULL, NULL, NULL

);

if (swsContext == NULL) {

LOGD("swsContext==NULL");

return;

}

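LOGD("swsContext initialized");

// Android native drawing window

ANativeWindow *nativeWindow = ANativeWindow_fromSurface(env, surface);

// Buffer that describes the drawing surface

ANativeWindow_Buffer outBuffer;

// Setting width and height limits the number of pixels in the buffer, not the physical display size of the screen.

// If the buffer does not match the physical display size, the image may be shown stretched or squashed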

ANativeWindow_setBuffersGeometry(nativeWindow, codecContext->width, codecContext->height,

WINDOW_FORMAT_RGBA_8888);

// Loop, reading the next frame of the stream

LOGD("Decoding");

while (av_read_frame(formatContext, packet) == 0) {

// Send the compressed packet to the decoder

int sendPacketState = avcodec_send_packet(codecContext, packet);

if (sendPacketState == 0) {

int receiveFrameState = avcodec_receive_frame(codecContext, frame);

if (receiveFrameState == 0) {

// Lock the window's drawing surface

ANativeWindow_lock(nativeWindow, &outBuffer, NULL);

// Convert the output image: color format, scaling, filtering

sws_scale(swsContext, (const uint8_t *const *) frame->data, frame->linesize, 0,

frame->height, outFrame->data, outFrame->linesize);

uint8_t *dst = (uint8_t *) outBuffer.bits;

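// Start address of the converted pixel data

// Because the format here is RGBA, the image data is stored only in data[0]. With YUV there would be data[0],

// data[1], data[2]

uint8_t *src = outFrame->data[0];

// Bytes per row of the window buffer (stride is in pixels, 4 bytes per RGBA pixel)

int oneLineByte = outBuffer.stride * 4;

// Actual number of bytes to copy per row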

int srcStride = outFrame->linesize[0];

for (int i = 0; i < codecContext->height; i++) {

memcpy(dst + i * oneLineByte, src + i * srcStride, srcStride);

}

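// Unlock and post

ANativeWindow_unlockAndPost(nativeWindow);

// Sleep briefly. Too long a sleep makes every frame feel delayed; too short a sleep makes playback feel sped up.

// Typically sleep for one frame at 60 frames per second, about 16 ms per frame

} else if (receiveFrameState == AVERROR(EAGAIN)) {

LOGD("Failed to receive data from the decoder: AVERROR(EAGAIN)");

} else if (receiveFrameState == AVERROR_EOF) {

LOGD("Failed to receive data from the decoder: AVERROR_EOF");

} else if (receiveFrameState == AVERROR(EINVAL)) {

LOGD("Failed to receive data from the decoder: AVERROR(EINVAL)");

} else {

LOGD("Failed to receive data from the decoder: unknown");

}

} else if (sendPacketState == AVERROR(EAGAIN)) {// input rejected; the decoded output must be read first

LOGD("Failed to send the packet to the decoder: AVERROR(EAGAIN)");

} else if (sendPacketState == AVERROR_EOF) {// the decoder has been flushed and no new packets can be sent to it

LOGD("Failed to send data to the decoder: AVERROR_EOF");

} else if (sendPacketState == AVERROR(EINVAL)) {// the codec is not opened, or it is an encoder, or it needs a flush

LOGD("Failed to send data to the decoder: AVERROR(EINVAL)");

} else if (sendPacketState == AVERROR(ENOMEM)) {// the packet could not be added to the decoder queue, or a decoding error occurred

LOGD("Failed to send data to the decoder: AVERROR(ENOMEM)");

} else {

LOGD("Failed to send data to the decoder: unknown");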

}

av_packet_unref(packet);

}

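ANativeWindow_release(nativeWindow);

sws_freeContext(swsContext);

av_free(out_buffer);

av_frame_free(&outFrame);

av_frame_free(&frame);

av_packet_free(&packet);

avcodec_free_context(&codecContext);

// avformat_close_input frees the context and sets the pointer to NULL, so avformat_free_context is not needed

avformat_close_input(&formatContext);

// With custom I/O, the AVIOContext and its buffer have to be freed by the caller

av_freep(&avio_ctx->buffer);

avio_context_free(&avio_ctx);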

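Note that none of the commented-out blocks in the function above can be re-enabled. Anyone who has worked with audio/video decoding will recognize them as steps that normal video playback always goes through, but they do not work here.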

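How to render

Using the Flutter Surface creation method from the earlier article, we get the corresponding textureId and hand it to Flutter, which renders the picture. The Surface object is passed into the function above, and the C++ code draws into that window directly.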
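Drawing into the window directly comes down to a row-by-row copy, because the window buffer and the converted frame may pad their rows differently. The decode loop copies linesize[0] bytes per row, which works as long as that value does not exceed the window's stride in bytes. A slightly more defensive sketch copies only the visible pixels of each row; `copyRows` is a hypothetical helper and all parameters are in bytes.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copies `rows` rows of `rowBytes` bytes each between two buffers whose rows
// start dstStrideBytes and srcStrideBytes apart. The caller is assumed to make
// sure rowBytes fits into both strides.
void copyRows(uint8_t *dst, int dstStrideBytes,
              const uint8_t *src, int srcStrideBytes,
              int rowBytes, int rows) {
    for (int y = 0; y < rows; ++y) {
        std::memcpy(dst + static_cast<std::size_t>(y) * dstStrideBytes,
                    src + static_cast<std::size_t>(y) * srcStrideBytes,
                    static_cast<std::size_t>(rowBytes));
    }
}
```

For the RGBA window used here, rowBytes would be the frame width times 4, dstStrideBytes would be outBuffer.stride * 4, and srcStrideBytes would be outFrame->linesize[0].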

In Dart:

init() async {

SystemChrome.setEnabledSystemUIOverlays([SystemUiOverlay.bottom]);

texTureId = await videoPlugin.invokeMethod("");

setState(() {});

networkManager = NetworkManager("127.0.0.1", 5005);

await networkManager.init();

}

NetworkManager is a simple socket wrapper class:

class NetworkManager {

final String host;

final int port;

Socket socket;

static Stream<List<int>> mStream;

Int8List cacheData = Int8List(0);

NetworkManager(this.host, this.port);

Future<void> init() async {

try {

socket = await Socket.connect(host, port, timeout: Duration(seconds: 3));

} catch (e) {

print("Exception while connecting the socket, e=${e.toString()}");

// Without a connection the socket is null, so stop here

return;

}

mStream = socket.asBroadcastStream();

// socket.listen(decodeHandle,

// onError: errorHandler, onDone: doneHandler, cancelOnError: false);

}

***

We got the device size in C++, but the Dart side does not have it, and Flutter's Texture widget fills the whole screen by default. So Dart needs the device width and height too:

ProcessResult _result = await Process.run(

"sh",

["-c", "adb -s $currentIp shell wm size"],

environment: {"PATH": EnvirPath.binPath},

runInShell: true,

);

var tmp=_result.stdout.toString().replaceAll("Physical size: ", "").trim().split("x");

int width=int.tryParse(tmp[0]);

int height=int.tryParse(tmp[1]);

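$currentIp is the IP of the current device, which avoids ambiguity when several devices are connected. The adb binary used here was cross-compiled to run on the Android device. Finally, we wrap the Texture in an AspectRatio: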

AspectRatio(

aspectRatio:

width / height,

***

This way the Texture on the client keeps the aspect ratio of the remote device's screen.
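The size lookup is just a matter of pulling two integers out of the `wm size` output. For consistency with the other sketches it is shown in C++ rather than Dart; `ScreenSize` and `parseWmSize` are hypothetical names. It reads the "Physical size:" line and ignores anything else, such as an "Override size:" line that some devices print as well.

```cpp
#include <cstddef>
#include <cstdio>
#include <string>

struct ScreenSize {
    int width = 0;
    int height = 0;
};

// Parses output such as "Physical size: 1080x2280\n".
// Returns false if no "Physical size:" entry with two integers is found.
bool parseWmSize(const std::string &output, ScreenSize &size) {
    const std::string key = "Physical size:";
    std::size_t pos = output.find(key);
    if (pos == std::string::npos) {
        return false;
    }
    int width = 0;
    int height = 0;
    // %d skips the leading space, then the literal 'x' separates the numbers.
    if (std::sscanf(output.c_str() + pos + key.size(), "%dx%d", &width, &height) != 2) {
        return false;
    }
    size.width = width;
    size.height = height;
    return true;
}
```

The aspect ratio handed to the widget is then width divided by height as a floating-point value.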

Control

A GestureDetector is the natural choice here, as follows:

GestureDetector(

behavior: HitTestBehavior.translucent,

onPanDown: (details) {

onPanDown = Offset(details.globalPosition.dx / fulldx,

(details.globalPosition.dy) / fulldy);

int x = (onPanDown.dx * fulldx*window.devicePixelRatio).toInt();

int y = (onPanDown.dy * fulldy*window.devicePixelRatio).toInt();

networkManager.sendByte([

2,

0,

2,

3,

4,

5,

6,

7,

8,

9,

x >> 24,

x << 8 >> 24,

x << 16 >> 24,

x << 24 >> 24,

y >> 24,

y << 8 >> 24,

y << 16 >> 24,

y << 24 >> 24,

1080 >> 8,

1080 << 8 >> 8,

2280 >> 8,

2280 << 8 >> 8,

0,

0,

0,

0,

0,

0

]);

newOffset = Offset(details.globalPosition.dx / fulldx,

(details.globalPosition.dy) / fulldy);

},

onPanUpdate: (details) {

newOffset = Offset(details.globalPosition.dx / fulldx,

(details.globalPosition.dy) / fulldy);

int x = (newOffset.dx * fulldx*window.devicePixelRatio).toInt();

int y = (newOffset.dy * fulldy*window.devicePixelRatio).toInt();

networkManager.sendByte([

2,

2,

2,

3,

4,

5,

6,

7,

8,

9,

x >> 24,

x << 8 >> 24,

x << 16 >> 24,

x << 24 >> 24,

y >> 24,

y << 8 >> 24,

y << 16 >> 24,

y << 24 >> 24,

1080 >> 8,

1080 << 8 >> 8,

2280 >> 8,

2280 << 8 >> 8,

0,

0,

0,

0,

0,

0

]);

},

onPanEnd: (details) async {

int x = (newOffset.dx * fulldx*window.devicePixelRatio).toInt();

int y = (newOffset.dy * fulldy*window.devicePixelRatio).toInt();

networkManager.sendByte([

2,

1,

2,

3,

4,

5,

6,

7,

8,

9,

x >> 24,

x << 8 >> 24,

x << 16 >> 24,

x << 24 >> 24,

y >> 24,

y << 8 >> 24,

y << 16 >> 24,

y << 24 >> 24,

1080 >> 8,

1080 << 8 >> 8,

2280 >> 8,

2280 << 8 >> 8,

0,

0,

0,

0,

0,

0

]);

},

child: Container(

alignment: Alignment.topLeft,

// color: MToolkitColors.appColor.withOpacity(0.5),

// child: Image.memory(Uint8List.fromList(list)),

width: fulldx,

height: fulldy,

),

),
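The three byte lists above share one 28-byte layout and differ only in the second byte and the coordinates. The sketch below builds the same message with explicit big-endian packing, shown in C++ for consistency with the other sketches. The field names are my interpretation of the list: type 2, then the action (0 on down, 2 on move, 1 on up), an 8-byte pointer id, x and y as 4 bytes each, screen width and height as 2 bytes each, and six trailing bytes that I assume to be a 2-byte pressure and 4-byte buttons field. `buildTouchMessage` and the other names are hypothetical.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

constexpr std::size_t kTouchMessageLength = 28;
constexpr uint8_t kTypeInjectTouchEvent = 2;  // first byte of each list above

enum class TouchAction : uint8_t { Down = 0, Up = 1, Move = 2 };

// Writes value into out, most significant byte first.
template <typename T>
void putBigEndian(uint8_t *out, T value) {
    for (std::size_t i = 0; i < sizeof(T); ++i) {
        out[i] = static_cast<uint8_t>(value >> (8 * (sizeof(T) - 1 - i)));
    }
}

std::array<uint8_t, kTouchMessageLength> buildTouchMessage(
        TouchAction action, uint64_t pointerId, uint32_t x, uint32_t y,
        uint16_t screenWidth, uint16_t screenHeight,
        uint16_t pressure, uint32_t buttons) {
    std::array<uint8_t, kTouchMessageLength> msg{};
    msg[0] = kTypeInjectTouchEvent;
    msg[1] = static_cast<uint8_t>(action);
    putBigEndian(&msg[2], pointerId);      // bytes 2..9
    putBigEndian(&msg[10], x);             // bytes 10..13
    putBigEndian(&msg[14], y);             // bytes 14..17
    putBigEndian(&msg[18], screenWidth);   // bytes 18..19
    putBigEndian(&msg[20], screenHeight);  // bytes 20..21
    putBigEndian(&msg[22], pressure);      // bytes 22..23 (assumed meaning)
    putBigEndian(&msg[24], buttons);       // bytes 24..27 (assumed meaning)
    return msg;
}
```

Masking each value down to a single byte explicitly avoids relying on the socket layer to truncate out-of-range integers, and passing the screen size as parameters removes the hard-coded 1080 and 2280.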

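OK, here is the final result (allow me to reuse the GIF from the previous post).

- The display has some latency

- For now, an Android device can only control another Android device, over the LAN or via OTG

- Desktop platforms such as Linux have no video playback solution yet (I tried creating an OpenGL surface there as well, without success; all I ended up with was an extremely poor player that was unusable), but the control side of this approach does work on Linux

Open-sourcing this will have to wait until I have sorted out some local issues.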