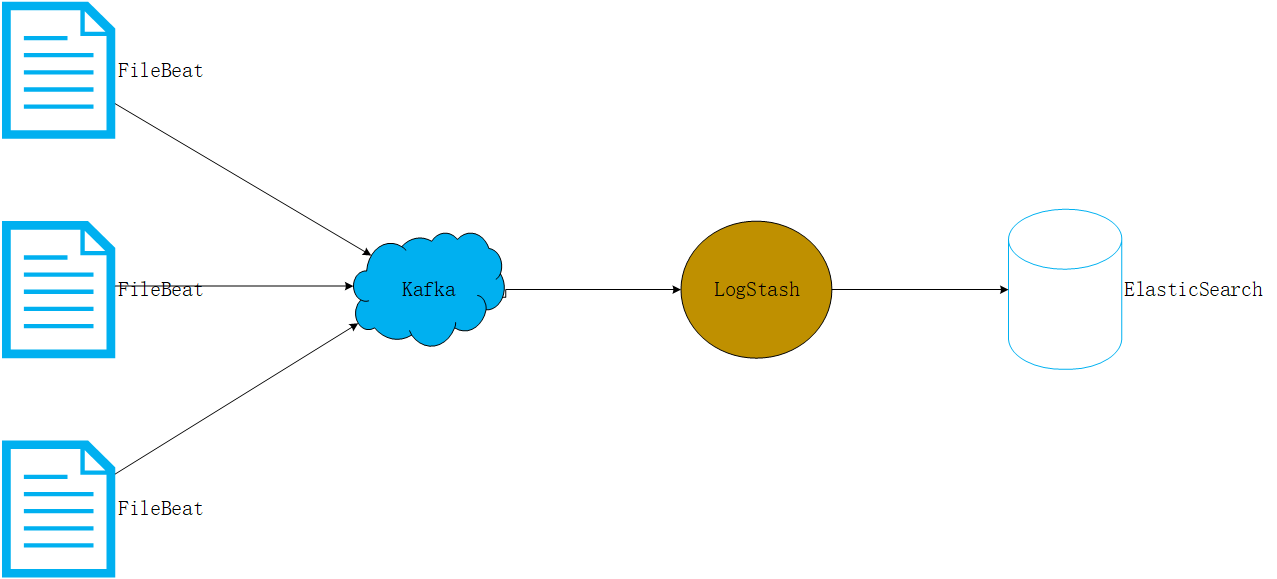

Overall architecture:

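FileBeat: collects the logs produced by each service on the host and ships them to Kafka.

Kafka: a high-throughput message bus that buffers the log data. It also leaves room for adding log formatting later: FileBeat would write raw logs to one topic, a formatting service would subscribe to that topic, reformat the entries, and write them back to a second Kafka topic for Logstash to consume.

LogStash: subscribes to the log topic in Kafka, filters the logs, splits them into one index per day, and writes them to Elasticsearch.

ElasticSearch: the database that stores the logs.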
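The Kafka extension mentioned above (raw topic → formatting service → formatted topic) can be pictured with a small in-memory sketch. It is an illustration under loudly stated assumptions, not part of the described setup: both topic names and the formatting rule (upper-casing a `level` field) are hypothetical, and two `deque`s stand in for Kafka topics so the example runs without a broker.

```python
import json
from collections import deque

# In-memory stand-ins for two Kafka topics. Both names are hypothetical:
# the configuration in this article uses a single topic, "sys-logs".
topics = {"sys-logs-raw": deque(), "sys-logs-formatted": deque()}


def format_event(raw: str) -> str:
    """Hypothetical formatting rule: parse the raw JSON log line and
    normalise an (assumed) "level" field to trimmed upper case."""
    event = json.loads(raw)
    if isinstance(event.get("level"), str):
        event["level"] = event["level"].strip().upper()
    return json.dumps(event, ensure_ascii=False)


def run_formatter_once(src: str, dst: str) -> int:
    """Move every message currently in `src` through format_event into `dst`.

    A real service would poll a Kafka consumer, write with a Kafka producer,
    and commit the consumer offset only after the write succeeds.
    """
    moved = 0
    while topics[src]:
        topics[dst].append(format_event(topics[src].popleft()))
        moved += 1
    return moved


topics["sys-logs-raw"].append('{"id": "1", "level": " info ", "LogTime": "2023-05-01 08:00:00"}')
print(run_formatter_once("sys-logs-raw", "sys-logs-formatted"))  # 1
print(topics["sys-logs-formatted"][0])  # {"id": "1", "level": "INFO", "LogTime": "2023-05-01 08:00:00"}
```

With this split, Logstash would subscribe to the formatted topic instead of the raw one, and the formatting logic could change without touching FileBeat or Logstash.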

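ElasticSearch index template and index alias:

1. With an ElasticSearch index alias, a single query against the alias searches every index mapped to it.
2. With an index template, every newly created index is automatically added to that alias.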
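The two points can be modelled in a few lines of Python. This is only a sketch: it assumes Elasticsearch's `*` wildcard in `index_patterns` behaves like `fnmatch` for a trailing `*`, and it ignores that Elasticsearch attaches the alias once, at index creation, rather than re-evaluating names on every query. The pattern and alias values are taken from the template in this article.

```python
from fnmatch import fnmatchcase

# Values copied from the index template in this article.
INDEX_PATTERNS = ["sys-log-*"]
ALIAS = "sys-logs"


def aliases_at_creation(index_name: str) -> list:
    """Aliases the template attaches when an index with this name is created."""
    if any(fnmatchcase(index_name, p) for p in INDEX_PATTERNS):
        return [ALIAS]
    return []


def resolve(target: str, indices: list) -> list:
    """Indices a search against `target` covers: every index carrying the alias
    when `target` is the alias, otherwise only the index with that exact name."""
    if target == ALIAS:
        return [i for i in indices if ALIAS in aliases_at_creation(i)]
    return [i for i in indices if i == target]


indices = ["sys-log-2023-05-01", "sys-log-2023-05-02", "other-index"]
print(resolve("sys-logs", indices))  # ['sys-log-2023-05-01', 'sys-log-2023-05-02']
```

Note that the alias name `sys-logs` does not itself match `sys-log-*`, so the alias and the daily indices cannot collide.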

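Create the index template: send the following request. From then on, every newly created index whose name starts with sys-log- is automatically added to the alias sys-logs. The mappings section uses pre-7.0 syntax: the _default_ mapping type and the _all field were removed in Elasticsearch 7.0, and the legacy _template API was superseded by _index_template in 7.8.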

```
PUT /_template/sys-logs_template
{
  "index_patterns": ["sys-log-*"],
  "order": 1,
  "settings": {
    "number_of_shards": 3,
    "number_of_replicas": 1
  },
  "aliases": {
    "sys-logs": {}
  },
  "mappings": {
    "_default_": {
      "_all": {
        "enabled": false
      }
    }
  }
}
```
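For scripted setups, the same template can be sent from Python with only the standard library. This sketch builds the request without sending it; the base URL `http://127.0.0.1:9200` is an assumption (the host the Logstash output uses), and the body copies the console request above, so it carries the same pre-7.0 mapping syntax.

```python
import json
import urllib.request

ES_URL = "http://127.0.0.1:9200"  # assumption: same host as the Logstash output

# Same body as the console request above (pre-7.0 mapping syntax).
TEMPLATE = {
    "index_patterns": ["sys-log-*"],
    "order": 1,
    "settings": {"number_of_shards": 3, "number_of_replicas": 1},
    "aliases": {"sys-logs": {}},
    "mappings": {"_default_": {"_all": {"enabled": False}}},
}


def build_template_request(base_url: str) -> urllib.request.Request:
    """PUT /_template/sys-logs_template with a JSON body.
    Elasticsearch 6.0+ rejects JSON bodies without a Content-Type header."""
    return urllib.request.Request(
        f"{base_url}/_template/sys-logs_template",
        data=json.dumps(TEMPLATE).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


req = build_template_request(ES_URL)
# Sending it needs a reachable cluster:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status, resp.read().decode("utf-8"))
```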

Query the logs through the alias:

```
GET /sys-logs/_search
{
  "query": { "match_all": {} }
}
```
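Because every daily index is named sys-log-yyyy-MM-dd, a client can search either all days through the alias or a single day directly. A minimal standard-library sketch, assuming the same base URL as above; `build_search_request` is a hypothetical helper, and POST is used because `_search` accepts it as well as GET.

```python
import json
import urllib.request
from datetime import date
from typing import Optional

ES_URL = "http://127.0.0.1:9200"  # assumption: same host as the Logstash output


def build_search_request(base_url: str, day: Optional[date] = None, size: int = 10) -> urllib.request.Request:
    """Search through the alias (all days) or, when `day` is given, one daily index."""
    target = "sys-logs" if day is None else f"sys-log-{day.isoformat()}"
    body = {"query": {"match_all": {}}, "size": size}
    # _search accepts POST as well as GET; POST avoids sending a body with GET.
    return urllib.request.Request(
        f"{base_url}/{target}/_search",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


print(build_search_request(ES_URL).full_url)                    # http://127.0.0.1:9200/sys-logs/_search
print(build_search_request(ES_URL, date(2023, 5, 1)).full_url)  # http://127.0.0.1:9200/sys-log-2023-05-01/_search
```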

LogStash configuration that splits the logs into one index per day:

```
input {
  kafka {
    codec => json
    bootstrap_servers => "127.0.0.1:9092"
    client_id => "logstash-0"
    group_id => "logstash-group"
    auto_offset_reset => "latest"   # start from the newest offset when the group has no committed offset
    decorate_events => false        # if true, adds Kafka metadata (topic, consumer group, partition, offset, key) under [@metadata][kafka]
    topics => ["sys-logs"]          # array; several topics may be listed
    retry_backoff_ms => 100
    consumer_threads => 3
  }
}
```

```
filter {
  ## Copy LogTime into @logDate and keep only its leading yyyy-MM-dd part,
  ## which becomes the suffix of the daily index name
  ruby {
    code => "event.set('@logDate', event.get('LogTime'))"
  }
  mutate {
    convert => { "@logDate" => "string" }
    gsub => ["@logDate", "(?<=\d{4}\-\d{2}\-\d{2})\s.*", ""]
  }

  ## Splitting with %{+YYYY-MM-dd} instead reads the @timestamp field, which is in UTC
  ## unless adjusted, i.e. 8 hours behind Beijing time (UTC+8)
  # ruby {
  #   code => "event.set('timestamp', event.get('@timestamp').time.localtime + 8*60*60)"
  # }
  # ruby {
  #   code => "event.set('@timestamp', event.get('timestamp'))"
  # }
  # mutate {
  #   remove_field => ["timestamp"]
  # }
}
```
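The date handling in this filter can be checked outside Logstash. This Python sketch assumes LogTime is a string such as "2023-05-02 01:30:00.123" written in Beijing time; the gsub pattern is copied unchanged (Python's `re` and Ruby's regex engine treat it the same way). It also shows why the commented-out %{+YYYY-MM-dd} route needs the +8 hour fix: a log written at 01:30 Beijing time still falls on the previous day in UTC.

```python
import re
from datetime import datetime, timedelta, timezone

# Same pattern as the gsub above: remove the whitespace and everything after it
# that follows a leading yyyy-MM-dd date.
DATE_TAIL = re.compile(r"(?<=\d{4}\-\d{2}\-\d{2})\s.*")

BEIJING = timezone(timedelta(hours=8))


def log_date(log_time: str) -> str:
    """Value @logDate ends up with for a given LogTime string."""
    return DATE_TAIL.sub("", log_time)


def utc_day(local_time: datetime) -> str:
    """Day that %{+YYYY-MM-dd} yields from an unadjusted (UTC) @timestamp."""
    return local_time.astimezone(timezone.utc).strftime("%Y-%m-%d")


t = datetime(2023, 5, 2, 1, 30, tzinfo=BEIJING)  # 01:30 Beijing time on 2 May
print("sys-log-" + log_date("2023-05-02 01:30:00.123"))  # sys-log-2023-05-02
print("sys-log-" + utc_day(t))                            # sys-log-2023-05-01
```

Taking the date from the log's own LogTime field therefore keeps every entry in the index of the local day it was written, without touching @timestamp.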

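```
output {
  # Write to Elasticsearch, one index per day
  elasticsearch {
    hosts => ["127.0.0.1:9200"]
    index => "sys-log-%{[@logDate]}"
    document_type => "sys-log"   # mapping type; deprecated from Elasticsearch 7.0
    document_id => "%{id}"       # each event must carry an "id" field, otherwise the literal text "%{id}" becomes the ID
  }
}
```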
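Both index and document_id rely on Logstash's %{...} field references. The sketch below is a simplified Python model of that interpolation (top-level fields only; `sprintf` here is a hypothetical helper, not a Logstash API). It captures the behaviour that matters for this output: a reference to a missing field is left in the result unchanged.

```python
import re

# Matches %{field} and %{[field]} references (top-level fields only).
FIELD_REF = re.compile(r"%\{\[?([^\]}]+)\]?\}")


def sprintf(template: str, event: dict) -> str:
    """Simplified model of Logstash's %{...} interpolation: a reference to a
    field the event lacks is left in the output unchanged."""
    def substitute(match):
        value = event.get(match.group(1))
        return match.group(0) if value is None else str(value)
    return FIELD_REF.sub(substitute, template)


events = [
    {"@logDate": "2023-05-02", "id": "a1"},
    {"@logDate": "2023-05-02"},  # no "id" field
    {"@logDate": "2023-05-02"},  # no "id" field
]
for e in events:
    print(sprintf("sys-log-%{[@logDate]}", e), sprintf("%{id}", e))
# sys-log-2023-05-02 a1
# sys-log-2023-05-02 %{id}
# sys-log-2023-05-02 %{id}
```

The last two events get the same document ID, so the second overwrites the first in sys-log-2023-05-02. Every log line therefore needs a unique id field for document_id => "%{id}" to be safe.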

FileBeat configuration:

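```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /home/test_account/*/logs/filebeat/*.log
    fields:
      service: sys-log
    ## The log body is a JSON string in the message field: decode it into top-level fields, then drop message
    processors:
      - decode_json_fields:
          fields: ["message"]
          target: ""
          overwrite_keys: true
      - drop_fields:
          fields: ["message"]
    scan_frequency: 8s
    close_inactive: 5m   # the original read "close_*", a family of options rather than an option name; close_inactive is assumed
    harvester_limit: 10
    # spool_size: 256    # Filebeat 5.x setting, removed in 6.0 (replaced by queue.mem.*)
    # idle_timeout: 30s  # Filebeat 5.x setting, removed in 6.0

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

# Only applied when Filebeat loads its index template into Elasticsearch; no effect with output.kafka
setup.template.settings:
  index.number_of_shards: 3

setup.kibana:

output.kafka:
  hosts: ["127.0.0.1:9092"]
  topic: "sys-logs"
  required_acks: 1
  max_retries: -1   # a negative value retries until the events are published
```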
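The two processors in this input run in order: decode_json_fields merges the JSON object in message into the event root, then drop_fields removes message. The Python sketch below is a rough model of that pipeline, not Filebeat code; `process` and the sample events are hypothetical. It makes one consequence of the configuration visible: drop_fields runs unconditionally, so a line that is not valid JSON loses its body entirely.

```python
import json


def decode_json_fields(event: dict, overwrite_keys: bool = True) -> dict:
    """Rough model of decode_json_fields with fields=["message"] and target="":
    merge the JSON object held in "message" into the root of the event."""
    raw = event.get("message")
    try:
        decoded = json.loads(raw) if isinstance(raw, str) else None
    except ValueError:  # not valid JSON: the event passes through unchanged
        decoded = None
    merged = dict(event)
    if isinstance(decoded, dict):
        for key, value in decoded.items():
            if overwrite_keys or key not in merged:
                merged[key] = value
    return merged


def drop_fields(event: dict, fields: list) -> dict:
    """Model of drop_fields: remove the listed fields unconditionally."""
    return {k: v for k, v in event.items() if k not in fields}


def process(event: dict) -> dict:
    # Processors run in the order they are listed in the input.
    return drop_fields(decode_json_fields(event), ["message"])


ok = process({"message": '{"id": "a1", "LogTime": "2023-05-02 01:30:00"}', "fields": {"service": "sys-log"}})
bad = process({"message": "plain text, not JSON", "fields": {"service": "sys-log"}})
print(ok)   # {'fields': {'service': 'sys-log'}, 'id': 'a1', 'LogTime': '2023-05-02 01:30:00'}
print(bad)  # {'fields': {'service': 'sys-log'}}
```

If services may write non-JSON lines, attaching a condition to drop_fields would keep message for those lines instead of discarding it.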