I. Start by creating Pods and a Service

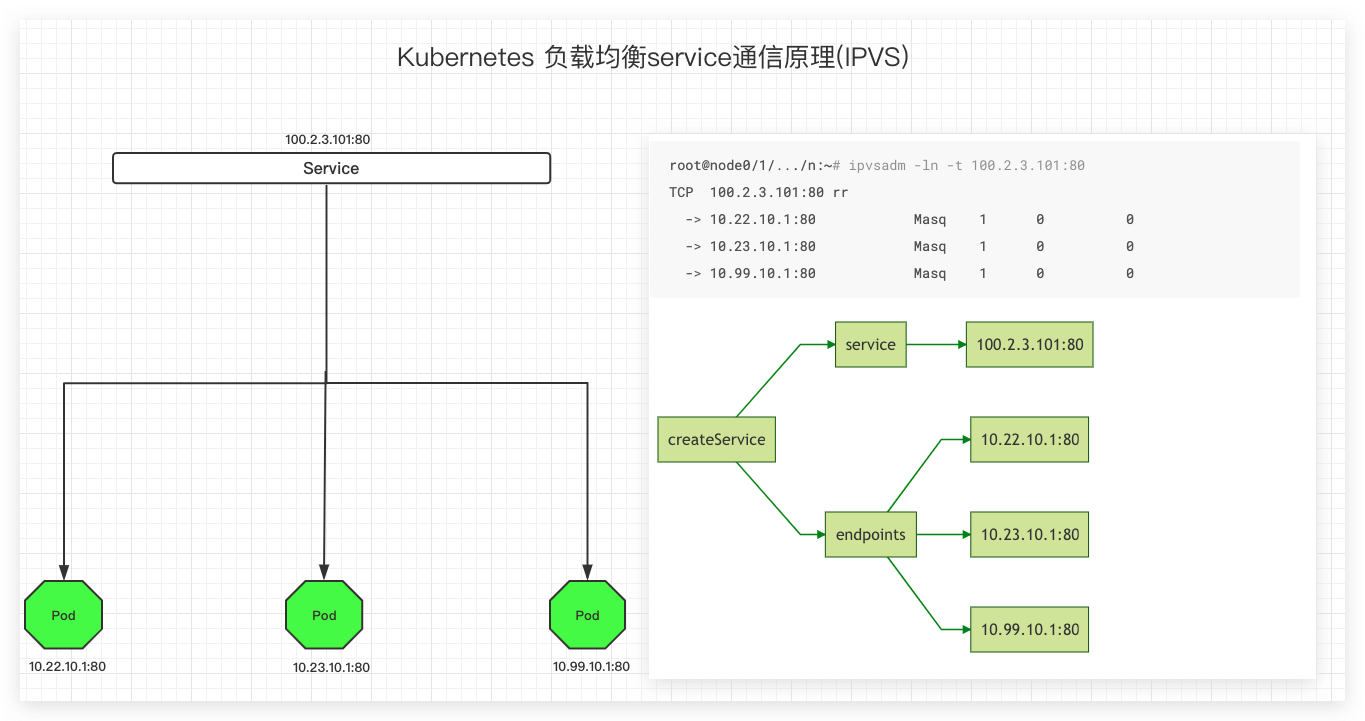

As shown above, we create three Pods and expose their port 80 through a Service:

kubectl run web --image nginx --replicas 3 && kubectl expose deployment web --port 80

$ [K8sNDev] kubectl get pod,service -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-57cfbc8957-2dmq6 1/1 Running 0 29s 100.74.135.1 node3 <none> <none>

pod/web-57cfbc8957-c2cp4 1/1 Running 0 29s 100.108.11.194 node2 <none> <none>

pod/web-57cfbc8957-qcbvc 1/1 Running 0 29s 100.66.209.194 node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/web ClusterIP 10.104.197.218 <none> 80/TCP 24s run=web

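II. Inspect the IPVS rules on every node

kube-proxy has created a virtual service rule for 10.104.197.218:80 on every node. Each rule has three real servers, which are the IPs and service ports of the three Pods.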

uniops@192-168-137-205:~$ supercommod -isudo zk8s "ipvsadm -ln -t 10.104.197.218:80"

zmaster0 znode0 znode1 znode2 znode3 znode4

*************[zmaster0]*************

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.104.197.218:80 rr

-> 100.66.209.194:80 Masq 1 0 0

-> 100.74.135.1:80 Masq 1 0 0

-> 100.108.11.194:80 Masq 1 0 0

*************[znode0]*************

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.104.197.218:80 rr

-> 100.66.209.194:80 Masq 1 0 0

-> 100.74.135.1:80 Masq 1 0 0

-> 100.108.11.194:80 Masq 1 0 0

*************[znode1]*************

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.104.197.218:80 rr

-> 100.66.209.194:80 Masq 1 0 0

-> 100.74.135.1:80 Masq 1 0 0

-> 100.108.11.194:80 Masq 1 0 0

*************[znode2]*************

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.104.197.218:80 rr

-> 100.66.209.194:80 Masq 1 0 0

-> 100.74.135.1:80 Masq 1 0 0

-> 100.108.11.194:80 Masq 1 0 0

*************[znode3]*************

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.104.197.218:80 rr

-> 100.66.209.194:80 Masq 1 0 0

-> 100.74.135.1:80 Masq 1 0 0

-> 100.108.11.194:80 Masq 1 0 0

*************[znode4]*************

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.104.197.218:80 rr

-> 100.66.209.194:80 Masq 1 0 0

-> 100.74.135.1:80 Masq 1 0 0

-> 100.108.11.194:80 Masq 1 0 0
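The mapping from the virtual service to its real servers can be pulled out of the `ipvsadm -ln` output with a small filter. This is only a sketch: the function name `real_servers` is made up for illustration, and the sample text is copied from the zmaster0 output above rather than read from a live node.

```shell
# List the real servers behind one virtual service in `ipvsadm -ln` output.
# Hypothetical helper; on a node it could be fed with:
#   ipvsadm -ln -t 10.104.197.218:80 | real_servers 10.104.197.218:80
real_servers() {
  awk -v vip="$1" '
    # A virtual service line starts with the protocol; remember if it is ours.
    $1 == "TCP" || $1 == "UDP" { in_svc = ($2 == vip); next }
    # Real server lines start with "->"; skip the column header.
    in_svc && $1 == "->" && $2 != "RemoteAddress:Port" { print $2 }
  '
}

# Sample input copied from the zmaster0 output above.
sample='Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.104.197.218:80 rr
  -> 100.66.209.194:80 Masq 1 0 0
  -> 100.74.135.1:80 Masq 1 0 0
  -> 100.108.11.194:80 Masq 1 0 0'

printf '%s\n' "$sample" | real_servers 10.104.197.218:80
```

With the sample input this prints the three Pod endpoints, one per line, which should match the IP column of `kubectl get pod -owide`.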

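III. Access test

Every node can reach the service provided by the Pods. The requests are forwarded to a Pod according to the IPVS rules shown above.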

uniops@192-168-137-205:~$ supercommod -i zk8s "curl -sL -w 'Status code: %{http_code}' 10.104.197.218 -o /dev/null"

zmaster0 znode0 znode1 znode2 znode3 znode4

*************[zmaster0]*************

Status code: 200

*************[znode0]*************

Status code: 200

*************[znode1]*************

Status code: 200

*************[znode2]*************

Status code: 200

*************[znode3]*************

Status code: 200

*************[znode4]*************

Status code: 200
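The `rr` in the ipvsadm output is the round-robin scheduler: each new connection goes to the next real server in turn. The sketch below only imitates that selection order in plain shell to make the idea concrete. The real scheduling happens inside the kernel on each node independently, and the names `pick_backend` and `CHOSEN` are invented for this illustration.

```shell
# Illustrative round-robin over the three real servers listed by ipvsadm.
backends="100.66.209.194:80 100.74.135.1:80 100.108.11.194:80"
next=0

# pick_backend stores its choice in the global CHOSEN and advances the index.
# A global is used because a command substitution would lose the updated index.
pick_backend() {
  set -- $backends          # split the list into positional parameters
  n=$#
  shift "$next"             # skip the servers before the current index
  CHOSEN=$1
  next=$(( (next + 1) % n ))
}

for i in 1 2 3 4; do
  pick_backend
  echo "connection $i -> $CHOSEN"
done
```

The fourth connection wraps around to the first real server again, which is the behavior the scheduler name refers to.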

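IV. Verification: requests reach the Pod via the Service, but does the traffic actually pass through the Service?

1. To simplify the test, first scale the Deployment down to one Pod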

kubectl scale --replicas 1 deployment web

$ [K8sNDev] kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-57cfbc8957-c2cp4 1/1 Running 0 19m 100.108.11.194 node2 <none> <none>

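2. Access service/web from the master node and capture the packets with tcpdump

- Check the routing table on node2

The routing table shows that 100.108.11.194 is directly connected through the device cali51bd9379fb6, so that is the interface to capture on.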

root@node2:~# ip route

default via 192.168.137.1 dev enp4s3 proto static

10.0.0.0/8 via 192.168.138.1 dev enp4s4 proto static

100.66.209.192/26 via 192.168.138.52 dev tunl0 proto bird onlink

100.74.135.0/26 via 192.168.138.54 dev tunl0 proto bird onlink

100.94.229.192/26 via 192.168.138.51 dev tunl0 proto bird onlink

blackhole 100.108.11.192/26 proto bird

100.108.11.194 dev cali51bd9379fb6 scope link

100.117.189.64/26 via 192.168.138.50 dev tunl0 proto bird onlink

100.127.10.64/26 via 192.168.138.55 dev tunl0 proto bird onlink

134.0.0.0/8 via 192.168.137.1 dev enp4s3 proto static

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

192.168.137.0/24 dev enp4s3 proto kernel scope link src 192.168.137.93

192.168.138.0/24 dev enp4s4 proto kernel scope link src 192.168.138.53
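Finding the capture interface amounts to looking up the host route whose first field is exactly the Pod IP. A minimal sketch of that lookup follows. The helper name `dev_for_ip` is hypothetical and the sample lines are taken from the routing table above. On a live node, `ip route get 100.108.11.194` should report the same device directly.

```shell
# Print the device of the host route (exact-IP entry) for a Pod IP.
# Hypothetical helper; on a node it could be fed with:
#   ip route | dev_for_ip 100.108.11.194
dev_for_ip() {
  awk -v ip="$1" '
    $1 == ip {
      # The device name is the field that follows the keyword "dev".
      for (i = 2; i < NF; i++)
        if ($i == "dev") { print $(i + 1); exit }
    }
  '
}

# Sample lines copied from the node2 routing table above.
sample='blackhole 100.108.11.192/26 proto bird
100.108.11.194 dev cali51bd9379fb6 scope link
100.117.189.64/26 via 192.168.138.50 dev tunl0 proto bird onlink'

printf '%s\n' "$sample" | dev_for_ip 100.108.11.194
```

For the sample input this prints `cali51bd9379fb6`, the interface used in the tcpdump step.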

- Access 10.104.197.218 from master0

uniops@master0:~$ curl -sL -w "Status code: %{http_code}\n" 10.104.197.218 -o /dev/null

Status code: 200

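- Inspect the captured packets on node2

The capture contains only the handshake and data packets exchanged between the 100.117 network and the 100.108 network. No packet carries the Service IP 10.104.197.218. In the routing table above, the 100.117.189.64/26 network is reached via the gateway 192.168.138.50, which carries container-to-container traffic within the cluster. That IP belongs to a physical NIC on master0.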

root@node2:~# tcpdump -i cali51bd9379fb6

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on cali51bd9379fb6, link-type EN10MB (Ethernet), capture size 262144 bytes

16:31:03.550900 IP 100.117.189.64.47562 > 100.108.11.194.http: Flags [S], seq 2890103564, win 43690, options [mss 65495,sackOK,TS val 4158018476 ecr 0,nop,wscale 7], length 0

16:31:03.550928 IP 100.108.11.194.http > 100.117.189.64.47562: Flags [S.], seq 1837307039, ack 2890103565, win 27760, options [mss 1400,sackOK,TS val 3255762932 ecr 4158018476,nop,wscale 7], length 0

16:31:03.551283 IP 100.117.189.64.47562 > 100.108.11.194.http: Flags [.], ack 1, win 342, options [nop,nop,TS val 4158018476 ecr 3255762932], length 0

16:31:03.551287 IP 100.117.189.64.47562 > 100.108.11.194.http: Flags [P.], seq 1:79, ack 1, win 342, options [nop,nop,TS val 4158018476 ecr 3255762932], length 78: HTTP: GET / HTTP/1.1

16:31:03.551320 IP 100.108.11.194.http > 100.117.189.64.47562: Flags [.], ack 79, win 217, options [nop,nop,TS val 3255762932 ecr 4158018476], length 0

16:31:03.551453 IP 100.108.11.194.http > 100.117.189.64.47562: Flags [P.], seq 1:239, ack 79, win 217, options [nop,nop,TS val 3255762933 ecr 4158018476], length 238: HTTP: HTTP/1.1 200 OK

16:31:03.551513 IP 100.108.11.194.http > 100.117.189.64.47562: Flags [P.], seq 239:851, ack 79, win 217, options [nop,nop,TS val 3255762933 ecr 4158018476], length 612: HTTP

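Conclusion: traffic to the Pod does not pass through the Service as an intermediate hop. The Service IP is a virtual address. IPVS on the client node (master0 here) rewrites the destination to a Pod IP (Masq forwarding, i.e. NAT), and the packets then travel directly to the Pod.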
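The same check can be done mechanically by counting how many captured packets have a given IP as source or destination. The sketch below assumes tcpdump's default one-line text format as seen in the capture above. The function name `count_ip` is hypothetical, and the sample lines are abbreviated from that capture (TCP options removed).

```shell
# Count tcpdump lines in which an IP appears as source or destination.
# Hypothetical helper; on a node it could be fed with:
#   tcpdump -i cali51bd9379fb6 -c 20 | count_ip 10.104.197.218
count_ip() {
  awk -v ip="$1" '
    BEGIN { n = 0 }
    $2 == "IP" {
      src = $3; dst = $5
      sub(/\.[^.]*$/, "", src)   # drop the trailing .port
      sub(/\.[^.]*$/, "", dst)   # drop the trailing .port and colon
      if (src == ip || dst == ip) n++
    }
    END { print n }
  '
}

# Sample lines abbreviated from the node2 capture above.
sample='16:31:03.550900 IP 100.117.189.64.47562 > 100.108.11.194.http: Flags [S], length 0
16:31:03.550928 IP 100.108.11.194.http > 100.117.189.64.47562: Flags [S.], length 0
16:31:03.551283 IP 100.117.189.64.47562 > 100.108.11.194.http: Flags [.], length 0'

printf '%s\n' "$sample" | count_ip 10.104.197.218   # Service IP
printf '%s\n' "$sample" | count_ip 100.108.11.194   # Pod IP
```

On the sample lines the Service IP is counted 0 times and the Pod IP 3 times, consistent with the destination having been rewritten before the packets reached node2.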