Author : Ali0th

Date : 2019-4-6

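Preface

I recently started working with big data and picked Hadoop as an entry point. Getting a single-node deployment running took me several days, and I hit quite a few pitfalls along the way. This post records the whole process, including every step and how I handled each error.

Environment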

CentOS release 6.4

openjdk version "1.8.0_201"

System services

Disable iptables

# Stop the firewall:

service iptables stop

# Remove the firewall from the services started at boot

chkconfig iptables off

Disable SELinux (takes effect after a reboot)

vim /etc/selinux/config

SELINUX=disabled
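
The same edit can be made without opening an editor. The sketch below is only an illustration: the helper name set_selinux_disabled is hypothetical, and it assumes GNU sed (for the -i option), which is what CentOS ships.

```shell
#!/bin/sh
# Hypothetical helper: force the SELINUX= line of an SELinux config file
# to "disabled". A backup copy is kept next to the file with a .bak suffix.
set_selinux_disabled() {
    conf="$1"
    cp "$conf" "$conf.bak" || return 1
    # Only the SELINUX= line matches; SELINUXTYPE= is left untouched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Usage (as root):
#   set_selinux_disabled /etc/selinux/config
#   setenforce 0    # switch to permissive mode for the current boot
```

The file change still needs a reboot to take full effect; setenforce 0 only relaxes enforcement until then.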

Hostname configuration

vim /etc/sysconfig/network

The default here is localhost.localdomain.

Passwordless SSH login

ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

To test whether it worked, run the command below. If no password is requested, the setup succeeded.

ssh localhost
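
Running the cat >> line above a second time appends the same key again. A minimal sketch of an idempotent variant follows; the helper name authorize_key is hypothetical, and the paths in the usage comment are the ones used above.

```shell
#!/bin/sh
# Hypothetical helper: append a public key to an authorized_keys file only
# if that exact line is not already present, then tighten the permissions.
authorize_key() {
    pub="$1"
    auth="$2"
    touch "$auth" || return 1
    # -x whole-line match, -F fixed string, -f read the pattern from the key file
    if ! grep -qxF -f "$pub" "$auth"; then
        cat "$pub" >> "$auth"
    fi
    chmod 0600 "$auth"
}

# Usage:
#   authorize_key ~/.ssh/id_dsa.pub ~/.ssh/authorized_keys
#   ssh -o BatchMode=yes localhost true && echo "passwordless login works"
```

BatchMode=yes makes ssh fail instead of prompting for a password, which is convenient when the check runs inside a script.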

Java installation

Install with yum

# List the JDK versions available from yum

yum search java

# Install the JDK

yum install java-1.8.0-openjdk-devel.x86_64

Check the environment variables

export

vi /etc/profile

List the JVM directory

ll /usr/lib/jvm/

The output is shown below; java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64 is the JAVA_HOME directory.

[root@localhost java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64]# ll /usr/lib/jvm/

total 4

lrwxrwxrwx. 1 root root 26 Apr 3 15:47 java -> /etc/alternatives/java_sdk

lrwxrwxrwx. 1 root root 32 Apr 3 15:47 java-1.8.0 -> /etc/alternatives/java_sdk_1.8.0

drwxr-xr-x. 7 root root 4096 Apr 3 15:47 java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64

lrwxrwxrwx. 1 root root 48 Apr 3 15:47 java-1.8.0-openjdk.x86_64 -> java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64

lrwxrwxrwx. 1 root root 34 Apr 3 15:47 java-openjdk -> /etc/alternatives/java_sdk_openjdk

lrwxrwxrwx. 1 root root 21 Apr 3 15:47 jre -> /etc/alternatives/jre

lrwxrwxrwx. 1 root root 27 Apr 3 15:47 jre-1.8.0 -> /etc/alternatives/jre_1.8.0

lrwxrwxrwx. 1 root root 52 Apr 3 15:47 jre-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64 -> java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64/jre

lrwxrwxrwx. 1 root root 52 Apr 3 15:47 jre-1.8.0-openjdk.x86_64 -> java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64/jre

lrwxrwxrwx. 1 root root 29 Apr 3 15:47 jre-openjdk -> /etc/alternatives/jre_openjdk

Configure the global variables:

vim /etc/profile

Add the following environment settings:

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64

export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin

Make the global variables take effect immediately

source /etc/profile
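
Instead of copying the versioned directory name by hand, JAVA_HOME can be derived from wherever javac resolves to. This is a sketch under assumptions: the helper name jdk_home_of is hypothetical, and it relies on readlink -f from GNU coreutils.

```shell
#!/bin/sh
# Hypothetical helper: resolve a javac path through its symlinks and strip
# the trailing /bin/javac to obtain the JDK root directory.
jdk_home_of() {
    real=$(readlink -f "$1") || return 1
    dirname "$(dirname "$real")"
}

# Usage, once the JDK is installed:
#   export JAVA_HOME=$(jdk_home_of "$(command -v javac)")
```

On the system described here this should print the same java-1.8.0-openjdk-... directory listed above, since /usr/bin/javac is a chain of alternatives symlinks into it.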

Hadoop download and environment setup

Create the account

groupadd hadoop

useradd hadoop -g hadoop

ll -d /home/hadoop

grep hadoop /etc/passwd /etc/shadow /etc/group

passwd hadoop # hadoop123

As root, run visudo and add hadoop to sudoers by inserting the following line below root ALL=(ALL) ALL.

hadoop ALL=(ALL) ALL

Download Hadoop and extract it.

wget http://apache.claz.org/hadoop/common/hadoop-3.1.2/hadoop-3.1.2.tar.gz

tar -xzf hadoop-3.1.2.tar.gz

sudo mv hadoop-3.1.2 /usr/local/hadoop
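
Because the tarball comes from a mirror, it is worth comparing its digest with the SHA-512 value published on the official Apache download page before extracting it. The helper below is a hypothetical sketch, and the expected digest is something you must supply yourself; none is given here.

```shell
#!/bin/sh
# Hypothetical helper: compare the SHA-512 digest of a file with an expected
# hex string. Upper-case hex digits in the expected value are accepted.
verify_sha512() {
    file="$1"
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    actual=$(sha512sum "$file" | cut -d' ' -f1)
    [ "$actual" = "$expected" ]
}

# Usage (EXPECTED_DIGEST is a placeholder for the published value):
#   verify_sha512 hadoop-3.1.2.tar.gz "$EXPECTED_DIGEST" || echo "checksum mismatch"
```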

Add Hadoop to the environment variables.

vim /etc/profile

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile

Create the data storage directories:

- NameNode data directory: /usr/local/data/hadoop/name

- SecondaryNameNode data directory: /usr/local/data/hadoop/secondary

- DataNode data directory: /usr/local/data/hadoop/data

- Temporary data directory: /usr/local/data/hadoop/tmp

mkdir -p /usr/local/data/hadoop/name

mkdir -p /usr/local/data/hadoop/secondary

mkdir -p /usr/local/data/hadoop/data

mkdir -p /usr/local/data/hadoop/tmp
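
The four mkdir calls can be folded into a loop, and the resulting tree should end up owned by the hadoop user, otherwise the permission errors described later show up. A minimal sketch, with make_hadoop_dirs as a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: create the four storage directories under a base path.
make_hadoop_dirs() {
    base="$1"
    for sub in name secondary data tmp; do
        mkdir -p "$base/$sub" || return 1
    done
}

# Usage (as root), then hand the tree over to the hadoop user:
#   make_hadoop_dirs /usr/local/data/hadoop
#   chown -R hadoop:hadoop /usr/local/data/hadoop
```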

Hadoop configuration and startup

Configure hadoop-env.sh, hdfs-site.xml, core-site.xml, mapred-site.xml, and yarn-site.xml.

Go to the configuration directory:

cd /usr/local/hadoop/etc/hadoop/

hadoop-env.sh

Add JAVA_HOME

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-2.el6_10.x86_64

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost.localdomain:9000</value>

<description>Address for internal HDFS communication</description>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/data/hadoop/tmp</value>

<description>Hadoop data storage</description>

</property>

</configuration>
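
A quick way to double-check what was written into a site file is to pull a property value back out of it. The sketch below is a rough awk-based reader, not a real XML parser: get_prop is a hypothetical helper and it assumes each name and value tag sits on a single line, as in the files shown here.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> that follows a given <name> in a
# Hadoop *-site.xml file. Assumes one tag per line; not a general XML parser.
get_prop() {
    awk -v key="$2" '
        /<name>/  { n = $0; gsub(/.*<name>|<\/name>.*/, "", n) }
        /<value>/ { v = $0; gsub(/.*<value>|<\/value>.*/, "", v)
                    if (n == key) print v }
    ' "$1"
}

# Usage:
#   get_prop /usr/local/hadoop/etc/hadoop/core-site.xml fs.defaultFS
# With Hadoop on the PATH, the effective value can also be queried with:
#   hdfs getconf -confKey fs.defaultFS
```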

hdfs-site.xml

<!--

# replication: the number of replicas

# Set to 1 because this is a pseudo-distributed setup

# In newer Hadoop versions the default block size is 128 MB

-->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

mapred-site.xml

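# YARN cluster (Hadoop 2.x ships only the template, so rename it first; Hadoop 3.x already includes mapred-site.xml, so the mv can be skipped there)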

mv mapred-site.xml.template mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

yarn-site.xml

yarn.resourcemanager.hostname: the master (ResourceManager) of the YARN cluster

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>localhost.localdomain</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

Starting DFS

Format the Hadoop file system

cd /usr/local/hadoop

./bin/hdfs namenode -format

Start DFS

./sbin/start-dfs.sh

Use jps to check whether the services have started

[hadoop@localhost hadoop]$ jps

6466 NameNode

6932 Jps

6790 SecondaryNameNode

6584 DataNode
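
Reading the jps listing by eye works, but a script can flag a missing daemon directly. A minimal sketch, assuming the two-column "pid name" output shown above; missing_daemons is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read jps output on stdin and print every expected
# daemon name that does not appear in the second column.
# The exit status is non-zero if at least one daemon is missing.
missing_daemons() {
    out=$(cat)
    rc=0
    for name in "$@"; do
        if ! printf '%s\n' "$out" |
            awk -v d="$name" '$2 == d { found = 1 } END { exit !found }'; then
            echo "$name"
            rc=1
        fi
    done
    return $rc
}

# Usage after start-dfs.sh:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
```

No output means all three HDFS daemons are up; any name printed is a daemon whose log under /usr/local/hadoop/logs is worth checking.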

Start everything

./sbin/start-all.sh

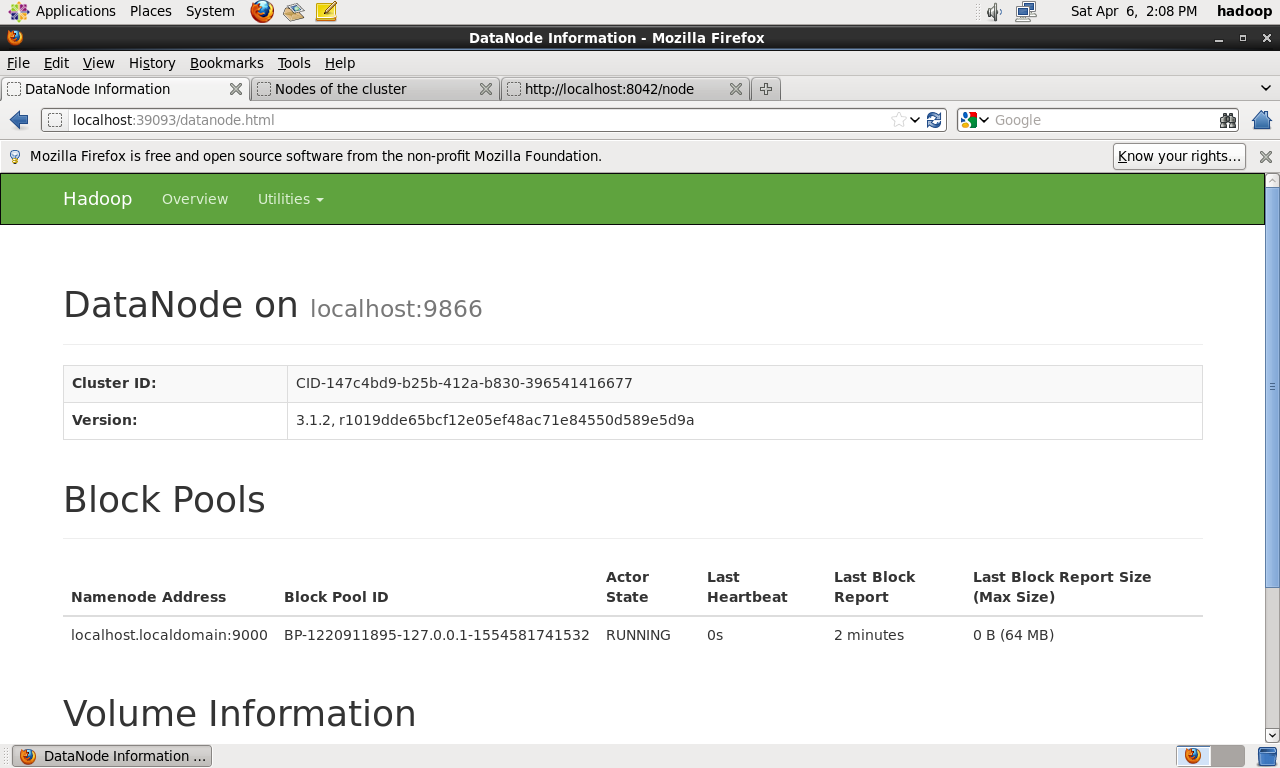

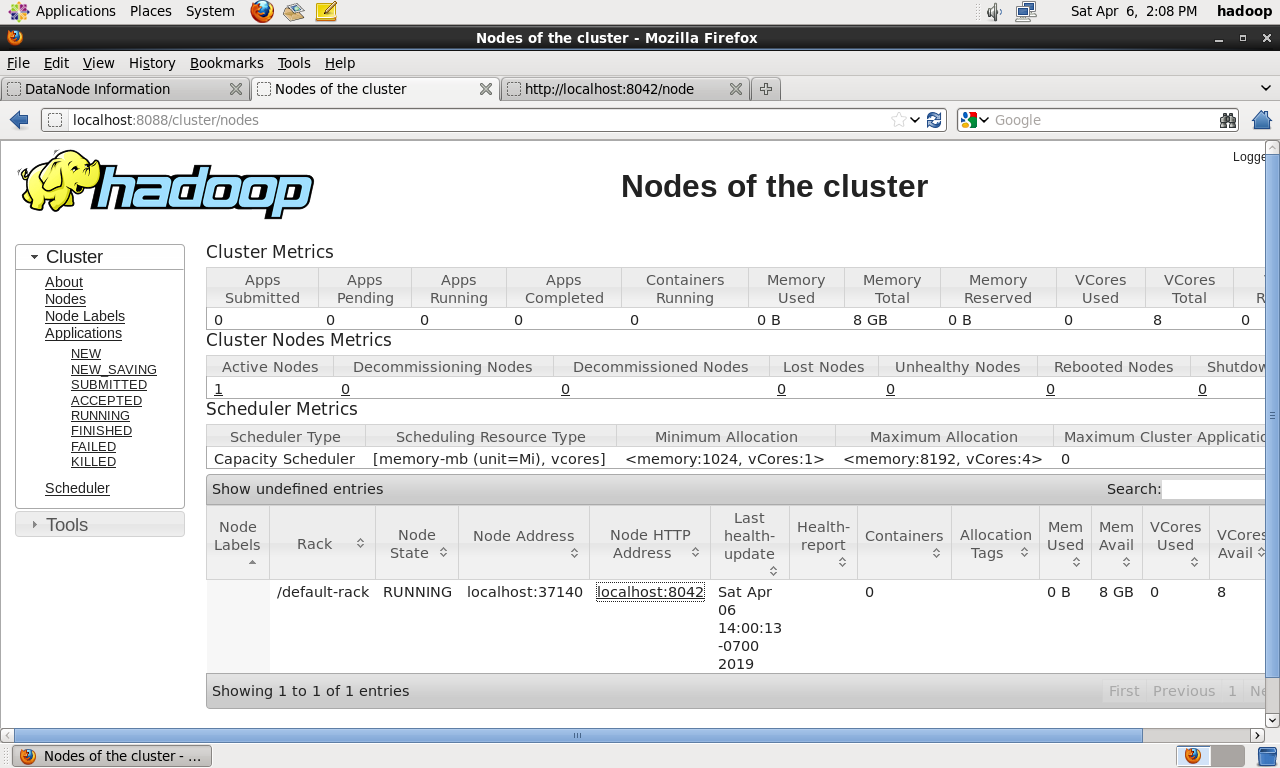

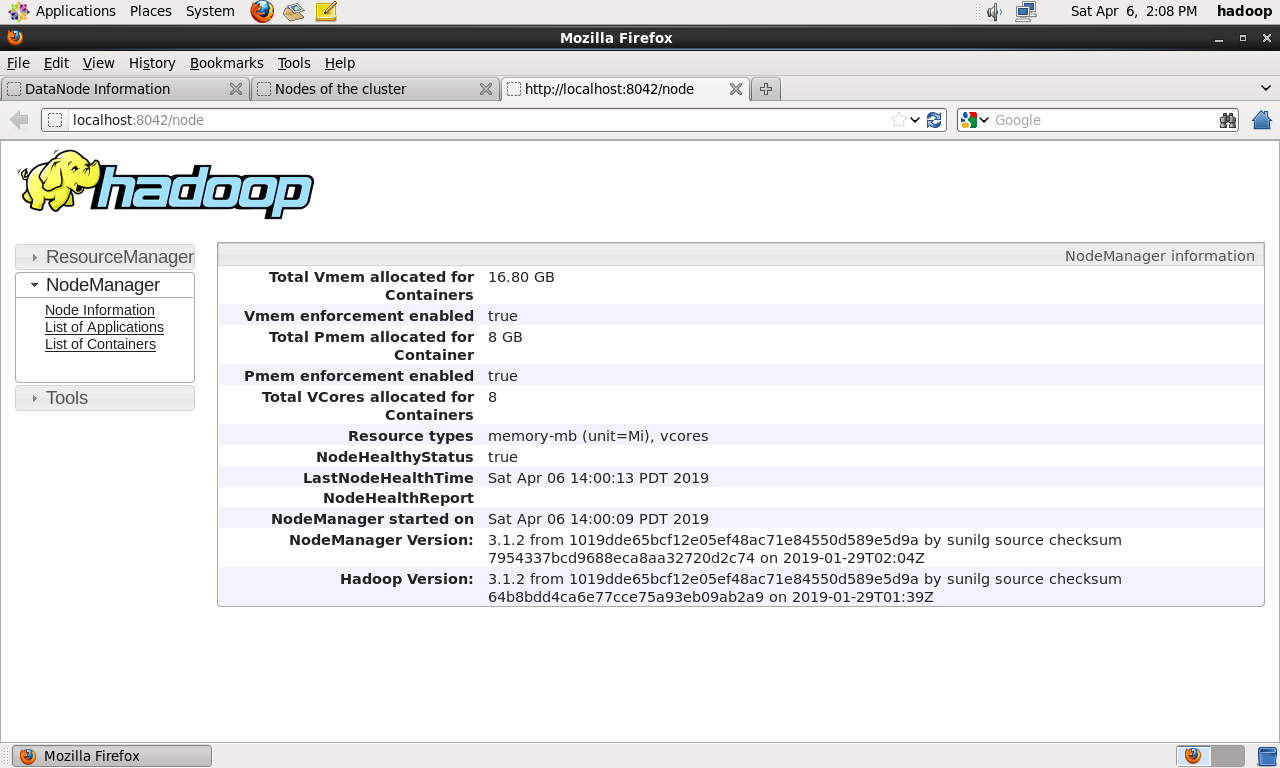

结果截图如下:

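Errors

Problem:

localhost: Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password).

Solution:

If the user that starts Hadoop cannot connect to the node hosts over SSH without a password, startup prompts for each host's password, and authentication fails even when the password is entered correctly. Set up passwordless SSH login for that user, as described in the SSH section above.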

Problem:

localhost: ERROR: Unable to write in /usr/local/hadoop/logs. Aborting.

Solution:

sudo chmod 777 -R /usr/local/hadoop/

Problem:

localhost: /usr/local/hadoop/bin/../libexec/hadoop-functions.sh: line 1842: /tmp/hadoop-hadoop-namenode.pid: Permission denied

localhost: ERROR: Cannot write namenode pid /tmp/hadoop-hadoop-namenode.pid.

Solution:

Edit hadoop-env.sh

export HADOOP_PID_DIR=/usr/local/hadoop/tmp/pid

Problem:

[hadoop@localhost hadoop]$ ./sbin/start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [localhost]

2019-04-06 07:26:22,110 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Solution:

stackoverflow.com/questions/1…

cd /usr/local/hadoop/lib/native

ldd libhadoop.so.1.0.0

./libhadoop.so.1.0.0: /lib64/libc.so.6: version `GLIBC_2.14' not found (required by ./libhadoop.so.1.0.0)

linux-vdso.so.1 => (0x00007fff901ff000)

libdl.so.2 => /lib64/libdl.so.2 (0x00007f8ceda5d000)

libpthread.so.0 => /lib64/libpthread.so.0 (0x00007f8ced83f000)

libc.so.6 => /lib64/libc.so.6 (0x00007f8ced4ac000)

/lib64/ld-linux-x86-64.so.2 (0x00000031c1e00000)
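
The ldd output says libhadoop needs GLIBC_2.14, so the question is simply whether the installed glibc is at least that version. The comparison can be sketched as follows; version_ge is a hypothetical helper and it assumes a sort with the -V (version sort) option, as provided by GNU coreutils.

```shell
#!/bin/sh
# Hypothetical helper: succeed when version $1 is greater than or equal to
# version $2, using the version sort of GNU sort.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Usage: compare the installed glibc with the 2.14 that libhadoop asks for.
#   installed=$(getconf GNU_LIBC_VERSION | awk '{ print $2 }')
#   version_ge "$installed" 2.14 || echo "glibc $installed is older than 2.14"
#   hadoop checknative -a    # lists which native libraries Hadoop can load
```

If the check fails, the warning is harmless for a test setup (Hadoop falls back to the built-in Java classes), so upgrading glibc as done below is optional.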

# download

wget http://ftp.gnu.org/gnu/glibc/glibc-2.14.tar.bz2

wget http://ftp.gnu.org/gnu/glibc/glibc-linuxthreads-2.5.tar.bz2

# Extract

tar -xjvf glibc-2.14.tar.bz2

cd glibc-2.14

tar -xjvf ../glibc-linuxthreads-2.5.tar.bz2

# Add the optimization flags; otherwise the build fails with '#error "glibc cannot be compiled without optimization"'

cd ../

export CFLAGS="-g -O2"

glibc-2.14/configure --prefix=/usr --disable-profile --enable-add-ons --with-headers=/usr/include --with-binutils=/usr/bin --disable-sanity-checks

make

sudo make install

Problem:

2019-04-06 13:08:58,376 INFO util.ExitUtil: Exiting with status 1: java.io.IOException: Cannot remove current directory: /usr/local/data/hadoop/tmp/dfs/name/current

Solution:

This is a permissions problem.

sudo chown -R hadoop:hadoop /usr/local/data/hadoop/tmp

sudo chmod -R a+w /usr/local/data/hadoop/tmp
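
Several of the errors above come down to the hadoop user not being able to write somewhere. A small pre-flight check, run as that user before starting the daemons, can catch them in one go. This is only a sketch: unwritable_dirs is a hypothetical helper, and the directories in the usage line are the ones used in this post.

```shell
#!/bin/sh
# Hypothetical helper: print each given path that is not an existing
# directory writable by the current user.
# The exit status is non-zero if any path is printed.
unwritable_dirs() {
    rc=0
    for dir in "$@"; do
        if [ ! -d "$dir" ] || [ ! -w "$dir" ]; then
            echo "$dir"
            rc=1
        fi
    done
    return $rc
}

# Usage as the hadoop user, before running start-dfs.sh:
#   unwritable_dirs /usr/local/hadoop /usr/local/data/hadoop/tmp
```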